Is Nvidia's era of dominance over? ChatGPT sets off a chip war between Google and Microsoft, and Amazon also joins the game

After ChatGPT took off, the AI war between the two giants, Google and Microsoft, spread to a new front: server chips.

Today, AI and cloud computing have become battlegrounds, and chips have also become the key to reducing costs and winning business customers.

Amazon, Microsoft, and Google were originally known for their software. Now, however, they have spent billions of dollars developing and producing chips.

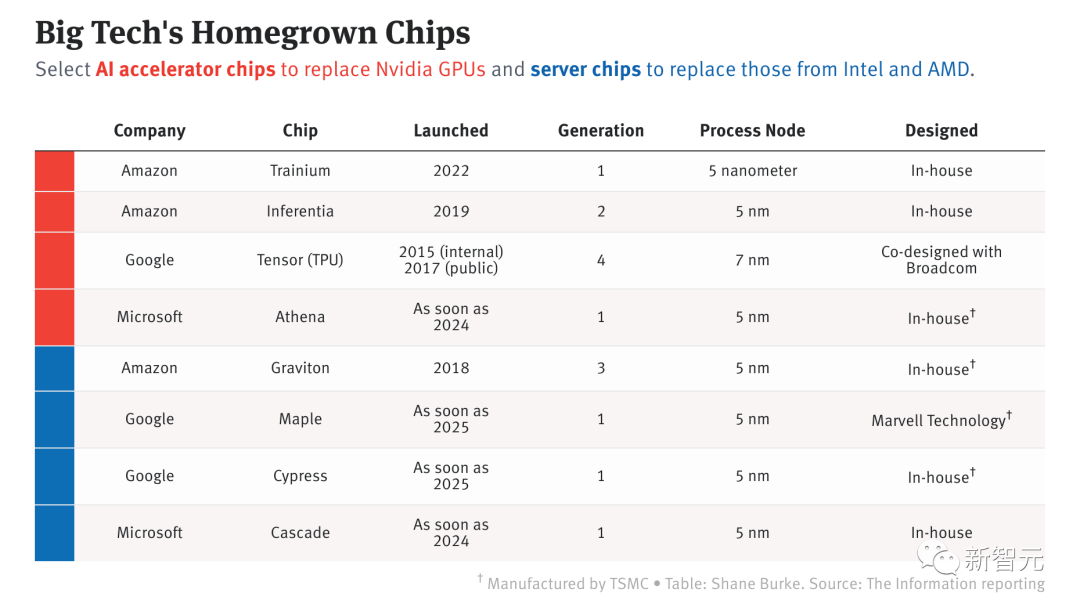

AI chips developed by the major technology giants

ChatGPT takes off, and the tech giants start a chip race

According to reports from The Information and other foreign media outlets, these three companies have launched, or plan to release, eight server and AI chips, used for internal product development, for renting out cloud servers, or for both.

“If you can make silicon that’s optimized for AI, you’ve got a huge win ahead of you,” said Glenn O’Donnell, a director at research firm Forrester.

Will these huge efforts be rewarded?

The answer is, not necessarily.

Intel, AMD, and Nvidia benefit from economies of scale, but the big tech companies do not enjoy the same advantage.

They also face thorny challenges, such as hiring chip designers and convincing developers to build applications using their custom chips.

However, major manufacturers have made impressive progress in this field.

According to published performance data, Amazon's Graviton server chips, as well as the AI-specific chips released by Amazon and Google, already perform comparably to chips from the traditional chipmakers.

There are two main types of chips developed by Amazon, Microsoft and Google for their data centers: standard computing chips and specialized chips used to train and run machine learning models. It is the latter that powers large language models like ChatGPT.

Apple previously succeeded in developing its own chips for the iPhone, iPad, and Mac, improving how they handle some AI tasks. The three companies may have drawn inspiration from Apple.

Of the three, Amazon is the only cloud provider that offers both types of chips in its servers. Annapurna Labs, the Israeli chip designer Amazon acquired in 2015, laid the foundation for these efforts.

Google launched a chip for AI workloads in 2015 and is developing a standard server chip to improve server performance in Google Cloud.

Microsoft, by contrast, started its chip research and development later, in 2019. Recently, it has accelerated the timeline for launching AI chips designed specifically for LLMs.

ChatGPT's popularity has ignited excitement about AI among users around the world, further accelerating the strategic shift at all three companies.

ChatGPT runs on Microsoft's Azure cloud and uses tens of thousands of Nvidia A100s. ChatGPT and the other OpenAI software integrated into Bing and various other programs demand so much computing power that Microsoft has had to ration server hardware among its internal AI teams.

At Amazon, Chief Financial Officer Brian Olsavsky told investors on an earnings call last week that Amazon plans to shift spending from its retail business to AWS, in part by investing in the infrastructure required to support ChatGPT.

At Google, the engineering team that builds the Tensor Processing Unit (TPU) has been moved into Google Cloud. The cloud organization can now reportedly set the roadmap for TPUs and the software that runs on them, in the hope of getting cloud customers to rent more TPU-powered servers.

Google: TPU v4, specially tuned for AI

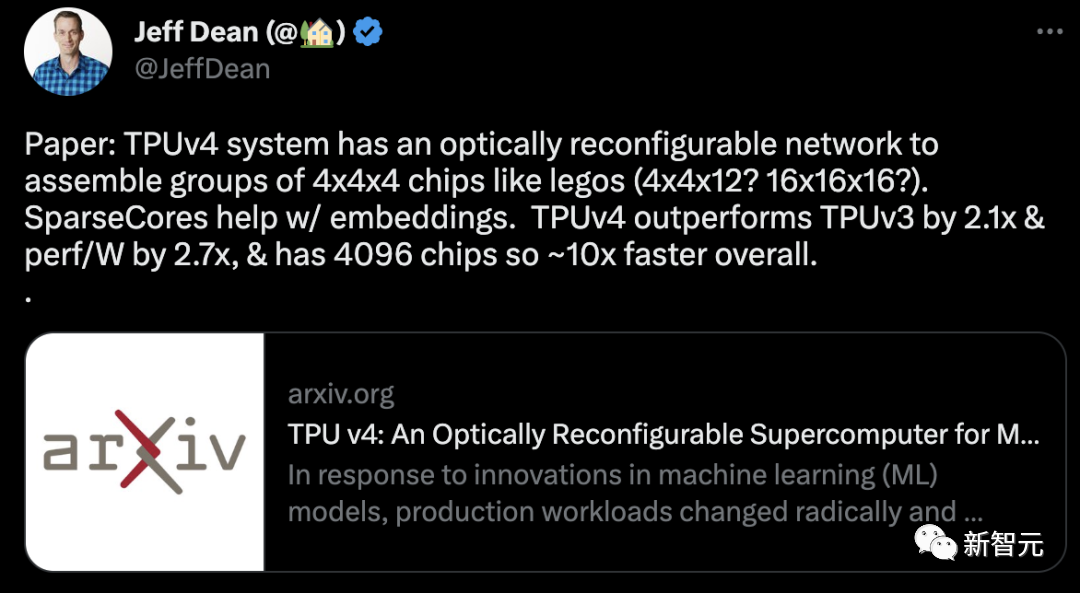

As early as 2020, Google deployed TPU v4, the most powerful AI chip of its time, in its own data centers.

However, it was not until April 4 this year that Google announced the technical details of this AI supercomputer for the first time.

Compared with TPU v3, TPU v4 delivers 2.1 times the performance, and with 4,096 chips integrated, the supercomputer's overall performance improves 10-fold.

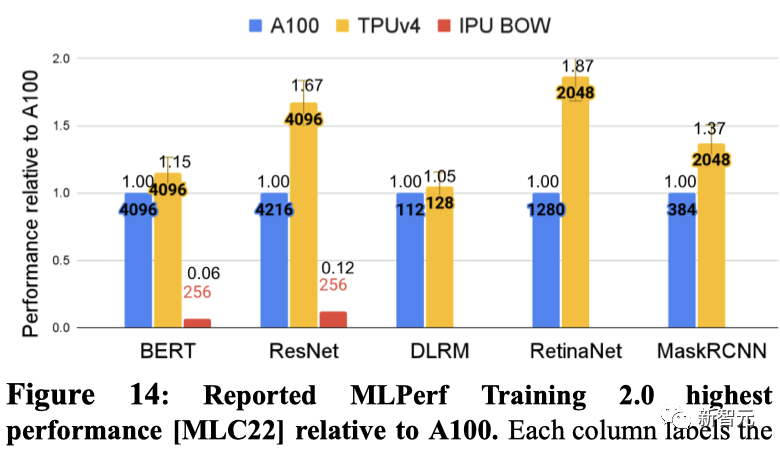

Google also claims that its chip is faster and more energy-efficient than the Nvidia A100: for systems of comparable size, TPU v4 delivers 1.7 times the performance of the A100 and 1.9 times the energy efficiency.

For similarly sized systems, TPU v4 is 1.15 times faster than the A100 on BERT and about 4.3 times faster than Graphcore's IPU. On ResNet, it is 1.67 times and about 4.5 times faster, respectively.

Separately, Google has hinted that it is working on a new TPU to compete with the Nvidia H100. Google researcher Jouppi told Reuters in an interview that Google has "a healthy pipeline of future chips."

Microsoft: Secret weapon Athena

Microsoft, for its part, is eager to compete in this chip race.

It was previously reported that a 300-person team Microsoft had quietly assembled began developing a custom chip codenamed "Athena" in 2019.

Under the original plan, Athena would be built on TSMC's 5nm process and was expected to cut the cost of each chip by one third.

If it can be deployed at scale next year, both Microsoft's internal teams and OpenAI could use Athena for model training and inference alike.

This would greatly ease the shortage of dedicated AI hardware.

Bloomberg reported last week that Microsoft's chip division was working with AMD on the Athena chip, news that sent AMD's stock up 6.5% on Thursday.

However, a person familiar with the matter said AMD is not involved in Athena. Instead, AMD is developing its own GPU to compete with Nvidia and has been discussing that chip's design with Microsoft, because Microsoft expects to buy the GPU.

Amazon: Already in the lead

In the chip race with Microsoft and Google, Amazon appears to have taken the lead.

Over the past decade, Amazon has maintained a competitive advantage over Microsoft and Google in cloud computing services by providing more advanced technology and lower prices.

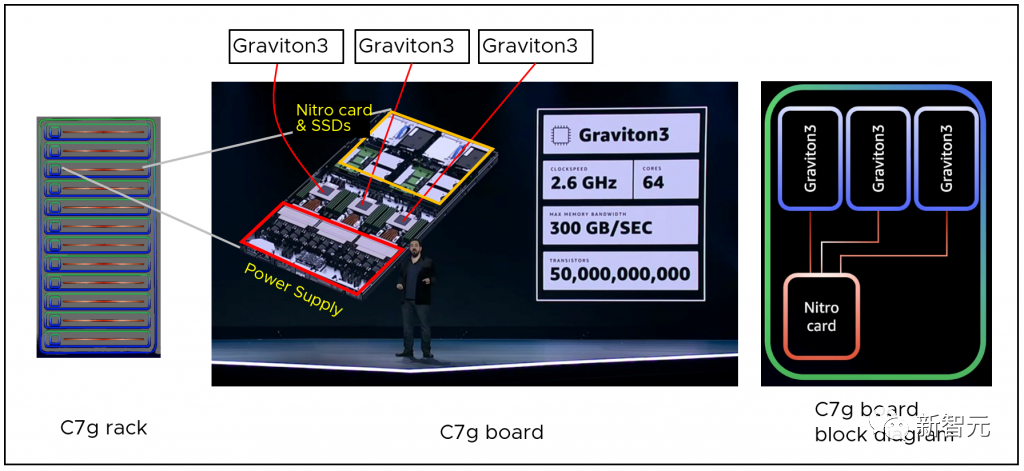

Over the next decade, Amazon is expected to keep that edge through Graviton, its in-house server chip.

AWS Graviton3, the latest generation, delivers up to 25% higher compute performance and up to 2 times the floating-point performance of its predecessor. It also supports DDR5 memory, which offers 50% more bandwidth than DDR4.

For machine learning workloads, AWS Graviton3 delivers up to 3x better performance than the previous generation and supports bfloat16.
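bfloat16 ("brain floating point") keeps float32's 8-bit exponent but only 7 explicit mantissa bits, so it covers roughly the same numeric range as float32 at lower precision while halving memory and bandwidth per value, which is why ML hardware favors it. The Python sketch below is purely illustrative and not tied to Graviton3 or any AWS API. It assumes bfloat16 is taken as the upper 16 bits of a float32 bit pattern with round-to-nearest-even; the function names are hypothetical and NaN handling is omitted.

```python
import struct


def float32_bits(x: float) -> int:
    """Return the IEEE 754 single-precision (float32) bit pattern of x."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def to_bfloat16_bits(x: float) -> int:
    """Convert x to a 16-bit bfloat16 pattern (hypothetical helper).

    bfloat16 = upper 16 bits of float32: 1 sign, 8 exponent, 7 mantissa bits.
    The discarded low 16 bits are rounded to nearest, ties to even.
    NaN inputs are not handled in this sketch.
    """
    bits = float32_bits(x)
    # Adding 0x7FFF rounds up when the low half exceeds 0x8000; adding one
    # more when the kept LSB is odd turns exact ties into round-to-even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def from_bfloat16_bits(b: int) -> float:
    """Expand a bfloat16 pattern back to a float by zero-filling the low 16 bits."""
    return struct.unpack(">f", struct.pack(">I", b << 16))[0]


if __name__ == "__main__":
    # Precision drops to about 2-3 significant decimal digits...
    print(from_bfloat16_bits(to_bfloat16_bits(3.14159265)))  # 3.140625
    # ...but very large magnitudes stay representable, unlike IEEE float16.
    print(from_bfloat16_bits(to_bfloat16_bits(1e30)))
```

The design choice behind the format is visible here: because bfloat16 is just a truncated float32, converting between the two is a cheap bit operation rather than a full re-encoding of the exponent.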

Cloud services based on Graviton3 chips are so popular in some regions that demand has outstripped supply.

Amazon's other advantage is that it is currently the only cloud vendor offering both standard computing chips (Graviton) and AI-specific chips (Inferentia and Trainium) in its servers.

Amazon launched its own AI inference chip, Inferentia, as early as 2019.

It allows customers to run large-scale machine learning inference applications such as image recognition, speech recognition, natural language processing, personalization and fraud detection in the cloud at low cost.

The latest Inferentia2 delivers 3 times the compute performance, 4 times the total accelerator memory, and 4 times the throughput of its predecessor, with latency cut to one tenth.

Following the first-generation Inferentia, Amazon released Trainium, a custom chip designed mainly for AI training.

It is optimized for deep learning training workloads, including image classification, semantic search, translation, speech recognition, natural language processing, and recommendation engines.

In some cases, custom chips can cut costs by an order of magnitude and reduce energy consumption to one tenth, while giving customers better service at lower latency.

It’s not that easy to shake NVIDIA’s monopoly

So far, however, most AI workloads still run on GPUs, and Nvidia makes most of those chips.

According to earlier reports, Nvidia holds 80% of the discrete GPU market and 90% of the high-end GPU market.

In 2020, 80.6% of the cloud computing and data centers running AI worldwide were powered by Nvidia GPUs. In 2021, Nvidia stated that about 70% of the world's top 500 supercomputers ran on its chips.

And now, even the Microsoft data center running ChatGPT uses tens of thousands of NVIDIA A100 GPUs.

For a long time, the computing power behind everything from ChatGPT to models such as Bard and Stable Diffusion has come from the Nvidia A100, a chip that costs about US$10,000 apiece.

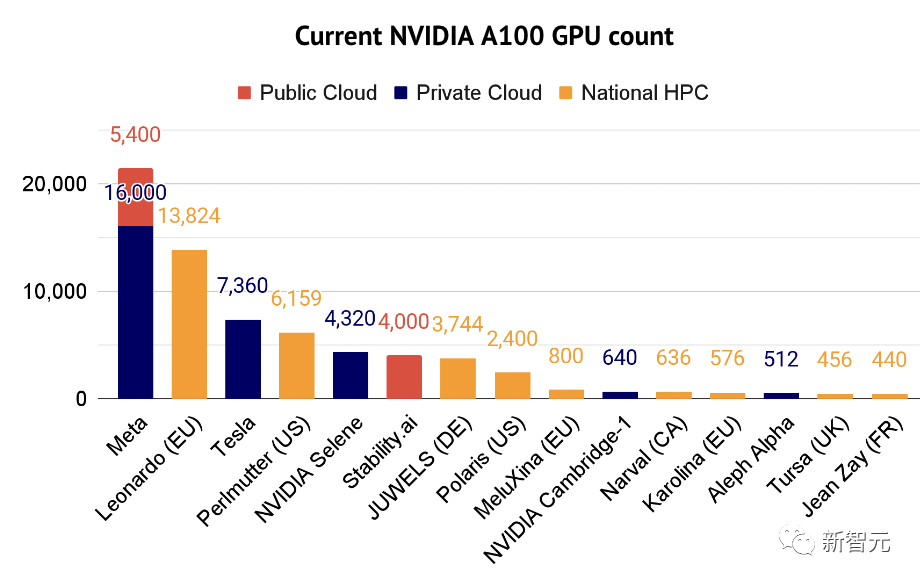

The A100 has also become the "workhorse" of AI professionals, and the State of AI Report 2022 lists some of the companies that use A100 supercomputers.

Clearly, Nvidia's chips have come to dominate the world's AI computing power.

According to industry practitioners, the application-specific integrated circuits (ASICs) that Amazon, Google, and Microsoft have been developing can perform machine learning tasks faster, and with lower power consumption, than general-purpose chips.

O'Donnell compared GPUs and ASICs this way: "For everyday driving, you can use a Prius, but if you need four-wheel drive in the mountains, a Jeep Wrangler would be more suitable."

But despite all their efforts, Amazon, Google, and Microsoft all face the same challenge: how do they convince developers to use these AI chips?

Nvidia's GPUs dominate today, and developers are already familiar with CUDA, its proprietary programming platform for building GPU-accelerated applications.

If developers switch to custom chips from Amazon, Google, or Microsoft, they will need to learn a new software stack. Will they be willing?
