Training large models with an eye on 'energy'! Tao Dacheng leads the team: one article covers all the 'efficient training' solutions, so stop saying that hardware is the only bottleneck

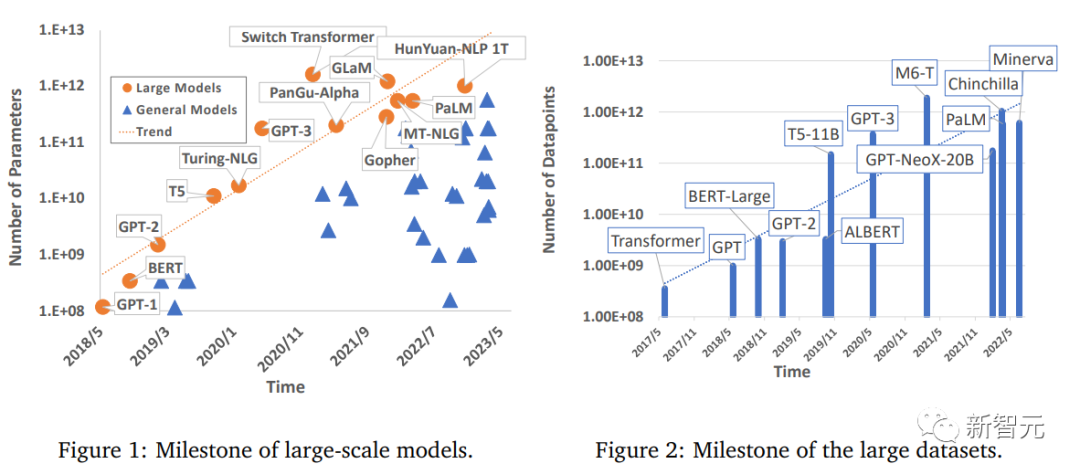

The field of deep learning has made significant progress, especially in computer vision, natural language processing, and speech. Large-scale models trained on big data hold great promise for practical applications, for improving industrial productivity, and for promoting social development.

However, large models also require large amounts of computing power to train. As the demand for computing power keeps growing, many studies have explored efficient training methods, but there has been no comprehensive review of acceleration techniques for deep learning models.

Recently, researchers from the University of Sydney, the University of Science and Technology of China, and other institutions published a review that comprehensively summarizes efficient training techniques for large-scale deep learning models and presents the common mechanisms within each component of the training process.

Paper link: https://arxiv.org/pdf/2304.03589.pdf

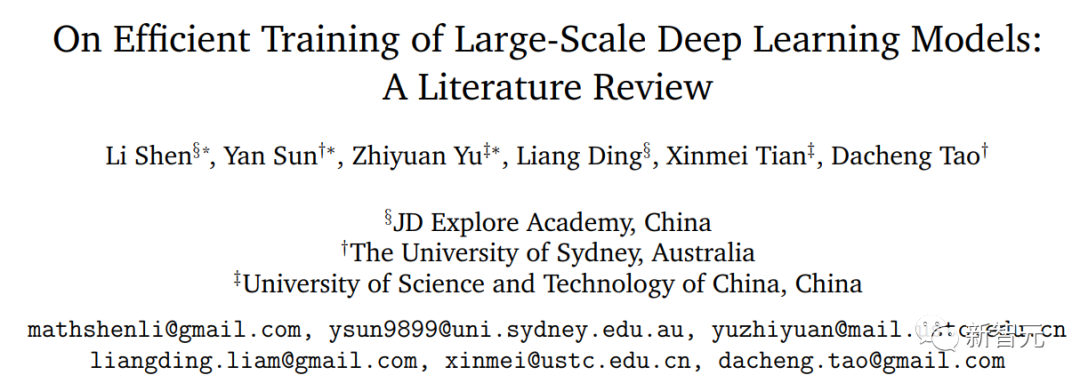

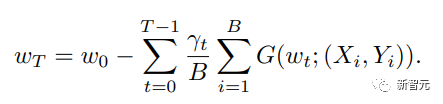

The researchers considered the most basic weight update formula and divided its basic components into five main aspects:

1, Data-centric (data-centric), including data set regularization, data sampling and data-centric course learning technology, can significantly Reduce the computational complexity of data samples;

2, Model-centric (model-centric), including acceleration of basic modules, compression training, model initialization and Model-centered course learning technology focuses on accelerating training by reducing parameter calculations;

3, Optimization-centric , including Selection of learning rate, use of large batch size, design of efficient objective function, model weighted average technology, etc.; focus on training strategies to improve the versatility of large-scale models;

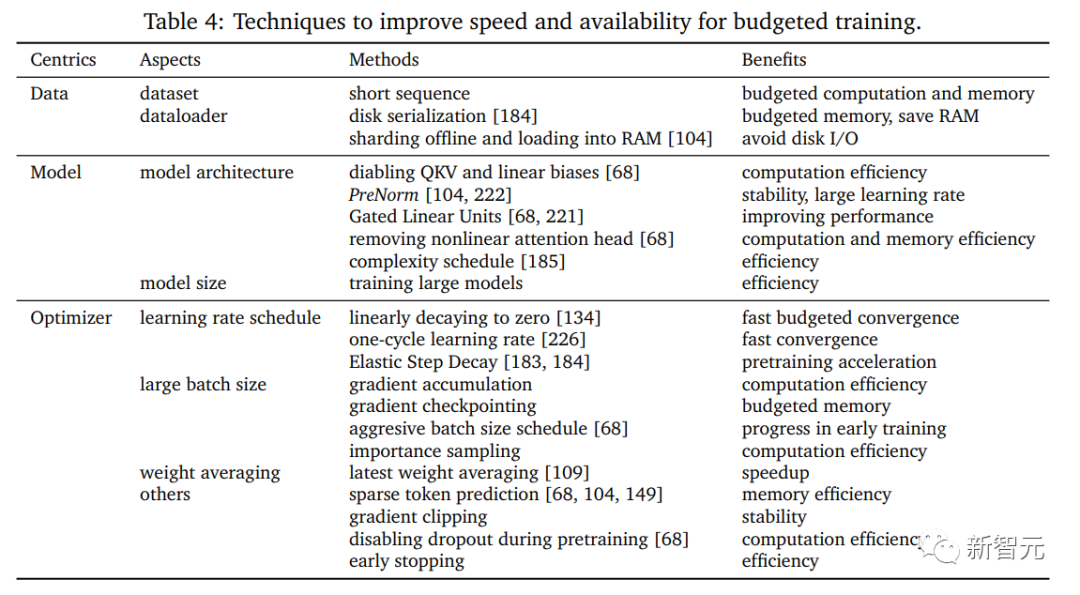

4,Budgeted training, including some acceleration technologies used when hardware is limited;

5, system-centric (system- centric), including some efficient distributed frameworks and open source libraries, providing sufficient hardware support for the implementation of accelerated algorithms.
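The article does not reproduce the weight update formula in its text, so the following is a generic mini-batch gradient step, written as an assumption rather than in the review's own notation. Here $w_t$ denotes the weights, $\eta_t$ the learning rate, $B_t$ the sampled mini-batch, $f$ the model, and $\ell$ the loss; all symbols are illustrative.

```latex
w_{t+1} = w_t - \eta_t \cdot \frac{1}{|B_t|} \sum_{(x, y) \in B_t} \nabla_w \, \ell\big(f(x; w_t),\, y\big)
```

Read this way, the data-centric aspect concerns the samples $(x, y)$ and the batch $B_t$, the model-centric aspect concerns $f$ and $w$, and the optimization-centric aspect concerns $\eta_t$, $\ell$, and the update rule itself. Budgeted and system-centric training then concern how many such steps can be afforded and how each one is executed on hardware.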

Efficient data-centric training

Recently, large-scale models have made great progress, while their requirements on datasets have increased dramatically. Huge numbers of data samples are used to drive training and achieve excellent performance, so data-centric research is critical to practical acceleration.

The basic function of data processing is to efficiently increase the diversity of data samples without increasing labeling cost. Because data labeling is often too expensive for some development institutions to afford, this also highlights the importance of data-centric research. At the same time, data processing also focuses on improving the efficiency of loading data samples in parallel.

The researchers refer to all of these efficient data processing techniques as the "data-centric" approach, which can significantly improve the performance of training large-scale models.

The article reviews these techniques from the following aspects:

Data Regularization

Data regularization is a preprocessing technique that enhances the diversity of the original data samples through a series of data transformations. It can improve the equivalent representation of training samples in the feature space, and it requires no additional labeling information.

Efficient data regularization methods are widely used in the training process and can significantly improve the generalization performance of large-scale models.
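As an illustration of label-preserving transformations, here is a minimal sketch of two common augmentations, a random horizontal flip and a random crop after zero padding. The function name `augment`, the `pad` parameter, and the toy 3x3 image are assumptions made for this example and do not come from the review.

```python
import random

def augment(image, rng, pad=1):
    """Random horizontal flip followed by a random crop back to the original size.

    `image` is a list of rows (each a list of pixel values). The label is untouched.
    """
    height, width = len(image), len(image[0])
    # Flip left-right with probability 0.5.
    if rng.random() < 0.5:
        image = [row[::-1] for row in image]
    # Zero-pad the border, then crop a random window of the original size.
    padded_width = width + 2 * pad
    padded = [[0] * padded_width for _ in range(pad)]
    padded += [[0] * pad + list(row) + [0] * pad for row in image]
    padded += [[0] * padded_width for _ in range(pad)]
    top = rng.randint(0, 2 * pad)
    left = rng.randint(0, 2 * pad)
    return [row[left:left + width] for row in padded[top:top + height]]

rng = random.Random(0)
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
# Four different "views" of the same sample, all sharing the original label.
views = [augment(image, rng) for _ in range(4)]
```

Each call yields a new view of the same shape, so one labeled sample can feed many training steps without any extra annotation.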

Data Sampling

Data sampling is also an effective method: it selects a subset from a large batch of samples to update the gradient. It keeps the advantages of small-batch training and reduces the impact of unimportant or bad samples in the current batch.

Usually the sampled data is the more important data, and the resulting performance is comparable to that of a model trained on the full batch. The sampling probability at each iteration needs to be adjusted gradually as training proceeds to ensure that the sampling is unbiased.
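One way to picture this is loss-proportional importance sampling with a correction weight. The sketch below is an assumption chosen for illustration, not the specific method of the review: `sampling_probs`, `sample_batch`, and the `smoothing` floor are hypothetical names, and the losses are assumed to be positive and refreshed as training proceeds.

```python
import random

def sampling_probs(losses, smoothing=0.1):
    """Probability of picking each sample, proportional to its loss, mixed with
    a uniform floor so that every sample keeps a non-zero chance."""
    n = len(losses)
    total = sum(losses)  # assumed > 0
    return [(1 - smoothing) * (l / total) + smoothing / n for l in losses]

def sample_batch(losses, k, rng, smoothing=0.1):
    """Draw k indices with replacement and return (indices, weights).

    The weight 1 / (n * p_i) undoes the sampling bias, so the weighted mean of
    per-sample gradients is an unbiased estimate of the full-batch mean.
    """
    n = len(losses)
    probs = sampling_probs(losses, smoothing)
    indices = rng.choices(range(n), weights=probs, k=k)
    weights = [1.0 / (n * probs[i]) for i in indices]
    return indices, weights

# Per-sample losses from the latest pass; high-loss samples are drawn more often.
losses = [0.1, 0.1, 2.0, 0.1, 1.5, 0.2]
indices, weights = sample_batch(losses, k=3, rng=random.Random(0))
```

Recomputing `losses` every few iterations is what adjusts the probabilities over time, and the returned weights are what keep the gradient estimate unbiased.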

Data-centric Curriculum Learning

Curriculum learning investigates progressive training settings at different stages of the training process to reduce the overall computational cost.

In the beginning, training on low-quality datasets is enough to learn low-level features; then high-quality datasets (with more augmentation and more complex preprocessing) gradually help learn complex features and reach the same accuracy as using the entire training set.
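Progressive resizing, in which early epochs see small, cheap images and later epochs see full resolution, is one example of such a curriculum. The sketch below is an illustrative assumption: the function `resolution_at` and the default sizes 128 and 224 are not taken from the review.

```python
def resolution_at(epoch, total_epochs, low=128, high=224, step=32):
    """Image side length for a given epoch: grows linearly from `low` to `high`
    and is rounded down to a multiple of `step`."""
    if total_epochs <= 1:
        return high
    frac = epoch / (total_epochs - 1)
    size = low + frac * (high - low)
    return min(high, int(size // step) * step)

# Side length used in each of five epochs, from cheapest to full resolution.
schedule = [resolution_at(epoch, 5) for epoch in range(5)]
```

Because the cost of a convolutional forward pass grows roughly with the number of pixels, the early low-resolution epochs are much cheaper than the final ones.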

Efficient model-centric training

Designing efficient model architectures has always been one of the most important research topics in deep learning. An excellent model should be an efficient feature extractor whose outputs can be projected into easily separable high-level features.

Unlike other works that focus on efficient and novel model architectures, this paper's "model-centric" study pays more attention to equivalent alternatives to common modules, which achieve higher training efficiency under comparable conditions.

Almost all large-scale models are composed of small modules or layers, so studying these modules can guide the efficient training of large-scale models. The researchers mainly focus on the following aspects:

Architecture Efficiency

The sharp increase in the number of parameters in deep models has also brought huge computational consumption, so efficient alternatives are needed that approximate the performance of the original model architecture. This direction has gradually attracted the attention of the academic community. Such replacement is not only an approximation of numerical computation; it also includes structural simplification and fusion in deep models.

The researchers differentiate existing acceleration techniques based on different architectures and present some observations and conclusions.

Compression Training Efficiency

Compression has always been one of the research directions in computing acceleration and plays a key role in digital signal processing (multimedia computing/image processing).

Traditional compression includes two main branches: quantization and sparsity. The article details existing work on both and their contributions to deep training.
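To make the two branches concrete, here is a minimal sketch of symmetric 8-bit quantization and magnitude-based pruning on a flat list of weights. These are textbook forms chosen for illustration; the helper names and the toy weights are assumptions, and the review covers far more elaborate schemes.

```python
def quantize_int8(values):
    """Symmetric uniform quantization to integer codes in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]

def prune_by_magnitude(values, sparsity):
    """Zero out the fraction `sparsity` of entries with the smallest magnitude."""
    n_drop = int(len(values) * sparsity)
    order = sorted(range(len(values)), key=lambda i: abs(values[i]))
    dropped = set(order[:n_drop])
    return [0.0 if i in dropped else v for i, v in enumerate(values)]

weights = [0.02, -1.27, 0.64, -0.05, 0.9, 0.0]
codes, scale = quantize_int8(weights)      # small integers plus one float scale
restored = dequantize(codes, scale)        # each value is off by at most scale / 2
sparse = prune_by_magnitude(weights, 0.5)  # the three smallest entries become zero
```

Quantization trades a bounded rounding error for cheaper storage and arithmetic, while sparsity removes the least important entries so they need not be computed at all.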

Initialization Efficiency

Initialization of model parameters is a very important factor in both existing theoretical analysis and practical scenarios.

A bad initialization state can even cause the entire training to crash or stagnate in the early training phase, while a good initialization helps speed up overall convergence within a smooth region of the loss. The article mainly studies evaluation and algorithm design from the perspective of model initialization.
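Two widely used variance-preserving schemes give a feel for what a "good" initialization looks like. The sketch below shows Xavier/Glorot uniform and the He/Kaiming standard deviation; they are standard examples chosen here as an assumption, not schemes singled out by the article.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng):
    """Glorot/Xavier uniform init: U(-a, a) with a = sqrt(6 / (fan_in + fan_out))."""
    bound = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-bound, bound) for _ in range(fan_in)]
            for _ in range(fan_out)]

def he_normal_std(fan_in):
    """He/Kaiming normal init uses std = sqrt(2 / fan_in), aimed at ReLU layers."""
    return math.sqrt(2.0 / fan_in)

rng = random.Random(0)
# A 32 x 64 weight matrix whose entries stay within the Xavier bound.
layer = xavier_uniform(fan_in=64, fan_out=32, rng=rng)
```

Both rules scale the weights by the layer width so that activations and gradients neither explode nor vanish in the first steps of training.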

Model-centric Curriculum Learning

From a model-centric perspective, curriculum learning usually starts training from a small model or from a subset of the parameters of a large-scale model, and then gradually recovers the entire architecture. It shows clear advantages in accelerating training and has no obvious negative effects. The article reviews the implementation and efficiency of this approach in the training process.

Efficient optimization-centric training

Accelerating optimization methods has always been an important research direction in machine learning, and reducing complexity while still achieving optimality has always been a pursuit of academia.

In recent years, efficient and powerful optimization methods have made important breakthroughs in training deep neural networks. As basic optimizers widely used in machine learning, SGD-type optimizers have successfully helped deep models realize various practical applications. However, as problems become increasingly complex, SGD is more likely to fall into local minima and cannot generalize stably.

To address these difficulties, Adam and its variants were proposed to introduce adaptivity into updates. This approach has achieved good results in large-scale network training, for example in BERT, Transformer, and ViT models.

Beyond the performance of the optimizer itself, how accelerated training techniques are combined is also important.

From the perspective of optimization, the researchers group current ideas for accelerating training into the following aspects:

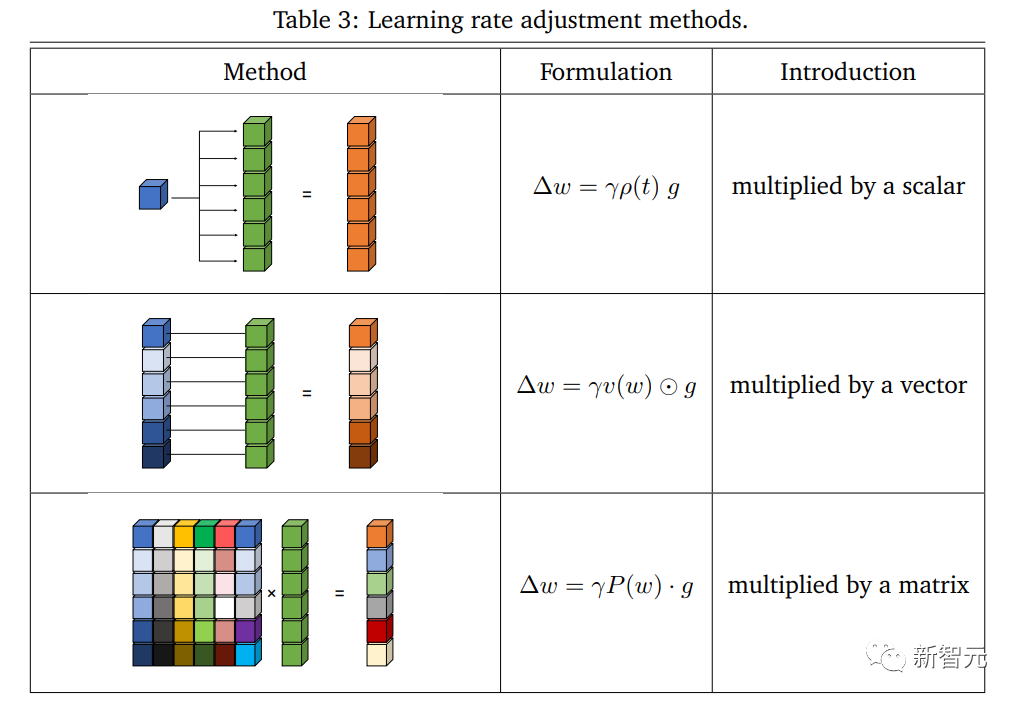

Learning rate

The learning rate is an important hyperparameter for non-convex optimization and is also crucial in current deep network training. Adaptive methods such as Adam and its variants have achieved remarkable progress on deep models.

Some strategies that adjust the learning rate based on higher-order gradients also effectively accelerate training, and how learning rate decay is implemented also affects training performance.
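As one concrete example of learning rate decay, the sketch below combines a linear warmup with cosine decay. This particular schedule and the name `lr_at` are assumptions picked for illustration; the article does not prescribe a specific schedule.

```python
import math

def lr_at(step, total_steps, base_lr=1e-3, warmup_steps=100, min_lr=0.0):
    """Linear warmup to `base_lr`, then cosine decay down to `min_lr`."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))

# Learning rate at a few points of a 1000-step run.
samples = {step: lr_at(step, 1000) for step in (0, 99, 550, 1000)}
```

The warmup avoids large, unstable updates while the weights are still far from a reasonable region, and the slow cosine tail lets the model settle near the end of training.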

Large batch size

Using a larger batch size can effectively improve training efficiency because it directly reduces the number of iterations required to complete one epoch. When the total number of samples is fixed, processing one larger batch is cheaper than processing multiple small batches, because it improves memory utilization and reduces communication bottlenecks.
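The arithmetic behind the first claim is simple enough to sketch. The second helper applies the linear scaling heuristic for the learning rate, a commonly used rule of thumb that is brought in here as an assumption and is not stated in the article; all numbers are illustrative.

```python
import math

def iterations_per_epoch(num_samples, batch_size):
    """Number of optimizer steps needed to see every sample once."""
    return math.ceil(num_samples / batch_size)

def scaled_lr(base_lr, base_batch, batch_size):
    """Linear scaling heuristic: learning rate grows in proportion to batch size."""
    return base_lr * batch_size / base_batch

small = iterations_per_epoch(50_000, 256)    # steps per epoch with a small batch
large = iterations_per_epoch(50_000, 4096)   # far fewer steps with a large batch
lr_large = scaled_lr(0.1, base_batch=256, batch_size=4096)
```

Fewer steps per epoch only pays off if each large step remains stable, which is why large-batch training is usually paired with learning rate adjustments such as the one above.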

Efficient objective

The most basic objective, ERM (empirical risk minimization), plays a key role in the minimization problem and makes many tasks practical.

As research on large networks deepens, some works pay more attention to the gap between optimization and generalization and propose effective objectives to reduce test error. Explaining generalization from different perspectives and jointly optimizing it during training can greatly improve test accuracy.

Averaged weights

Weight averaging is a practical technique that can enhance the generality of the model, because it takes a weighted average of historical states, with a set of frozen or learnable coefficients, and it can greatly speed up the training process.
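An exponential moving average (EMA) of the weights is one simple instance with frozen coefficients. The sketch below uses a toy two-parameter trajectory; the function name `ema_update` and the values are assumptions for illustration.

```python
def ema_update(avg, weights, decay=0.99):
    """One exponential-moving-average step over a flat list of parameters."""
    return [decay * a + (1.0 - decay) * w for a, w in zip(avg, weights)]

# Toy trajectory: noisy iterates oscillating around the point [1.0, -2.0].
trajectory = [[1.2, -2.3], [0.8, -1.7], [1.2, -2.3], [0.8, -1.7]]
averaged = list(trajectory[0])
for weights in trajectory[1:]:
    averaged = ema_update(averaged, weights, decay=0.5)
```

In this toy run the averaged parameters end up closer to the center of the oscillation than the last raw iterate, which is the smoothing effect that weight averaging relies on.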

Efficient budgeted training

Several recent efforts have focused on training deep learning models with fewer resources while achieving the highest possible accuracy.

This type of problem is defined as budgeted training, that is, training within a given budget (a limit on measurable costs) to achieve the highest model performance.

To account for hardware support systematically and stay close to real conditions, the researchers define budgeted training as training on a given device within a limited time, for example training on a single low-end deep learning server for one day, to obtain the best-performing model.

Research on budgeted training can shed light on how to create training recipes for a given budget, including decisions about model size, model structure configuration, learning rate schedule, and several other tunable factors that affect performance, as well as the combination of efficient training techniques suited to the available budget. The article mainly reviews several advanced techniques for budgeted training.
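A budget-aware recipe can be sketched by first converting the time budget into a step budget and then tying the learning rate schedule to it. The linear decay to zero used below is one schedule that has been proposed for budgeted training; presenting it here is an assumption, as are the helper names and the half-second step time.

```python
def steps_for_budget(time_budget_s, seconds_per_step):
    """How many optimizer steps fit into the time budget on the given device."""
    return int(time_budget_s // seconds_per_step)

def budgeted_lr(step, budget_steps, base_lr=0.1):
    """Linear decay that reaches zero exactly when the step budget is spent."""
    remaining = max(0, budget_steps - step)
    return base_lr * remaining / budget_steps

# One day on a single server, assuming a measured 0.5 s per training step.
budget = steps_for_budget(24 * 3600, seconds_per_step=0.5)
halfway_lr = budgeted_lr(budget // 2, budget)
```

Because the schedule is defined relative to the budget rather than to a fixed number of epochs, the same recipe adapts when the available time or hardware changes.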

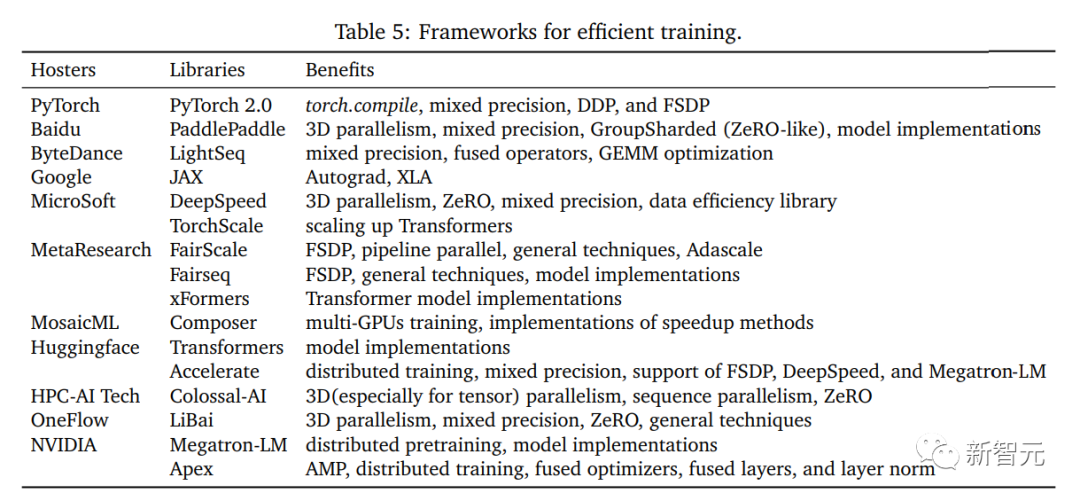

Efficient system-centric training

System-centric research provides concrete implementations for the designed algorithms and studies effective and practical execution on hardware that can truly achieve efficient training.

The researchers focus on implementations for general-purpose computing devices, such as CPU and GPU devices in multi-node clusters; resolving potential conflicts in algorithm design from a hardware perspective is the core concern.

The article mainly reviews hardware implementation techniques in existing frameworks and third-party libraries, which effectively support the processing of data, models, and optimization. It also introduces some existing open source platforms that provide a solid framework for building models, using data effectively for training, mixed-precision training, and distributed training.

System-centric Data Efficiency

Efficient data processing and data parallelism are two important concerns in system implementation.

With the rapid increase in data volume, inefficient data processing has gradually become a bottleneck for training efficiency, especially for large-scale training on multiple nodes. Designing more hardware-friendly computation methods and parallelization can effectively avoid wasting time in training.
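Data parallelism can be simulated in a few lines: each worker computes a gradient on its own shard, and averaging the results recovers the full-batch gradient. The sketch below uses a one-parameter least-squares model and assumes the batch divides evenly among workers; the function names and data are illustrative, and on real hardware the averaging step is performed by a collective operation such as all-reduce.

```python
def gradient(w, batch):
    """Gradient of the mean squared error of y ≈ w * x over `batch`."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def data_parallel_gradient(w, batch, num_workers):
    """Split the batch into equal shards, compute one gradient per simulated
    worker, then average them (the role an all-reduce plays on real hardware)."""
    shard = len(batch) // num_workers
    shards = [batch[i * shard:(i + 1) * shard] for i in range(num_workers)]
    local = [gradient(w, s) for s in shards]
    return sum(local) / num_workers

batch = [(1.0, 2.0), (2.0, 4.5), (3.0, 5.5), (4.0, 8.0)]
full = gradient(0.5, batch)
parallel = data_parallel_gradient(0.5, batch, num_workers=2)
```

Since both paths give the same gradient, the speedup depends entirely on how quickly shards can be loaded and how cheaply the averaging step can be communicated, which is where the data-processing bottleneck shows up.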

System-centric Model Efficiency

With the rapid growth in the number of model parameters, system efficiency from the model perspective has become one of the important bottlenecks, and the storage and computing efficiency of large-scale models pose huge challenges for hardware implementation.

The article mainly reviews how to achieve efficient I/O in deployment and streamlined implementations of model parallelism to speed up actual training.

System-centric Optimization Efficiency

The optimization process covers back propagation and the update in each iteration, which are also the most time-consuming computations in training, so the system-centric implementation of optimization directly determines training efficiency.

In order to clearly interpret the characteristics of system optimization, the article focuses on the efficiency of different calculation stages and reviews the improvements of each process.

Open Source Frameworks

Efficient open source frameworks can facilitate training by serving as a bridge between algorithm design and hardware support. The researchers surveyed a range of open source frameworks and analyzed the strengths and weaknesses of each design.

The researchers review common training acceleration techniques for efficient training of large-scale deep learning models, taking into account all components in the gradient update formula and covering the entire training process in deep learning.

The article also proposes a novel taxonomy, which groups these techniques into five main directions: data-centric, model-centric, optimization-centric, budgeted training, and system-centric.

The first four parts mainly conduct comprehensive research from the perspective of algorithm design and methodology, while the system-centric part summarizes practical implementation from the perspective of paradigm innovation and hardware support.

The article reviews and summarizes commonly used or recently developed techniques for each part, along with the advantages and trade-offs of each technique, and discusses limitations and promising future research directions. While providing a comprehensive technical review and guidance, the review also identifies current breakthroughs and bottlenecks in efficient training.

The researchers hope to help others achieve general training acceleration efficiently and to offer some meaningful and promising implications for the future development of efficient training. In addition to the potential developments mentioned at the end of each section, the broader and promising views are as follows:

1. Efficient Profile search

Efficient training can design pre-built and customizable profile search strategies for models from the perspectives of data augmentation combinations, model structure, optimizer design, and so on. Related research has made some progress.

New model architectures and compression modes, new pre-training tasks, and the use of “model-edge” knowledge are also worth exploring.

2. Adaptive Scheduler

Optimization-oriented schedulers for curriculum learning, learning rate, batch size, and model complexity may achieve better performance. Budget-aware schedulers can dynamically adapt to the remaining budget, reducing the cost of manual design. Adaptive schedulers can also be used to explore parallelism and communication methods while taking into account more general and practical scenarios, such as large-scale decentralized training in heterogeneous networks spanning multiple regions and data centers.
