OpenAI uses GPT-4 to explain GPT-2's 300,000 neurons: This is what wisdom looks like

Although ChatGPT seems to bring humans closer to recreating intelligence, we still do not fully understand what intelligence is, whether natural or artificial.

Understanding the principles of intelligence is clearly necessary. But how can we understand the intelligence of large language models? OpenAI's answer: ask GPT-4.

On May 9, OpenAI released new research that uses GPT-4 to automatically explain the behavior of neurons in large language models, with a number of interesting results.

A simple approach to interpretability is to first understand what the individual components of an AI model (neurons and attention heads) are doing. Traditional methods require humans to manually inspect neurons to work out which features of the data they represent. This process is hard to scale: applying it to neural networks with tens or hundreds of billions of parameters is prohibitively expensive.
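To make the manual approach concrete: a researcher typically starts from the text excerpts on which a neuron fires most strongly. The sketch below assumes a hypothetical data layout in which each excerpt is a list of tokens paired with one neuron's per-token activations, and ranks excerpts by their peak activation. The function name `top_activating` is illustrative and not taken from OpenAI's code.

```python
from typing import List, Tuple

# Hypothetical layout: (tokens of one excerpt, the neuron's activation on each token).
Record = Tuple[List[str], List[float]]


def top_activating(records: List[Record], k: int) -> List[Record]:
    """Return the k excerpts on which the neuron's peak activation is highest."""
    return sorted(records, key=lambda rec: max(rec[1]), reverse=True)[:k]


if __name__ == "__main__":
    # Made-up toy data, for illustration only.
    records = [
        (["the", "cat", "sat"], [0.0, 0.1, 0.0]),
        (["a", "movie", "star"], [0.2, 3.5, 2.9]),
        (["watch", "the", "film"], [0.4, 0.0, 4.1]),
    ]
    for tokens, acts in top_activating(records, k=2):
        print(" ".join(tokens), max(acts))
```

Ranking by the maximum rather than the mean activation keeps excerpts where the neuron fires sharply on a single token, which is usually what a human inspector looks for first.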

OpenAI therefore proposed an automated method: use GPT-4 to generate and score natural language explanations of neuron behavior, and apply it to the neurons of another language model. They chose GPT-2 as the subject of the experiment and published a dataset of explanations and scores for these GPT-2 neurons.

- Paper address: https://openaipublic.blob.core.windows.net/neuron-explainer/paper/index.html

- GPT-2 neuron viewer: https://openaipublic.blob.core.windows.net/neuron-explainer/neuron-viewer/index.html

- Code and dataset: https://github.com/openai/automated-interpretability

With this technique, GPT-4 is used to define and automatically measure a quantitative notion of interpretability for AI models, the explanation score: a measure of a language model's ability to compress and reconstruct neuron activations using natural language. Because the measure is quantitative, progress toward understanding the computations of neural networks can now be tracked.

OpenAI said that, on the benchmark it established, using AI to explain AI can achieve scores close to human level.

OpenAI co-founder Greg Brockman also said that OpenAI had taken an important step toward using AI to automate alignment research.

## Specific method

The method of using AI to explain AI involves running three steps on each neuron:

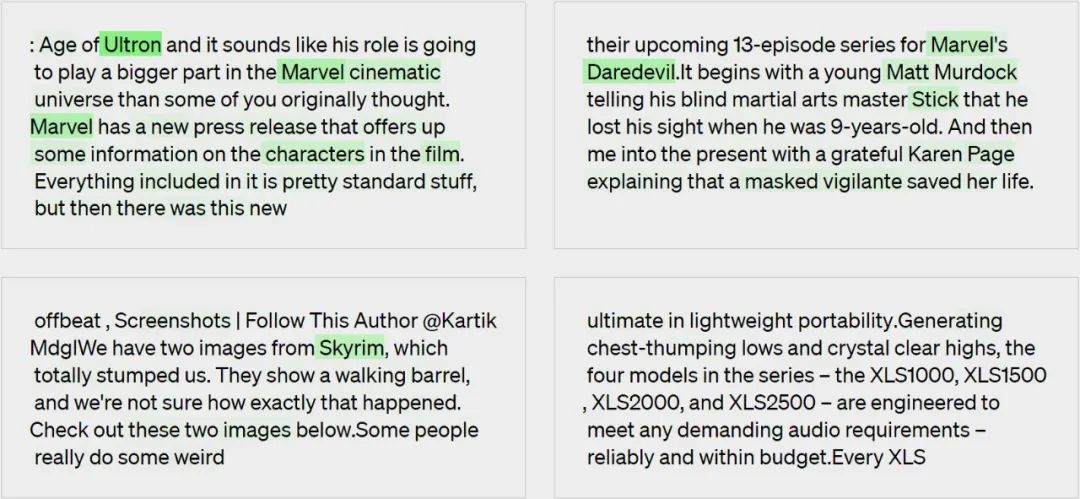

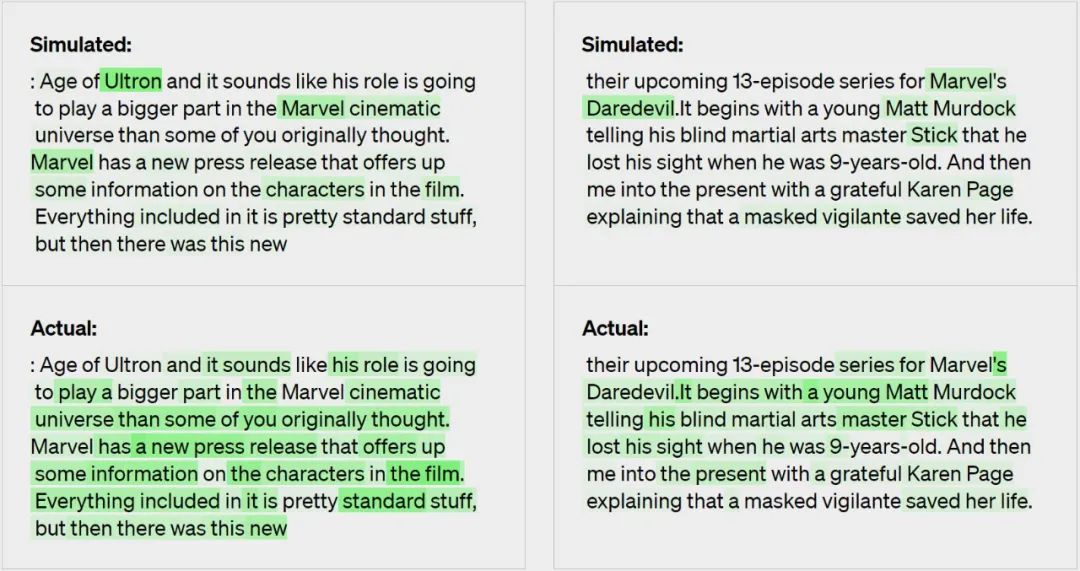

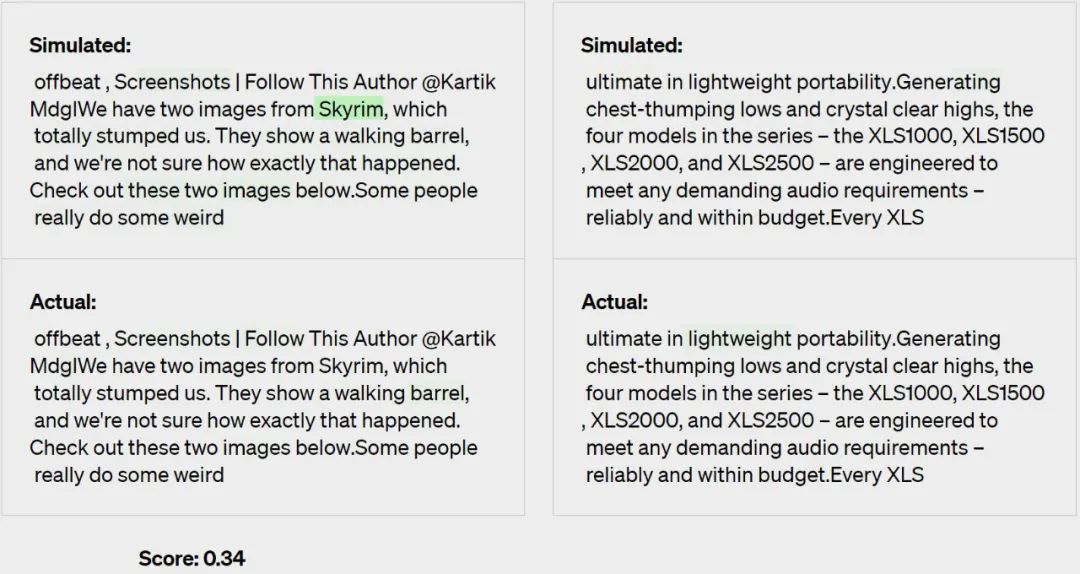

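Step 1: Use GPT-4 to generate explanations

GPT-4 is shown text excerpts together with the GPT-2 neuron's activations on them and asked to explain what the neuron responds to. In the example shown, the model-generated explanation was: references to movies, characters, and entertainment.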
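A rough sketch of how (token, activation) records might be turned into an explanation prompt. It assumes activations are rescaled to integers from 0 to 10 and listed one token per line; the prompt wording and the helpers `discretize` and `build_explanation_prompt` are hypothetical, and OpenAI's actual prompts live in its repository and may differ.

```python
from typing import List


def discretize(activations: List[float], max_activation: float) -> List[int]:
    """Scale raw activations to integers in 0..10, clipping negatives to 0."""
    if max_activation <= 0:
        return [0 for _ in activations]
    return [min(10, max(0, round(10 * a / max_activation))) for a in activations]


def build_explanation_prompt(tokens: List[str], activations: List[float],
                             max_activation: float) -> str:
    """Assemble a prompt asking a model to explain a neuron (hypothetical wording)."""
    lines = [
        "We're studying a neuron in a language model.",
        "Each line shows a token and the neuron's activation on it (0-10).",
        "<start>",
    ]
    for tok, act in zip(tokens, discretize(activations, max_activation)):
        lines.append(f"{tok}\t{act}")
    lines.append("<end>")
    # The model is expected to complete this final line with its explanation.
    lines.append("Explanation of the neuron's behavior: this neuron fires on")
    return "\n".join(lines)
```

Rescaling to a small integer range keeps the prompt short and makes activations comparable across neurons whose raw magnitudes differ.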

Step 2: Use GPT-4 to simulate

Use GPT-4 again to simulate what a neuron matching the explanation would do, that is, to predict its activation on each token of the text.
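One way to turn the simulator's output into numbers, assumed here for illustration: for each token, ask the model for log-probabilities over the activation levels 0 to 10 and take the expected value. The input format (one dict per token mapping level to log-probability) and the function names are hypothetical.

```python
import math
from typing import Dict, List


def expected_activation(logprobs: Dict[int, float]) -> float:
    """Expected activation on the 0..10 scale from log-probabilities over levels.

    Probabilities are renormalized because a model usually spreads some
    probability mass over outputs that are not valid activation levels.
    """
    probs = {level: math.exp(lp) for level, lp in logprobs.items()}
    total = sum(probs.values())
    return sum(level * p for level, p in probs.items()) / total


def simulate(per_token_logprobs: List[Dict[int, float]]) -> List[float]:
    """Turn per-token predictions into a simulated activation sequence."""
    return [expected_activation(lp) for lp in per_token_logprobs]
```

Using the expected value rather than the single most likely level gives a smoother simulated activation, which suits a correlation-based comparison.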

Step 3: Compare

The explanation is scored based on how well the simulated activations match the real activations. In this case, the explanation scored 0.34.
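The comparison in step 3 can be sketched as a correlation between the two activation sequences. The sketch assumes the score is the Pearson correlation over all scored tokens, which appears to be the main scoring method described in the paper; treat it as an illustration rather than OpenAI's implementation.

```python
import math
from typing import List


def correlation_score(real: List[float], simulated: List[float]) -> float:
    """Pearson correlation between real and simulated activations.

    1.0 means the simulation tracks the neuron perfectly (up to scale and
    offset); 0.0 means no linear relationship.
    """
    if len(real) != len(simulated) or len(real) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    n = len(real)
    mean_r = sum(real) / n
    mean_s = sum(simulated) / n
    cov = sum((r - mean_r) * (s - mean_s) for r, s in zip(real, simulated))
    var_r = sum((r - mean_r) ** 2 for r in real)
    var_s = sum((s - mean_s) ** 2 for s in simulated)
    if var_r == 0 or var_s == 0:
        return 0.0  # a constant sequence carries no signal to correlate with
    return cov / math.sqrt(var_r * var_s)
```

Because correlation ignores scale and offset, the simulator only has to get the shape of the activation pattern right; a score such as 0.34 indicates only partial agreement.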

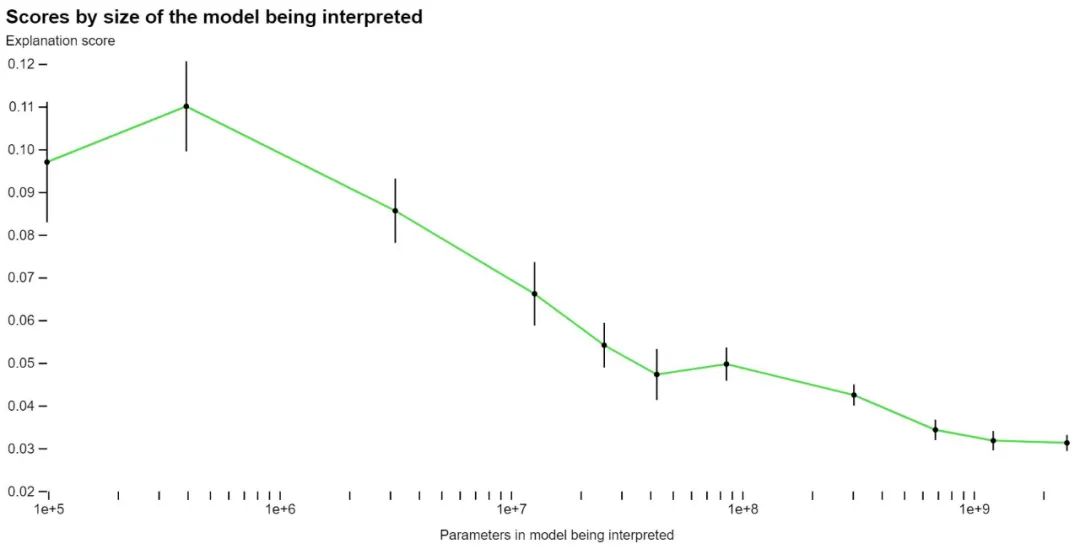

Using its own scoring method, OpenAI began measuring how well the technique works on different parts of the network, and trying to improve it for the parts that are currently poorly explained. For example, the technique does not work well on larger models, possibly because later layers are harder to interpret.
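Measuring the technique on different parts of the network amounts to aggregating scores by location. A minimal sketch, assuming scores arrive as hypothetical (layer, score) pairs, averages them per layer so that a drop in later layers would be easy to spot:

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def mean_score_by_layer(scores: Iterable[Tuple[int, float]]) -> Dict[int, float]:
    """Average explanation score for each layer, given (layer, score) pairs."""
    totals: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for layer, score in scores:
        totals[layer] += score
        counts[layer] += 1
    # Return layers in ascending order so trends across depth read naturally.
    return {layer: totals[layer] / counts[layer] for layer in sorted(totals)}
```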

OpenAI says that although the vast majority of its explanations score poorly, it believes ML techniques can now be used to further improve its ability to generate explanations. For example, the following helped improve scores:

- Iterating on explanations. Scores improved when GPT-4 was asked to come up with possible counterexamples and then revise the explanation in light of the neuron's activations on them.

- Using larger models to give explanations. The average score rises as the capability of the explainer model increases. However, even GPT-4 gives worse explanations than humans, suggesting there is room for improvement.

- Changing the architecture of the explained model. Training models with different activation functions improved explanation scores.
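The iterative idea in the first bullet can be sketched as a greedy revise-and-rescore loop. Here `revise` and `score` are hypothetical callables standing in for the GPT-4 calls (proposing counterexamples and rewriting, then simulating and scoring); the loop itself is an illustrative assumption, not OpenAI's procedure.

```python
from typing import Callable, Tuple


def refine_explanation(
    initial: str,
    revise: Callable[[str], str],
    score: Callable[[str], float],
    rounds: int = 3,
) -> Tuple[str, float]:
    """Greedy revise-and-rescore loop that keeps the best-scoring explanation.

    `revise` stands in for asking a model to find counterexamples and rewrite
    the explanation; `score` stands in for simulate-then-compare scoring.
    """
    best, best_score = initial, score(initial)
    for _ in range(rounds):
        candidate = revise(best)
        candidate_score = score(candidate)
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best, best_score
```

Always revising from the best explanation so far means a bad revision can never lower the final score.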

OpenAI is open-sourcing its dataset and visualization tools for the GPT-4-written explanations of all 307,200 neurons in GPT-2. It is also providing code for explanation and scoring that uses models publicly available through the OpenAI API. OpenAI hopes the research community will develop new techniques for generating higher-scoring explanations, as well as better tools for exploring GPT-2 through its explanations.
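The figure of 307,200 (rounded to 300,000 in the title) is consistent with counting the MLP neurons of GPT-2 XL, the largest GPT-2 model: 48 layers, each with an MLP hidden layer four times the 1,600-dimensional residual width. The arithmetic, with those hyperparameters stated as assumptions taken from the public GPT-2 configuration:

```python
# Assumed GPT-2 XL hyperparameters (from the public GPT-2 configuration).
N_LAYER = 48    # transformer blocks
N_EMBD = 1600   # residual stream width
MLP_RATIO = 4   # MLP hidden layer is 4x the residual width


def mlp_neuron_count(n_layer: int, n_embd: int, mlp_ratio: int = 4) -> int:
    """Total MLP neurons: one hidden layer of n_embd * mlp_ratio units per block."""
    return n_layer * n_embd * mlp_ratio


print(mlp_neuron_count(N_LAYER, N_EMBD, MLP_RATIO))  # prints 307200
```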

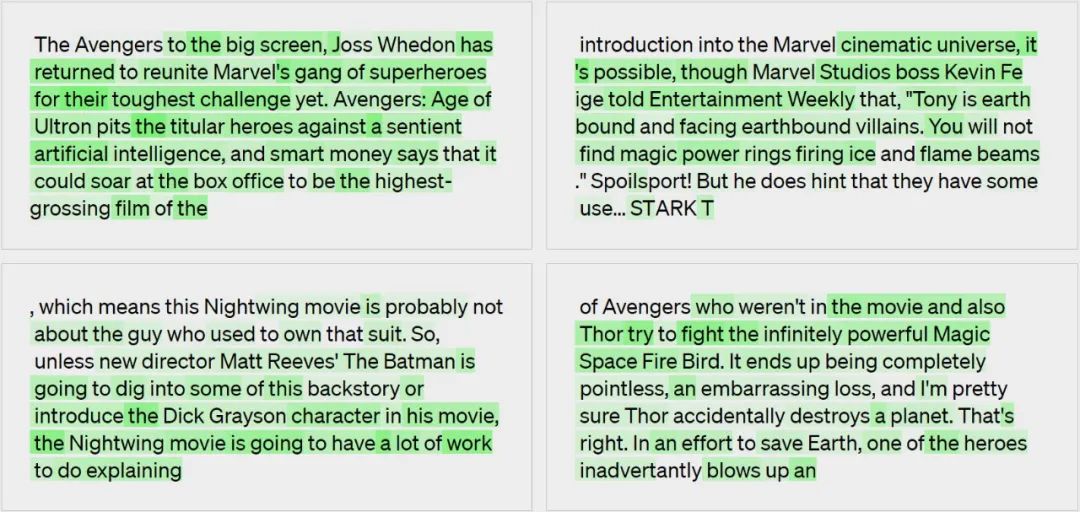

OpenAI found more than 1,000 neurons whose explanations scored at least 0.8, meaning that, according to GPT-4, the explanations account for most of the neuron's top-activating behavior. Most of these well-explained neurons are not very interesting. However, OpenAI also found many interesting neurons that GPT-4 did not understand. OpenAI hopes that as explanations improve, it may be able to rapidly uncover interesting qualitative insights into model computations.
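Selecting the well-explained neurons is a simple threshold filter. The sketch assumes a hypothetical mapping from (layer, neuron index) to explanation score and returns the neurons at or above 0.8, best first; the names are illustrative only.

```python
from typing import Dict, List, Tuple

NeuronId = Tuple[int, int]  # hypothetical key: (layer, index within the layer's MLP)


def well_explained(scores: Dict[NeuronId, float], threshold: float = 0.8) -> List[NeuronId]:
    """Neurons whose explanation score is at least the threshold, best first."""
    hits = [nid for nid, s in scores.items() if s >= threshold]
    return sorted(hits, key=lambda nid: scores[nid], reverse=True)
```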

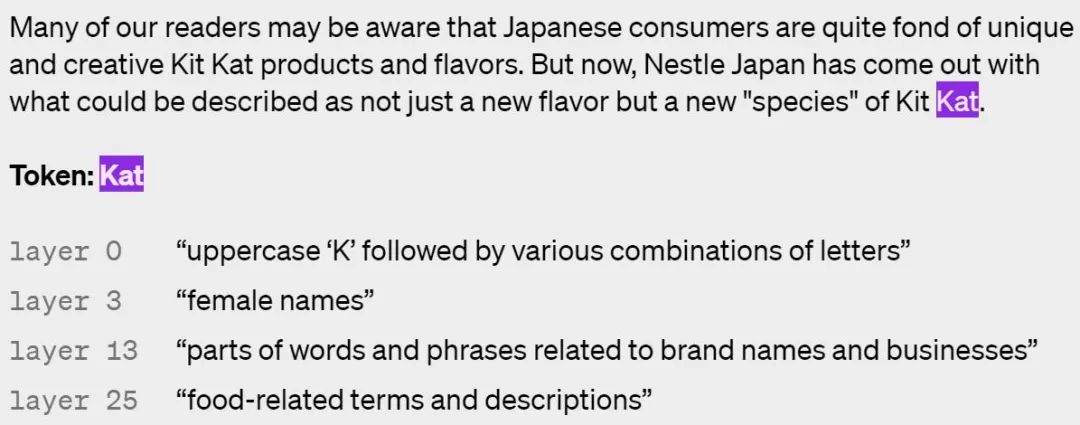

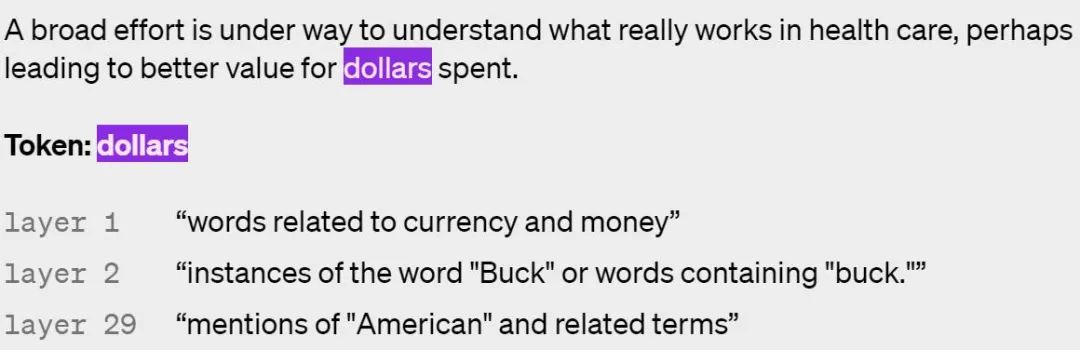

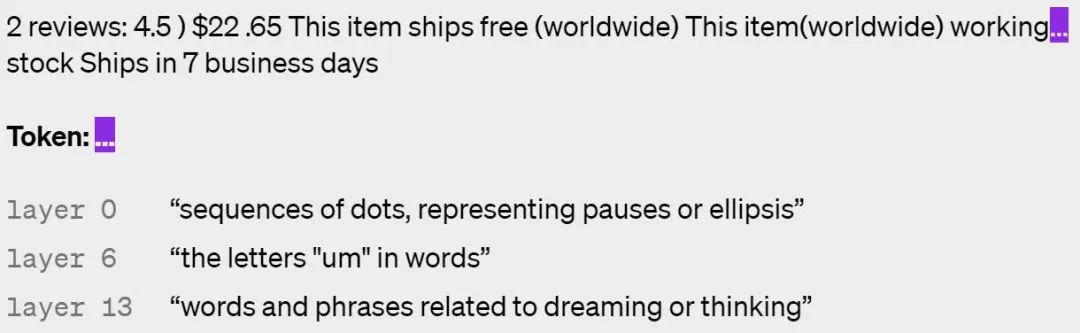

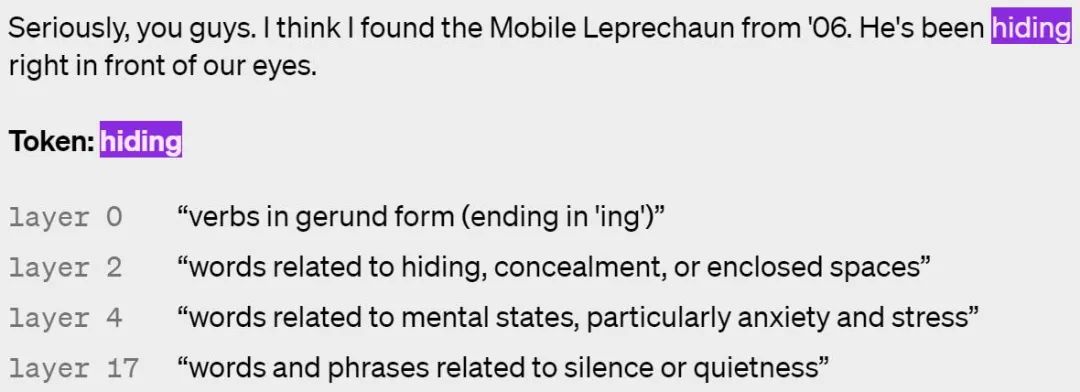

Here are some examples of neurons being activated in different layers, with higher layers being more abstract:

## OpenAI's future work

Currently, the method still has a number of limitations, which OpenAI hopes to address in future work. Ultimately, OpenAI hopes to use models to form, test, and iterate on fully general hypotheses, just as interpretability researchers do. OpenAI also hopes to interpret its largest models as a way to detect alignment and safety problems before and after deployment. However, there is still a long way to go before that happens.
