A Brief Look at the Five Types of Machine Learning Accelerators

Translator | Bugatti

Reviewer | Sun Shujuan

The past ten years have been the era of deep learning. We have been excited by a series of milestones, from AlphaGo to DALL-E 2. Countless products and services driven by artificial intelligence (AI) have entered daily life, including Alexa devices, ad recommendations, warehouse robots, and self-driving cars.

In recent years, the size of deep learning models has grown exponentially. This is not news: the Wu Dao 2.0 model contains 1.75 trillion parameters, and training GPT-3 on 240 ml.p4d.24xlarge instances on the SageMaker training platform takes about 25 days.
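
To put these figures in perspective, the following back-of-envelope sketch estimates the memory needed just to hold Wu Dao 2.0's weights and the GPU-hours of the quoted GPT-3 run. It assumes 2 bytes per parameter (FP16/BF16 weights only, ignoring gradients, optimizer state, and activations) and 8 GPUs per ml.p4d.24xlarge instance; the helper names are illustrative.

```python
# Back-of-envelope sizing for the figures quoted above.
# Assumptions (not from the article): 2 bytes per parameter (FP16/BF16 weights only)
# and 8 GPUs per ml.p4d.24xlarge instance.


def weight_memory_tb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed just to store the weights, in terabytes (1 TB = 10**12 bytes)."""
    return num_params * bytes_per_param / 1e12


def gpu_hours(instances: int, gpus_per_instance: int, days: float) -> float:
    """Total GPU-hours consumed by a training run of the given length."""
    return instances * gpus_per_instance * days * 24


if __name__ == "__main__":
    print(f"Wu Dao 2.0 weights (FP16): {weight_memory_tb(1.75e12):.1f} TB")  # 3.5 TB
    print(f"GPT-3 run: {gpu_hours(240, 8, 25):,.0f} GPU-hours")             # 1,152,000
```

Under these assumptions the weights alone take about 3.5 TB, far more than any single GPU can hold, and the run consumes roughly 1.15 million GPU-hours.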

As deep learning models keep growing, training and deploying them becomes increasingly challenging, and scalability and efficiency are the two major challenges.

This article will summarize the five major types of machine learning (ML) accelerators.

Understanding the ML life cycle in AI engineering

Before looking at ML accelerators in detail, it is worth reviewing the ML life cycle.

The ML life cycle is the life cycle of data and models. Data is the root of ML and determines the quality of the model. Every stage of the life cycle offers opportunities for acceleration.

MLOps can automate ML model deployment. However, because it is operational in nature, it is limited to the horizontal flow of the AI workflow and cannot fundamentally improve training and deployment.

AI engineering goes far beyond the scope of MLOps. It designs the ML workflow, as well as the training and deployment architecture, as a whole, both horizontally and vertically. It also accelerates training and deployment by efficiently orchestrating the entire ML life cycle.

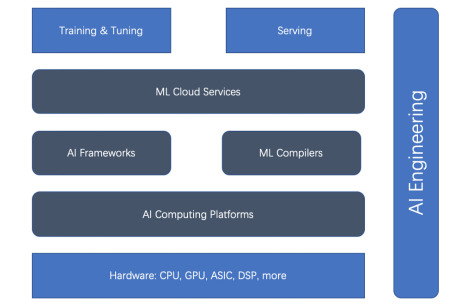

Taking a holistic view of the ML life cycle and AI engineering, there are five main types of ML accelerators (or areas of acceleration): hardware accelerators, AI computing platforms, AI frameworks, ML compilers, and cloud services. Figure 1 shows how they relate.

Figure 1. The relationship between training and deployment accelerators

Hardware accelerators and AI frameworks are the mainstream means of acceleration, but ML compilers, AI computing platforms, and ML cloud services have recently become increasingly important.

Each type is introduced in turn below.

1. AI Framework

Accelerating ML training and deployment starts with choosing the right AI framework. Unfortunately, there is no one-size-fits-all AI framework. Three frameworks are widely used in research and production: TensorFlow, PyTorch, and JAX. Each excels in different areas, such as ease of use, production maturity, and scalability.

TensorFlow: TensorFlow is the flagship AI framework and has dominated the deep learning open source community from the beginning. TensorFlow Serving is a well-defined, mature platform, and TensorFlow.js and TensorFlow Lite are likewise mature for the web and IoT.

However, reflecting the limits of early deep learning exploration, TensorFlow 1.x was designed to build static graphs in a non-Pythonic way. This stood in the way of immediate evaluation in "eager" mode, which let PyTorch gain ground rapidly in research. TensorFlow 2.x tries to catch up, but upgrading from TensorFlow 1.x to 2.x is cumbersome.
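
The difference between the two execution styles can be sketched in plain Python. This is a toy model, not the TensorFlow or PyTorch API, and the names `Node`, `placeholder`, `mul`, `add`, and `run` are hypothetical: in eager mode each operation computes its value immediately, while in the graph style operations only record nodes, and nothing is computed until the whole graph is run with concrete inputs, roughly as TensorFlow 1.x sessions did.

```python
from dataclasses import dataclass, field
from typing import Dict, List


# Eager style: each line computes a real value immediately,
# so print statements and debuggers see actual numbers.
def eager_model(x: float, w: float, b: float) -> float:
    y = x * w
    return y + b


# Graph style (toy): building the model only records nodes.
@dataclass
class Node:
    op: str                                   # "placeholder", "mul", or "add"
    inputs: List["Node"] = field(default_factory=list)
    name: str = ""


def placeholder(name: str) -> Node:
    return Node("placeholder", name=name)


def mul(a: Node, b: Node) -> Node:
    return Node("mul", [a, b])


def add(a: Node, b: Node) -> Node:
    return Node("add", [a, b])


def run(node: Node, feed: Dict[str, float]) -> float:
    """Execute the recorded graph, substituting fed values for placeholders."""
    if node.op == "placeholder":
        return feed[node.name]
    a, b = (run(n, feed) for n in node.inputs)
    return a * b if node.op == "mul" else a + b


if __name__ == "__main__":
    x, w, b = placeholder("x"), placeholder("w"), placeholder("b")
    graph = add(mul(x, w), b)                          # no arithmetic has happened yet
    print(run(graph, {"x": 2.0, "w": 3.0, "b": 1.0}))  # 7.0
    print(eager_model(2.0, 3.0, 1.0))                  # 7.0
```

Because the graph exists as data before it runs, a framework can analyze and optimize it as a whole, but intermediate values are not visible while the model is being written, which is one reason eager mode felt more natural to researchers.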

TensorFlow also introduced Keras to improve overall ease of use, and XLA (Accelerated Linear Algebra), an optimizing compiler, to speed up low-level execution.

PyTorch: With its eager mode and Pythonic approach, PyTorch is a workhorse of today's deep learning community, used everywhere from research to production. Besides TorchServe, PyTorch integrates with framework-agnostic platforms such as Kubeflow. Its popularity is also closely tied to the success of Hugging Face's Transformers library.

JAX: Google introduced JAX, which builds on device-accelerated NumPy and just-in-time (JIT) compilation. Much as PyTorch did a few years ago, this more native deep learning framework is quickly gaining popularity in the research community. However, as Google itself notes, it is not yet an "official" Google product.
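
JAX's `jax.jit` traces a function the first time it is called with a new combination of input shapes and dtypes, compiles that trace with XLA, and reuses the compiled version for later calls with matching inputs. The toy decorator below imitates only this caching behavior in plain Python; `toy_jit` and `trace_count` are hypothetical names, the element type and list length stand in for dtype and shape, and nothing is actually compiled.

```python
import functools
from typing import Callable, Dict, List, Tuple


def toy_jit(fn: Callable[[List[float]], List[float]]):
    """Hypothetical JIT stand-in: keep one 'compiled' version per input signature."""
    cache: Dict[Tuple[str, int], Callable[[List[float]], List[float]]] = {}

    @functools.wraps(fn)
    def wrapper(xs: List[float]) -> List[float]:
        # Abstract signature: (element type, length), analogous to (dtype, shape).
        key = (type(xs[0]).__name__ if xs else "empty", len(xs))
        if key not in cache:
            wrapper.trace_count += 1   # a real JIT would trace and compile here
            cache[key] = fn            # toy: the "compiled" version is fn itself
        return cache[key](xs)

    wrapper.trace_count = 0
    return wrapper


@toy_jit
def scale_and_shift(xs: List[float]) -> List[float]:
    return [2.0 * x + 1.0 for x in xs]


if __name__ == "__main__":
    scale_and_shift([1.0, 2.0, 3.0])    # new signature: traced
    scale_and_shift([4.0, 5.0, 6.0])    # same signature: cache hit
    scale_and_shift([1.0, 2.0])         # new length: traced again
    print(scale_and_shift.trace_count)  # 2
```

Calls with a known signature skip tracing, while a new shape triggers a retrace, which is why JIT-compiled code tends to run fastest when input shapes stay fixed.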

2. Hardware Accelerator

There is no doubt that NVIDIA GPUs can accelerate deep learning training, even though they were originally designed for graphics cards.

With the emergence of general-purpose GPUs, graphics cards became extremely popular for neural network training. General-purpose GPUs can execute arbitrary code, not just rendering subroutines, and NVIDIA's CUDA provides a way to write such code in a C-like language. With a relatively convenient programming model, massive parallelism, and high memory bandwidth, general-purpose GPUs now provide an ideal platform for neural network programming.
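
The GPU programming model described here can be illustrated with SAXPY (y = a*x + y), a classic first CUDA kernel. In CUDA C, each thread computes its global index as `blockIdx.x * blockDim.x + threadIdx.x` and handles one element. The Python sketch below only emulates that indexing scheme sequentially; the nested loops stand in for threads a GPU would run in parallel, and `saxpy_kernel` and `launch` are hypothetical names.

```python
import math
from typing import List


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 n: int, a: float, x: List[float], y: List[float]) -> None:
    """Work done by one 'thread', mirroring a CUDA SAXPY kernel body."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < n:                               # guard: the last block may be partly unused
        y[i] = a * x[i] + y[i]


def launch(n: int, a: float, x: List[float], y: List[float], block_dim: int = 256) -> None:
    """Emulate a <<<grid, block>>> launch; on a GPU these iterations run in parallel."""
    grid_dim = math.ceil(n / block_dim)     # enough blocks to cover all n elements
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y)


if __name__ == "__main__":
    n = 1000
    x, y = [1.0] * n, [2.0] * n
    launch(n, 3.0, x, y)
    print(y[0], y[-1])  # 5.0 5.0
```

With n = 1000 and 256 threads per block, the launch uses 4 blocks (1024 threads), and the bounds check keeps the 24 surplus threads from writing past the end of the arrays.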

Today, NVIDIA offers GPUs for desktops, mobile devices, workstations, mobile workstations, game consoles, and data centers.

Following the great success of NVIDIA GPUs, there has been no shortage of successors, such as AMD GPUs and Google's TPU ASICs.

3. AI Computing Platform

As mentioned earlier, the speed of ML training and deployment depends largely on hardware such as GPUs and TPUs. The platforms that drive this hardware, i.e., AI computing platforms, are therefore critical to performance. Two well-known AI computing platforms are CUDA and OpenCL.

CUDA: CUDA (Compute Unified Device Architecture) is a parallel programming paradigm released by NVIDIA in 2007 for general-purpose computing on GPUs. CUDA is a proprietary API that supports only NVIDIA GPUs, starting with the Tesla microarchitecture; the first supported cards included the GeForce 8 series, Tesla, and Quadro lines.

OpenCL: OpenCL (Open Computing Language) was originally developed by Apple and is now maintained by the Khronos Group for heterogeneous computing across CPUs, GPUs, DSPs, and other types of processors. This portable language is flexible enough to deliver high performance on many hardware platforms, including NVIDIA GPUs.

NVIDIA is now OpenCL 3.0 conformant with R465 and later drivers. Using the OpenCL API, one can launch compute kernels, written in a restricted subset of the C programming language, on the GPU.

4. ML Compiler

ML compilers play a vital role in accelerating training and deployment and can significantly improve the efficiency of large-scale model deployment. Popular compilers include Apache TVM, LLVM, Google MLIR, TensorFlow XLA, Meta Glow, PyTorch nvFuser, and Intel PlaidML.
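
One common optimization these compilers perform is operator fusion: instead of running each elementwise operation as a separate kernel that writes an intermediate tensor to memory, the compiler merges them into a single pass. The plain-Python sketch below contrasts the two for `relu(x * w + b)` and counts element reads and writes as a rough proxy for memory traffic; it illustrates the idea only and is not how any particular compiler implements it.

```python
from typing import List, Tuple


def relu_affine_unfused(x: List[float], w: float, b: float) -> Tuple[List[float], int]:
    """Three separate 'kernels'; each materializes an intermediate list."""
    t1 = [xi * w for xi in x]          # kernel 1: read x,  write t1
    t2 = [ti + b for ti in t1]         # kernel 2: read t1, write t2
    out = [max(ti, 0.0) for ti in t2]  # kernel 3: read t2, write out
    traffic = 3 * 2 * len(x)           # 3 kernels x (1 read + 1 write) per element
    return out, traffic


def relu_affine_fused(x: List[float], w: float, b: float) -> Tuple[List[float], int]:
    """One fused 'kernel': intermediates stay local (in registers, on a GPU)."""
    out = [max(xi * w + b, 0.0) for xi in x]  # read x once, write out once
    traffic = 2 * len(x)
    return out, traffic


if __name__ == "__main__":
    x = [-2.0, -1.0, 0.0, 1.0, 2.0]
    print(relu_affine_unfused(x, 2.0, 1.0))  # ([0.0, 0.0, 1.0, 3.0, 5.0], 30)
    print(relu_affine_fused(x, 2.0, 1.0))    # ([0.0, 0.0, 1.0, 3.0, 5.0], 10)
```

Both versions compute the same result, but fusion cuts the counted traffic from 6n to 2n element accesses; because elementwise operations on GPUs are typically limited by memory bandwidth rather than arithmetic, this kind of saving is a large part of what fusion buys.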

5. ML Cloud Services

ML cloud platforms and services run and manage ML workloads in the cloud, and they can be optimized in several ways to increase efficiency.

Take Amazon SageMaker, a leading ML cloud platform service, as an example. SageMaker provides a wide range of features across the ML life cycle, from data preparation and model building to training/tuning and deployment/management.

It improves training and deployment efficiency in many ways, such as multi-model endpoints on GPUs, cost-effective training on heterogeneous clusters, and AWS's own Graviton processors for CPU-based ML inference.
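
A multi-model endpoint hosts many models behind a single endpoint and loads them into memory on demand, unloading rarely used ones when capacity runs short. The sketch below illustrates that general idea with a least-recently-used (LRU) cache; `MultiModelHost`, `capacity`, and `toy_loader` are hypothetical names, this is not the SageMaker API, and LRU is an assumed eviction policy.

```python
from collections import OrderedDict
from typing import Callable, List

Model = Callable[[float], float]


class MultiModelHost:
    """Hypothetical host that keeps at most `capacity` models loaded (LRU eviction)."""

    def __init__(self, loader: Callable[[str], Model], capacity: int) -> None:
        self._loader = loader        # in practice this would fetch weights from storage
        self._capacity = capacity
        self._loaded: "OrderedDict[str, Model]" = OrderedDict()
        self.loads = 0               # number of (re)loads, i.e. cold starts

    def invoke(self, model_name: str, x: float) -> float:
        if model_name in self._loaded:
            self._loaded.move_to_end(model_name)   # mark as most recently used
        else:
            if len(self._loaded) >= self._capacity:
                self._loaded.popitem(last=False)   # evict the least recently used model
            self._loaded[model_name] = self._loader(model_name)
            self.loads += 1
        return self._loaded[model_name](x)

    def loaded_models(self) -> List[str]:
        return list(self._loaded)


def toy_loader(name: str) -> Model:
    """Toy 'model': model-k multiplies its input by k."""
    factor = int(name.split("-")[1])   # "model-3" -> 3
    return lambda x: factor * x


if __name__ == "__main__":
    host = MultiModelHost(loader=toy_loader, capacity=2)
    for name in ["model-1", "model-2", "model-1", "model-3", "model-2"]:
        host.invoke(name, 10.0)
    print(host.loads, host.loaded_models())  # 4 ['model-3', 'model-2']
```

Sharing one host among many models trades occasional cold-start loads for much better hardware utilization than dedicating a GPU to each rarely used model.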

Conclusion

As deep learning training and deployment continue to scale up, the challenges keep growing, and improving their efficiency is complex. Based on the ML life cycle, five aspects can accelerate ML training and deployment: AI frameworks, hardware accelerators, computing platforms, ML compilers, and cloud services. AI engineering can coordinate all of them, applying engineering principles to improve efficiency across the board.

Original title: 5 Types of ML Accelerators, author: Luhui Hu
