Meta steps up its AI push: its first AI inference chip and an AI supercomputer built for large-model training

Compiled | Li Shuiqing

Editor | Xin Yuan

Zhidongxi News, May 19: On May 18 local time, Meta announced on its official website that, to cope with the sharp growth in demand for AI computing power over the next ten years, it is executing a grand plan: building a new generation of infrastructure designed specifically for AI.

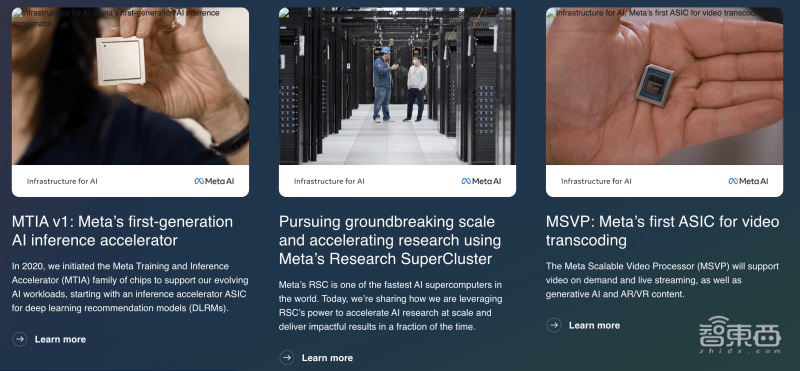

Meta announced its latest progress in building next-generation infrastructure for AI, including its first custom chip for running AI models, a new AI-optimized data center design, its first video transcoding ASIC, and RSC, an AI supercomputer that integrates 16,000 GPUs to accelerate AI training.

▲Meta official website’s disclosure of AI infrastructure details

Meta views AI as core company infrastructure. Since Meta broke ground on its first data center in 2010, AI has become the engine behind the Meta family of applications, which more than 3 billion people use every day. From the Big Sur hardware in 2015, to the development of PyTorch, to the initial deployment of its AI supercomputer last year, Meta has kept building this infrastructure and is now upgrading it further.

1. Meta’s first-generation AI inference accelerator: 7nm process, 102.4 TOPS of computing power

MTIA (Meta Training and Inference Accelerator) is Meta’s first in-house customized accelerator chip series for inference workloads.

AI workloads are ubiquitous in Meta’s business and underpin a wide range of applications, including content understanding, feeds, generative AI, and ad ranking. As AI models grow in size and complexity, the underlying hardware must deliver exponentially more memory and compute while remaining efficient. Meta found that CPUs struggled to reach the efficiency its scale requires, so it designed its own family of training and inference accelerators, the MTIA ASIC series, to address this challenge.

Starting in 2020, Meta designed the first-generation MTIA ASIC for its internal workloads. The accelerator is built on TSMC's 7nm process, runs at 800 MHz, and provides 102.4 TOPS at INT8 precision and 51.2 TFLOPS at FP16 precision. Its thermal design power (TDP) is 25 W.
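To put these figures in perspective, the short sketch below derives nominal efficiency numbers from the specifications quoted above. It assumes peak throughput is reached at exactly the TDP and at the stated clock, which is a simplification for illustration and not something Meta reports. The function and variable names are illustrative.

```python
# Published first-generation MTIA figures quoted in the article.
INT8_TOPS = 102.4     # peak tera-operations per second at INT8
FP16_TFLOPS = 51.2    # peak tera-FLOPs per second at FP16
TDP_WATTS = 25.0      # thermal design power
CLOCK_HZ = 800e6      # 800 MHz

def per_watt(throughput_tera: float, watts: float) -> float:
    """Nominal throughput per watt, assuming peak throughput at TDP."""
    return throughput_tera / watts

def ops_per_cycle(throughput_tera: float, clock_hz: float) -> float:
    """Operations the whole chip must complete each clock cycle to hit peak."""
    return throughput_tera * 1e12 / clock_hz

int8_tops_per_watt = per_watt(INT8_TOPS, TDP_WATTS)
fp16_tflops_per_watt = per_watt(FP16_TFLOPS, TDP_WATTS)
int8_ops_per_cycle = ops_per_cycle(INT8_TOPS, CLOCK_HZ)

print(f"INT8: {int8_tops_per_watt:.3f} TOPS/W")
print(f"FP16: {fp16_tflops_per_watt:.3f} TFLOPS/W")
print(f"INT8 operations per cycle: {int8_ops_per_cycle:,.0f}")
```

Under these assumptions the chip works out to roughly 4.1 TOPS/W at INT8 and about 128,000 INT8 operations per clock cycle. The INT8 peak is exactly twice the FP16 peak.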

According to reports, MTIA delivers more computing power and higher efficiency than CPUs. Deploying MTIA chips alongside GPUs is expected to provide better performance, lower latency and higher efficiency for each workload.

2. Planning the next-generation data center and developing the first video transcoding ASIC

Meta’s next-generation data center design will support its current products while supporting training and inference for future generations of AI hardware. This new data center will be optimized for AI, supporting liquid-cooled AI hardware and a high-performance AI network connecting thousands of AI chips for data center-scale AI training clusters.

According to the official website, Meta’s next-generation data center will also be faster and more cost-effective to build, and will be complemented by other new hardware such as MSVP, Meta’s first internally developed ASIC solution, designed to power Meta’s growing video workloads.

With the emergence of new kinds of content such as generative AI, demand on video infrastructure has intensified further, which prompted Meta to introduce MSVP, a scalable video processor.

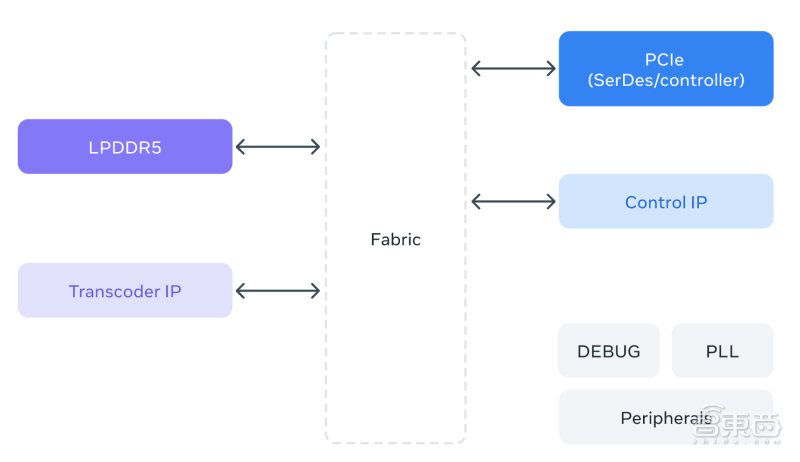

MSVP is Meta’s first in-house developed ASIC for video transcoding. MSVP is programmable and scalable, and can be configured to efficiently support the high-quality transcoding required for on-demand, as well as the low latency and faster processing times required for live streaming. In the future, MSVP will also help bring new forms of video content to every member of the Meta family of applications - including AI-generated content as well as VR (virtual reality) and AR (augmented reality) content.

▲MSVP architecture diagram

3. The AI supercomputer integrates 16,000 GPUs and supports LLaMA large models to accelerate training iterations

According to Meta’s announcement, its AI Research SuperCluster (RSC) is one of the fastest AI supercomputers in the world. It is designed to train the next generation of large-scale AI models that will power new AR tools, content understanding systems, real-time translation technology, and more.

Meta's RSC features 16,000 GPUs, all accessible through a three-level Clos network fabric that provides full bandwidth to each of its 2,000 training systems. Over the past year, RSC has been powering research projects such as LLaMA.
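As a quick sanity check on these numbers, the sketch below works out how many GPUs each training system would hold. It assumes the GPUs are spread evenly across the systems, which the article does not state explicitly. The names are illustrative.

```python
# Figures quoted in the article for Meta's RSC.
TOTAL_GPUS = 16_000
TRAINING_SYSTEMS = 2_000

def gpus_per_system(total_gpus: int, systems: int) -> int:
    """GPUs per training system, assuming an even split (an assumption)."""
    if systems <= 0 or total_gpus % systems != 0:
        raise ValueError("GPUs do not divide evenly across systems")
    return total_gpus // systems

gpus_each = gpus_per_system(TOTAL_GPUS, TRAINING_SYSTEMS)
print(f"{gpus_each} GPUs per training system")
```

With an even split, each of the 2,000 training systems would hold 8 GPUs.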

LLaMA is a large language model built and open sourced by Meta earlier this year, with up to 65 billion parameters. Meta says its goal is to provide smaller, higher-performance models that researchers can study and fine-tune for specific tasks without needing substantial hardware.

Meta trained LLaMA 65B and the smaller LLaMA 33B on 1.4 trillion tokens. Its smallest model, LLaMA 7B, was trained on one trillion tokens. The ability to run at scale allows Meta to speed up training and tuning iterations and release models faster than other companies.
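One way to read these training figures is as a ratio of training tokens to model parameters. The sketch below computes that ratio from the numbers quoted above. It treats the sizes in the model names (65B, 33B, 7B) as exact parameter counts, which is an approximation, and the helper name is illustrative.

```python
# (nominal parameter count, training tokens), as quoted in the article.
MODELS = {
    "LLaMA 65B": (65e9, 1.4e12),
    "LLaMA 33B": (33e9, 1.4e12),
    "LLaMA 7B": (7e9, 1.0e12),
}

def tokens_per_parameter(params: float, tokens: float) -> float:
    """Training tokens seen per model parameter."""
    return tokens / params

ratios = {
    name: tokens_per_parameter(params, tokens)
    for name, (params, tokens) in MODELS.items()
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f} tokens per parameter")
```

On these nominal sizes, the smallest model sees by far the most data relative to its size: roughly 143 tokens per parameter for LLaMA 7B, against about 22 for LLaMA 65B.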

Conclusion: The application of large model technology forces major manufacturers to accelerate the layout of infrastructure

Meta custom-designs much of its infrastructure primarily because doing so lets it optimize the end-to-end experience, from the physical layer to the software layer to the actual user experience. Because Meta controls the stack from top to bottom, it can tailor it to its own specific needs. This infrastructure will support Meta in developing and deploying larger and more complex AI models.

Over the next few years, we will see increased specialization and customization in chip design, specialized and workload-specific AI infrastructure, new systems and tools, and increased efficiency in product and design support. These will deliver increasingly sophisticated models and products built on the latest research, enabling people around the world to use this emerging technology.

Source: Meta official website