Alibaba Cloud launches comprehensive transformation plan and large language model 'Tongyi Qianwen'

According to news on May 23, Alibaba Cloud, a subsidiary of Alibaba Group, plans to carry out a round of organizational and personnel optimization to further refine its business strategy, organization and operations. The news comes five days after Zhang Yong, chairman of the board of directors of Alibaba Group and CEO of Alibaba Cloud Intelligence, announced that Alibaba Cloud would be fully spun off from Alibaba Group and complete its listing within the next 12 months.

Multiple sources said that the optimization plan began in mid-May; Alibaba Group had announced its results for the previous year only last month. Although reports of a 7% layoff at Alibaba Cloud have attracted much attention, Alibaba Cloud has responded that this is a routine optimization of organizational positions and personnel. According to a company insider, the layoff compensation standard is "N+1+1", and untaken annual leave and companion leave can be converted into cash.

Organizational and personnel optimization is carried out every year, and Alibaba Cloud, as an important business segment of Alibaba Group, is no exception. This round is seen as a move to further strengthen business strategy and improve organizational efficiency. Since Zhang Yong took over Alibaba Cloud in December last year, he has taken a number of important measures, one of which is the largest price cut in the history of Alibaba Cloud's products. The price cut is aimed at reducing the cost of cloud services and expanding market share.

According to IDC data, Alibaba Cloud has long held the leading position in the domestic public cloud market, but in the second half of 2022 its market share fell by 4.8% compared with the same period a year earlier. At the same time, overall growth in the public cloud market has slowed significantly, with the revenue growth rate dropping by nearly 24 percentage points year-on-year. This may be part of the background to Alibaba Cloud's optimization.

After eliminating the impact of cross-segment transactions, Alibaba Group’s cloud business revenue in the first quarter of this year was 18.582 billion yuan, down 2% year-on-year. In response, Alibaba Cloud launched its latest large language model, "Tongyi Qianwen", in April, and plans to comprehensively transform all of its products for the era of artificial intelligence.

To sum up, Alibaba Cloud's organizational and personnel optimization plan aims to refine its business strategy, improve organizational efficiency, and adapt to changes in the current public cloud market. Alibaba Cloud will continue striving to maintain its leading position in cloud computing and to provide users with better cloud services.

Alibaba Cloud announced that the 2024 Yunqi Conference will be held in Hangzhou from September 19th to 21st. Free application for free tickets

Aug 07, 2024 pm 07:12 PM

Alibaba Cloud announced that the 2024 Yunqi Conference will be held in Hangzhou from September 19th to 21st. Free application for free tickets

Aug 07, 2024 pm 07:12 PM

According to news from this website on August 5, Alibaba Cloud announced that the 2024 Yunqi Conference will be held in Yunqi Town, Hangzhou from September 19th to 21st. There will be a three-day main forum, 400 sub-forums and parallel topics, as well as nearly four Ten thousand square meters of exhibition area. Yunqi Conference is free and open to the public. From now on, the public can apply for free tickets through the official website of Yunqi Conference. An all-pass ticket of 5,000 yuan can be purchased. The ticket website is attached on this website: https://yunqi.aliyun.com/2024 /ticket-list According to reports, the Yunqi Conference originated in 2009 and was originally named the First China Website Development Forum. In 2011, it evolved into the Alibaba Cloud Developer Conference. In 2015, it was officially renamed the "Yunqi Conference" and has continued to successful move

Alibaba Cloud announced that it will open source Tongyi Qianwen's 14 billion parameter model Qwen-14B and its dialogue model, which will be free for commercial use.

Sep 26, 2023 pm 08:05 PM

Alibaba Cloud announced that it will open source Tongyi Qianwen's 14 billion parameter model Qwen-14B and its dialogue model, which will be free for commercial use.

Sep 26, 2023 pm 08:05 PM

Alibaba Cloud today announced an open source project called Qwen-14B, which includes a parametric model and a conversation model. This open source project allows free commercial use. This site states: Alibaba Cloud has previously open sourced a parameter model Qwen-7B worth 7 billion. The download volume in more than a month has exceeded 1 million times. According to the data provided by Alibaba Cloud, Qwen -14B surpasses models of the same size in multiple authoritative evaluations, and some indicators are even close to Llama2-70B. According to reports, Qwen-14B is a high-performance open source model that supports multiple languages. Its overall training data exceeds 3 trillion Tokens, has stronger reasoning, cognition, planning and memory capabilities, and supports a maximum context window of 8k

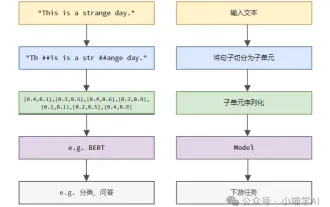

Understand Tokenization in one article!

Apr 12, 2024 pm 02:31 PM

Understand Tokenization in one article!

Apr 12, 2024 pm 02:31 PM

Language models reason about text, which is usually in the form of strings, but the input to the model can only be numbers, so the text needs to be converted into numerical form. Tokenization is a basic task of natural language processing. It can divide a continuous text sequence (such as sentences, paragraphs, etc.) into a character sequence (such as words, phrases, characters, punctuation, etc.) according to specific needs. The units in it Called a token or word. According to the specific process shown in the figure below, the text sentences are first divided into units, then the single elements are digitized (mapped into vectors), then these vectors are input to the model for encoding, and finally output to downstream tasks to further obtain the final result. Text segmentation can be divided into Toke according to the granularity of text segmentation.

Three secrets for deploying large models in the cloud

Apr 24, 2024 pm 03:00 PM

Three secrets for deploying large models in the cloud

Apr 24, 2024 pm 03:00 PM

Compilation|Produced by Xingxuan|51CTO Technology Stack (WeChat ID: blog51cto) In the past two years, I have been more involved in generative AI projects using large language models (LLMs) rather than traditional systems. I'm starting to miss serverless cloud computing. Their applications range from enhancing conversational AI to providing complex analytics solutions for various industries, and many other capabilities. Many enterprises deploy these models on cloud platforms because public cloud providers already provide a ready-made ecosystem and it is the path of least resistance. However, it doesn't come cheap. The cloud also offers other benefits such as scalability, efficiency and advanced computing capabilities (GPUs available on demand). There are some little-known aspects of deploying LLM on public cloud platforms

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024 am 06:42 AM

Editor |ScienceAI Question Answering (QA) data set plays a vital role in promoting natural language processing (NLP) research. High-quality QA data sets can not only be used to fine-tune models, but also effectively evaluate the capabilities of large language models (LLM), especially the ability to understand and reason about scientific knowledge. Although there are currently many scientific QA data sets covering medicine, chemistry, biology and other fields, these data sets still have some shortcomings. First, the data form is relatively simple, most of which are multiple-choice questions. They are easy to evaluate, but limit the model's answer selection range and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

Efficient parameter fine-tuning of large-scale language models--BitFit/Prefix/Prompt fine-tuning series

Oct 07, 2023 pm 12:13 PM

Efficient parameter fine-tuning of large-scale language models--BitFit/Prefix/Prompt fine-tuning series

Oct 07, 2023 pm 12:13 PM

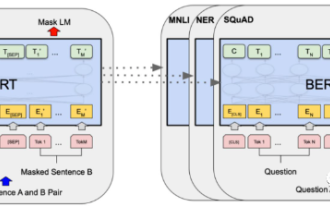

In 2018, Google released BERT. Once it was released, it defeated the State-of-the-art (Sota) results of 11 NLP tasks in one fell swoop, becoming a new milestone in the NLP world. The structure of BERT is shown in the figure below. On the left is the BERT model preset. The training process, on the right is the fine-tuning process for specific tasks. Among them, the fine-tuning stage is for fine-tuning when it is subsequently used in some downstream tasks, such as text classification, part-of-speech tagging, question and answer systems, etc. BERT can be fine-tuned on different tasks without adjusting the structure. Through the task design of "pre-trained language model + downstream task fine-tuning", it brings powerful model effects. Since then, "pre-training language model + downstream task fine-tuning" has become the mainstream training in the NLP field.

RoSA: A new method for efficient fine-tuning of large model parameters

Jan 18, 2024 pm 05:27 PM

RoSA: A new method for efficient fine-tuning of large model parameters

Jan 18, 2024 pm 05:27 PM

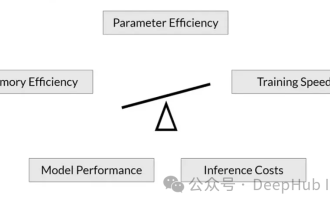

As language models scale to unprecedented scale, comprehensive fine-tuning for downstream tasks becomes prohibitively expensive. In order to solve this problem, researchers began to pay attention to and adopt the PEFT method. The main idea of the PEFT method is to limit the scope of fine-tuning to a small set of parameters to reduce computational costs while still achieving state-of-the-art performance on natural language understanding tasks. In this way, researchers can save computing resources while maintaining high performance, bringing new research hotspots to the field of natural language processing. RoSA is a new PEFT technique that, through experiments on a set of benchmarks, is found to outperform previous low-rank adaptive (LoRA) and pure sparse fine-tuning methods using the same parameter budget. This article will go into depth

Detailed explanation of Maven Alibaba Cloud image configuration

Feb 21, 2024 pm 10:12 PM

Detailed explanation of Maven Alibaba Cloud image configuration

Feb 21, 2024 pm 10:12 PM

Detailed explanation of Maven Alibaba Cloud image configuration Maven is a Java project management tool. By configuring Maven, you can easily download dependent libraries and build projects. The Alibaba Cloud image can speed up Maven's download speed and improve project construction efficiency. This article will introduce in detail how to configure Alibaba Cloud mirroring and provide specific code examples. What is Alibaba Cloud Image? Alibaba Cloud Mirror is the Maven mirror service provided by Alibaba Cloud. By using Alibaba Cloud Mirror, you can greatly speed up the downloading of Maven dependency libraries. Alibaba Cloud Mirror