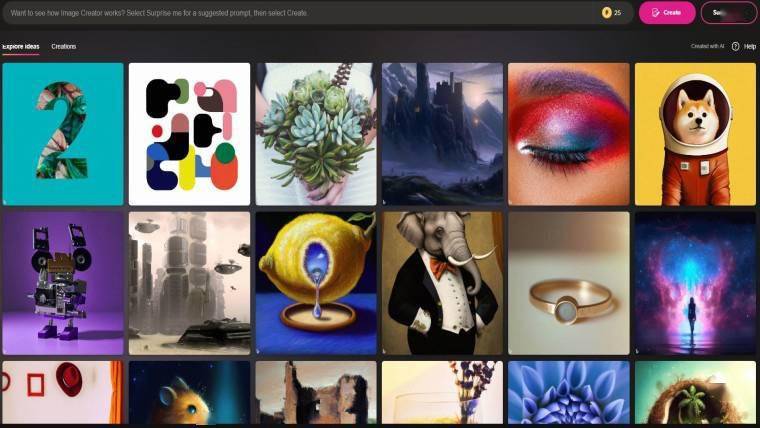

IT Home reported on May 24 that Microsoft has recently launched a range of software and services built on artificial intelligence. In March, the company officially released Bing Image Creator, which lets users create artwork from simple text prompts. In April, it opened a full public beta of Microsoft Designer, which lets users combine text prompts and AI models to create blog posts, websites, and other projects.

However, there are also concerns that AI-generated art could be misused to spread false information. As more and more "deepfake" images and videos emerge, Microsoft has decided to take proactive steps to make its AI-generated art identifiable. Today, at its Build 2023 developer conference, the company announced that in the coming months it will add a feature that lets anyone determine whether an image or video clip produced by Bing Image Creator or Microsoft Designer was generated by AI.

According to Microsoft, the feature uses cryptographic methods to mark and sign AI-generated content and to attach metadata describing where the content came from. Microsoft says it has been a leader in developing methods for verifying provenance, and it co-founded Project Origin and the Coalition for Content Provenance and Authenticity (C2PA) standards body. The company will use the C2PA standard to sign and verify generated content so that the origin of the media can be authenticated.
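For readers curious how signed provenance works in principle, here is a minimal sketch. It is not Microsoft's or C2PA's actual implementation: it assumes a shared secret key and uses Python's standard-library HMAC in place of the certificate-based public-key signatures that C2PA relies on, and the field names and generator string are hypothetical. The idea it illustrates is to hash the content, bind that hash to metadata about its origin, and sign the bundle so that any later change to either one is detectable.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. Real C2PA signing uses X.509 certificates and
# public-key signatures, not a shared key like this.
SIGNING_KEY = b"demo-secret-key"


def make_manifest(content: bytes, generator: str) -> dict:
    """Bind provenance metadata to the content's hash and sign the bundle."""
    claim = {
        "generator": generator,  # hypothetical field: which tool made the content
        "ai_generated": True,    # hypothetical field: the AI-origin label
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Return True only if the metadata is untampered and matches the content."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # metadata was altered or signed with a different key
    # The signature is valid; now check the content itself was not edited.
    return manifest["claim"]["content_sha256"] == hashlib.sha256(content).hexdigest()


image = b"\x89PNG placeholder image bytes"
manifest = make_manifest(image, "hypothetical-image-generator")
print(verify_manifest(image, manifest))            # True
print(verify_manifest(image + b"edit", manifest))  # False
```

Because the signature covers the content hash, editing either the image bytes or the metadata makes verification fail. A public-key scheme such as the one C2PA specifies adds one more property this sketch lacks: anyone can verify the signature without holding the secret used to create it.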

Microsoft said the feature will work with the "major image and video formats" supported by its two AI content generation programs, but it did not say which formats those are. IT Home previously reported that Microsoft announced earlier this month that Bing Image Creator now supports more than 100 languages and has created more than 200 million images.
