Accelerate reading and writing: Caches are usually held entirely in memory (for example Redis, Memcache), while the storage layer (such as MySQL) usually has weaker read and write performance, and memory reads and writes are much faster than disk I/O. Using a cache can therefore effectively accelerate reads and writes and improve the user experience.

Reduce back-end load: A cache helps the back end by reducing both the number of accesses and the amount of complex computation (such as very complex SQL statements) it has to handle, which lowers its load considerably. MySQL is configured with a maximum number of connections; if a large number of requests reach the database at the same time while disk I/O is slow, the connections can easily be used up, whereas Redis's theoretical connection limit is much higher.

Data inconsistency: The data in the cache layer and the storage layer are inconsistent within a certain time window. The length of that window depends on the update strategy.

Code maintenance cost: After adding the cache, the logic of the cache layer and the storage layer needs to be processed at the same time, which increases the cost of maintaining the code for developers.

Operation and maintenance costs: Take Redis Cluster as an example. Once it is added, operation and maintenance costs rise quietly along with it.

Complex calculations with high overhead: Take MySQL as an example. For some complex operations or calculations (for example, a large number of table joins or grouping calculations), not adding a cache means the system cannot handle high concurrency and MySQL comes under heavy load.

Accelerate request response: Even if querying a single piece of back-end data is fast enough, a cache can still help. Taking Redis as an example, it can complete tens of thousands of reads and writes per second, and the batch operations it provides can optimize the response time of the entire I/O chain.

Thinking: Redis in a production environment often seems to lose some data. A key is written, and after a while it may be gone. What is the reason?

Redis is a cache, and it keeps its data in memory in order to serve high-performance, high-concurrency reads and writes. Memory is precious and limited, whereas disks are cheap and large: a machine might have only a few dozen gigabytes of memory but several terabytes of disk capacity. So if Redis is allowed to use only 10G of memory, what happens when you write 20G of data into it? Of course, 10G of data will be deleted and 10G will be retained. Which data is deleted and which is retained? Obviously, infrequently used data should be deleted and frequently used data kept. In addition, because of how Redis's expiration policy works, data that has already expired may continue to occupy memory for a while.

In Redis, when the used memory reaches the maxmemory upper limit (used_memory>maxmemory), the corresponding overflow control policy will be triggered. The specific policy is controlled by the maxmemory-policy parameter.

Redis supports the following 6 strategies (Redis 4.0 and later also add the LFU-based volatile-lfu and allkeys-lfu):

noeviction: The default strategy. No data is deleted; write operations are rejected and the client receives the error message (error) OOM command not allowed when used memory > 'maxmemory'. In this state Redis only responds to read operations.

volatile-lru: Delete keys that have a timeout attribute (expire) set, according to the LRU algorithm, until enough space is freed. If there are no deletable keys, fall back to the noeviction strategy.

volatile-random: Randomly delete keys that have a timeout attribute set until enough space is freed.

allkeys-lru: Delete keys according to the LRU algorithm, regardless of whether the data has a timeout attribute set, until enough space is made available

allkeys-random: Randomly delete keys from the whole keyspace until enough space is made available (not recommended).

volatile-ttl: Delete the keys that are closest to expiring, based on the ttl (time to live) attribute of the key-value object. If there are no such keys, fall back to the noeviction strategy.

LRU: Least Recently Used. Each cached element carries a timestamp of its last access. When the cache is full and space must be freed for a new element, the existing element whose timestamp is farthest from the current time is removed from the cache.
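The LRU idea described above can be sketched in a few lines of Python. This is only an illustration of the algorithm, not how Redis implements it: Redis approximates LRU by sampling keys rather than keeping an exact ordering. The class name LRUCache and its methods are made up for this example.

```python
from collections import OrderedDict


class LRUCache:
    """Tiny LRU cache: evicts the least recently used key when full."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest access first, newest last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used

    def keys(self):
        return list(self._items)


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" is now more recent than "b"
cache.put("c", 3)    # cache is full, so "b" is evicted
print(cache.keys())  # ['a', 'c']
```

Here the ordering of the OrderedDict plays the role of the timestamp: the entry at the front is the one that was touched longest ago.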

The memory overflow control strategy can be configured dynamically using config set maxmemory-policy {policy}. When memory overflows, write commands trigger memory recovery frequently, which is very costly. In a master-slave replication architecture, the delete commands produced by memory recovery are also synchronized to the slave nodes to keep master and slave data consistent, which leads to a write amplification problem.
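As a rough sketch, switching the policy at runtime from application code might look like the following. It assumes a client object that exposes CONFIG SET as config_set(name, value), which is how the redis-py client does it. The helper set_eviction_policy and the FakeClient stand-in are hypothetical and exist only so the example runs without a server.

```python
VALID_POLICIES = {
    "noeviction",
    "volatile-lru",
    "volatile-random",
    "allkeys-lru",
    "allkeys-random",
    "volatile-ttl",
}


def set_eviction_policy(client, policy):
    """Validate the policy name, then apply it with CONFIG SET."""
    if policy not in VALID_POLICIES:
        raise ValueError(f"unknown maxmemory-policy: {policy!r}")
    # redis-py exposes CONFIG SET as client.config_set(name, value)
    return client.config_set("maxmemory-policy", policy)


class FakeClient:
    """Stand-in for a Redis client so the sketch runs without a server."""

    def __init__(self):
        self.config = {"maxmemory-policy": "noeviction"}

    def config_set(self, name, value):
        self.config[name] = value
        return True


client = FakeClient()
set_eviction_policy(client, "allkeys-lru")
print(client.config["maxmemory-policy"])  # allkeys-lru
```

With a real server, the same call would be made on an actual client instance in place of FakeClient.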

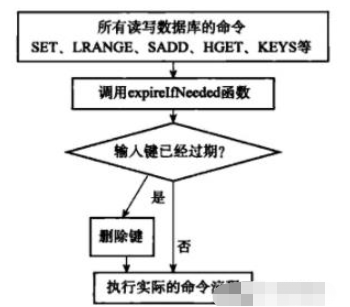

The expiration strategy adopted by the Redis server is lazy deletion combined with scheduled deletion.

Lazy deletion:

Each Redis database contains an expiration dictionary, which stores the expiration time of every key that has one. When a client reads a key, Redis first checks the expiration dictionary to see whether the key has expired. If it has, Redis deletes the key and returns an empty result. This strategy saves CPU cost, but used alone it behaves like a memory leak: an expired key that is never accessed again is never deleted, so its memory is not released in time.

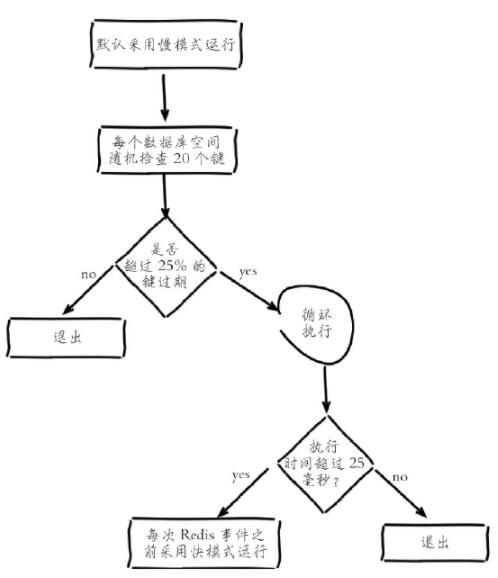
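The lazy deletion flow can be illustrated with a small in-process store. This is a simplified model and not Redis code; the class LazyExpiringStore and the injected clock are assumptions made for the example.

```python
import time


class LazyExpiringStore:
    """Key-value store that deletes expired keys only when they are read."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._expires = {}  # the "expiration dictionary": key -> deadline
        self._clock = clock

    def set(self, key, value, ttl=None):
        self._data[key] = value
        if ttl is None:
            self._expires.pop(key, None)
        else:
            self._expires[key] = self._clock() + ttl

    def get(self, key):
        deadline = self._expires.get(key)
        if deadline is not None and self._clock() >= deadline:
            # Expired: delete on access and report a miss.
            del self._data[key]
            del self._expires[key]
            return None
        return self._data.get(key)

    def size(self):
        return len(self._data)


now = [0.0]  # fake clock so the example is deterministic
store = LazyExpiringStore(clock=lambda: now[0])
store.set("session", "abc", ttl=10)
now[0] = 11.0
print(store.size())          # 1: expired but never read, still in memory
print(store.get("session"))  # None: the read triggers the delete
print(store.size())          # 0
```

The first size() call shows the weakness described above: until something reads the key, the expired entry keeps occupying memory.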

Scheduled deletion:

Redis maintains a scheduled task internally and by default runs 10 expiration scans per second (adjustable through the hz setting in redis.conf). A scan does not traverse all keys in the expiration dictionary. Instead, it uses an adaptive algorithm that recycles keys based on the proportion of expired keys, using two rate modes, fast and slow:

1. Randomly take 20 keys from the expiration dictionary

2. Delete the expired keys among these 20 keys

3. If the proportion of expired keys exceeds 25%, repeat steps 1 and 2

To ensure that the scan does not loop excessively, keeping the scheduled deletion task running so long that Redis cannot serve requests and the thread appears stuck, an upper limit is placed on the scan time. The default limit is 25 milliseconds. That is, the scan runs in slow mode by default; if a pass has not finished within 25 milliseconds, it switches to fast mode, where the timeout is 1 millisecond and the scan can run only once within 2 seconds. When a pass completes and exits normally, the scan returns to slow mode.
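The sampling loop and its time limit can be sketched as follows. This is a simplified model of one slow-mode pass under the numbers given above (20 keys per sample, 25% threshold, 25 ms budget). The function expire_cycle and its parameters are hypothetical, and the fast-mode follow-up is left to the caller.

```python
import random
import time

SAMPLE_SIZE = 20          # keys sampled per round
EXPIRED_RATIO = 0.25      # repeat while more than 25% of the sample expired
SLOW_BUDGET = 0.025       # 25 ms time limit for a slow-mode pass


def expire_cycle(data, expires, now, budget=SLOW_BUDGET, clock=time.monotonic):
    """Run one scan pass. Returns True if it stopped because of the time limit."""
    start = clock()
    while expires:
        sample = random.sample(list(expires), min(SAMPLE_SIZE, len(expires)))
        expired = [k for k in sample if expires[k] <= now]
        for key in expired:
            del expires[key]
            data.pop(key, None)
        if len(expired) <= EXPIRED_RATIO * len(sample):
            return False  # few expired keys left, finish normally
        if clock() - start > budget:
            return True   # out of time; the caller may follow up in fast mode
    return False


data = {f"k{i}": i for i in range(100)}
expires = {f"k{i}": 5 for i in range(50)}              # k0..k49 expired at time 5
expires.update({f"k{i}": 500 for i in range(50, 60)})  # k50..k59 still live
timed_out = expire_cycle(data, expires, now=10)
print(timed_out, len(data), len(expires))
```

Because the keys are sampled at random, a single pass may leave some expired keys behind. Those are removed by a later pass or by lazy deletion when they are next read.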

1. The application first retrieves the data from the cache. If it does not get it, it retrieves the data from the database. After success, it puts it in the cache.

2. Delete the cache first, and then update the database: This order has a big problem. If a read request arrives after the cache has been deleted but before the database update has completed, the read misses the cache and goes directly to the database. The data it reads is still the old value, and that old value is loaded into the cache. Subsequent read requests will then all see the old data.

3. Update the database first, then delete the cache (recommended). Why not update the cache after writing to the database? The main reason is that two concurrent write operations may cause dirty data.
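The read path from item 1 and the recommended write order from item 3 can be sketched together. Plain dictionaries stand in for Redis and MySQL here, and the class name CacheAside is an assumption for illustration. A real implementation would also need to handle failures between the two write steps.

```python
class CacheAside:
    """Read-through on miss; on write, update the database then delete the cache."""

    def __init__(self, cache, db):
        self.cache = cache  # stand-in for Redis
        self.db = db        # stand-in for MySQL

    def read(self, key):
        value = self.cache.get(key)
        if value is None:                 # cache miss
            value = self.db.get(key)      # fall back to the database
            if value is not None:
                self.cache[key] = value   # populate the cache for later reads
        return value

    def write(self, key, value):
        self.db[key] = value              # 1. update the database first
        self.cache.pop(key, None)         # 2. then delete the cached copy


store = CacheAside(cache={}, db={"user:1": "Alice"})
print(store.read("user:1"))   # Alice (loaded from db, now cached)
store.write("user:1", "Bob")
print(store.cache)            # {} (stale entry removed)
print(store.read("user:1"))   # Bob
```

Deleting rather than updating the cached value means the next read reloads whatever the database currently holds, which sidesteps the case where two concurrent writers update the cache in the wrong order.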

Caching all of an object's data is more versatile than caching part of it, but in practical experience applications only need a few important attributes over the long term.

Caching all data takes up more space than caching part of it, which causes the following problems:

Caching all data wastes memory.

Each transfer of the full data may generate a large amount of network traffic and take relatively long. In extreme cases, it may saturate the network.

The CPU overhead of serializing and deserializing the full data is greater.

Full data has obvious advantages in maintainability. With partial data, by contrast, adding a new field requires modifying the business code, and the cached data usually has to be refreshed as well.
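To make the trade-off concrete, the sketch below serializes the same record in full and with only selected fields. The record, the CACHED_FIELDS tuple, and the helper to_cache_value are all made up for illustration. Actual sizes depend on the data and the serialization format.

```python
import json

user = {
    "id": 42,
    "name": "Alice",
    "email": "alice@example.com",
    "bio": "x" * 500,            # large field that is rarely needed
    "settings": {"theme": "dark", "lang": "en"},
}

CACHED_FIELDS = ("id", "name")   # hypothetical: the fields this app actually reads


def to_cache_value(record, fields=None):
    """Serialize the whole record, or only the chosen fields."""
    if fields is not None:
        record = {name: record[name] for name in fields}
    return json.dumps(record)


full = to_cache_value(user)
partial = to_cache_value(user, CACHED_FIELDS)
print(len(full), len(partial))
```

The partial value is smaller to store, transfer, and deserialize, but adding a field such as email later means changing CACHED_FIELDS and refreshing the entries already in the cache.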
