How to solve the deep paging problem of SQL queries in MySQL tuning

1. Problem introduction

For example, suppose there is a table test_user into which 3 million rows have been inserted:

CREATE TABLE `test_user` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'primary key id',
  `user_id` varchar(36) NOT NULL COMMENT 'user id',
  `user_name` varchar(30) NOT NULL COMMENT 'user name',
  `phone` varchar(20) NOT NULL COMMENT 'mobile phone number',
  `lan_id` int(9) NOT NULL COMMENT 'local network',
  `region_id` int(9) NOT NULL COMMENT 'region',
  `create_time` datetime NOT NULL COMMENT 'creation time',
  PRIMARY KEY (`id`),
  KEY `idx_user_id` (`user_id`)
) ENGINE=InnoDB;

In database development we often need paging. The core technique is to read data with a LIMIT start, count paging statement.

Let’s look at the execution time of paging starting from 0, 10000, 100000, 500000, 1000000, and 1800000 (100 entries per page).

SELECT * FROM test_user LIMIT 0,100; # 0.031s
SELECT * FROM test_user LIMIT 10000,100; # 0.047s
SELECT * FROM test_user LIMIT 100000,100; # 0.109s
SELECT * FROM test_user LIMIT 500000,100; # 0.219s
SELECT * FROM test_user LIMIT 1000000,100; # 0.547s
SELECT * FROM test_user LIMIT 1800000,100; # 1.625s

As the starting offset grows, so does the execution time. Changing the starting offset to 2.9 million shows how strongly the query time of a LIMIT paging statement depends on where the page starts:

SELECT * FROM test_user LIMIT 2900000,100; # 3.062s

Perhaps surprisingly, when the table is large, the larger the starting offset of a MySQL paging query, the slower the query!

So why does the above situation occur?

Answer: Because LIMIT 2900000,100 actually means that MySQL scans the first 2,900,100 rows and then discards the first 2,900,000 of them. The work spent on the discarded rows is wasted.

We can also conclude the following two things from this:

The query time of a LIMIT statement grows with the position of the starting record, roughly in proportion to it.

MySQL's LIMIT statement is very convenient, but it is not suitable for direct use with large offsets on tables with many records.
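The scan-and-discard behavior described above can be modeled in a few lines of Python. This is only a simplified sketch of the idea, not of how MySQL is implemented; the function name limit_scan is hypothetical.

```python
def limit_scan(rows, offset, count):
    """Simplified model of LIMIT offset, count: read rows in order,
    throw away the first `offset` rows, keep the next `count` rows.
    Returns the page and the number of rows that had to be read."""
    examined = 0
    page = []
    for row in rows:
        examined += 1
        if examined <= offset:
            continue  # row was read, then discarded
        page.append(row)
        if len(page) == count:
            break
    return page, examined


# Same shape as LIMIT 2900000,100 on a 3-million-row table.
page, examined = limit_scan(range(1, 3_000_001), 2_900_000, 100)
print(len(page), examined, examined - len(page))
```

In this model the number of rows read is always offset + count, which is why the cost follows the offset rather than the page size.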

2. Limit usage in MySQL

The limit clause can be used to force the select statement to return the specified number of records. Its syntax format is as follows:

SELECT * FROM table_name LIMIT m,n;
SELECT * FROM table_name LIMIT [offset,] rows;

LIMIT accepts one or two numeric parameters, each of which must be a non-negative integer constant. If two parameters are given:

The first parameter specifies the offset of the first returned row (the offset of the initial row is 0, not 1).

The second parameter specifies the maximum number of rows to be returned.

2.1 m means that retrieval starts from row m+1, and n means that n rows are retrieved. (m can be 0.)

SELECT * FROM table_name LIMIT 6,5;

The above SQL retrieves 5 rows, starting from the 7th row.

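2.2 Older references state that n can be set to -1 to retrieve everything from row m+1 to the last row. Current MySQL versions reject a negative value here; to read from an offset to the end of the result set, the MySQL manual recommends passing a very large number as the second parameter.

SELECT * FROM table_name LIMIT 6,18446744073709551615;

The above SQL retrieves all rows after the 6th row.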

2.3 If only m is given, the first m rows are returned, starting from the first row.

SELECT * FROM table_name LIMIT 6;

2.4 Retrieve the first 3 rows in descending order of age

select * from student order by age desc limit 3;

2.5 Skip the first 3 rows and then fetch the next 2 rows

select * from student order by age desc limit 3,2;
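The offset and count rules in this section can be checked with a small script. The sketch below uses Python's built-in sqlite3 module as a stand-in for MySQL, on the assumption that SQLite treats LIMIT m,n the same way for these cases; the table contents (20 students with ids 1 to 20 and ages 11 to 30) are made up for illustration.

```python
import sqlite3

# In-memory SQLite database used as a stand-in for MySQL (assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE student (id INTEGER PRIMARY KEY, age INTEGER)")
conn.executemany(
    "INSERT INTO student (id, age) VALUES (?, ?)",
    [(i, 10 + i) for i in range(1, 21)],  # hypothetical sample rows
)


def ids(sql):
    """Run a query and return the first column of every row."""
    return [row[0] for row in conn.execute(sql)]


# LIMIT 6,5: skip 6 rows, return the next 5 (rows 7 to 11).
offset_page = ids("SELECT id FROM student ORDER BY id LIMIT 6, 5")
# LIMIT 6: return the first 6 rows.
first_rows = ids("SELECT id FROM student ORDER BY id LIMIT 6")
# Three oldest students, then the next two after them.
oldest = ids("SELECT id FROM student ORDER BY age DESC LIMIT 3")
next_oldest = ids("SELECT id FROM student ORDER BY age DESC LIMIT 3, 2")

print(offset_page, first_rows, oldest, next_oldest)
```

An explicit ORDER BY is used in every query here because, without one, the order in which rows come back is not guaranteed.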

3. Deep paging optimization strategy

Method 1: Use primary key id or unique index optimization

That is, first find the maximum id of the previous page, and then use the index on id to query:

SELECT * FROM test_user WHERE id>1000000 LIMIT 100; # 0.047s

This optimized SQL is about 11 times faster than the earlier LIMIT 1000000,100 query (0.547s). Besides the primary key id, you can also use a unique index to locate the starting row quickly and avoid a full table scan. The following SQL reads the 20 rows whose unique key (pk) falls in the range 1000 to 1019, assuming pk has no gaps:

SELECT * FROM table_name WHERE pk>=1000 ORDER BY pk ASC LIMIT 0,20;

Reason: Index scanning will be very fast.

Applicable scenarios: the data is queried and sorted by pk or id. If the starting value is computed from the page number rather than taken from the previous page, the ids must also have no gaps; otherwise pages will repeat or miss rows.
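As a minimal sketch of Method 1, the loop below walks a table page by page, passing the largest id of the previous page into the next query. It uses Python's sqlite3 module as a stand-in for MySQL; the helper names make_table, next_page and all_pages are hypothetical, and the starting value 0 assumes that all ids are positive.

```python
import sqlite3


def make_table(ids):
    """Create an in-memory test_user table holding the given ids."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE test_user (id INTEGER PRIMARY KEY, user_name TEXT)"
    )
    conn.executemany(
        "INSERT INTO test_user (id, user_name) VALUES (?, ?)",
        [(i, f"user{i}") for i in ids],
    )
    return conn


def next_page(conn, last_id, page_size):
    """Fetch the page that follows the row with id = last_id."""
    return conn.execute(
        "SELECT id, user_name FROM test_user "
        "WHERE id > ? ORDER BY id ASC LIMIT ?",
        (last_id, page_size),
    ).fetchall()


def all_pages(conn, page_size):
    """Collect the ids of every page, carrying last_id between queries."""
    pages, last_id = [], 0  # assumes ids are positive
    while True:
        page = next_page(conn, last_id, page_size)
        if not page:
            return pages
        pages.append([row[0] for row in page])
        last_id = page[-1][0]


# The ids deliberately contain gaps (4, 6, 7, 11-13 are missing).
conn = make_table([1, 2, 3, 5, 8, 9, 10, 14])
print(all_pages(conn, 3))
```

Because each query starts from an id that was actually returned, gaps in the id sequence do not cause rows to be repeated or lost in this variant.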

Method 2: Use index coverage optimization

If a query that uses an index selects only columns contained in that index (a covering index), the query can be answered from the index alone and is usually fast.

Why is a covering-index query so fast?

Answer: Index lookups are optimized, and because the requested data is already in the index, there is no need to follow the index entry back to the table row, which saves a lot of time. Under high concurrency, MySQL also caches index pages in memory, and making full use of this cache gives even better results.

Since the id field is the primary key of our test table test_user, it is indexed by default through the primary key index. Now let's see how a query that uses a covering index performs.

This time we query rows 1000001 to 1000100 (using a covering index, selecting only the id column):

SELECT id FROM test_user LIMIT 1000000,100; # 0.843s

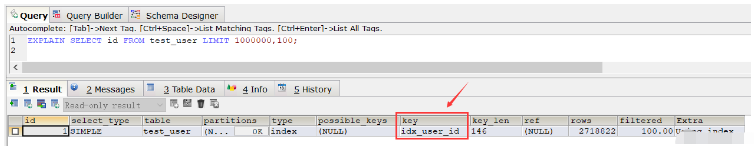

This result is slower than the full-table-scan query above, which took 0.547s for the same offset. (After the statement is executed repeatedly it becomes much faster, saving almost half the time; this is due to caching.) Using the EXPLAIN command to view the execution plan shows that the query used the ordinary index idx_user_id:

EXPLAIN SELECT id FROM test_user LIMIT 1000000,100;

If we drop the ordinary index, the primary key index will be used when executing the above SQL. If we keep the ordinary index and still want the above SQL to use the primary key index, we can add an ORDER BY clause:

SELECT id FROM test_user ORDER BY id ASC LIMIT 1000000,100; # 0.250s

If we also want to query all columns, there are two ways to do it: one uses the id>= form, and the other uses a join.

The first way of writing:

SELECT * FROM test_user WHERE ID >= (SELECT id FROM test_user ORDER BY id ASC LIMIT 1000000,1) LIMIT 100;

The above SQL query time is 0.281 seconds

The second way of writing:

SELECT * FROM (SELECT id FROM test_user ORDER BY id ASC LIMIT 1000000,100) a LEFT JOIN test_user b ON a.id = b.id;

The above SQL query time is 0.252 seconds
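To check that both ways of writing return the same page as a plain LIMIT query, here is a small sketch using Python's sqlite3 module as a stand-in for MySQL. It only compares results; it says nothing about MySQL timings. The function names and the 1,000-row sample table are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE test_user (id INTEGER PRIMARY KEY, user_name TEXT)")
conn.executemany(
    "INSERT INTO test_user (id, user_name) VALUES (?, ?)",
    [(i, f"user{i}") for i in range(1, 1001)],  # hypothetical sample rows
)


def page_plain(offset, count):
    """Baseline: read full rows with a plain offset."""
    return conn.execute(
        "SELECT * FROM test_user ORDER BY id LIMIT ?, ?", (offset, count)
    ).fetchall()


def page_subquery(offset, count):
    """First way: locate the starting id with an id-only subquery."""
    return conn.execute(
        "SELECT * FROM test_user WHERE id >= "
        "(SELECT id FROM test_user ORDER BY id ASC LIMIT ?, 1) "
        "ORDER BY id LIMIT ?",
        (offset, count),
    ).fetchall()


def page_deferred_join(offset, count):
    """Second way: page over ids only, then join back for full rows."""
    return conn.execute(
        "SELECT b.* FROM "
        "(SELECT id FROM test_user ORDER BY id ASC LIMIT ?, ?) a "
        "LEFT JOIN test_user b ON a.id = b.id ORDER BY a.id",
        (offset, count),
    ).fetchall()


print(page_deferred_join(900, 5))
```

In both rewritten forms the expensive offset is applied to a query that touches only the id column, and the full rows are fetched for just the final page.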

Method 3: Reorder based on index

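pageNum represents the page number, starting from 0; pageSize represents the number of rows per page.

SELECT * FROM table_name WHERE id_pk > (pageNum*pageSize) ORDER BY id_pk ASC LIMIT pageSize;

Applicable scenarios:

Tables with a large amount of data

The ORDER BY column is preferably the primary key or a unique index

The id values have no gaps, so they can be used as sequence numbers

The sort required by ORDER BY can be satisfied by the index and thus eliminated, while the result set stays stable

Reason:

Index scanning is very fast

Note that before MySQL 8.0, index entries were always stored in ascending (ASC) order; a DESC keyword in an index definition was parsed but ignored. Descending indexes were added in MySQL 8.0. ORDER BY sorts in ascending order by default.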
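The requirement that the ids have no gaps can be illustrated with a short sketch. It uses Python's sqlite3 module as a stand-in for MySQL; the table name items and the helper names are hypothetical.

```python
import sqlite3


def make_table(ids):
    """Create an in-memory table whose id_pk column holds the given ids."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id_pk INTEGER PRIMARY KEY)")
    conn.executemany("INSERT INTO items (id_pk) VALUES (?)", [(i,) for i in ids])
    return conn


def page_by_number(conn, page_num, page_size):
    """Method 3: derive the starting id from the page number."""
    return [
        row[0]
        for row in conn.execute(
            "SELECT id_pk FROM items WHERE id_pk > ? "
            "ORDER BY id_pk ASC LIMIT ?",
            (page_num * page_size, page_size),
        )
    ]


dense = make_table(range(1, 11))                          # ids 1..10, no gaps
sparse = make_table([1, 2, 3, 7, 8, 9, 10, 11, 12, 13])   # ids 4..6 missing

print([page_by_number(dense, n, 3) for n in range(3)])
print([page_by_number(sparse, n, 3) for n in range(3)])
```

On the dense table each page follows the previous one. On the sparse table pages 1 and 2 return the same rows, because the starting id computed from the page number falls inside the gap.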

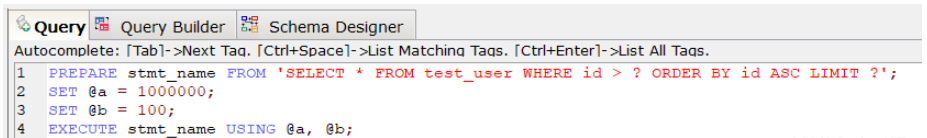

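Method 4: Use PREPARE based on the index

PREPARE precompiles an SQL statement and assigns it a name, stmt_name, so that it can be referenced later. The prepared statement is run with EXECUTE.

PREPARE stmt_name FROM 'SELECT * FROM test_user WHERE id > ? ORDER BY id ASC LIMIT ?';
SET @a = 1000000;
SET @b = 100;
EXECUTE stmt_name USING @a, @b;

The above SQL query time is 0.047 seconds.

A prepared statement that is no longer needed can be released with the following command:

DEALLOCATE PREPARE stmt_name;

Reason:

Index scanning is very fast.

A prepared statement can also be slightly faster than an ordinary query.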
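From application code, the same idea is usually expressed with placeholders in a parameterized query rather than with PREPARE and EXECUTE typed by hand. The sketch below shows that pattern with Python's sqlite3 module, which is only an analogue of MySQL's server-side PREPARE; the name execute_page and the 2,000-row sample table are hypothetical.

```python
import sqlite3

# One statement text with ? placeholders, reused for every page.
PAGE_SQL = "SELECT id FROM test_user WHERE id > ? ORDER BY id ASC LIMIT ?"

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE test_user (id INTEGER PRIMARY KEY, user_name TEXT)")
conn.executemany(
    "INSERT INTO test_user (id, user_name) VALUES (?, ?)",
    [(i, f"user{i}") for i in range(1, 2001)],  # hypothetical sample rows
)


def execute_page(a, b):
    """Bind a (last seen id) and b (page size) to the placeholders."""
    return [row[0] for row in conn.execute(PAGE_SQL, (a, b))]


first = execute_page(1000, 100)
second = execute_page(first[-1], 100)  # same statement, new parameters
print(first[0], first[-1], second[0], second[-1])
```

Only the bound values change between calls, so the statement text stays identical from page to page.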

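Method 5: Use "subquery + index" to locate data quickly

Here page represents the page number, starting from 1 (the offset below is computed as ($page-1)*$pagesize), and pagesize represents the number of rows per page. $page and $pagesize are placeholders: the application computes the offset and substitutes plain numbers before sending the statement.

SELECT * FROM your_table WHERE id <= (SELECT id FROM your_table ORDER BY id DESC LIMIT ($page-1)*$pagesize, 1) ORDER BY id DESC LIMIT $pagesize;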
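A minimal sketch of Method 5 for newest-first paging: an id-only subquery finds the first id of the requested page, and the outer query reads the page from there in descending order. It uses Python's sqlite3 module as a stand-in for MySQL; the function name page_desc is hypothetical, and pages are assumed to be numbered from 1.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE your_table (id INTEGER PRIMARY KEY)")
conn.executemany(
    "INSERT INTO your_table (id) VALUES (?)", [(i,) for i in range(1, 11)]
)


def page_desc(page, page_size):
    """Return the ids of a 1-based page, newest (largest id) first."""
    offset = (page - 1) * page_size  # computed by the application
    return [
        row[0]
        for row in conn.execute(
            "SELECT id FROM your_table WHERE id <= "
            "(SELECT id FROM your_table ORDER BY id DESC LIMIT ?, 1) "
            "ORDER BY id DESC LIMIT ?",
            (offset, page_size),
        )
    ]


print([page_desc(n, 3) for n in range(1, 6)])
```

When the requested page lies past the end of the table, the subquery yields no row and the outer query returns an empty page.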

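Method 6: Use a composite index for optimization

Suppose the table collect (id, title, info, vtype) has just these four columns: id is an auto-increment primary key, title is fixed-length, info is text, and vtype is a tinyint with an ordinary index.

Now fill it with 100,000 records; the table occupies 1.6 GB on disk.

select id,title from collect limit 1000,10;

The above SQL runs quickly, in about 0.01 seconds.

select id,title from collect limit 90000,10;

This SQL, however, is very slow, taking 8 to 9 seconds on average.

If we then run the following, it is fast again, about 0.04 seconds.

select id from collect order by id limit 90000,10;

What explains this?

Answer: The query uses the primary key id as its index, so it is covered by the index, which makes it fast.

So to query other columns as well, apply the covering-index optimization as follows:

select id,title from collect where id >= (select id from collect order by id limit 90000,1) limit 10;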

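Now look at the following statement, which adds a where condition:

select id from collect where vtype=1 order by id limit 90000,10;

This is also very slow, taking 8 to 9 seconds!

This raises a question: vtype is indexed, so why is it slow?

vtype is indeed indexed. If we filter on vtype alone:

select id from collect where vtype=1 limit 1000,10;

it is still fast, about 0.05 seconds. Starting from 90,000 instead, that extrapolates to roughly 0.05*90=4.5 seconds.

In fact, once order by id is added, the index is not used and the query still performs a full table scan. The solution is a composite index!

So when optimizing the following deep-paging SQL, we can add a composite index search_index(vtype,id):

select id from collect where vtype=1 order by id limit 90000,10;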

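In summary:

When optimizing deep paging for a query that has a where condition and should still use an index with limit, design a composite index with the where column first and the primary key used for paging second, and select only the primary key.

Then use the returned primary keys to look up the corresponding rows through the primary index.

With this approach, a limit over millions of rows can complete in 0.0x seconds, which resolves the paging problem.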
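The two steps summarized above can be sketched as follows: page over primary keys only, using the composite index, and then fetch the full rows by primary key. The sketch uses Python's sqlite3 module as a stand-in for MySQL and shows the query shape only, not MySQL timings. The function names page_ids and fetch_rows and the 200-row sample data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE collect ("
    "id INTEGER PRIMARY KEY, title TEXT, info TEXT, vtype INTEGER)"
)
conn.executemany(
    "INSERT INTO collect (id, title, info, vtype) VALUES (?, ?, ?, ?)",
    [(i, f"title{i}", "x" * 50, i % 2) for i in range(1, 201)],  # sample rows
)
# Composite index: where column first, primary key second.
conn.execute("CREATE INDEX search_index ON collect (vtype, id)")


def page_ids(vtype, offset, count):
    """Step 1: select only the primary key for the requested page."""
    return [
        row[0]
        for row in conn.execute(
            "SELECT id FROM collect WHERE vtype = ? ORDER BY id LIMIT ?, ?",
            (vtype, offset, count),
        )
    ]


def fetch_rows(ids):
    """Step 2: look up the full rows by primary key."""
    if not ids:
        return []
    marks = ",".join("?" * len(ids))
    return conn.execute(
        f"SELECT id, title FROM collect WHERE id IN ({marks}) ORDER BY id",
        ids,
    ).fetchall()


ids_on_page = page_ids(1, 90, 5)
print(ids_on_page, fetch_rows(ids_on_page))
```

Keeping step 1 restricted to the id column is what lets the index answer the paging query by itself; the wider columns are read only for the handful of rows on the final page.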
