What are the core principles of MySQL large-scale website technical architecture?


1. Evolution of large-scale website architecture

A. Characteristics of large-scale website software systems

High concurrency and heavy traffic; high availability; massive data; widely distributed users and complex network conditions; a harsh security environment; rapidly changing requirements and frequent releases; progressive development.

B. The evolution and development process of large-scale website architecture

1. Initial stage: a single server running the whole stack, e.g. LNMP (Linux, Nginx, MySQL, PHP)

2. Separation of application services and data services: the application server needs a fast CPU; the database server needs fast disk retrieval and data caching; the file server needs large hard disks.

3. Use caching to improve website performance: a local cache on the application server (fast to access, but limited by the application server's memory, so it holds little data), and a remote distributed cache (a cluster of large-memory servers deployed as dedicated cache servers).

4. Application server cluster: requests are scheduled across the servers through load balancing

5. Database read and write separation

6. Use reverse proxy and CDN acceleration: the CDN is deployed in the network computer room nearest to users, the reverse proxy in the central computer room

7. Use distributed file systems and distributed database systems

8. Use NoSQL and search engines

9. Business splitting

10. Distributed services

C. Values in the evolution of large website architecture

1. The core value of large-scale website architecture technology is to respond flexibly to the needs of the website.

2. The main force driving the development of large-scale website technology is the business development of the website.

D. Misconceptions in website architecture design

1. Blindly following the solutions of big companies

2. Technology for the sake of technology

3. Trying to use technology to solve all problems: technology exists to solve business problems, and business problems can also be solved by business means.

2. Large website architecture patterns

Each pattern describes a problem that recurs again and again, together with the core of its solution, so the solution can be reused over and over without duplicating work. The key to a pattern is its repeatability.

A. Website architecture patterns

1. Layering

- Layering: the most common architectural pattern in enterprise application systems. It divides the system horizontally into several parts, each with a relatively single responsibility, and the upper layers depend on and call the lower layers to form a complete system.

- A website's software system is divided into an application layer (view layer, business logic layer), a service layer (data interface layer, logic processing layer), and a data layer.

- Layering splits a huge software system into separate parts, which eases division of labor and collaborative development and maintenance. The layers are relatively independent: as long as the calling interfaces stay unchanged, each layer can evolve independently to address its own issues without requiring the other layers to change accordingly.

2. Segmentation

- Segmentation cuts the system vertically: different functions and services are separated and packaged into highly cohesive, loosely coupled modules. Large websites may segment at a very fine granularity.

3. Distribution

- Distribution deploys different modules on different servers that work together through remote calls, so more computers can be used to provide the same functionality.

- Problems: network calls can seriously hurt performance; the more servers there are, the more likely some of them go down; keeping data consistent in a distributed environment is very difficult; and the resulting dependencies make development, troubleshooting and maintenance of the website harder.

- Common distributed solutions: distributed applications and services; distributed static resources; distributed data and storage; distributed computing (Hadoop and its MapReduce); distributed configuration; distributed locks; distributed files, etc.

4. Clustering

- Multiple servers deploy the same application to form a cluster and jointly provide services through load-balancing equipment.

5. Caching

- Caching stores data in the location closest to the computation to speed up processing.

- Common forms: CDN, reverse proxy, local cache, distributed cache.

- Two prerequisites for using a cache: first, data access has uneven hot spots; second, the data stays valid for a certain period of time.

6. Asynchrony

- Messages between business components are not passed through synchronous calls; instead, a business operation is divided into multiple stages, and the stages collaborate asynchronously through shared data.

- On a single server, asynchrony can be achieved with a queue in memory shared between threads; in a distributed system, server clusters achieve it through distributed message queues.

- In the typical producer-consumer model there is no direct call relationship between producers and consumers. This model improves system availability, speeds up website responses, and smooths out concurrent access peaks.

Using asynchronous methods to process business may have an impact on user experience and business processes, and requires product design support.
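A minimal single-server sketch of the producer-consumer model, assuming Python's standard `queue.Queue` plays the role of the shared-memory queue. The names `orders`, `producer` and `consumer` are hypothetical; a distributed deployment would swap the in-process queue for a message queue server.

```python
import queue
import threading

# Bounded in-process queue standing in for the message queue.
orders: queue.Queue = queue.Queue(maxsize=100)
processed: list[int] = []

def producer(n: int) -> None:
    """Front-end handler: enqueue each order and return without waiting."""
    for order_id in range(n):
        orders.put(order_id)      # blocks only when the queue is full
    orders.put(None)              # sentinel: no more work

def consumer() -> None:
    """Back-end worker: drains the queue at its own pace."""
    while True:
        order_id = orders.get()
        if order_id is None:
            break
        processed.append(order_id)   # e.g. write to the database here

worker = threading.Thread(target=consumer)
worker.start()
producer(10)
worker.join()
print(processed)   # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

The producer never calls the consumer directly; if the consumer is slow, orders simply wait in the queue, which is the peak-smoothing effect described above.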

7. Redundancy

To ensure that the website can keep serving without losing data when a server goes down, a certain degree of server redundancy and redundant data backup is required.

Even a small website needs at least two servers to form a cluster. Besides regular backups kept as cold backups, the database also needs master-slave replication for real-time synchronization and hot backup.

Large companies may back up an entire data center and synchronize it to off-site disaster recovery centers.

8. Automation

Automation mainly focuses on release and operations.

Automation of release process: automated code management, automated testing, automated security detection, automated deployment.

Automated monitoring: automated alarms, automated failover, automated failure recovery, automated degradation, and automated resource allocation.

9. Security

B. Application of architectural patterns in Sina Weibo

3. Core architectural elements of large websites

Architecture: the highest level of planning, a decision that is difficult to change.

Software architecture: An abstract description of the overall structure and components of software, used to guide the design of all aspects of large-scale software systems.

A. Performance

Browser side: browser cache, page compression, reasonable layout, reducing cookie transmission, CDN, etc.

Application server side: server local cache, distributed cache, asynchronous operation and message queue cooperation, clustering, etc.

Code: Multi-threading, improved memory management, etc.

Database: indexing, caching, SQL optimization, NoSQL technology

B. Availability

For runtime environments such as servers, databases and file storage, the main means is redundancy.

For software development, the means include pre-release verification, automated testing, automated release, grayscale release, etc.

C. Scalability

Scalability means relieving rising concurrent user access pressure and growing data storage demand by continuously adding servers to the cluster. The measure is whether a newly added server can provide the same services as the existing ones.

Application server: Servers can be continuously added to the cluster through appropriate load balancing equipment.

Cache server: adding new servers may invalidate the existing cache routing, so a suitable routing algorithm is required.

Relational database: scaled through routing-based partitioning and similar means.

D. Extensibility

Measurement standard: whether new business products can be added transparently, without affecting existing products, and whether different products are only loosely coupled.

Means: event-driven architecture (message queues) and distributed services (separating business logic from reusable services and calling the services through a distributed service framework).

E. Security

4. Instant response: high-performance website architecture

A. Website performance testing

1. Website performance from different perspectives

Website performance from the user's perspective: optimize the page's HTML style, take advantage of the browser's concurrency and asynchronous features, adjust the browser caching strategy, use CDN services and reverse proxies, etc.

Website performance from a developer's perspective: use caching to speed up data reads, clusters to improve throughput, asynchronous messages to speed up request responses and shave peaks, and code optimization to improve program performance.

Website performance from the perspective of operation and maintenance personnel: building and optimizing backbone networks, using cost-effective customized servers, using virtualization technology to optimize resource utilization, etc.

2. Performance test indicators

Response time: the time the application takes to execute an operation, from sending the request until the last response data is received. Because a single request is usually too fast to time accurately, the common test method is to repeat the request, e.g. 10,000 times, and divide the total test time by 10,000.

Concurrency: the number of requests the system can handle at the same time (number of registered website users >> number of online users >> number of concurrent users). A test program measures the system's concurrent processing capability by simulating concurrent users with multiple threads.

Throughput: the number of requests processed by the system per unit time (TPS, HPS, QPS, etc.)

Performance counters: metrics that describe the performance of a server or operating system, including system load, number of objects and threads, memory usage, CPU usage, and disk and network I/O.
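A minimal sketch of the repeat-and-divide method for response time, which also derives throughput from the same run. `fake_request` is a hypothetical stand-in for a real HTTP call or query; since this loop is single-threaded, its throughput is just the reciprocal of the average response time, whereas a real test raises concurrency with multiple threads.

```python
import time
from typing import Callable

def measure(request: Callable[[], object], repetitions: int = 10_000) -> tuple[float, float]:
    """Repeat a request; return (average response time in ms, requests per second)."""
    start = time.perf_counter()
    for _ in range(repetitions):
        request()
    total_s = time.perf_counter() - start
    avg_ms = total_s / repetitions * 1000.0   # total test time / repetitions
    throughput = repetitions / total_s        # requests processed per second
    return avg_ms, throughput

def fake_request() -> int:
    # Hypothetical stand-in for an HTTP call or database query.
    return sum(range(100))

avg_ms, rps = measure(fake_request)
print(f"average response time: {avg_ms:.4f} ms, throughput: {rps:.0f} req/s")
```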

3. Performance testing methods: performance testing, load testing, stress testing, stability testing

Performance testing continuously adds load to the system to obtain its performance indicators, maximum load capacity, and maximum pressure tolerance. Increasing the access pressure means continuously increasing the number of concurrent requests issued by the test program.

5. Performance optimization strategy

Performance analysis: check the logs of each stage of request processing to find which stage has an unreasonable response time or exceeds expectations, then check the monitoring data.

B. Web front-end performance optimization

1. Browser access optimization: reduce HTTP requests (merge CSS/JS/images), use the browser cache (Cache-Control and Expires HTTP headers), enable compression (Gzip), put CSS at the top of the page and JS at the bottom, and reduce cookie transmission

2. CDN acceleration

3. Reverse proxy: accelerates web requests through its caching function (it can also protect the real servers and provide load balancing)

C. Application server performance optimization

1. Distributed cache

The first law of website performance optimization: Prioritize the use of cache to optimize performance

It is mainly used for data that has a high read/write ratio and rarely changes. When a read misses the cache, the application reads the database and writes the data back into the cache.
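A minimal in-process sketch of the read path just described, assuming a plain `dict` stands in for the distributed cache and a caller-supplied function for the database query (`CacheAside`, `load_from_db` and `warm_up` are hypothetical names). It also caches a sentinel for keys absent from the database, one common guard against cache penetration; a real deployment would give such entries a short expiry.

```python
from typing import Callable, Optional

_MISSING = object()  # sentinel stored for keys that do not exist in the database

class CacheAside:
    """Try the cache first; on a miss, read the database and refill the cache."""

    def __init__(self, load_from_db: Callable[[str], Optional[str]]) -> None:
        self.cache: dict[str, object] = {}   # stands in for Memcached and similar
        self.load_from_db = load_from_db
        self.db_reads = 0                     # counts database round trips

    def get(self, key: str) -> Optional[str]:
        value = self.cache.get(key)
        if value is _MISSING:
            return None            # key known to be absent: skip the database
        if value is not None:
            return value           # cache hit
        self.db_reads += 1         # cache miss: fall back to the database
        row = self.load_from_db(key)
        # Cache the row, or the sentinel when the database has no such key,
        # so repeated lookups of a nonexistent key stop reaching the database.
        self.cache[key] = row if row is not None else _MISSING
        return row

    def warm_up(self, hot_keys: list[str]) -> None:
        """Preload hot keys when the program starts (cache warm-up)."""
        for key in hot_keys:
            self.get(key)

db = {"user:1": "alice"}        # hypothetical database table
cache = CacheAside(db.get)
cache.warm_up(["user:1"])       # 1 database read
cache.get("user:1")             # served from the cache
cache.get("user:404")           # miss: 1 database read, sentinel cached
cache.get("user:404")           # sentinel hit: no database read
print(cache.db_reads)           # 2
```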

2. Reasonable use of cache: avoid caching frequently modified data or data accessed without hot spots; watch for data inconsistency and dirty reads; ensure cache availability (cache hot standby); warm up the cache (preload some entries when the program starts); and guard against cache penetration.

3. Distributed cache architectures: distributed caches whose nodes synchronize updates with each other (JBoss Cache), and distributed caches whose nodes do not communicate with each other (Memcached)

4. Asynchronous operation: use a message queue (which improves the website's scalability and performance). It has a good peak-shaving effect: transaction messages generated by high concurrency within a short period are stored in the message queue.

5. Use clusters

6. Code optimization:

Multi-threading (to cope with IO blocking and multiple CPUs): number of threads to start = [task execution time / (task execution time - IO wait time)] × number of CPU cores. Pay attention to thread safety: design objects as stateless objects, use local objects, and use locks when accessing resources concurrently;

Resource reuse (singletons and object pools);

Data structure;

Garbage collection
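The thread-count formula from the multi-threading point, written as a small helper. Rounding up with `math.ceil` is an assumption of this sketch, and `thread_count` is a hypothetical name; the idea is that the more of a task's time is spent waiting on IO, the more threads each core can keep busy.

```python
import math

def thread_count(task_time_ms: float, io_wait_ms: float, cpu_cores: int) -> int:
    """threads = [task_time / (task_time - io_wait)] * cpu_cores, rounded up."""
    cpu_time_ms = task_time_ms - io_wait_ms
    if cpu_time_ms <= 0:
        raise ValueError("IO wait time must be shorter than the task execution time")
    return math.ceil(task_time_ms / cpu_time_ms * cpu_cores)

# A 100 ms task that spends 80 ms waiting on IO, on 4 cores:
print(thread_count(100, 80, 4))   # 100 / 20 * 4 = 20
```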

D. Storage performance optimization

1. Databases mostly use B+ trees with two-level indexes, and the trees have at most three levels, so updating a record may take 5 disk accesses: three to obtain the index and row ID, then one data file read and one data file write.

2. Many NoSQL products therefore use LSM trees (Log-Structured Merge Trees), which can be regarded as an N-level merge tree.

3. RAID (Redundant Array of Inexpensive Disks), RAID0, RAID1, RAID10, RAID5, RAID6, are widely used in traditional relational databases and file systems.

4. HDFS (Hadoop Distributed File System) works with MapReduce for big data processing.
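A toy illustration of the LSM-tree idea, assuming everything lives in memory: writes go to a memtable that is flushed as an immutable sorted run when full, reads check the newest data first, and compaction merges runs. `TinyLSM` is a hypothetical name; real LSM engines add a write-ahead log, on-disk files, multiple levels and lookup filters.

```python
import bisect
from typing import Optional

class TinyLSM:
    """Toy LSM tree kept entirely in memory, for illustration only."""

    def __init__(self, memtable_limit: int = 4) -> None:
        self.memtable: dict[str, str] = {}
        self.runs: list[list[tuple[str, str]]] = []   # sorted runs, newest last
        self.limit = memtable_limit

    def put(self, key: str, value: str) -> None:
        self.memtable[key] = value                    # in-memory write only
        if len(self.memtable) >= self.limit:
            # Flush: write the memtable out as one sorted, immutable run
            # (on disk this would be a single sequential write).
            self.runs.append(sorted(self.memtable.items()))
            self.memtable = {}

    def get(self, key: str) -> Optional[str]:
        if key in self.memtable:
            return self.memtable[key]
        for run in reversed(self.runs):               # newest run wins
            i = bisect.bisect_left(run, (key,))       # binary search by key
            if i < len(run) and run[i][0] == key:
                return run[i][1]
        return None

    def compact(self) -> None:
        """Merge all runs into one, keeping the newest value of each key."""
        merged: dict[str, str] = {}
        for run in self.runs:                         # oldest first
            merged.update(run)                        # newer runs overwrite
        self.runs = [sorted(merged.items())] if merged else []

store = TinyLSM(memtable_limit=2)
store.put("a", "1")
store.put("b", "1")                      # memtable full: flushed as run 1
store.put("a", "2")
store.put("c", "1")                      # flushed as run 2
print(store.get("a"), len(store.runs))   # 2 2
store.compact()
print(store.get("a"), len(store.runs))   # 2 1
```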

5. Foolproof: High-availability architecture of the website

A. Measurement and assessment of website availability

1. Website availability measurement

Website unavailability time (downtime time) = fault repair time point - fault discovery (reporting) time point

Website annual availability index = (1-website unavailable time/total annual time)*100%

Two nines (99%) means basically available, about 88 hours of downtime a year; three nines (99.9%) means fairly high availability, about 9 hours; four nines (99.99%) means high availability with automatic recovery capability, about 53 minutes; five nines (99.999%) means extremely high availability, about 5 minutes. QQ's availability is 99.99% (four nines), i.e. about 53 minutes of unavailability a year.

2. Website availability assessment

Fault score: a method of classifying and weighting website faults to calculate responsibility for them

Fault score = fault duration (minutes) × fault weight
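The annual availability formula above, plus the arithmetic behind the "nines" figures, as a quick check. It assumes a 365-day year (525,600 minutes); for example, four nines allows 525,600 × 0.0001 ≈ 52.6 minutes of downtime a year. The function names are hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60   # 525,600 minutes; leap years ignored

def annual_availability(downtime_minutes: float) -> float:
    """(1 - unavailable time / total annual time) * 100%, as a percentage."""
    return (1 - downtime_minutes / MINUTES_PER_YEAR) * 100

def downtime_budget_minutes(nines: int) -> float:
    """Yearly downtime allowed by N nines, e.g. nines=3 means 99.9%."""
    return MINUTES_PER_YEAR * 10 ** -nines

for n in range(2, 6):
    minutes = downtime_budget_minutes(n)
    print(f"{n} nines: {minutes / 60:6.2f} h = {minutes:8.2f} min per year")
```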

B. Highly available website architecture

The main method is redundant backup and failover of data and services. The application layer and service layer use cluster load balancing to achieve high availability, and the data layer uses data synchronous replication to achieve redundant backup.

C. Highly available applications

1. Failover of stateless services through load balancing: even if application traffic is very small, deploy at least two servers and use load balancing to build a small cluster.

2. Session management of application server cluster

Session replication: sessions are synchronized between servers; suitable only for small clusters

Session binding: source-address hashing sends all requests from the same IP to the same server, which hurts high availability (if that server fails, its sessions are lost).

Recording sessions in cookies: limited in size, the cookie must be transmitted with every request and response, and users who disable cookies cannot use the site

Session server: store sessions in a distributed cache, database, etc.; offers high availability, high scalability and good performance

D. High availability service

1. Hierarchical management: servers are graded in operations; core applications and services get better hardware first, and operations responds to them extremely quickly.

2. Timeout setting: set a timeout for service calls in the application. Once the timeout expires, the communication framework throws an exception, and depending on the service scheduling policy the application can retry or transfer the request to another server that provides the same service.

3. Asynchronous calls: the application calls services through asynchronous means such as message queues, so that the failure of one service does not make the whole application request fail.

4. Service degradation: deny service (reject calls from low-priority applications or randomly reject some requests) or shut down functions (shut down unimportant services, or unimportant functions inside a service).

5. Idempotent design: the service layer guarantees that calling a service repeatedly produces the same result as calling it once, i.e. the service is idempotent.
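A minimal sketch of why timeouts, failover and idempotency belong together, assuming a client-generated request ID as the idempotency key (`Ledger`, `call_with_failover` and the replica functions are hypothetical). The slow replica applies the debit but replies too late; the caller times out and fails over, and the second replica recognizes the request ID instead of debiting twice.

```python
import concurrent.futures as cf
import threading
import time
import uuid
from typing import Callable, Optional, Sequence

class Ledger:
    """Hypothetical shared store behind every replica of a payment service.
    Remembering the result per request ID makes debit() idempotent."""

    def __init__(self, balance: int) -> None:
        self.balance = balance
        self._results: dict[str, int] = {}
        self._lock = threading.Lock()        # check-and-store must be atomic

    def debit(self, request_id: str, amount: int) -> int:
        with self._lock:
            if request_id not in self._results:   # first call: apply the debit
                self.balance -= amount
                self._results[request_id] = self.balance
            return self._results[request_id]      # repeated call: same result

_pool = cf.ThreadPoolExecutor(max_workers=4)

def call_with_failover(replicas: Sequence[Callable[[], int]], timeout_s: float) -> int:
    """Call replicas of the same service in turn; a call that exceeds
    timeout_s (or raises) is abandoned and the next replica is tried."""
    last_error: Optional[BaseException] = None
    for call in replicas:
        future = _pool.submit(call)
        try:
            return future.result(timeout=timeout_s)
        except Exception as exc:   # includes the timeout exception
            last_error = exc
    raise RuntimeError("all replicas failed") from last_error

ledger = Ledger(balance=100)
request_id = str(uuid.uuid4())   # generated once by the caller, reused on retry

def slow_replica() -> int:
    result = ledger.debit(request_id, 30)   # the debit is applied...
    time.sleep(0.5)                          # ...but the reply arrives too late
    return result

def fast_replica() -> int:
    return ledger.debit(request_id, 30)

print(call_with_failover([slow_replica, fast_replica], timeout_s=0.1))  # 70
print(ledger.balance)                                                   # 70, not 40
```

Python cannot cancel a thread that is already running, so the abandoned call still finishes in the background; in a real RPC framework the timeout would also close the connection.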

E. Highly available data

1. CAP principle

Highly available data: data persistence (permanent storage, backup copies will not be lost), data accessibility (quick switching between different devices), data consistency (in the case of multiple copies, the copy data is guaranteed to be consistent)

CAP principle: a storage system that provides data services cannot simultaneously satisfy data consistency (Consistency), data availability (Availability), and partition tolerance (Partition Tolerance: the system keeps working, and can scale, across network partitions).

Large websites usually strengthen the availability (A) and partition tolerance (P) of the distributed system and sacrifice consistency (C) to a certain extent. Data inconsistency generally occurs when the system has heavy concurrent writes or the cluster state is unstable. Application systems need to understand the data inconsistencies of the distributed data processing system and apply compensation and error correction to avoid incorrect application data.

Data consistency can be divided into: strong consistency (all copies are always consistent); data user consistency (copies may be inconsistent, but when a user accesses the data, error-correcting verification determines the correct value and returns it); and eventual consistency (copies, and what users read, may be inconsistent, but the system becomes consistent after a period of self-recovery and correction).

2. Data backup

Asynchronous hot backup: writes to the multiple data copies complete asynchronously. When the application receives the data service's write-success response, only one copy has been written successfully; the storage system writes the other copies asynchronously, and those writes may fail.

Synchronous hot backup: writes to the multiple data copies complete synchronously, that is, when the application receives the data service's write-success response, all copies have been written successfully.
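A minimal in-memory sketch contrasting the two backup modes, assuming each replica is a `dict` that can be marked down (`Replica` and `ReplicatedStore` are hypothetical). Synchronous mode reports failure whenever any copy fails; asynchronous mode acknowledges after the first copy and leaves the rest in a backlog that a background step retries.

```python
from dataclasses import dataclass, field

@dataclass
class Replica:
    name: str
    up: bool = True
    data: dict[str, str] = field(default_factory=dict)

    def write(self, key: str, value: str) -> bool:
        if self.up:
            self.data[key] = value
        return self.up

class ReplicatedStore:
    def __init__(self, replicas: list[Replica]) -> None:
        self.replicas = replicas
        self.pending: list[tuple[Replica, str, str]] = []   # async backlog

    def write_sync(self, key: str, value: str) -> bool:
        """Synchronous hot backup: success only if every copy was written.
        (A real system would also have to clean up partial writes.)"""
        results = [r.write(key, value) for r in self.replicas]
        return all(results)

    def write_async(self, key: str, value: str) -> bool:
        """Asynchronous hot backup: acknowledge once the first copy is written
        and queue the remaining copies, which may later fail."""
        primary, *others = self.replicas
        if not primary.write(key, value):
            return False
        self.pending.extend((r, key, value) for r in others)
        return True

    def replicate_pending(self) -> int:
        """Background step: retry queued copies; return how many still failed."""
        self.pending = [(r, k, v) for r, k, v in self.pending if not r.write(k, v)]
        return len(self.pending)

a, b = Replica("a"), Replica("b", up=False)
store = ReplicatedStore([a, b])
print(store.write_sync("k", "v"))    # False: copy b could not be written
print(store.write_async("k", "v"))   # True: acknowledged after copy a only
print(store.replicate_pending())     # 1: the copy on b is still outstanding
```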

3. Failover

Failure confirmation: heartbeat detection and reports of application access failures

Access transfer: After confirming that a server is down, reroute data read and write access to other servers

Data recovery: copy data from a healthy server to restore the number of data copies to the configured value

F. Software quality assurance for high-availability websites

1. Website release

2. Automated testing: e.g. with the tool Selenium

3. Pre-release verification: code is first released to pre-release servers for use by development and test engineers. Their configuration, environment, data center, etc. must be the same as the production environment.

4. Code control: svn, git; either trunk development with branch release, or branch development with trunk release (the mainstream approach);

5. Automated release

6. Grayscale release: divide the cluster servers into several parts and release to only one part each day, continuing once that part runs stably without faults. If problems are found along the way, only the part already released needs to be rolled back. Grayscale release is also commonly used for user testing (A/B testing).
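One way to pick "a part of the cluster" each day, sketched under the assumption that servers are assigned to release batches by hashing their IDs (`in_gray_batch` and the server names are hypothetical). Because each server's bucket is fixed, raising the rollout percentage only ever adds servers; hashing user IDs the same way gives stable A/B test groups.

```python
import hashlib

def in_gray_batch(server_id: str, rollout_percent: int) -> bool:
    """Deterministically decide whether a server is in the current release batch."""
    digest = hashlib.sha256(server_id.encode()).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100   # fixed bucket in 0..99
    return bucket < rollout_percent

servers = [f"app-{i:02d}" for i in range(20)]   # hypothetical cluster
for day, percent in enumerate([10, 30, 60, 100], start=1):
    batch = [s for s in servers if in_gray_batch(s, percent)]
    print(f"day {day}: target {percent}% -> {len(batch)}/{len(servers)} servers")
```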

G. Website operation monitoring

1. Collection of monitoring data

Collect user behavior logs, including operating system and browser version, IP address, page access path, page dwell time and other information, from both server-side logs and client-side browser logs.

Server performance collection: system load, memory usage, disk IO, network IO, etc., using tools such as Ganglia.

Runtime data reports: e.g. cache hit rate, average response latency, number of emails sent per minute, total number of tasks pending, etc.

2. Monitoring and management

System alarm: set thresholds for the monitoring indicators and raise alarms by email, instant messaging, SMS, etc.

Failover: proactively notify the application to perform failover

Automatic graceful degradation: determine application load from the monitoring parameters, uninstall low-load applications from some servers, and reinstall high-load applications on them to balance the overall application load.

6. Never-ending: The scalability architecture of the website

The scalability of a website means that, without changing its software and hardware design, its service processing capacity can be expanded or shrunk simply by changing the number of deployed servers.

A. Scalability design of website architecture

1. Physical separation of different functions to achieve scaling: vertical separation (separation after layering) deploys different parts of the business processing flow separately; horizontal separation (separation after business splitting) deploys different business modules separately. Both achieve system scalability.

2. A single function can be scaled by growing the size of its cluster.

B. Scalability design of application server cluster

1. Application servers should be designed to be stateless, storing no request context information.

2. Load balancing:

HTTP redirect load balancing: compute a real web server address from the user's HTTP request and return it to the browser in an HTTP redirect response. The approach is simple, but each access needs two requests, the redirect server's own processing capacity may become a limit, and 302 redirects may also hurt SEO.

DNS load balancing: configure multiple A records on the DNS server pointing to different IPs. The advantage is that load balancing work is offloaded to DNS, and many DNS services can also return the server nearest to the user's location. The disadvantages are that A records may be cached, and control lies with the domain name service provider.

Reverse proxy load balancing: the browser sends requests to the reverse proxy server, which uses the load balancing algorithm to choose a real physical server and forwards the request to it. When processing is complete, the response returns to the reverse proxy, which returns it to the user. This is also called application-layer (HTTP-layer) load balancing. It is simple to deploy, but the reverse proxy's performance can become a bottleneck.

IP load balancing: after a user's request packet reaches the load balancing server, the server captures it in the operating system kernel, chooses a real web server with the load balancing algorithm, and rewrites the packet's destination IP address to that server, with no processing by a user process. After the real server finishes, the response packet goes back through the load balancing server, which rewrites the source address to its own IP address and sends the packet to the user's browser.

Data link layer load balancing: also called triangle transmission mode or direct routing (DR). The load balancer does not modify IP addresses when distributing packets, only the destination MAC address. All real servers are configured with a virtual IP identical to the load balancer's IP, so the packet's source and destination addresses stay unchanged and the response can return directly to the user's browser. A representative product is LVS (Linux Virtual Server).

3. Load balancing algorithms:

Round Robin (RR): requests are distributed to each application server in turn

Weighted Round Robin (WRR): on top of round robin, requests are distributed according to weights configured from each server's hardware performance

Random: Requests are randomly distributed to each application server

Least Connections: record the number of connections each server is processing and send new requests to the server with the fewest

Source Hashing: hash the IP address of the request source to choose the server, so requests from the same IP always reach the same server
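Minimal sketches of the five algorithms, assuming the balancer keeps a static list of hypothetical backend addresses. The weighted version is the naive form that repeats each server by its weight, which sends bursts to one server; production balancers typically interleave weighted choices more smoothly.

```python
import hashlib
import itertools
import random

servers = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]   # hypothetical backends

def round_robin(pool):
    """RR: hand out servers in a fixed cycle."""
    return itertools.cycle(pool)

def weighted_round_robin(weights: dict[str, int]):
    """WRR (naive form): repeat each server in the cycle according to its weight."""
    expanded = [s for s, w in weights.items() for _ in range(w)]
    return itertools.cycle(expanded)

def random_choice(pool) -> str:
    return random.choice(pool)

def least_connections(active: dict[str, int]) -> str:
    """Pick the server currently handling the fewest connections."""
    return min(active, key=active.get)

def source_hash(client_ip: str, pool) -> str:
    """The same client IP always maps to the same server (session binding)."""
    h = int.from_bytes(hashlib.sha256(client_ip.encode()).digest()[:4], "big")
    return pool[h % len(pool)]

rr = round_robin(servers)
print([next(rr) for _ in range(4)])   # ['10.0.0.1', '10.0.0.2', '10.0.0.3', '10.0.0.1']
wrr = weighted_round_robin({"a": 3, "b": 1})
print([next(wrr) for _ in range(4)])  # ['a', 'a', 'a', 'b']
print(least_connections({"a": 12, "b": 3, "c": 7}))  # b
```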

C. Scalability design of distributed cache cluster

1. Access model of a Memcached distributed cache cluster

The KEY is passed to the routing algorithm module, which computes the Memcached server to read from or write to.

2. Scalability challenges of Memcached distributed cache cluster

A simple routing algorithm is the remainder hash method: divide the hash value of the cached data's KEY by the number of servers, and the remainder is the server number. It scales poorly: when a server is added, most keys map to a different server, so most cache lookups miss.
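A quick experiment showing why remainder hashing scales poorly, assuming SHA-256 as the key hash (Python's built-in `hash()` is salted per process, so it is avoided). Going from 3 to 4 servers, a key keeps its server only when hash % 3 equals hash % 4, which holds for 3 of every 12 hash values, so roughly 75% of keys move.

```python
import hashlib

def stable_hash(key: str) -> int:
    # A fixed digest keeps the mapping identical across processes and machines.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

def mod_route(key: str, n_servers: int) -> int:
    """Remainder hashing: server number = hash(KEY) % number of servers."""
    return stable_hash(key) % n_servers

keys = [f"user:{i}" for i in range(10_000)]
moved = sum(mod_route(k, 3) != mod_route(k, 4) for k in keys)
print(f"{moved / len(keys):.0%} of keys change server going from 3 to 4 servers")
```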

3. Consistent hash algorithm for distributed caches

First construct an integer ring of length 2^32 (the consistent hash ring) and place each cache server node on it according to the hash value of the node name. Then compute the hash value of the KEY of the data to be cached and search clockwise on the ring for the nearest cache server node, completing the mapping from KEY to server.
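A minimal consistent-hash ring following the steps above, assuming SHA-256 truncated to 32 bits as the hash. It also places each server at many "virtual node" points, a common refinement not described in the paragraph above that keeps the load even; `ConsistentHashRing` and the node names are hypothetical. Adding a fourth server should move only about a quarter of the keys, and only onto the new server.

```python
import bisect
import hashlib

def ring_hash(s: str) -> int:
    """Map a string onto the 0 .. 2**32 - 1 ring."""
    return int.from_bytes(hashlib.sha256(s.encode()).digest()[:4], "big")

class ConsistentHashRing:
    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self._points: list[int] = []      # sorted positions on the ring
        self._owner: dict[int, str] = {}  # position -> server name
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        # Place the server at many points so each one owns many small arcs.
        for i in range(self.vnodes):
            p = ring_hash(f"{node}#{i}")
            if p in self._owner:          # skip the rare collision
                continue
            bisect.insort(self._points, p)
            self._owner[p] = node

    def lookup(self, key: str) -> str:
        """Walk clockwise from the key's hash to the first server point."""
        i = bisect.bisect_left(self._points, ring_hash(key))
        if i == len(self._points):        # past the last point: wrap to the start
            i = 0
        return self._owner[self._points[i]]

keys = [f"user:{i}" for i in range(10_000)]
ring = ConsistentHashRing(["cache-a", "cache-b", "cache-c"])
before = {k: ring.lookup(k) for k in keys}
ring.add("cache-d")
moved = sum(before[k] != ring.lookup(k) for k in keys)
print(f"{moved / len(keys):.0%} of keys moved")   # roughly a quarter
```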

D. Scalability design of data storage server cluster

1. Scalability design of relational database cluster

Data replication (master-slave), splitting tables and databases, and data sharding (Cobar)
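A minimal sketch of a sharding rule of the kind a proxy such as Cobar applies, assuming a hypothetical `orders` table split across 4 databases with 8 tables each by `user_id`; the names and split factors are illustrative, not Cobar's configuration. Routing on one key keeps all of a user's rows in the same table.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ShardTarget:
    database: str
    table: str

def route_order(user_id: int, n_databases: int = 4, tables_per_db: int = 8) -> ShardTarget:
    """Hypothetical rule: map user_id to one of n_databases * tables_per_db slots."""
    slot = user_id % (n_databases * tables_per_db)   # 0 .. 31 with the defaults
    return ShardTarget(
        database=f"order_db_{slot // tables_per_db}",
        table=f"orders_{slot % tables_per_db}",
    )

print(route_order(12345))   # ShardTarget(database='order_db_3', table='orders_1')
```

Like remainder hashing for caches, changing the number of shards here remaps most users, which is why re-sharding usually involves a data migration.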

2. Scalability design of NoSQL database

NoSQL abandons the normalized data model based on relational algebra and Structured Query Language (SQL), and does not guarantee transactional consistency (ACID), in exchange for enhanced high availability and scalability (e.g. Apache HBase).

The above is the detailed content of What are the core principles of mysql large-scale website technical architecture?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1371

1371

52

52

Unable to log in to mysql as root

Apr 08, 2025 pm 04:54 PM

Unable to log in to mysql as root

Apr 08, 2025 pm 04:54 PM

The main reasons why you cannot log in to MySQL as root are permission problems, configuration file errors, password inconsistent, socket file problems, or firewall interception. The solution includes: check whether the bind-address parameter in the configuration file is configured correctly. Check whether the root user permissions have been modified or deleted and reset. Verify that the password is accurate, including case and special characters. Check socket file permission settings and paths. Check that the firewall blocks connections to the MySQL server.

mysql whether to change table lock table

Apr 08, 2025 pm 05:06 PM

mysql whether to change table lock table

Apr 08, 2025 pm 05:06 PM

When MySQL modifys table structure, metadata locks are usually used, which may cause the table to be locked. To reduce the impact of locks, the following measures can be taken: 1. Keep tables available with online DDL; 2. Perform complex modifications in batches; 3. Operate during small or off-peak periods; 4. Use PT-OSC tools to achieve finer control.

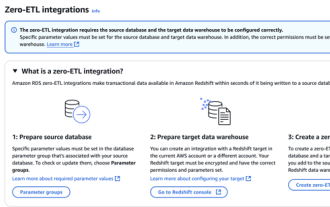

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

Query optimization in MySQL is essential for improving database performance, especially when dealing with large data sets

Apr 08, 2025 pm 07:12 PM

Query optimization in MySQL is essential for improving database performance, especially when dealing with large data sets

Apr 08, 2025 pm 07:12 PM

1. Use the correct index to speed up data retrieval by reducing the amount of data scanned select*frommployeeswherelast_name='smith'; if you look up a column of a table multiple times, create an index for that column. If you or your app needs data from multiple columns according to the criteria, create a composite index 2. Avoid select * only those required columns, if you select all unwanted columns, this will only consume more server memory and cause the server to slow down at high load or frequency times For example, your table contains columns such as created_at and updated_at and timestamps, and then avoid selecting * because they do not require inefficient query se

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

Do mysql need to pay

Apr 08, 2025 pm 05:36 PM

MySQL has a free community version and a paid enterprise version. The community version can be used and modified for free, but the support is limited and is suitable for applications with low stability requirements and strong technical capabilities. The Enterprise Edition provides comprehensive commercial support for applications that require a stable, reliable, high-performance database and willing to pay for support. Factors considered when choosing a version include application criticality, budgeting, and technical skills. There is no perfect option, only the most suitable option, and you need to choose carefully according to the specific situation.

Can mysql run on android

Apr 08, 2025 pm 05:03 PM

Can mysql run on android

Apr 08, 2025 pm 05:03 PM

MySQL cannot run directly on Android, but it can be implemented indirectly by using the following methods: using the lightweight database SQLite, which is built on the Android system, does not require a separate server, and has a small resource usage, which is very suitable for mobile device applications. Remotely connect to the MySQL server and connect to the MySQL database on the remote server through the network for data reading and writing, but there are disadvantages such as strong network dependencies, security issues and server costs.

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

How to optimize MySQL performance for high-load applications?

Apr 08, 2025 pm 06:03 PM

MySQL database performance optimization guide In resource-intensive applications, MySQL database plays a crucial role and is responsible for managing massive transactions. However, as the scale of application expands, database performance bottlenecks often become a constraint. This article will explore a series of effective MySQL performance optimization strategies to ensure that your application remains efficient and responsive under high loads. We will combine actual cases to explain in-depth key technologies such as indexing, query optimization, database design and caching. 1. Database architecture design and optimized database architecture is the cornerstone of MySQL performance optimization. Here are some core principles: Selecting the right data type and selecting the smallest data type that meets the needs can not only save storage space, but also improve data processing speed.