How to catch inappropriate content in the era of big models? EU bill requires AI companies to ensure users' right to know

Over the past 10 years, big tech companies have become very good at many things: language, prediction, personalization, archiving, text parsing, and data processing. But they are still surprisingly bad at catching, flagging, and removing harmful content. One need only recall how election and vaccine conspiracy theories have spread in the United States over the past two years to understand the real-world harm this causes.

This discrepancy raises some questions. Why aren’t tech companies getting better at content moderation? Can they be forced to? Will new advances in artificial intelligence improve our ability to catch bad information?

When the U.S. Congress asks tech companies to explain their role in spreading hate and misinformation, they usually blame their failures on the complexity of language itself. Executives say that understanding and preventing context-dependent hate speech across different languages and settings is a difficult task.

One of Mark Zuckerberg’s favorite sayings is that technology companies should not be responsible for solving all the world’s political problems.

(Source: STEPHANIE ARNETT/MITTR | GETTY IMAGES)

Most companies currently rely on a combination of technology and human content moderators, whose work is undervalued, as their meager pay reflects.

For example, AI is currently responsible for 97% of all content removed on Facebook.

However, AI isn’t good at interpreting nuance and context, said Renee DiResta, research manager at the Stanford Internet Observatory, so it is unlikely to fully replace human content moderators, who are not always good at interpreting these things either.

Because automated content moderation systems are typically trained on English data, they also struggle to handle content in other languages and cultural contexts effectively.

Hany Farid, a professor at the School of Information at the University of California, Berkeley, offers a more obvious explanation. According to Farid, content moderation has not kept pace with the risks because it is not in tech companies' financial interest. "It's all about greed. Stop pretending it's not about money."

Because federal regulation is lacking, it is difficult for victims of online abuse to hold platforms financially responsible.

Content moderation seems to be a never-ending war between tech companies and bad actors. When tech companies roll out content moderation rules, bad actors often use emojis or intentional misspellings to avoid detection. Then these companies try to close the loopholes, and people find new loopholes, and the cycle continues.
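To make the cat-and-mouse dynamic concrete, here is a minimal Python sketch of how a keyword filter might normalize text before matching, so that simple look-alike characters, accents, inserted punctuation, and stretched letters no longer slip past it. Everything here is an assumption for illustration: the substitution table, the placeholder blocklist, and the function names are hypothetical, not any platform's actual rules. The sketch simply discards emojis rather than interpreting them, which is exactly the kind of gap a determined bad actor would move to next.

```python
import re
import unicodedata

# Hypothetical substitution table: look-alike characters often swapped in
# to dodge keyword filters. Real systems would need a far larger mapping.
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)

# Placeholder blocklist for illustration only. Terms are assumed to contain
# no doubled letters, because normalization collapses repeated characters.
BLOCKLIST = {"scam", "hoax"}


def normalize_token(token: str) -> str:
    """Reduce one whitespace-separated token to a canonical lowercase form."""
    # Split accented characters into base letter plus combining mark, then drop the marks.
    decomposed = unicodedata.normalize("NFKD", token)
    no_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Lowercase and undo common look-alike substitutions.
    mapped = no_marks.lower().translate(SUBSTITUTIONS)
    # Drop everything that is not a plain letter (dots, dashes, emojis, leftover digits).
    letters_only = re.sub(r"[^a-z]", "", mapped)
    # Collapse stretched letters such as "scaaam" into "scam".
    return re.sub(r"(.)\1+", r"\1", letters_only)


def flagged_terms(text: str) -> set:
    """Return the blocklisted terms found in the text after normalization."""
    return {normalize_token(token) for token in text.split()} & BLOCKLIST


if __name__ == "__main__":
    print(flagged_terms("That is a sc4m and a total h.o.a.x"))
```

Matching whole tokens rather than substrings keeps false positives down, but it also means that a term split across spaces would go undetected, which illustrates why closing one loophole tends to open another.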

Now, large language models are coming...

The current situation is already very difficult, and with the emergence of generative artificial intelligence and large language models such as ChatGPT, it may become even worse. Generative technology has its problems (for example, its tendency to confidently make things up and present them as fact), but one thing is clear: AI is getting better at language, much better.

While both DiResta and Farid are cautious, they believe it is too early to make a judgment on how things will develop. Although many large models like GPT-4 and Bard have built-in content moderation filters, they can still produce toxic output, such as hate speech or instructions on how to build a bomb.

Generative AI enables bad actors to conduct disinformation campaigns at greater scale and speed. This is a dire situation given that methods for identifying and labeling AI-generated content are woefully inadequate.

On the other hand, the latest large-scale language models perform better at text interpretation than previous artificial intelligence systems. In theory, they could be used to facilitate the development of automated content moderation.

To do so, tech companies would need to invest in re-engineering large language models for this specific purpose. While companies like Microsoft have begun looking into the matter, there has yet to be significant activity.

Farid said: "While we have seen many technological advances, I am skeptical about any improvements in content moderation."

While large language models are advancing rapidly, they still face challenges in contextual understanding, which may prevent them from understanding subtle differences between posts and images as accurately as human moderators. Cross-cultural scalability and specificity also pose problems. "Do you deploy a model for a specific type of niche? Do you do it by country? Do you do it by community? It's not a one-size-fits-all question," DiResta said.

New tools based on new technologies

Whether generative AI ultimately harms or helps the online information landscape may depend largely on whether tech companies can come up with good, widely adopted tools that tell us whether content was generated by AI.

DiResta told me that detecting synthetic media is a difficult technical challenge that is likely to be a high priority. Approaches include digital watermarking, which embeds a piece of code as a permanent mark indicating that the attached content was produced by artificial intelligence. Automated tools for detecting AI-generated or manipulated posts are attractive because, unlike watermarks, they do not require the creator of AI-generated content to label it proactively. That said, current tools that try to identify machine-generated content do not perform well enough.

Some companies have even proposed cryptographic signatures, which use mathematics to securely record information such as how a piece of content was generated, but this would rely on voluntary disclosure techniques like watermarking.
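As a rough illustration of what signing a record of how content was generated could look like, the Python sketch below attaches a signature to a small provenance record and verifies it later. It is a simplification under stated assumptions: the field names, the `example-model` label, and the demo key are hypothetical, and it uses a shared-secret HMAC from the standard library, whereas a real provenance scheme would more likely use public-key signatures so that anyone can verify a record without being able to forge one.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real deployment would never hard-code a secret,
# and would more likely use public-key signatures than a shared secret.
SECRET_KEY = b"demo-key-not-for-production"


def sign_provenance(content: bytes, generator: str, key: bytes = SECRET_KEY) -> dict:
    """Build a provenance record for the content and sign it."""
    record = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,  # hypothetical field naming the tool that made the content
    }
    # Serialize with sorted keys so signer and verifier hash identical bytes.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return record


def verify_provenance(content: bytes, record: dict, key: bytes = SECRET_KEY) -> bool:
    """Check that the record matches the content and has not been altered."""
    claimed = {k: v for k, v in record.items() if k != "signature"}
    if claimed.get("content_sha256") != hashlib.sha256(content).hexdigest():
        return False
    payload = json.dumps(claimed, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, record.get("signature", ""))


if __name__ == "__main__":
    text = b"An AI-written paragraph."
    record = sign_provenance(text, "example-model")
    print(verify_provenance(text, record))
    print(verify_provenance(b"An edited paragraph.", record))
```

The sketch also shows the limitation noted above: a signature only proves something about content whose creator chose to sign it, so content produced without any such record carries no signal at all.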

The latest version of the Artificial Intelligence Act (AI Act), proposed by the European Union just last week, requires companies that use generative artificial intelligence to inform users when content is machine-generated. We'll likely hear more about emerging tools in the coming months, as demand for transparency in AI-generated content increases.

Support: Ren

Original text:

https://www.technologyreview.com/2023/05/15/1073019/catching-bad-content-in-the-age-of-ai/

The above is the detailed content of How to catch inappropriate content in the era of big models? EU bill requires AI companies to ensure users' right to know. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

Big model app Tencent Yuanbao is online! Hunyuan is upgraded to create an all-round AI assistant that can be carried anywhere

Jun 09, 2024 pm 10:38 PM

On May 30, Tencent announced a comprehensive upgrade of its Hunyuan model. The App "Tencent Yuanbao" based on the Hunyuan model was officially launched and can be downloaded from Apple and Android app stores. Compared with the Hunyuan applet version in the previous testing stage, Tencent Yuanbao provides core capabilities such as AI search, AI summary, and AI writing for work efficiency scenarios; for daily life scenarios, Yuanbao's gameplay is also richer and provides multiple features. AI application, and new gameplay methods such as creating personal agents are added. "Tencent does not strive to be the first to make large models." Liu Yuhong, vice president of Tencent Cloud and head of Tencent Hunyuan large model, said: "In the past year, we continued to promote the capabilities of Tencent Hunyuan large model. In the rich and massive Polish technology in business scenarios while gaining insights into users’ real needs

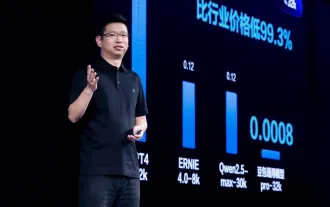

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Bytedance Beanbao large model released, Volcano Engine full-stack AI service helps enterprises intelligently transform

Jun 05, 2024 pm 07:59 PM

Tan Dai, President of Volcano Engine, said that companies that want to implement large models well face three key challenges: model effectiveness, inference costs, and implementation difficulty: they must have good basic large models as support to solve complex problems, and they must also have low-cost inference. Services allow large models to be widely used, and more tools, platforms and applications are needed to help companies implement scenarios. ——Tan Dai, President of Huoshan Engine 01. The large bean bag model makes its debut and is heavily used. Polishing the model effect is the most critical challenge for the implementation of AI. Tan Dai pointed out that only through extensive use can a good model be polished. Currently, the Doubao model processes 120 billion tokens of text and generates 30 million images every day. In order to help enterprises implement large-scale model scenarios, the beanbao large-scale model independently developed by ByteDance will be launched through the volcano

Using Shengteng AI technology, the Qinling·Qinchuan transportation model helps Xi'an build a smart transportation innovation center

Oct 15, 2023 am 08:17 AM

Using Shengteng AI technology, the Qinling·Qinchuan transportation model helps Xi'an build a smart transportation innovation center

Oct 15, 2023 am 08:17 AM

"High complexity, high fragmentation, and cross-domain" have always been the primary pain points on the road to digital and intelligent upgrading of the transportation industry. Recently, the "Qinling·Qinchuan Traffic Model" with a parameter scale of 100 billion, jointly built by China Vision, Xi'an Yanta District Government, and Xi'an Future Artificial Intelligence Computing Center, is oriented to the field of smart transportation and provides services to Xi'an and its surrounding areas. The region will create a fulcrum for smart transportation innovation. The "Qinling·Qinchuan Traffic Model" combines Xi'an's massive local traffic ecological data in open scenarios, the original advanced algorithm self-developed by China Science Vision, and the powerful computing power of Shengteng AI of Xi'an Future Artificial Intelligence Computing Center to provide road network monitoring, Smart transportation scenarios such as emergency command, maintenance management, and public travel bring about digital and intelligent changes. Traffic management has different characteristics in different cities, and the traffic on different roads

Uncovering the NVIDIA large model inference framework: TensorRT-LLM

Feb 01, 2024 pm 05:24 PM

Uncovering the NVIDIA large model inference framework: TensorRT-LLM

Feb 01, 2024 pm 05:24 PM

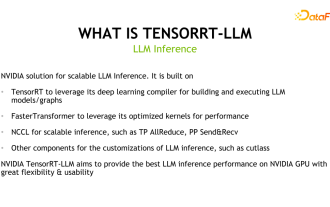

1. Product positioning of TensorRT-LLM TensorRT-LLM is a scalable inference solution developed by NVIDIA for large language models (LLM). It builds, compiles and executes calculation graphs based on the TensorRT deep learning compilation framework, and draws on the efficient Kernels implementation in FastTransformer. In addition, it utilizes NCCL for communication between devices. Developers can customize operators to meet specific needs based on technology development and demand differences, such as developing customized GEMM based on cutlass. TensorRT-LLM is NVIDIA's official inference solution, committed to providing high performance and continuously improving its practicality. TensorRT-LL

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

Benchmark GPT-4! China Mobile's Jiutian large model passed dual registration

Apr 04, 2024 am 09:31 AM

According to news on April 4, the Cyberspace Administration of China recently released a list of registered large models, and China Mobile’s “Jiutian Natural Language Interaction Large Model” was included in it, marking that China Mobile’s Jiutian AI large model can officially provide generative artificial intelligence services to the outside world. . China Mobile stated that this is the first large-scale model developed by a central enterprise to have passed both the national "Generative Artificial Intelligence Service Registration" and the "Domestic Deep Synthetic Service Algorithm Registration" dual registrations. According to reports, Jiutian’s natural language interaction large model has the characteristics of enhanced industry capabilities, security and credibility, and supports full-stack localization. It has formed various parameter versions such as 9 billion, 13.9 billion, 57 billion, and 100 billion, and can be flexibly deployed in Cloud, edge and end are different situations

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

Advanced practice of industrial knowledge graph

Jun 13, 2024 am 11:59 AM

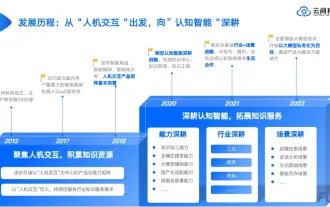

1. Background Introduction First, let’s introduce the development history of Yunwen Technology. Yunwen Technology Company...2023 is the period when large models are prevalent. Many companies believe that the importance of graphs has been greatly reduced after large models, and the preset information systems studied previously are no longer important. However, with the promotion of RAG and the prevalence of data governance, we have found that more efficient data governance and high-quality data are important prerequisites for improving the effectiveness of privatized large models. Therefore, more and more companies are beginning to pay attention to knowledge construction related content. This also promotes the construction and processing of knowledge to a higher level, where there are many techniques and methods that can be explored. It can be seen that the emergence of a new technology does not necessarily defeat all old technologies. It is also possible that the new technology and the old technology will be integrated with each other.

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

New test benchmark released, the most powerful open source Llama 3 is embarrassed

Apr 23, 2024 pm 12:13 PM

If the test questions are too simple, both top students and poor students can get 90 points, and the gap cannot be widened... With the release of stronger models such as Claude3, Llama3 and even GPT-5 later, the industry is in urgent need of a more difficult and differentiated model Benchmarks. LMSYS, the organization behind the large model arena, launched the next generation benchmark, Arena-Hard, which attracted widespread attention. There is also the latest reference for the strength of the two fine-tuned versions of Llama3 instructions. Compared with MTBench, which had similar scores before, the Arena-Hard discrimination increased from 22.6% to 87.4%, which is stronger and weaker at a glance. Arena-Hard is built using real-time human data from the arena and has a consistency rate of 89.1% with human preferences.

GPT Store can't even open its doors. How dare this domestic platform take this path? ?

Apr 19, 2024 pm 09:30 PM

GPT Store can't even open its doors. How dare this domestic platform take this path? ?

Apr 19, 2024 pm 09:30 PM

Pay attention, this man has connected more than 1,000 large models, allowing you to plug in and switch seamlessly. Recently, a visual AI workflow has been launched: giving you an intuitive drag-and-drop interface, you can drag, pull, and drag to arrange your own workflow on an infinite canvas. As the saying goes, war costs speed, and Qubit heard that within 48 hours of this AIWorkflow going online, users had already configured personal workflows with more than 100 nodes. Without further ado, what I want to talk about today is Dify, an LLMOps company, and its CEO Zhang Luyu. Zhang Luyu is also the founder of Dify. Before joining the business, he had 11 years of experience in the Internet industry. I am engaged in product design, understand project management, and have some unique insights into SaaS. Later he