Implementing a web crawler using PHP

A web crawler is an automated program that browses web pages on the Internet, collects information from them, and stores it, usually in a database. In today's era of big data, web crawlers are increasingly important because they can gather large amounts of information for data analysis. In this article, we will learn how to write a web crawler in PHP and use the collected data for text mining and data analysis.

Web crawlers are a good way to collect content from websites, but you should always strictly follow ethical and legal guidelines, such as a site's terms of service and its robots.txt rules. If you want to write your own web crawler, follow these steps.

1. Install and configure the PHP environment

First, install PHP. You can download the latest version from the official website, php.net, and install it on your computer; many operating systems also offer PHP through their package manager, and plenty of installation guides and videos are available online. The crawler in this article also needs PHP's cURL and DOM extensions, so make sure both are enabled.
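Before writing the crawler, you can confirm that your PHP installation has the extensions the crawler code relies on: `curl` for fetching pages and `dom` for parsing HTML. The `missingExtensions()` helper below is a hypothetical convenience function, a minimal sketch built on PHP's built-in `extension_loaded()`.

```php
<?php
// Hypothetical helper: return the names of required extensions that are NOT loaded.
function missingExtensions(array $required): array
{
    $missing = [];
    foreach ($required as $ext) {
        if (!extension_loaded($ext)) {
            $missing[] = $ext;
        }
    }
    return $missing;
}

echo "PHP version: " . PHP_VERSION . PHP_EOL;

// curl: HTTP requests; dom: DOMDocument for parsing HTML
$missing = missingExtensions(['curl', 'dom']);
echo $missing === []
    ? "All required extensions are loaded." . PHP_EOL
    : "Missing extensions: " . implode(', ', $missing) . PHP_EOL;
```

Run it with `php check.php` (the file name is arbitrary). If an extension is missing, enable it in `php.ini` or install your platform's package for it.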

2. Set up a code editor

You can write a web crawler in any text editor, but a development tool with PHP support, such as PhpStorm or Sublime Text, makes the work easier.

3. Write a web crawler program

The following is a simple web crawler. It starts from a given URL, follows links up to a fixed depth, and prints each URL it finds together with its depth.

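```php
<?php

// Starting URL and maximum crawl depth
$startUrl = "https://www.example.com";
$depth = 2;

// URLs that have already been processed, mapped to the depth at which they were found
$processedUrls = [
    $startUrl => 0
];

// Run the crawler
getAllLinks($startUrl, $depth);

// Fetch the HTML of the given URL; returns an empty string if the request fails
function getHTML($url) {
    $curl = curl_init();
    curl_setopt($curl, CURLOPT_URL, $url);
    curl_setopt($curl, CURLOPT_RETURNTRANSFER, true); // return the body instead of printing it
    curl_setopt($curl, CURLOPT_FOLLOWLOCATION, true);  // follow HTTP redirects
    curl_setopt($curl, CURLOPT_TIMEOUT, 10);           // give up after 10 seconds
    $html = curl_exec($curl);
    curl_close($curl);
    return $html === false ? "" : $html;
}

// Recursively collect links, following each one until the depth limit is reached
function getAllLinks($url, $depth) {
    global $processedUrls;

    if ($depth === 0) {
        return;
    }

    $html = getHTML($url);
    if ($html === "") {
        return; // DOMDocument::loadHTML() rejects empty input
    }

    $dom = new DOMDocument();
    @$dom->loadHTML($html); // @ silences warnings about malformed real-world HTML
    $links = $dom->getElementsByTagName('a');

    foreach ($links as $link) {
        $href = $link->getAttribute('href');

        // Follow only absolute links that start with the current URL and have not been seen yet
        if (strpos($href, $url) === 0 && !array_key_exists($href, $processedUrls)) {
            $processedUrls[$href] = $processedUrls[$url] + 1;
            echo $href . " (Depth: " . $processedUrls[$href] . ")" . PHP_EOL;
            getAllLinks($href, $depth - 1);
        }
    }
}
```

This program performs a depth-first search (DFS): starting from the starting URL, it follows each link downward as far as possible, recording each URL's depth, until it reaches the target depth. Note that it only follows absolute links that begin with the current page's URL; relative links such as `/about` are skipped.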
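To see the traversal order without making network requests, the sketch below runs the same depth-limited DFS logic over a small in-memory link graph. The graph and the `dfs()` function are hypothetical stand-ins for real pages and for `getAllLinks()`.

```php
<?php
// Depth-limited DFS over a hypothetical in-memory link graph.
// Like getAllLinks(): record a URL's depth the first time it is seen,
// then recurse into it with one less level of remaining depth.
function dfs(array $graph, string $url, int $remaining, array &$seen): void
{
    if ($remaining === 0) {
        return;
    }
    foreach ($graph[$url] ?? [] as $next) {
        if (!array_key_exists($next, $seen)) {
            $seen[$next] = $seen[$url] + 1;
            dfs($graph, $next, $remaining - 1, $seen);
        }
    }
}

// Hypothetical site: each key links to the pages in its array
$graph = [
    '/'    => ['/a', '/b'],
    '/a'   => ['/a/1', '/b'],
    '/a/1' => ['/a/1/x'],
    '/b'   => ['/b/1'],
];

$seen = ['/' => 0];
dfs($graph, '/', 2, $seen);

foreach ($seen as $url => $depth) {
    echo "$url (Depth: $depth)" . PHP_EOL;
}
```

Running it prints `/` at depth 0, `/a` at depth 1, and `/a/1` and `/b` at depth 2. Note that `/b` is recorded at depth 2 and `/b/1` is never visited, even though the start page links to `/b` directly: DFS marks a page as processed on the first path that reaches it, which is not necessarily the shortest one. A breadth-first search driven by a queue would record every page at its shortest link distance.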

4. Store data

After you obtain the data, store it in a database for later analysis. You can use whichever database suits your needs, such as MySQL, SQLite, or MongoDB.
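As a sketch of the storage step, the example below saves crawled pages to SQLite through PHP's PDO extension, assuming the `pdo_sqlite` driver is available. The `pages` table layout and the `savePage()` helper are hypothetical; in the crawler you could call something similar wherever it records a URL in `$processedUrls`.

```php
<?php
// In-memory SQLite database for demonstration; use a DSN such as
// 'sqlite:crawler.db' instead to keep the data between runs.
$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

// Hypothetical schema: one row per crawled URL
$pdo->exec('CREATE TABLE IF NOT EXISTS pages (
    url   TEXT PRIMARY KEY,
    depth INTEGER NOT NULL,
    html  TEXT
)');

// Hypothetical helper: insert a page, or replace it if the URL is already stored.
// Prepared statements keep scraped content from breaking the SQL.
function savePage(PDO $pdo, string $url, int $depth, string $html): void
{
    $stmt = $pdo->prepare(
        'INSERT OR REPLACE INTO pages (url, depth, html) VALUES (:url, :depth, :html)'
    );
    $stmt->execute([':url' => $url, ':depth' => $depth, ':html' => $html]);
}

savePage($pdo, 'https://www.example.com', 0, '<html><body>Home</body></html>');
savePage($pdo, 'https://www.example.com/about', 1, '<html><body>About</body></html>');

foreach ($pdo->query('SELECT url, depth FROM pages ORDER BY depth') as $row) {
    echo $row['url'] . ' (Depth: ' . $row['depth'] . ')' . PHP_EOL;
}
```

`INSERT OR REPLACE` is SQLite syntax; with MySQL you would typically use `INSERT ... ON DUPLICATE KEY UPDATE` instead.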

5. Text mining and data analysis

After storing the data, you can use programming languages such as Python or R to perform text mining and data analysis. The purpose of data analysis is to help you derive useful information from the data you collect.
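Python and R offer far richer analysis libraries, but a quick word count in PHP can show what the crawled text contains before you export it. The sketch below uses a hypothetical `topWords()` function with deliberately naive tokenization and a small, illustrative stop-word list (not a standard one).

```php
<?php
// Hypothetical helper: return the most frequent words in a page's text.
function topWords(string $html, int $limit = 5): array
{
    // Put a space before each tag so that text from adjacent elements
    // does not merge into one word; script/style contents are NOT removed.
    $text = strtolower(strip_tags(str_replace('<', ' <', $html)));

    // Naive tokenization: split on anything that is not a letter or digit
    $words = preg_split('/[^a-z0-9]+/', $text, -1, PREG_SPLIT_NO_EMPTY);

    // Small illustrative stop-word list
    $stopWords = ['the', 'a', 'an', 'and', 'or', 'of', 'to', 'in', 'is', 'it'];
    $words = array_filter($words, fn($w) => !in_array($w, $stopWords, true));

    $counts = array_count_values($words); // word => number of occurrences
    arsort($counts);                      // most frequent first
    return array_slice($counts, 0, $limit, true);
}

$html = '<html><body><h1>PHP crawler</h1>'
      . '<p>A crawler collects pages. The crawler stores pages in a database.</p>'
      . '</body></html>';
print_r(topWords($html, 3));
```

For this sample, "crawler" (3 occurrences) and "pages" (2) come out on top.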

Here are some data analysis techniques you can use:

- Text analysis: extracts useful information from large amounts of text, for example through sentiment analysis, topic modeling, and entity recognition.

- Cluster analysis: divides your data into groups so you can see the similarities and differences between them.

- Predictive analytics: uses historical data to predict future trends, which helps you plan your business.
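Cluster analysis needs a way to measure how similar two items are. For text, one common choice is cosine similarity between word-count vectors, which many clustering methods can use to decide which documents belong together. The sketch below computes it for three hypothetical documents; `wordCounts()` and `cosineSimilarity()` are illustrative helpers, not part of any library.

```php
<?php
// Hypothetical helper: bag-of-words vector (word => count) for a text
function wordCounts(string $text): array
{
    $words = preg_split('/[^a-z0-9]+/', strtolower($text), -1, PREG_SPLIT_NO_EMPTY);
    return array_count_values($words);
}

// Cosine similarity: dot product divided by the product of the vector lengths.
// 1.0 means the same word proportions; 0.0 means no words in common.
function cosineSimilarity(array $a, array $b): float
{
    $dot = 0;
    foreach ($a as $word => $count) {
        $dot += $count * ($b[$word] ?? 0);
    }
    $normA = sqrt(array_sum(array_map(fn($c) => $c * $c, $a)));
    $normB = sqrt(array_sum(array_map(fn($c) => $c * $c, $b)));
    if ($normA == 0 || $normB == 0) {
        return 0.0; // an empty document is not similar to anything
    }
    return $dot / ($normA * $normB);
}

$docs = [
    'php'    => 'PHP web crawler collects web pages',
    'python' => 'Python web crawler collects web pages',
    'cake'   => 'Bake the cake for thirty minutes',
];
$vectors = array_map('wordCounts', $docs);

printf("php vs python: %.3f\n", cosineSimilarity($vectors['php'], $vectors['python']));
printf("php vs cake:   %.3f\n", cosineSimilarity($vectors['php'], $vectors['cake']));
```

The two crawler descriptions share most of their words and score 0.875, while the cake recipe shares none and scores 0, so a clustering step would place the first two in the same group.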

Summary

Web crawlers are a very useful tool for collecting data from the Internet and analyzing it. When you use them, always follow ethical and legal rules. I hope this article has been helpful and encourages you to start building your own web crawlers and analyzing the data they collect.

The above is the detailed content of Implementing a web crawler using PHP. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

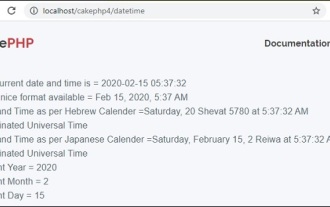

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

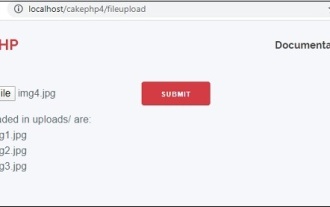

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

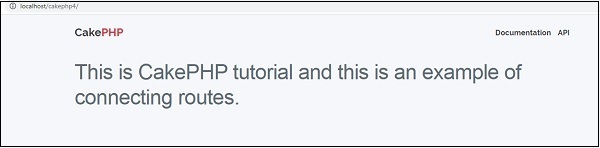

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

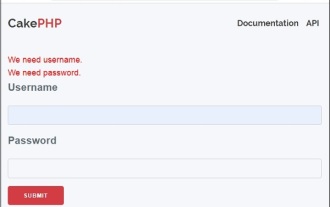

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c