In recent months, Microsoft has been busy building generative AI into many of its products and services, including the Bing search engine, the Edge browser, GitHub and the Office productivity suite.

At the Microsoft Build 2023 conference, held early this morning, the results of that work were finally unveiled, and Microsoft went a step further: its AI universe is becoming more and more complete.

The theme of this year's Microsoft Build conference is squarely AI. Microsoft CEO Satya Nadella first discussed platform shifts and recalled technology changes over the years, and then went straight to the announcements: "We announced more than 50 updates for developers, from bringing Bing to ChatGPT to Windows Copilot to the new Copilot stack, Azure AI Studio and new data analysis platform Microsoft Fabric with universal scalability."

Microsoft then rolled out these updates one after another. The changes most relevant to ordinary users include the addition of the Copilot experience to Windows 11 and Edge, and the new Bing AI and Copilot plug-ins for OpenAI's ChatGPT.

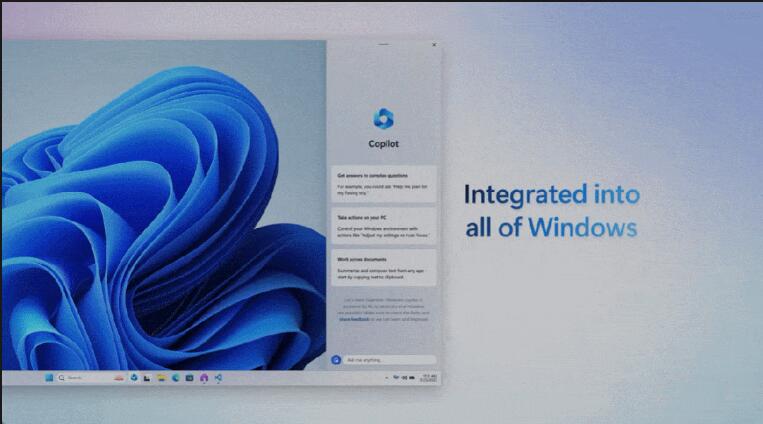

Microsoft announced Windows Copilot, making Windows the first PC platform to provide users with centralized AI assistance. Windows Copilot works with Bing Chat and first- and third-party plug-ins, allowing users to focus on bringing their ideas to life, completing complex projects, and collaborating instead of spending energy finding, launching, and working across multiple applications.

Windows Copilot is simple to invoke and use. Once opened, it becomes a sidebar integrated into the operating system that helps users complete various tasks.

Microsoft said that Windows Copilot will become an efficient personal assistant that helps users take actions, customize settings and seamlessly connect to their favorite apps.

In addition, many Windows features, including copy/paste, Snap Assist, screenshots and personalization, become more useful with Windows Copilot. For example, users can not only copy and paste content, but also ask Windows Copilot to rewrite, summarize, or explain it.

Meanwhile, just like with Bing Chat, users can ask Windows Copilot a series of questions ranging from simple to complex. For example, if I want to call my family in Cyprus, I can quickly check the local time to make sure I don't wake them up in the middle of the night. If I want to plan a trip to Cyprus to visit them, I can ask Windows Copilot to look up flights and accommodation.

Microsoft said that Windows Copilot will begin public testing in June and will then gradually roll out to existing Windows 11 users.

Since Bing announced its ChatGPT integration, Bing users have participated in more than 500 million chats and created more than 2 billion images, and daily downloads of the Bing mobile app have grown 8x since launch.

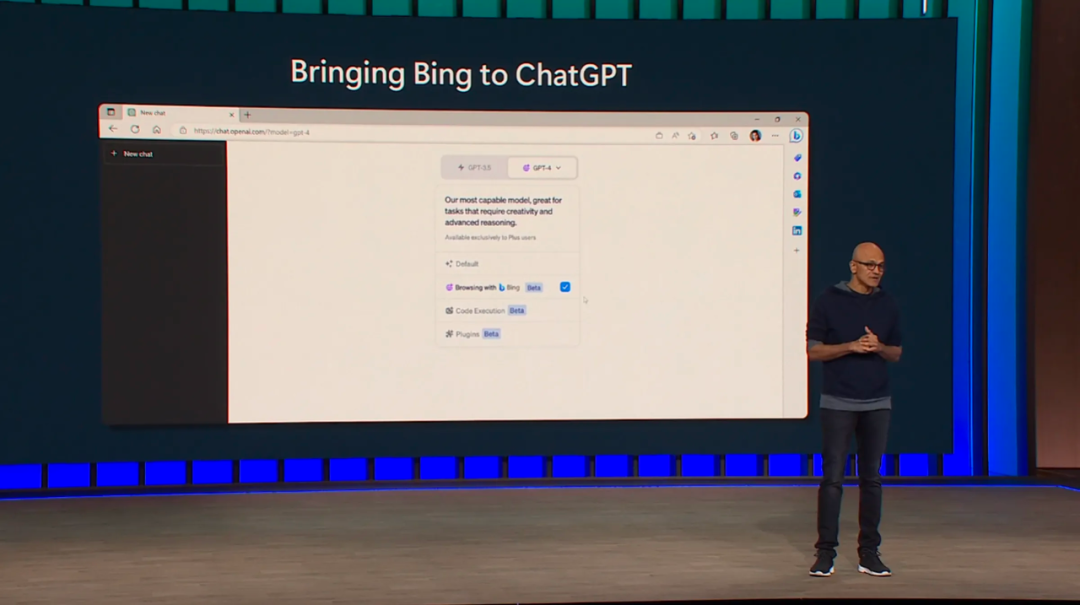

At this conference, Bing received three key updates: integrating Bing search into ChatGPT, establishing a common plug-in platform with OpenAI along with new plug-in partners, and expanding Bing Chat integration across Microsoft's Copilots.

"Today's updates create greater opportunities for developers and more amazing experiences for people as we continue to transform search."

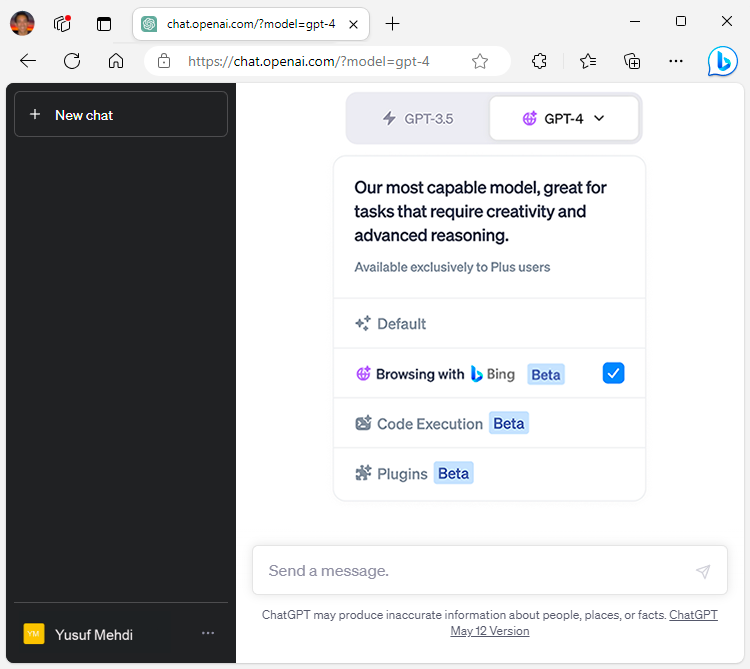

One of the noteworthy changes is that Bing will become the default search engine plug-in for ChatGPT. Nadella also described how it will work:

"This is just the beginning of what we plan to do with our partners at OpenAI to bring the best of Bing to the ChatGPT experience," Nadella said.

Microsoft said that once ChatGPT is connected to the new Bing, it can use web access to provide more timely, up-to-date answers. ChatGPT Plus subscribers can try this feature immediately.

## Default Bing search in ChatGPT

Accelerate building the Bing ecosystem and enhance performance with plug-ins

Microsoft and OpenAI will support and grow the AI plug-in ecosystem through interoperability. This means developers can now use one platform to build and submit plug-ins that work across both consumer and enterprise products, including ChatGPT, Bing, Dynamics 365 Copilot, Microsoft 365 Copilot and Windows Copilot.

As part of this shared plug-in platform, Bing is adding support for a range of plug-ins. In addition to the previously announced OpenTable and Wolfram Alpha plug-ins, Microsoft has announced the addition of Expedia, Instacart, Kayak, Klarna, Redfin, TripAdvisor and Zillow to the Bing ecosystem.

With plug-ins built directly into the chat experience, Bing makes relevant suggestions based on users' conversations. For example, users can use the OpenTable plug-in to ask about restaurants and related topics. Microsoft also stated that these functions will be available in the Bing mobile app, which will greatly expand the range of use cases.

In addition, dozens of companies are becoming Bing Chat plug-in partners.

Meanwhile, developers can now use plug-ins to integrate their apps and services into Microsoft 365 Copilot. Plug-ins for Microsoft 365 Copilot include ChatGPT and Bing plug-ins, as well as Teams message extensions and Power Platform connectors.

Integrating Bing into Copilot

Meanwhile, with the release of Windows Copilot, Microsoft has also integrated the powerful features of Bing Chat into Windows 11. Because Windows Copilot works with Bing Chat and uses the plug-in platform shared by Bing and OpenAI, these plug-ins can be used to enhance apps on Windows. This will make it easier than ever to get personalized answers and relevant recommendations and to take quick action.

In addition to Windows, Microsoft has also integrated its universal plug-in platform natively into the Edge browser, making it the first browser to integrate AI search.

We know that developers constantly deal with manual setup at work: frequent clicks, multiple tool logins, suboptimal file system navigation, and context switching. All of these can seriously hurt their productivity.

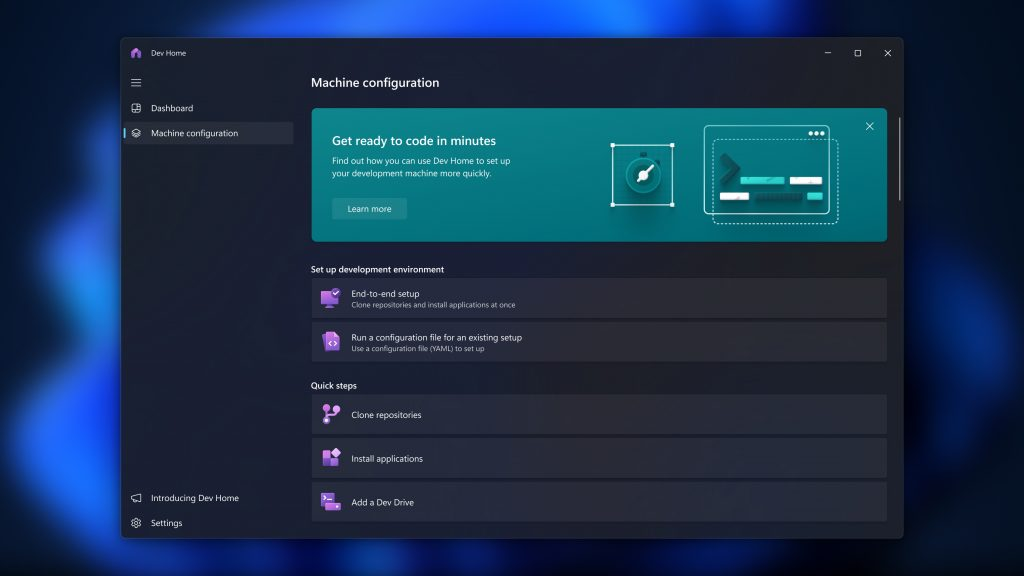

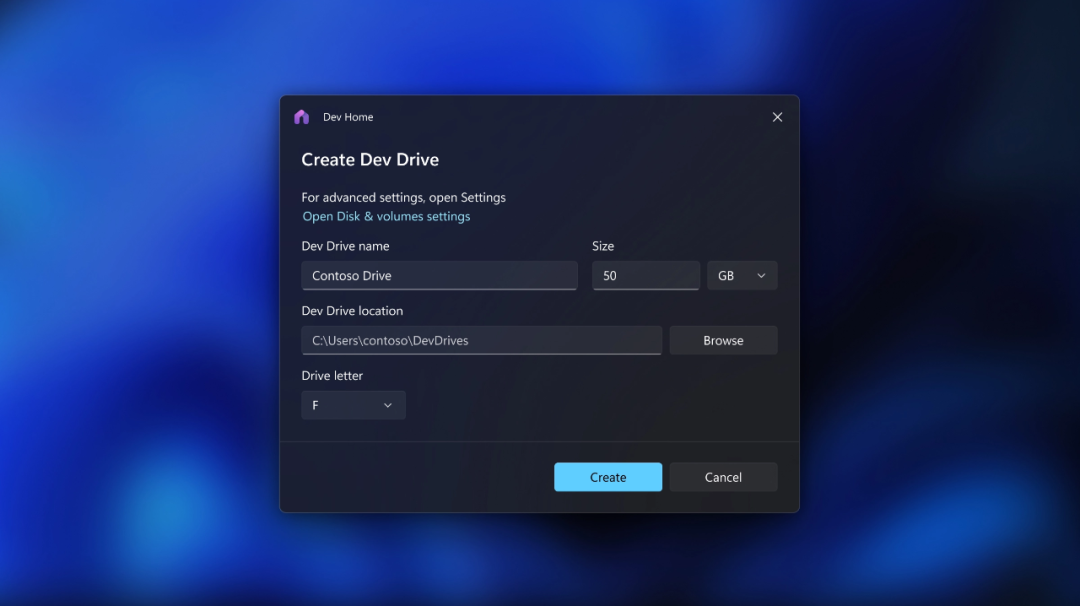

To improve developer productivity, Microsoft launched Dev Home, a new productivity companion in Windows 11. Dev Home is currently available in preview, with WinGet configuration for simpler and faster setup, Dev Drive for improved file system performance, and a new customizable dashboard for tracking all workflows and tasks in one place. Together, these simplify the developer's workflow.

Dev Home connects to GitHub so that users can easily install the tools and packages they need.

Dev Home can also configure coding environments in the cloud using Microsoft Dev Box and GitHub Codespaces. In short, with Dev Home, Microsoft gives developers a productivity companion that lets them focus on what they do best: writing code.

Dev Home repository: https://github.com/microsoft/DevHome

WinGet configuration for unattended and reliable development machine setup

With the new WinGet configuration, developers can be ready to code in just a few clicks. This unattended, reliable and repeatable mechanism allows developers to skip the manual work of setting up a new machine or starting a new project, eliminating the hassle of downloading the correct versions of software, packages, tools and frameworks and applying settings. Setup time can be reduced from days to hours.
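The article does not show what a WinGet configuration looks like, so the following is only a minimal Python sketch of the general idea behind a repeatable, unattended setup: describe the desired state, compare it with what is installed, and apply only the difference. The functions `plan_setup` and `apply_steps`, the package names and the version strings are all hypothetical and are not part of WinGet.

```python
def plan_setup(desired: dict[str, str], installed: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return the (action, package, version) steps needed to reach the desired state."""
    steps = []
    for name, version in sorted(desired.items()):
        current = installed.get(name)
        if current is None:
            steps.append(("install", name, version))
        elif current != version:
            steps.append(("upgrade", name, version))
        # Packages already at the desired version need no step.
    return steps


def apply_steps(installed: dict[str, str], steps: list[tuple[str, str, str]]) -> dict[str, str]:
    """Simulate applying the steps; a real tool would invoke an installer here."""
    result = dict(installed)
    for _action, name, version in steps:
        result[name] = version
    return result


# Hypothetical desired state for a new development machine.
desired = {"git": "2.40", "python": "3.11", "node": "18"}
installed = {"git": "2.39"}

steps = plan_setup(desired, installed)
installed = apply_steps(installed, steps)
# A second run finds nothing left to do, which is what makes the setup repeatable.
remaining = plan_setup(desired, installed)
```

Because the plan is computed from the difference between the desired and current state, running it again on an already configured machine produces no further steps.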

Dev Drive: New storage volume tailored for developers

Users often encounter situations where they need to deal with repositories containing thousands of files and directories, which poses challenges for I/O operations such as builds. Now, Microsoft has launched Dev Drive, a new storage volume that combines performance and security features and is customized for developers.

Dev Drive is based on the Resilient File System (ReFS) and delivers up to a 30% improvement in build times for file I/O scenarios.

Dev Home makes setting up a Dev Drive during environment setup very simple. It's great for hosting project source code, working folders, and package cache. Microsoft will make Dev Drive available in preview later this week.

Efficiently track user workflow in Dev Home

Dev Home can also help users manage running projects by adding GitHub widgets that track all coding tasks and pull requests, as well as widgets that track CPU and GPU performance. Additionally, Microsoft is working with the Xbox team to integrate the GDK into Dev Home, making it easier to start creating games.

GitHub Copilot X-powered Windows Terminal

GitHub Copilot users will be able to use natural-language AI models to recommend commands, explain errors, and take actions within the Terminal app through both inline and experimental chat experiences.

Microsoft is also experimenting with GitHub Copilot-powered AI features in other developer tools such as WinDbg to help users work more efficiently. The GitHub Copilot Chat waitlist is now open, and Microsoft will soon open access to these features.

Microsoft also continues to invest in the essential tools needed to democratize building applications for the new era of artificial intelligence. Whether developers are building on x86/x64 or Arm64, Microsoft wants to make it easy for them to bring AI-driven experiences to Windows applications across the cloud and the edge.

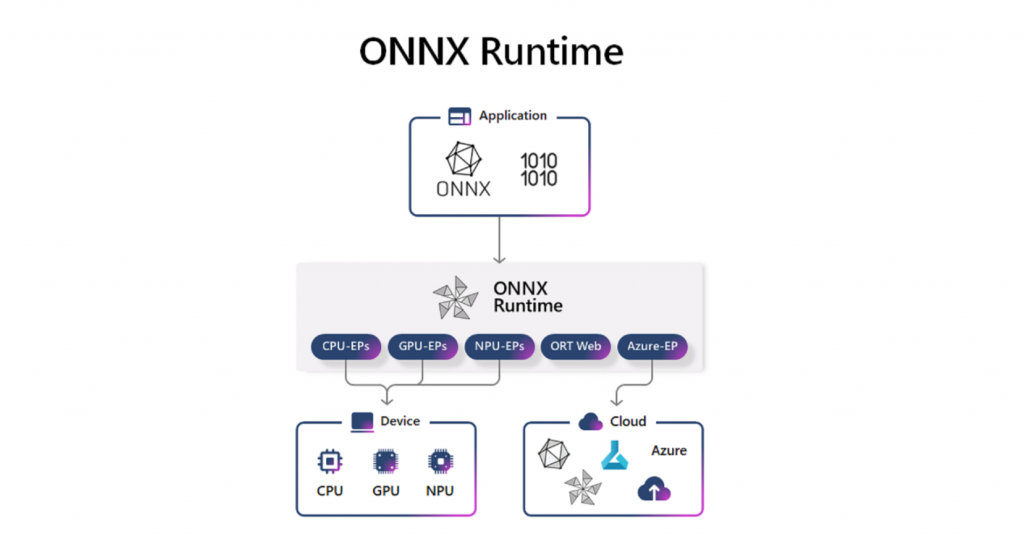

Last year at Build, Microsoft announced a new development model, Hybrid Loop, that enables hybrid AI scenarios spanning Azure and client devices. At Build today, Microsoft said that vision has been realized. Using ONNX Runtime as the gateway to Windows AI, together with Olive, Microsoft has created a toolchain that reduces the burden on users when optimizing models for Windows and other devices. With ONNX Runtime, third-party developers can access the same tools Microsoft uses internally to run AI models on Windows or other devices across CPU, GPU, NPU or hybrid with Azure.

ONNX Runtime now supports running models through the same API on the device or in the cloud, which enables hybrid inference scenarios. User applications can use local resources and switch to the cloud when needed. With the new Azure EP (execution provider) preview, users can connect to models deployed in AzureML and even to the Azure OpenAI service. With just a few lines of code, users can specify cloud endpoints and define criteria for when to use the cloud. As a result, users have greater control over costs, because Azure EP gives them the flexibility to choose at runtime between larger models in the cloud and smaller local models.
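The article gives no code for this, so the following is a minimal Python sketch of the routing idea only: one call site, with a rule that decides whether a request goes to a small local model or a larger cloud model. It does not use the ONNX Runtime or Azure EP APIs. The `HybridRouter` class, the prompt-length criterion and both stand-in model functions are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class HybridRouter:
    """Route a request to a local or a cloud model using a simple rule."""
    run_local: Callable[[str], str]
    run_cloud: Callable[[str], str]
    max_local_chars: int = 200   # hypothetical criterion: long prompts go to the cloud
    cloud_available: bool = True

    def choose(self, prompt: str) -> str:
        """Return "cloud" or "local" for this prompt."""
        if self.cloud_available and len(prompt) > self.max_local_chars:
            return "cloud"
        return "local"

    def infer(self, prompt: str) -> str:
        """Run the prompt on whichever target the rule selects."""
        runner = self.run_cloud if self.choose(prompt) == "cloud" else self.run_local
        return runner(prompt)


# Stand-ins for real models; a real application would call an inference session here.
def small_local_model(prompt: str) -> str:
    return f"local:{len(prompt)}"


def large_cloud_model(prompt: str) -> str:
    return f"cloud:{len(prompt)}"


router = HybridRouter(run_local=small_local_model, run_cloud=large_cloud_model)
short_answer = router.infer("hi")
long_answer = router.infer("x" * 500)
```

Keeping the decision in one `choose` method means the criterion (prompt size here, but it could equally be cost or connectivity) can change without touching the calling code.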

Users can also optimize models for different hardware using Olive, an extensible tool chain that combines cutting-edge technologies for model compression, optimization and compilation. Based on this, users can use ONNX Runtime across platforms such as Windows, iOS, Android and Linux.

To summarize, both ONNX Runtime and Olive help speed the deployment of AI models into applications. ONNX Runtime makes it easier for users to create stunning AI experiences on Windows and other platforms while reducing engineering effort and increasing performance.

Microsoft has also released a number of new features for the Microsoft Store, including some related to AI technology.

The above covers only the first-day keynote of the multi-day Build developer conference, and more announcements will follow.

We can see from this that Microsoft has joined forces with OpenAI to create an AI universe that will not only transform and upgrade its own products but also radiate and influence the entire technology community.

Perhaps this wave of AI has just begun.
