[Goals]

1. Configure three servers

2. Deploy the same service code on each of the three servers

3. Use nginx to balance the load across them

[Approach]

Our nginx load balancer is deployed on one of the servers and configured with connections to the other two servers. All requests go directly to the nginx service endpoint, and the nginx load balancer then chooses which server port actually handles each call.

[Development and deployment environment]

Development environment: Windows 7 x64 SP1, English version

Visual Studio 2017

Deployment environment: Alibaba Cloud ECS instance, Windows Server 2012 x64

IIS 7.0

[Required technology]

ASP.NET Web API 2

[Implementation process]

Use ASP.NET Web API 2 to write an interface that simply returns JSON. To show that each call is served by a different server, we build three versions of the service, each tagged with a different number, and deploy them to IIS on the three servers.

```csharp
public IHttpActionResult GetTest()
{
    // GetIpAddressFromRequest is the author's own extension method (see the note below).
    string ip = Request.GetIpAddressFromRequest();

    // This copy is tagged 333; the other two servers return 111 and 222.
    return Ok("test api . client ip address is -> " + ip + " the server is ===== 333 =====");
}
```

The numbers for the three servers I deployed are 111, 222, and 333.

Note: `Ok` here is my customized return format. For a simple version, you can return `Json(...)` directly.

`Request.GetIpAddressFromRequest()` is my own extension method for obtaining the client's IP address. Please implement it to suit your own setup.
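Keep in mind that once the API sits behind nginx, IIS receives every request from the nginx host, so a helper that only reads the connection's remote address will report that host instead of the real client. A common remedy, sketched here as an assumption about your setup rather than the author's configuration, is to have nginx put the client address into request headers and have the helper check `X-Forwarded-For` (or `X-Real-IP`) first. The fragment belongs inside nginx's `server` block; the upstream name `webapi_backend` is hypothetical.

```nginx
# Sketch: pass the caller's address on to the IIS backends.
location / {
    proxy_pass http://webapi_backend;   # hypothetical upstream group name

    # Address of the client that connected to nginx.
    proxy_set_header X-Real-IP $remote_addr;

    # Any existing X-Forwarded-For chain with the client address appended.
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
}
```

Clients can send these headers themselves, so the helper should only trust them when the request actually comes from your own nginx host.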

[System Test]

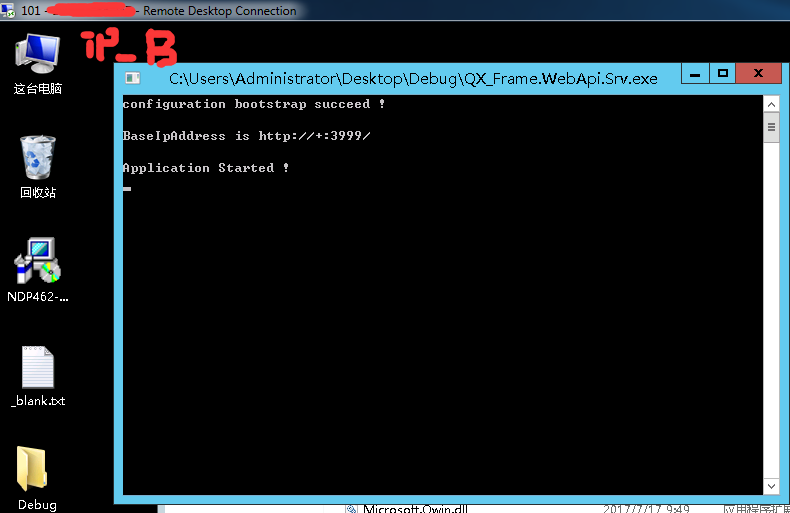

After building the three versions of the backend code, we deploy each one to its own server.

To keep the server information confidential, all IP addresses below are replaced with ip_a, ip_b, and ip_c.

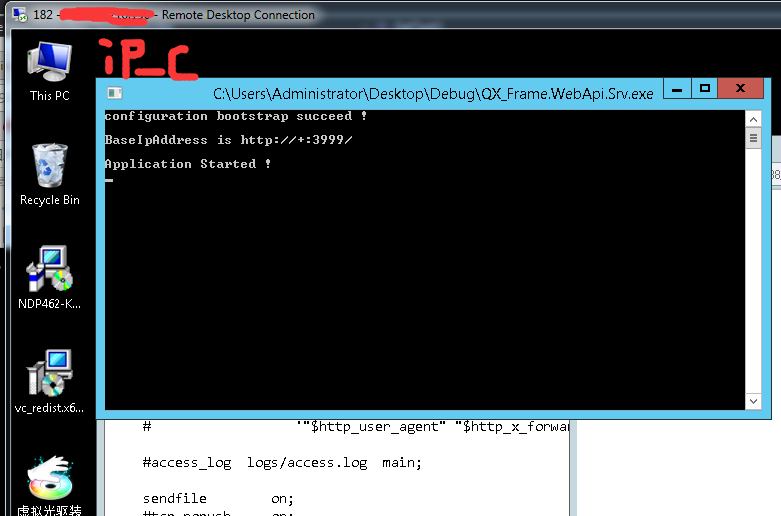

The third one is the server where we will deploy nginx:

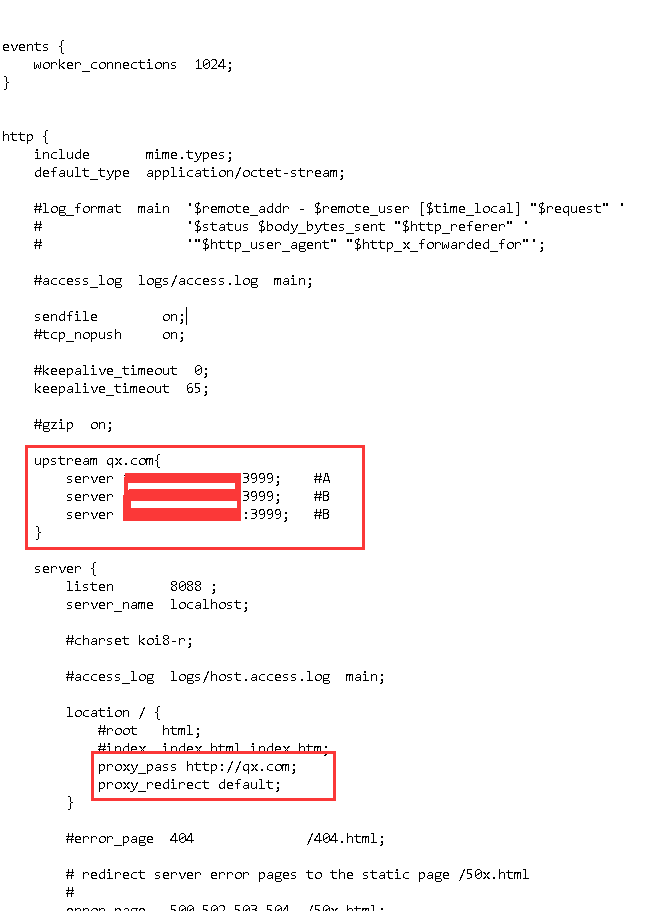

After deployment, we configure nginx:

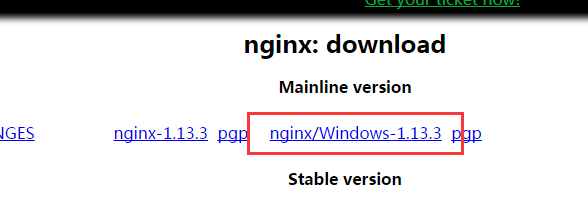

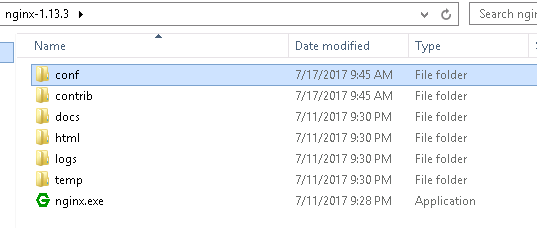

Download nginx:

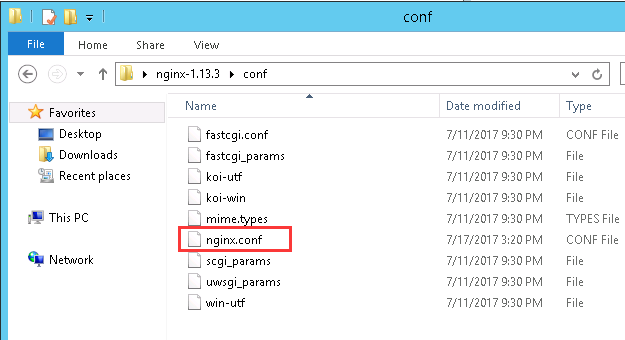

Then unzip it on the ip_c server and open nginx.conf in the conf folder.

Edit its content as follows:

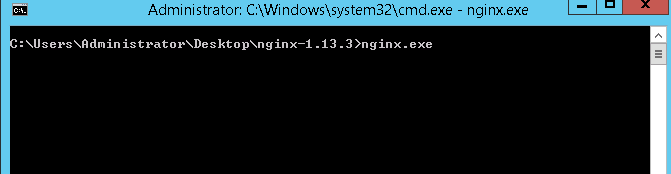
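The configuration itself appears only in the screenshot. As a rough guide, a minimal nginx.conf for this kind of setup might look like the sketch below. It is an assumption, not the author's file: the upstream name `webapi_backend`, port 8080 for nginx, and port 80 for the IIS sites are hypothetical, and ip_a, ip_b, and ip_c must be replaced with real addresses. When an `upstream` block names no balancing method, nginx distributes requests among its servers in round-robin order.

```nginx
# Minimal sketch of conf/nginx.conf (assumed values, not the author's file).
# ip_a, ip_b and ip_c are placeholders: replace them with real IP addresses.
worker_processes  1;

events {
    worker_connections  1024;
}

http {
    # Pool of IIS backends; no method given, so nginx uses round-robin.
    upstream webapi_backend {
        server ip_a:80;
        server ip_b:80;
        server ip_c:80;   # IIS site on the nginx host itself
    }

    server {
        # Hypothetical port: IIS on ip_c already listens on 80.
        listen       8080;
        server_name  localhost;

        location / {
            # Hand each request to one server from the pool.
            proxy_pass http://webapi_backend;
        }
    }
}
```

With this in place, clients call `http://ip_c:8080/` followed by the API route, and nginx forwards each call to one of the servers in the pool. If the IIS site on the nginx host is not meant to be a backend, remove its `server` line.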

Then we start the service:

Open cmd, switch to the nginx root directory, and launch nginx (on Windows this is usually done with `start nginx`).

That is all it takes to start the service. One small complaint: nginx prints no message confirming that it started successfully, which is not very user-friendly!

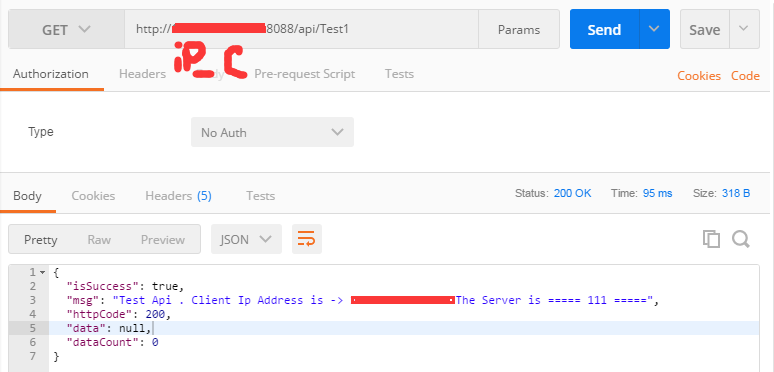

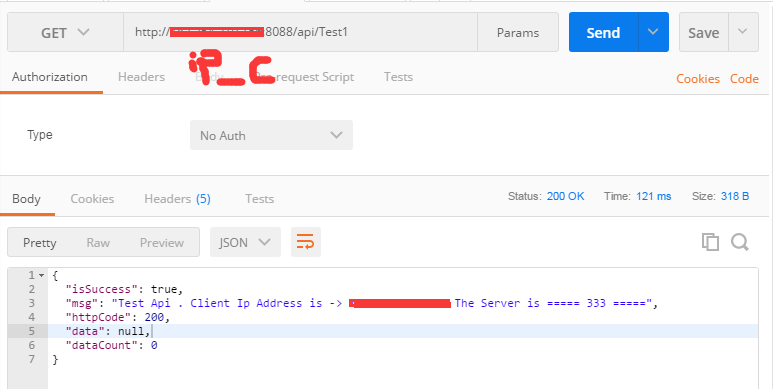

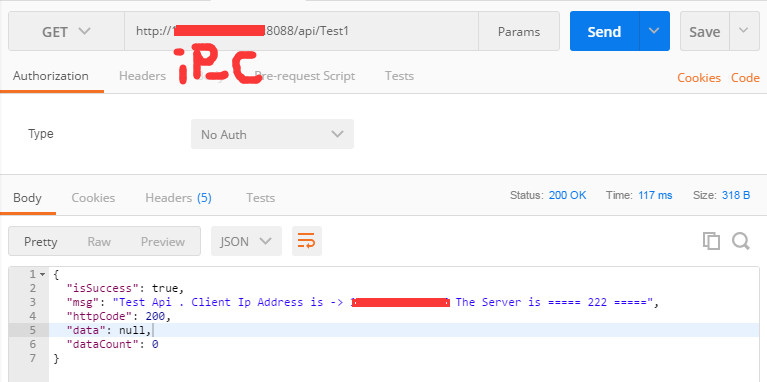

Next, open any API testing tool and send the same request three times:

Request 1:

Request 2:

Request 3:

As you can see, each request is handled by a different backend service. Because nginx spreads requests across its backend servers (round-robin by default), when a large number of clients access the same server address, the load that would otherwise fall on one server is shared by several servers, which is the purpose of load balancing.
