How to solve common problems of caching data based on Redis

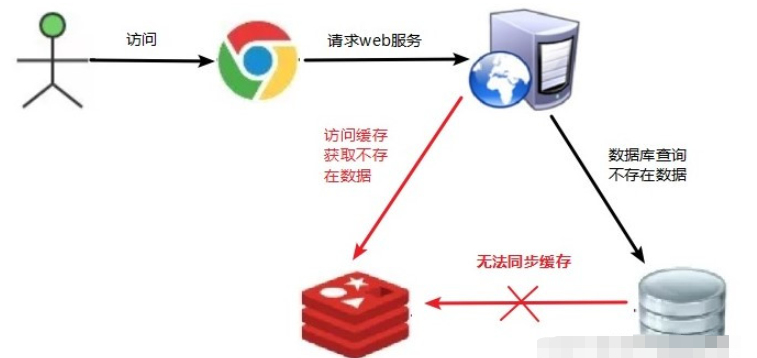

1. Cache penetration

1.1 Problem description

Cache penetration occurs when a client requests a key that exists neither in Redis nor in the database. Because the key can never be served from the cache, every request for it falls through to the data source.

For example, a request for user information with a non-existent user ID finds nothing in Redis or in the database. A flood of such requests may overwhelm the data source.

1.2 Solution

Consider data that is neither in the cache nor in the database. The cache is written passively on a miss, and for fault tolerance, data that cannot be found is not written to Redis. As a result, every request for non-existent data goes to the database, which defeats the purpose of caching.

(1) Cache empty results: if a query returns nothing (whether or not the data really does not exist), cache the empty result (null) anyway and give it a very short expiration time, no longer than five minutes.

(2) Set an access list (whitelist): use the bitmaps type to define the list of accessible IDs, with each ID used as an offset into the bitmap. On every access, check the requested ID against the bitmap; if its bit is not set, intercept the request and deny access.

(3) Use a Bloom filter: it can report that a key definitely does not exist, so such requests can be rejected before they reach the cache or the database.

(4) Monitor in real time: when the Redis hit rate drops rapidly, inspect the accessing clients and the data being requested, and set up a blacklist.
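Solutions (1) and (3) can be combined in a single read path. The following is a minimal sketch, not a definitive implementation. The names `BloomFilter`, `FakeCache`, `get_user`, `NULL_MARKER`, the key format `user:<id>` and the TTL values are all hypothetical. `FakeCache` is an in-memory stand-in so the example runs without a server; a real Redis client exposing `get` and `set(key, value, ex=...)` could be passed in its place.

```python
import hashlib

NULL_MARKER = b"__null__"   # hypothetical sentinel meaning "known to be absent"
NULL_TTL_SECONDS = 60       # short TTL for empty results (the text suggests at most five minutes)
DATA_TTL_SECONDS = 3600     # assumed TTL for real data


class BloomFilter:
    """Tiny in-process Bloom filter: no false negatives, occasional false positives."""

    def __init__(self, size_bits=8192, num_hashes=3):
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits // 8)

    def _positions(self, item):
        # Derive num_hashes bit positions by salting one hash function with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


class FakeCache:
    """In-memory stand-in for the get/set subset used here (TTL is recorded, not enforced)."""

    def __init__(self):
        self.store = {}
        self.ttls = {}

    def get(self, key):
        return self.store.get(key)

    def set(self, key, value, ex=None):
        self.store[key] = value
        self.ttls[key] = ex
        return True


def get_user(user_id, cache, bloom, load_from_db):
    """Return the user record as bytes, or None if the user does not exist."""
    if not bloom.might_contain(user_id):
        return None                       # definitely absent: skip cache and database
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return None if cached == NULL_MARKER else cached
    value = load_from_db(user_id)         # cache miss: query the data source once
    if value is None:
        cache.set(key, NULL_MARKER, ex=NULL_TTL_SECONDS)   # cache the empty result briefly
        return None
    cache.set(key, value, ex=DATA_TTL_SECONDS)
    return value
```

The Bloom filter would need to be populated with every valid ID (for example at startup and on each insert). The short TTL on the empty marker limits how long a newly created record can be hidden by a stale "absent" entry.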

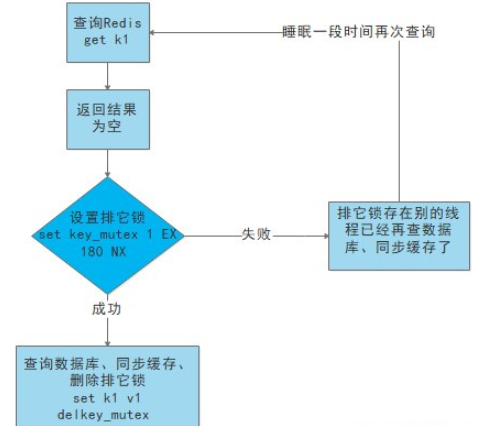

2. Cache breakdown

2.1 Problem description

Cache breakdown occurs when the requested key exists in the data source but its entry in Redis has expired. If a large number of concurrent requests find the cache expired at the same moment, they all go to the data source to load the data and write it back to Redis. This burst of concurrency may overwhelm the database service.

2.2 Solution

A key whose data is requested in large volume is hot data, and the "breakdown" problem needs to be considered for it.

(1) Preload hot data: before peak access, store hot data in Redis in advance and lengthen the expiration time of these hot keys.

(2) Adjust in real time: monitor which data is hot and adjust key expiration times accordingly.

(3) Use a lock:

When the cache entry has expired (the value read is empty), do not load from the db immediately.

First set a mutex key using a cache operation that reports whether it succeeded (such as Redis's SETNX).

If the operation succeeds, load from the db, write the value back to the cache, and finally delete the mutex key.

If the operation fails, another thread is already loading from the db; the current thread sleeps for a while and then retries the entire get-cache method.
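The locking steps above might be sketched as follows. This is an illustration under stated assumptions: `FakeCache`, `get_with_mutex`, the `lock:` key prefix and the TTL values are hypothetical, and the in-memory stand-in ignores expiry. Instead of plain SETNX, the sketch uses `set(..., nx=True, ex=...)`, which has the same set-if-absent behaviour but also gives the lock an expiry so that a crashed holder cannot block others forever.

```python
import time
import uuid

LOCK_TTL_SECONDS = 10     # assumed: lock auto-expires if its holder crashes
DATA_TTL_SECONDS = 3600   # assumed TTL for rebuilt cache entries


class FakeCache:
    """In-memory stand-in for the get/set/delete subset used here (TTLs are ignored)."""

    def __init__(self):
        self.store = {}

    def get(self, key):
        return self.store.get(key)

    def set(self, key, value, ex=None, nx=False):
        if nx and key in self.store:
            return None            # mirrors SET ... NX: no write if the key exists
        self.store[key] = value
        return True

    def delete(self, key):
        return 1 if self.store.pop(key, None) is not None else 0


def get_with_mutex(key, cache, load_from_db, retries=50, wait_seconds=0.05):
    """Read through the cache, letting only one caller rebuild an expired key."""
    lock_key = f"lock:{key}"
    for _ in range(retries):
        value = cache.get(key)
        if value is not None:
            return value                                  # cache hit
        token = uuid.uuid4().hex.encode()                 # identifies this caller's lock
        if cache.set(lock_key, token, nx=True, ex=LOCK_TTL_SECONDS):
            try:
                value = load_from_db(key)                 # only the lock holder hits the database
                if value is not None:
                    cache.set(key, value, ex=DATA_TTL_SECONDS)
                return value
            finally:
                if cache.get(lock_key) == token:          # release only our own lock
                    cache.delete(lock_key)
        else:
            time.sleep(wait_seconds)                      # another caller is loading: wait, then retry
    raise TimeoutError(f"could not load {key!r} after {retries} attempts")
```

Against a real Redis server, the check-then-delete release in the `finally` block is not atomic; a common approach is to perform the comparison and deletion in a single server-side script.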

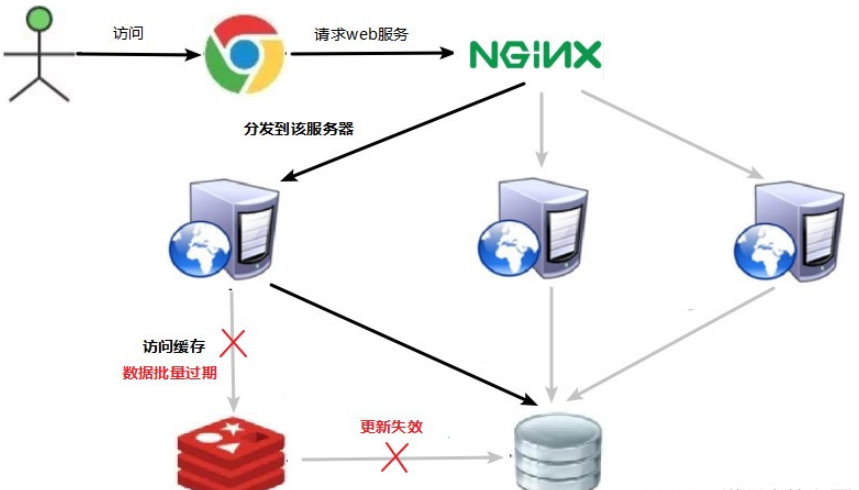

3. Cache avalanche

3.1 Problem description

Cache avalanche occurs when the requested data exists in the data source but the keys have expired (Redis deletes a key automatically when it expires). A large number of concurrent requests then access many different keys at the same time, all of them expired, so every request goes to the database. This volume of concurrent requests can overwhelm the database server. The difference from cache breakdown is that breakdown involves a single key, whereas an avalanche involves many keys.

3.2 Solution

The avalanche effect when the cache fails has a terrible impact on the underlying system!

(1) Build a multi-level cache architecture:

nginx cache + Redis cache + other caches (ehcache, etc.)

(2) Use locks or queues:

Use locks or queues to ensure that a large number of threads do not read from and write to the database all at once, so that a burst of concurrent requests does not fall on the underlying storage system when the cache fails. This approach is not suitable for high-concurrency scenarios.

(3) Set the expiration flag to update the cache:

Record whether the cached data is about to expire (set the flag to expire some time ahead of the real expiration). If the flag has expired, notify another thread to refresh the cache for the actual key in the background.

(4) Spread the cache expiration time:

For example, add a random value, such as 1-5 minutes, to the original expiration time. This lowers the chance that many cache entries expire at the same moment, making a collective expiration unlikely.
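Spreading the expiration time, as in solution (4), could look like the sketch below. The function names `jittered_ttl` and `warm_cache` and the one-hour base TTL are assumptions made for illustration; `cache` is any client exposing `set(key, value, ex=...)`.

```python
import random

BASE_TTL_SECONDS = 3600             # assumed base expiration
JITTER_RANGE_SECONDS = (60, 300)    # random extra of 1-5 minutes, as in the text


def jittered_ttl(base=BASE_TTL_SECONDS, jitter=JITTER_RANGE_SECONDS, rng=random):
    """Return the base TTL plus a random number of seconds within the jitter range."""
    low, high = jitter
    return base + rng.randint(low, high)


def warm_cache(cache, items, base=BASE_TTL_SECONDS):
    """Write each key with its own jittered TTL so the keys do not all expire together."""
    for key, value in items.items():
        cache.set(key, value, ex=jittered_ttl(base))
```

Because each key draws its own random offset, keys loaded in the same batch expire over a window of several minutes instead of at one instant.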
