'AI face-changing' defrauds millions in nine seconds? How to use technology to counter AI abuse?

Produced by Sohu Technology

Author|Zheng Songyi

Editor|Yang Jin

Recently, AI face-changing scams have occurred frequently, and "Musk's prediction" has been borne out sooner than expected. Two months ago, Musk and thousands of others signed an open letter calling for a six-month pause in the training of more powerful AI systems, out of concern that such systems would pose risks to society and humanity. They never imagined that those risks had already entered people's lives.

On May 22, the topic "AI fraud is breaking out across the country" shot up the trending search list. After the owner of a technology company in Fujian was defrauded of 4.3 million yuan in 10 minutes by a scammer who used AI face-changing technology to pose as a friend, a man in Anhui was defrauded of 1.32 million yuan in just 9 seconds by a scammer using the same technology. Netizens called it "impossible to guard against."

Xia Bohan, a lawyer at Beijing Huarang Law Firm, told Sohu Technology, "This behavior constitutes the crime of fraud, which is punishable by up to life imprisonment depending on the amount involved. As for deep synthesis service providers, under the "Regulations on the Management of Deep Synthesis of Internet Information Services" they should formulate and publish complete management rules and platform conventions, perform their management responsibilities in accordance with the law, and use technical or manual methods to review the input data and synthesis results of deep synthesis service users."

It is reported that, in addition to AI face-changing video fraud, some e-commerce anchors use AI face-changing technology to disguise themselves as celebrities such as "Jackie Chan" and "Yang Mi" while selling goods via livestream, thereby attracting traffic and cutting livestreaming costs. A representative of the Douyin platform told Sohu Technology, "The platform does not allow face-swapping live broadcasts. If one is found, the broadcast is stopped immediately and credit points are deducted from the offending merchant. Accounts that continue to broadcast with swapped faces after being penalized will have their live broadcast permission restricted."

Risks created by technology ultimately need to be remedied by technical means. Fortunately, as AI face-changing is increasingly abused, new models for detecting it are also being put to use.

How is "AI face changing" implemented?

AI face-changing technology was originally developed for film and television entertainment, game development and other fields, with the aim of giving users a more realistic viewing experience.

It is understood that AI face-changing is implemented in five main steps: face detection, face alignment, feature extraction, feature fusion, and image reconstruction.

Face detection locates the face and its facial features in the input image. Face alignment uses techniques such as affine transformations to bring the facial feature points of different faces into correspondence. Feature extraction uses deep learning models, such as convolutional neural networks (CNNs), to extract facial shape, texture, color and other features. Feature fusion uses linear or non-linear interpolation to blend the facial features of two different faces into new facial features. Finally, image reconstruction maps the new facial features back into image space to generate a new image with the face-changing effect.
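Two of these steps, alignment and fusion, can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not how any particular product works: the alignment uses a similarity transform (rotation, uniform scale and translation, a restricted form of affine transformation) computed from two eye landmarks, the fusion is plain linear interpolation between two feature vectors, and all coordinates, feature values and function names are hypothetical.

```python
def similarity_from_eyes(src_left, src_right, dst_left, dst_right):
    """Return (a, b) such that z -> a*z + b maps the source eyes onto the target eyes.

    Points are (x, y) tuples treated as complex numbers: `a` encodes rotation
    and uniform scale, `b` encodes translation.
    """
    s1, s2 = complex(*src_left), complex(*src_right)
    d1, d2 = complex(*dst_left), complex(*dst_right)
    if s1 == s2:
        raise ValueError("source eye points must be distinct")
    a = (d2 - d1) / (s2 - s1)
    b = d1 - a * s1
    return a, b


def apply_transform(transform, point):
    """Apply the transform returned by similarity_from_eyes to one (x, y) point."""
    a, b = transform
    z = a * complex(*point) + b
    return (z.real, z.imag)


def fuse_features(feat_a, feat_b, alpha):
    """Linear interpolation: alpha=0 keeps feat_a, alpha=1 keeps feat_b."""
    if len(feat_a) != len(feat_b):
        raise ValueError("feature vectors must have the same length")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return [(1.0 - alpha) * x + alpha * y for x, y in zip(feat_a, feat_b)]


# Hypothetical eye landmarks in pixels: the source face is rotated and smaller.
template_eyes = ((30.0, 40.0), (70.0, 40.0))
source_eyes = ((10.0, 10.0), (10.0, 30.0))

t = similarity_from_eyes(*source_eyes, *template_eyes)
aligned_nose = apply_transform(t, (0.0, 20.0))  # a third landmark follows along

# Hypothetical 3-dimensional feature vectors; real models use far more dimensions.
fused = fuse_features([0.0, 1.0, 2.0], [1.0, 1.0, 0.0], alpha=0.25)
```

A full affine transformation would need at least three landmark pairs, and a real system would fuse features produced by a neural network rather than hand-written lists.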

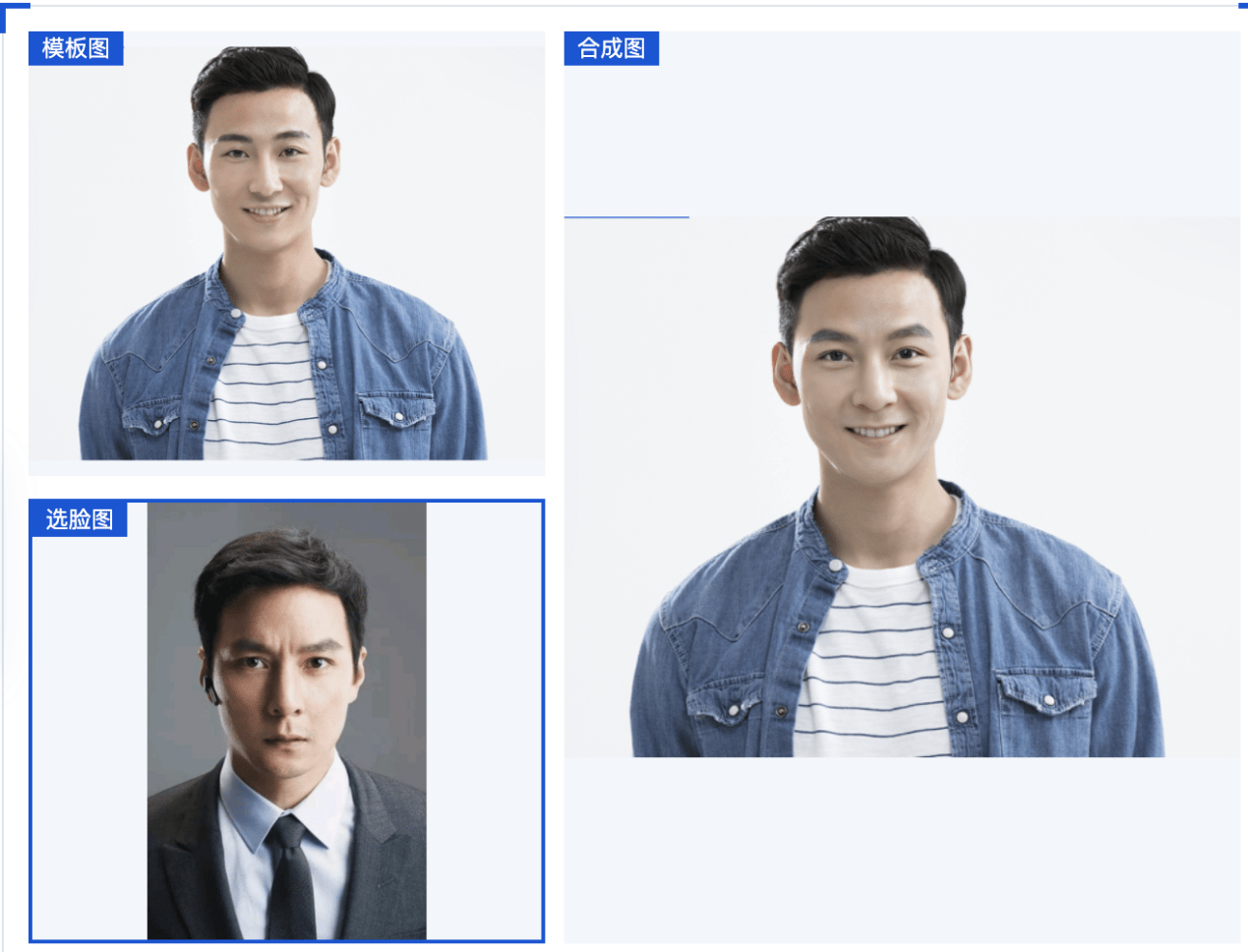

Sohu Technology chose the currently popular "Tencent Cloud AI Face Fusion" application as test software to see how far "AI face-changing" technology can go in reshaping a portrait.

Sohu Technology randomly selected a picture of a man as the template and used a picture of Daniel Wu as the face to blend in. In the test result, the AI-processed portrait showed Daniel Wu's characteristics around the eyebrows and eyes, but the hairstyle and other features outside the face did not change.

According to a disclosure by the Baotou police, on April 20 a friend of Mr. Guo, the legal representative of a technology company in Fuzhou, suddenly contacted him via WeChat video. After the two chatted briefly, the friend told Mr. Guo that a friend of his was bidding on a project elsewhere and needed a deposit of 4.3 million yuan, which had to be paid by a company-to-company transfer, so he wanted to borrow Mr. Guo's company account to make the payment. The friend asked Mr. Guo for his bank card number, claimed that the money had already been transferred to Mr. Guo's account, and sent him a screenshot of the bank transfer receipt via WeChat. Trusting the video chat, Mr. Guo did not verify whether the money had arrived and transferred 4.3 million yuan to the other party in two installments. After the transfer, Mr. Guo sent his friend a WeChat message saying that the matter had been settled. To his surprise, his friend replied with a question mark.

Mr. Guo called his friend, who said that nothing of the sort had happened. Only then did he realize that he had fallen for a "high-end" scam: the other party had used AI face-changing technology to pose as his friend and defraud him.

IT technicians told Sohu Technology that some hackers specialize in developing real-time AI face-changing tools, which work much like the real-time beautification filters now built into mobile phones. Criminals may exploit vulnerabilities to steal the WeChat account of the victim's friend, obtain photos or videos of that friend from social platforms, capture their own facial movements with a camera, and then use a real-time AI face-changing tool to fuse the friend's facial features with their own, so that they can pose as the friend in a conversation and carry out the fraud.

What is the progress of technology to counter AI abuse?

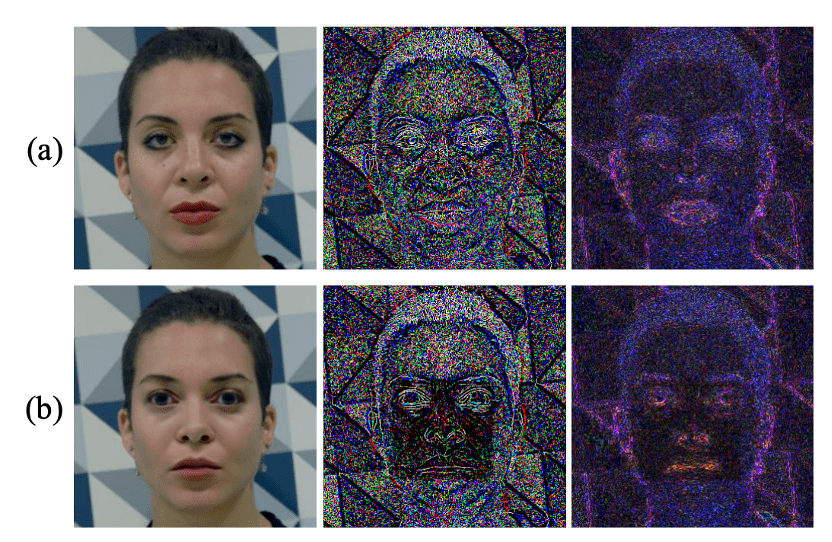

There is always someone more skilled. In 2020, Microsoft Research Asia and Peking University jointly published the paper "Face X-ray for More General Face Forgery Detection". Face X-ray, a new model for detecting AI face-changing, has two major attributes: it generalizes to unknown face-swapping algorithms, and it provides explainable face-swapping boundaries.

Guo Baining, one of the main authors of the paper and executive vice president of Microsoft Research Asia, said, "During the image collection process, each image has its own unique characteristics, which may come from the shooting hardware or the processing software. As long as the images are not generated in one piece, they will leave clues during the fusion process. These clues are invisible to the human eye, but can be captured by deep learning."

The paper demonstrates image noise analysis and error level analysis. Real images show consistent noise patterns, whereas images with a replaced face show clear differences (the pictures in group a are real faces and those in group b are synthetic images; the middle column is the noise analysis image and the right column is the error level analysis image).
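The idea behind noise analysis can be sketched in a few lines of Python. This is a deliberately simplified stand-in, not the Face X-ray method, which trains a deep network: it assumes a grayscale image stored as a list of rows, estimates noise with a basic high-pass residual, and flags blocks whose noise level is far from the median. The synthetic image, block size, threshold ratio and function names are all assumptions made for illustration.

```python
import random
import statistics


def noise_residual(img):
    """High-pass residual: each pixel minus the mean of its 4 neighbours (edges clamped)."""
    h, w = len(img), len(img[0])
    res = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            up = img[max(y - 1, 0)][x]
            down = img[min(y + 1, h - 1)][x]
            left = img[y][max(x - 1, 0)]
            right = img[y][min(x + 1, w - 1)]
            res[y][x] = img[y][x] - (up + down + left + right) / 4.0
    return res


def block_noise_levels(img, block=8):
    """Standard deviation of the residual inside each block x block tile."""
    res = noise_residual(img)
    h, w = len(img), len(img[0])
    levels = {}
    for by in range(0, h - block + 1, block):
        for bx in range(0, w - block + 1, block):
            tile = [res[y][x] for y in range(by, by + block) for x in range(bx, bx + block)]
            levels[(by // block, bx // block)] = statistics.pstdev(tile)
    return levels


def suspicious_blocks(levels, ratio=2.0):
    """Blocks whose noise level exceeds `ratio` times the median block level."""
    median = statistics.median(levels.values())
    return sorted(key for key, value in levels.items() if value > ratio * median)


# Synthetic 32x32 "photo": a smooth gradient plus weak, uniform sensor-like noise.
rng = random.Random(0)
image = [[0.5 * x + 0.25 * y + rng.gauss(0.0, 1.0) for x in range(32)] for y in range(32)]

# Simulated splice: rows and columns 8..15 come from a source with stronger noise.
for y in range(8, 16):
    for x in range(8, 16):
        image[y][x] = 0.5 * x + 0.25 * y + rng.gauss(0.0, 6.0)

levels = block_noise_levels(image)
flagged = suspicious_blocks(levels)  # (row, column) indices of inconsistent blocks
```

In this toy setup the spliced region differs from its surroundings only in noise strength, which the eye would struggle to notice in the pixel values but which stands out in the residual statistics. Real forgeries are much subtler, which is why the paper relies on a learned detector.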

It can be seen that effective identification of "AI face-changing" has a technical basis and is not an unsolvable problem. Domestic technology companies represented by Tencent Cloud are using "Anti-Deepfake (ATDF)" technology to develop "face-changing screening" applications, aiming to use AI technology to counter the abuse of AI technologies such as face-changing.

According to reports, Anti-Deepfake techniques fall into two categories: passive detection and active defense. Passive detection focuses on collecting evidence after the fact, that is, examining videos that have already been produced and disseminated to determine whether they contain fake faces. Active defense focuses on protection in advance: before face data is released and disseminated, hidden information such as watermarks or adversarial noise is added to it, so that the source can be traced or malicious users are prevented from using the noise-added face videos for forgery, thereby protecting the face.
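As a rough illustration of the "hidden information" idea in active defense, the following Python sketch hides a short source tag in the least significant bits of pixel values and reads it back. Least-significant-bit embedding is chosen here only because it is easy to follow; it is an assumption of this sketch, not the technique the article's vendors describe, and it would not survive compression or editing the way a production watermark must. The pixel data, tag and function names are hypothetical.

```python
def embed_watermark(pixels, message):
    """Hide `message` (bytes) in the least significant bits of 8-bit pixel values."""
    bits = [(byte >> shift) & 1 for byte in message for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("image too small for this message")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked


def extract_watermark(pixels, length):
    """Read `length` bytes back from the least significant bits."""
    out = bytearray()
    for i in range(length):
        byte = 0
        for pixel in pixels[i * 8:(i + 1) * 8]:
            byte = (byte << 1) | (pixel & 1)
        out.append(byte)
    return bytes(out)


# Hypothetical flattened 8-bit grayscale image and source tag.
original = [(37 * i) % 256 for i in range(128)]
tag = b"user-42"

marked = embed_watermark(original, tag)
recovered = extract_watermark(marked, len(tag))
max_change = max(abs(a - b) for a, b in zip(original, marked))
```

Each pixel changes by at most one intensity level, so the mark is invisible, while anyone who knows the scheme can recover the tag and trace where the image came from.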

Article 6 of the "Regulations on the Management of Deep Synthesis of Internet Information Services", jointly issued by the Cyberspace Administration of China, the Ministry of Industry and Information Technology, and the Ministry of Public Security, clearly stipulates that "No organization or individual may use deep synthesis services to produce, copy, publish, or disseminate information prohibited by laws and administrative regulations, or use deep synthesis services to engage in activities prohibited by laws and administrative regulations, such as endangering national security and interests, damaging the country's image, infringing on social and public interests, disrupting economic and social order, or infringing on the legitimate rights and interests of others."

Xia Bohan emphasized, "In addition to AI face-changing video fraud, many mobile applications currently require face-scan authentication, and criminals may use AI face-changing technology to impersonate the real rights holder. This requires us to strictly protect our personal information against leaks, and setting up several other identity verification methods is also recommended. Software vendors, for their part, need to further develop their technology to improve security and keep criminals from taking advantage."
