Meta's self-developed AI chip progress: the first AI chip will launch in 2025, alongside an AI chip for video processing

May 19 news: According to TechCrunch, at an online event this morning Facebook parent company Meta disclosed for the first time the progress of its self-developed AI chips, which can support the generative AI technology behind its recently launched ad design and creative tools.

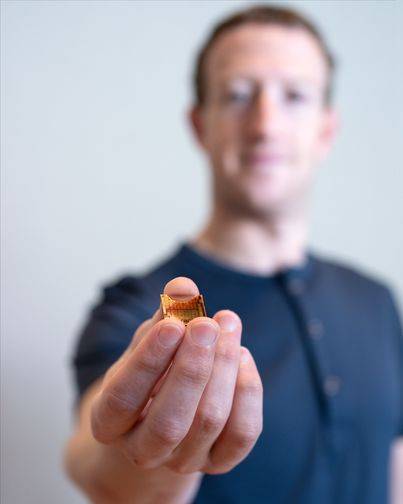

△Meta CEO Zuckerberg shows off the first self-developed AI chip MTIA

Alexis Bjorlin, vice president of infrastructure at Meta, said: “Building our own [hardware] capabilities gives us control over every layer of the stack, from data center design to training frameworks. This level of vertical integration can push the boundaries of artificial intelligence research on a large scale.”

The first self-developed AI chip MTIA

Over the past decade or so, Meta has spent billions of dollars recruiting top data scientists and building new kinds of artificial intelligence, including the AI that now powers the discovery engines, moderation filters, and ad recommenders across its apps and services. The company has been striving to turn its many ambitious AI research innovations into products, especially in the area of generative AI.

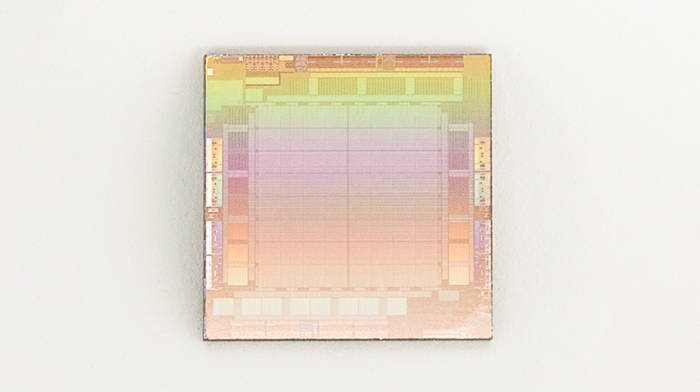

Since 2016, leading Internet companies have been actively developing cloud AI chips. Google has been designing and deploying its self-developed AI chips, called Tensor Processing Units (TPUs), to train generative AI systems such as PaLM 2 and Imagen. Amazon offers AWS customers two self-developed AI chips, AWS Trainium and AWS Inferentia. Microsoft is also rumored to be working with AMD to develop an AI chip called Athena.

Previously, Meta ran its AI workloads primarily on a combination of third-party CPUs and custom chips designed to accelerate AI algorithms, although CPUs tend to be less efficient than GPUs at such tasks. To turn the situation around, Meta developed its first-generation self-developed AI chip, MTIA (MTIA v1), in 2020, based on a 7nm process.

Meta calls the AI chip Meta Training and Inference Accelerator, or MTIA for short, and describes it as part of a “family” of AI chips that accelerate AI training and inference workloads. An MTIA is an ASIC, a chip that combines different circuits on a single substrate, allowing it to be programmed to perform one or more tasks in parallel.

According to Meta, MTIA v1 is manufactured on a 7-nanometer process, and its 128MB of internal memory can be expanded to as much as 128GB. Meta said that MTIA is designed specifically for workloads related to AI recommendation systems, helping surface the best posts and present them to users faster, and that its computing performance and processing efficiency are better than a CPU's. In addition, in benchmarks designed by Meta, MTIA was also more efficient than a GPU at processing "low complexity" and "medium complexity" AI models.

Meta said there is still work to be done on the memory and networking of MTIA chips, which become bottlenecks as AI models grow and workloads have to be distributed across multiple chips. To that end, Meta recently acquired the Oslo-based AI networking technology team of British chip unicorn Graphcore. For now, MTIA focuses on inference rather than training for the "recommendation workloads" across Meta's family of apps.

Meta emphasized that it will continue to improve MTIA, which has "significantly" improved the company's efficiency in terms of performance per watt when running recommendation workloads, in turn allowing Meta to run "more enhanced" and "cutting-edge" artificial intelligence workloads.

According to the plan, Meta will officially launch its self-developed MTIA chip in 2025.

Meta’s AI supercomputer RSC

According to reports, Meta originally planned to roll out its self-developed custom AI chips on a large scale in 2022, but ultimately delayed the rollout and instead ordered billions of dollars' worth of Nvidia GPUs for its supercomputer, the Research SuperCluster (RSC), which required a major redesign of several of its data centers.

According to reports, RSC debuted in January 2022, was assembled in partnership with Penguin Computing, Nvidia, and Pure Storage, and has completed its second phase of expansion. Meta says it now contains a total of 2,000 Nvidia DGX A100 systems, equipped with 16,000 Nvidia A100 GPUs. Even so, RSC's computing power currently lags behind the AI supercomputers of Microsoft and Google; Google claims its AI-focused supercomputer is powered by 26,000 Nvidia H100 GPUs. Meta notes that the advantage of RSC is that it allows researchers to train models using actual examples from Meta's production systems, unlike the company's previous AI infrastructure, which leveraged only open source and publicly available data sets.

The RSC supercomputer is advancing AI research in multiple areas, including generative AI. "This is really about the productivity of AI research," a Meta spokesperson said. "We want to provide AI researchers with state-of-the-art infrastructure that enables them to develop models and gives them a training platform to advance AI."

Meta claims that at its peak, RSC could reach nearly 5 exaflops of computing power, making it one of the fastest in the world.
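The RSC figures quoted in this section can be cross-checked with some simple arithmetic. The sketch below is only a rough sanity check, not Meta's own calculation. It assumes 8 GPUs per DGX A100 system and a peak of 312 teraflops per A100 (Nvidia's published peak for dense FP16/BF16 Tensor Core math); neither figure comes from this article.

```python
# Rough sanity check of the RSC figures quoted in the article.
# Assumptions (NOT from the article): 8 GPUs per DGX A100 system and a
# vendor-quoted peak of 312 teraflops per A100 (dense FP16/BF16 Tensor Core).
DGX_SYSTEMS = 2_000          # from the article
GPUS_PER_DGX = 8             # assumption: standard DGX A100 configuration
PEAK_TFLOPS_PER_GPU = 312    # assumption: theoretical peak, not sustained throughput

total_gpus = DGX_SYSTEMS * GPUS_PER_DGX
# 1 exaflop = 1,000,000 teraflops
peak_exaflops = total_gpus * PEAK_TFLOPS_PER_GPU / 1_000_000

print(f"GPUs: {total_gpus:,}")
print(f"Theoretical peak: {peak_exaflops:.3f} exaflops")
```

Under these assumptions the total comes to 16,000 GPUs and about 4.99 exaflops, which is consistent with the "nearly 5 exaflops" claim. The result is a theoretical peak rather than a measure of sustained performance.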

Meta uses RSC to train LLaMA, an acronym for "Large Language Model Meta AI". Meta says the largest LLaMA model was trained on 2,048 A100 GPUs and took 21 days.

"Building our own supercomputing capabilities gives us control over every layer of the stack, from data center design to training frameworks," a Meta spokesperson added. "RSC will help Meta's AI researchers build new and better AI models that learn from trillions of examples; work across hundreds of different languages; seamlessly analyze text, images, and videos together; develop new augmented reality tools; and more."

In the future, Meta may introduce its self-developed AI chip MTIA into RSC to further improve its AI performance.

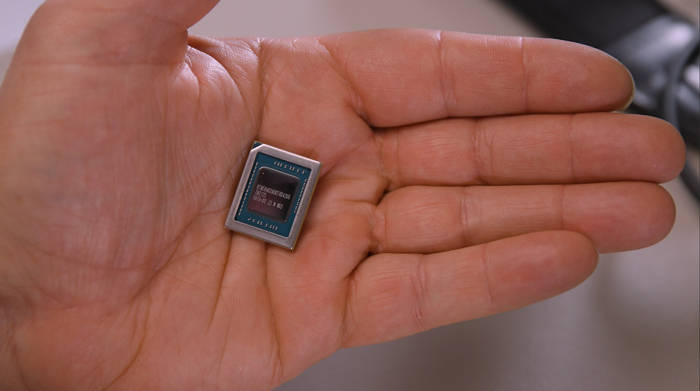

AI chip MSVP for video processing

In addition to MTIA, Meta is also developing another chip called the Meta Scalable Video Processor (MSVP), designed mainly to meet the ever-growing data processing needs of video on demand and live streaming. Meta ultimately hopes to have MSVP perform most of its mature and stable audio and video processing work.

In fact, Meta began conceiving custom server-side video processing chips many years ago, and announced ASICs for video transcoding and inference work in 2019. MSVP is the culmination of some of those efforts and a new push for competitive advantage, especially in live video streaming.

“On Facebook alone, people spend 50% of their time watching videos,” Meta technical directors Harikrishna Reddy and Yunqing Chen wrote in a blog post published on the morning of the 19th. “To serve the wide variety of devices around the world (mobile, laptop, TV, etc.), a video uploaded to Facebook or Instagram is transcoded into multiple bitstreams with different encoding formats, resolutions and qualities... MSVP is programmable and scalable and can be configured to efficiently support the high-quality transcoding required for VOD as well as the low latency and faster processing times required for live streaming.”
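To make the "multiple bitstreams" point concrete, the following minimal sketch shows how a single upload can fan out into several renditions. It is purely illustrative: the `Rendition` class, the `build_ladder` helper, and the codec and resolution lists are hypothetical and do not describe Meta's actual encoding ladder or the MSVP interface.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Rendition:
    """One output bitstream: a codec paired with a vertical resolution."""
    codec: str
    height: int  # vertical resolution in pixels


# Hypothetical values for illustration only; not Meta's actual ladder.
CODECS = ["h264", "vp9"]
HEIGHTS = [360, 720, 1080]


def build_ladder(source_height: int) -> list[Rendition]:
    """Return every codec/resolution pair that does not upscale the source."""
    return [
        Rendition(codec, height)
        for codec, height in product(CODECS, HEIGHTS)
        if height <= source_height
    ]


# A 720p upload fans out into one rendition per codec and allowed resolution.
ladder = build_ladder(source_height=720)
for rendition in ladder:
    print(f"{rendition.codec} @ {rendition.height}p")
```

Even this toy ladder turns one 720p upload into four separate encodes, which suggests why offloading transcoding to dedicated hardware becomes attractive at very large upload volumes.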

△MSVP

Meta says its plan is to eventually offload most "stable and mature" video processing workloads to MSVP and use software video encoding only for workloads that require specific customization and "significantly" higher quality. Meta says it will continue to improve video quality with MSVP using pre-processing methods such as intelligent noise reduction and image enhancement, as well as post-processing methods such as artifact removal and super-resolution.

“In the future, MSVP will enable us to support more of Meta’s most important use cases and needs, including short-form video, enabling efficient delivery of generative AI, AR/VR and other Metaverse content,” said Reddy and Chen.

Editor: Xinzhixun-Rurounijian
