AIGC + robot = embodied intelligence? Two of Silicon Valley's coolest figures agree, and A-shares preview the "next wave"

New AI themes keep emerging one after another. This time it is the turn of "embodied intelligence", a concept formed by fusing robots with AI.

Musk, the "Iron Man of Silicon Valley", and Jensen Huang, the "Godfather of Graphics Cards" who loves black leather jackets, have both spoken positively about the concept, and their views happen to coincide.

On May 16 local time, Tesla held its 2023 annual shareholder meeting. Musk said at the meeting that humanoid robots will be Tesla's main source of long-term value: "If the ratio of humanoid robots to people is about 2:1, demand for robots could reach 10 billion or even 20 billion units, far exceeding the number of electric vehicles."

On the same day, Nvidia founder and CEO Jensen Huang said at the ITF World 2023 semiconductor conference that the next wave of AI will be "embodied intelligence". He also presented Nvidia VIMA, a multimodal embodied AI system capable of performing complex tasks guided by visual and text prompts.

In the A-share secondary market today, related stocks rose strongly, with many hitting the daily limit-up. As of the close, MOONS Electric, Youde Precision and OBI Zhongguang had closed at the daily limit, while Robotics, ArcSoft Technology, Yuntian Lifei, Haozhi Electromechanical, Eft and others were up more than 10%.

Musk has been emphasizing the potential of his humanoid robots since 2021 and has consistently promoted the broad prospects of "robot AI". With AIGC (generative AI) now hot, the popularization of the term "embodied intelligence" comes at just the right time, pushing "robot AI" back to center stage.

How are AIGC, embodied intelligence and robots connected?

Tesla humanoid robot ≈ embodied intelligent robot

People are already familiar with Musk's humanoid robots. In terms of end use, Tesla's humanoid robots and embodied intelligent robots are nearly the same thing. Musk's long-term goal is for Tesla robots to adapt to their environment and do what humans do, so that they can serve ordinary households: cooking, mowing lawns, caring for the elderly and so on.

An embodied intelligent robot is an intelligent agent that has a physical entity, can interact multi-modally with the real world, perceive and understand the environment like humans, and complete tasks through autonomous learning. Dieter Fox, senior director of robotics research at NVIDIA and a professor at the University of Washington, previously pointed out that a key goal of robotics research is to build robots that are helpful to humans in the real world.

AIGC provides new ideas for breaking through the technical bottlenecks of embodied intelligence

Embodied intelligence is a fundamental question in the science of intelligence, and a hard one. AIGC offers new ideas for realizing it.

In 1950, Turing first proposed the concept of embodied intelligence in his paper "Computing Machinery and Intelligence". Over the following decades, however, embodied intelligence made little progress because of technical constraints.

As Fei-Fei Li, a professor of computer science at Stanford University, put it, "The meaning of embodiment is not the body itself, but the overall needs and functions of interacting with the environment and doing things in it." Interacting with people and the environment is the first step toward embodied robots that can understand and transform the objective world. The most direct obstacle here is that controlling robots relies heavily on handwritten code, so a "Tower of Babel" stands between humans and artificial intelligence.

In the era of AIGC, large AI models such as GPT offer a new solution. Many researchers are trying to use multimodal large language models as a bridge for communication between humans and robots: by jointly training on images, text and embodied data and introducing multimodal inputs, they aim to improve the model's understanding of real-world objects and help robots handle embodied reasoning tasks.

Microsoft, Google, Alibaba and others are actively exploring

Embodied intelligence has become a frontier research direction in international academia. Institutions including the US National Science Foundation are promoting its development, major international academic conferences are paying increasing attention to embodied-intelligence work, and top US universities have begun to form an embodied intelligence research community. Industry is also moving fast, with Google and Microsoft taking the lead; both are trying to "inject a soul" into robots with large models. Google's PaLM-E model is closely tied to embodied intelligence, and completing embodied robot tasks has always been a focus of its research. Microsoft is exploring how to extend OpenAI's ChatGPT into robotics, so that people can intuitively control platforms such as robotic arms, drones and home-assistance robots with language.

In China, Alibaba is also experimenting with connecting its Qianwen large model to industrial robots. By typing a sentence of natural language into the DingTalk chat box, a user can remotely command the robot to work.
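To make the idea of language as a bridge between people and robots more concrete, here is a minimal Python sketch. It assumes a hypothetical robot exposing a small library of high-level skills (`move_to`, `grasp` and `release` are invented for illustration) and a language model that has been prompted to reply only with one skill call per line. It is not how Microsoft's or Alibaba's systems actually work; it only illustrates one common pattern, checking every model-generated step against a whitelist before the robot does anything.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical high-level skills; a real robot would define its own set.
def move_to(target: str) -> str:
    return f"moving to {target}"

def grasp(obj: str) -> str:
    return f"grasping {obj}"

def release(obj: str) -> str:
    return f"releasing {obj}"

# Whitelist of skills the language model is allowed to call.
SKILLS: Dict[str, Callable[[str], str]] = {
    "move_to": move_to,
    "grasp": grasp,
    "release": release,
}

# Each plan step must look like "skill(argument)".
CALL_PATTERN = re.compile(r"^\s*(\w+)\((\w+)\)\s*$")

def execute_plan(plan_text: str) -> List[str]:
    """Validate every line of a model-generated plan, then run it."""
    calls: List[Tuple[str, str]] = []
    for line in plan_text.strip().splitlines():
        match = CALL_PATTERN.match(line)
        if match is None:
            raise ValueError(f"unparseable step: {line!r}")
        name, arg = match.groups()
        if name not in SKILLS:
            raise ValueError(f"unknown skill: {name}")
        calls.append((name, arg))
    # Nothing runs until the whole plan has passed validation.
    return [SKILLS[name](arg) for name, arg in calls]

if __name__ == "__main__":
    # Assumption: in practice this text would come from a language model
    # given the user's request plus the list of allowed skills.
    plan = """
    move_to(table)
    grasp(cup)
    move_to(sink)
    release(cup)
    """
    for log in execute_plan(plan):
        print(log)
```

Validating the whole plan before executing any step means a single malformed or unknown instruction stops the robot before it moves, rather than halfway through a task.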

The main investment themes are becoming clearer

From a technical point of view, realizing embodied intelligence depends on three major links: perception, imagination and execution. Specifically, an embodied intelligent robot needs flexible thinking, strong execution, good communication with people and human-like self-learning. Strong execution depends on the robot's physical hardware, that is, its mechanical body and basic motion control. Perception and imagination show up externally as the robot's ability to hear, see and speak; this requires optimizing software and algorithms with AI techniques such as machine vision, deep learning and reinforcement learning, and training on large datasets covering text, vision, speech and scenes across many different environments.

Many institutions see the robotics industry, multimodal large models and machine vision as new directions for profit. Guosheng Securities said that multimodal GPT can greatly assist the development of the robotics industry, and that within the next 5 to 10 years, large models combined with complex multimodal solutions are expected to gain complete capabilities for interacting with the world and to be applied in fields such as general-purpose robots. Tianfeng International Securities stated that AI is the mother of machine vision and deep learning is its technical fortress, and that Meta's recently released SAM model may help machine vision reach its own "GPT moment".

Companies in these industries, or with the corresponding technologies, have become a focus of attention. Investors have asked listed companies such as Interactive Yishang, Lingyunguang, Huichuan Technology and Haitian Ruixiang about their connection with embodied intelligence. Among them, Lingyunguang is one of the few to give a clear answer, saying that it uses multimodal fusion technology to meet customers' intelligent-manufacturing needs across multiple scenarios, which it described as a necessary step in the development of embodied intelligence.

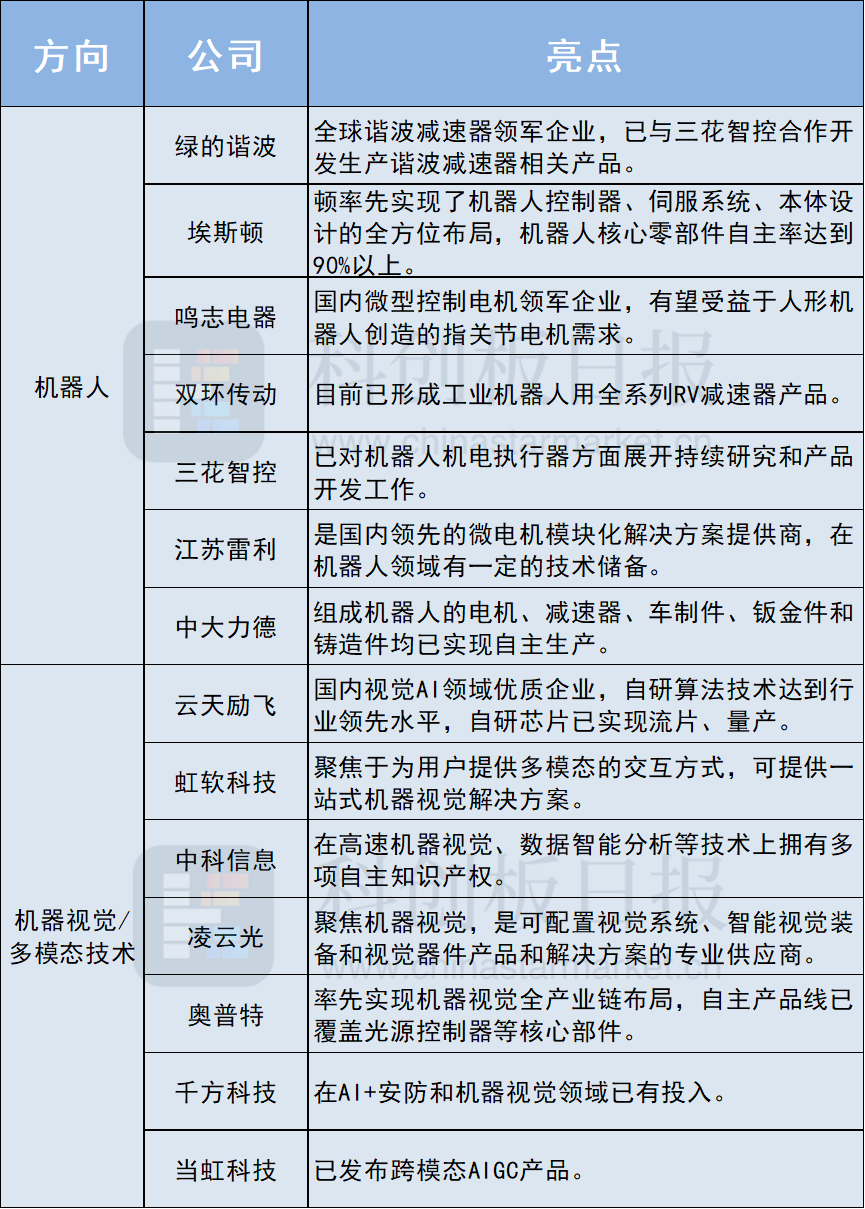

Based on institutional research reports and an incomplete tally by the Science and Technology Innovation Board Daily, the following companies have also drawn attention:

Source: Science and Technology Innovation Board Daily
