Someone has finally explained the current state of GPT! OpenAI's latest talk goes viral, and no wonder Musk hand-picked this genius

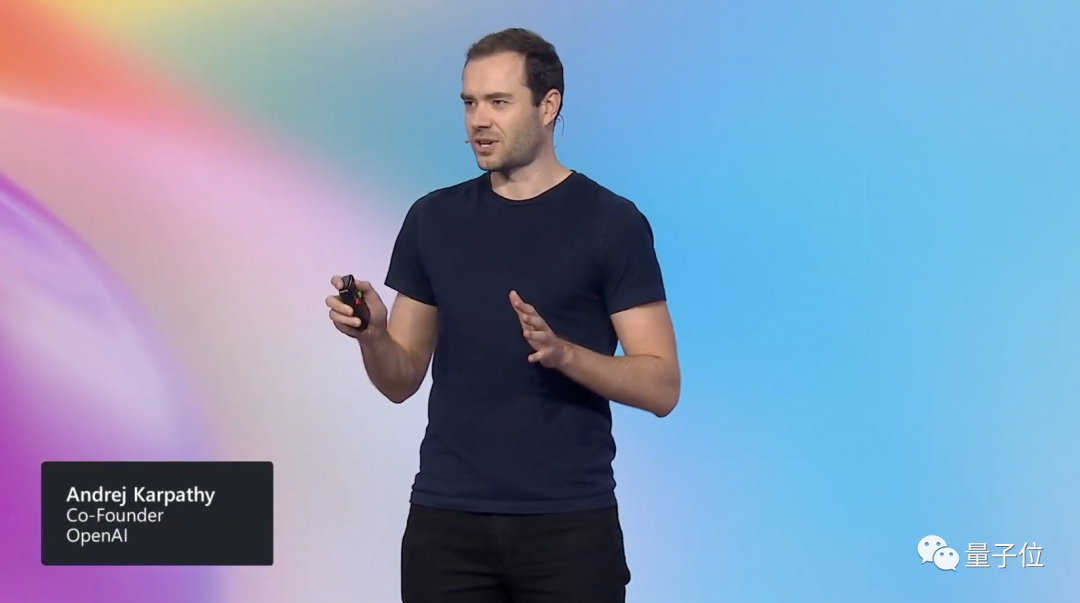

Following the release of Windows Copilot, one talk stole the show at the Microsoft Build conference.

In his talk, former Tesla AI Director Andrej Karpathy argued that Tree of Thoughts is similar to the Monte Carlo Tree Search (MCTS) used by AlphaGo. Brilliant!

Netizens exclaimed: this is the most detailed and interesting guide to using large language models and GPT-4!

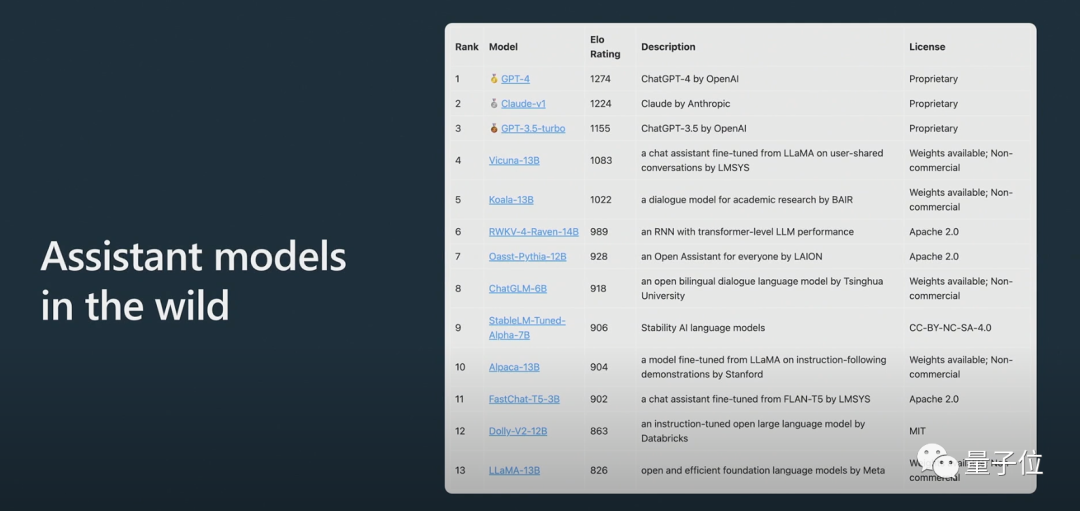

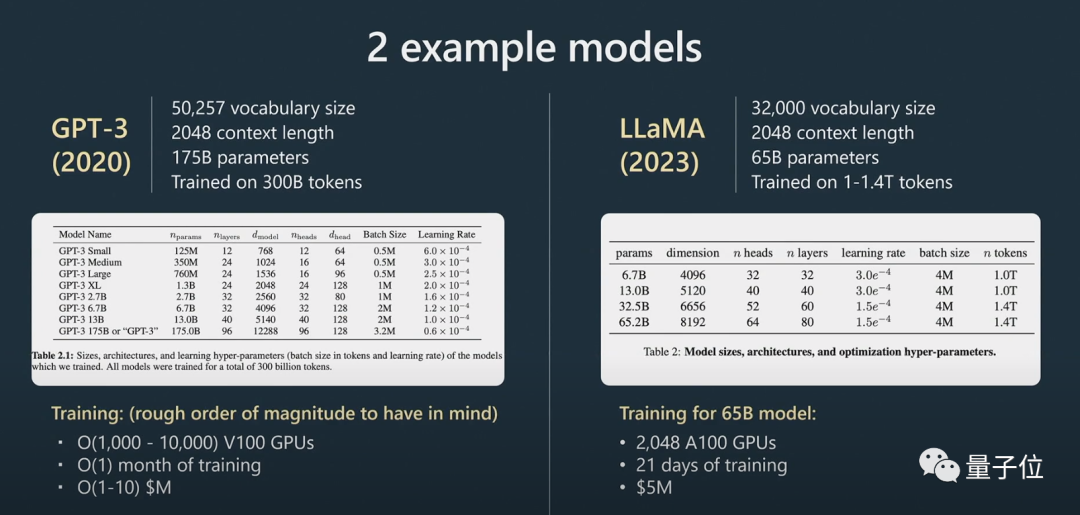

Karpathy also noted that LLaMA 65B is "significantly more powerful than GPT-3 175B" because it was trained for longer on much more data, and he introduced ChatBot Arena, an anonymous arena for comparing large models:

Claude's score falls between those of GPT-3.5 and GPT-4.
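ChatBot Arena collects anonymous head-to-head votes between models, and its leaderboard reported Elo ratings. The sketch below shows a standard Elo update for a single vote, to make "score" concrete. The K-factor of 32 and the starting rating of 1000 are illustrative assumptions, not the Arena's actual settings, and ties are not handled.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, a_won: bool, k: float = 32.0):
    """Return updated (rating_a, rating_b) after one pairwise vote.

    k=32 is an illustrative K-factor (assumption), not the Arena's setting.
    """
    exp_a = expected_score(rating_a, rating_b)
    score_a = 1.0 if a_won else 0.0
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


# Two hypothetical models start level at 1000; model A wins one vote.
a, b = elo_update(1000.0, 1000.0, a_won=True)
print(round(a), round(b))  # 1016 984
```

Because the winner gains exactly what the loser gives up, the total rating is conserved; upsets against a higher-rated model move ratings more than expected wins do.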

Netizens said Karpathy's talks are always great, and this one did not disappoint.

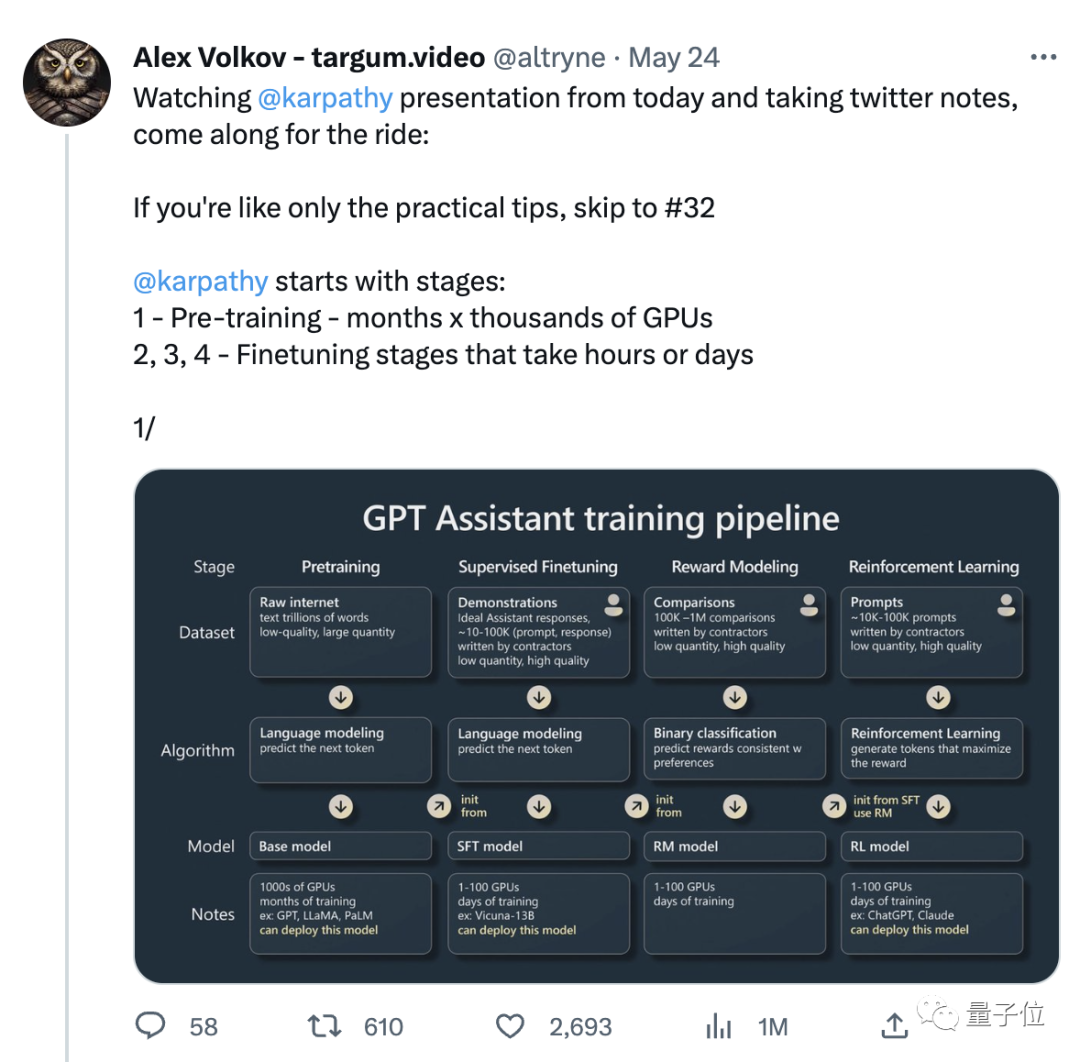

Also going viral alongside the talk was a set of notes that a Twitter user compiled from it: 31 notes in all, with more than 3,000 likes:

So what exactly did this much-anticipated talk cover?

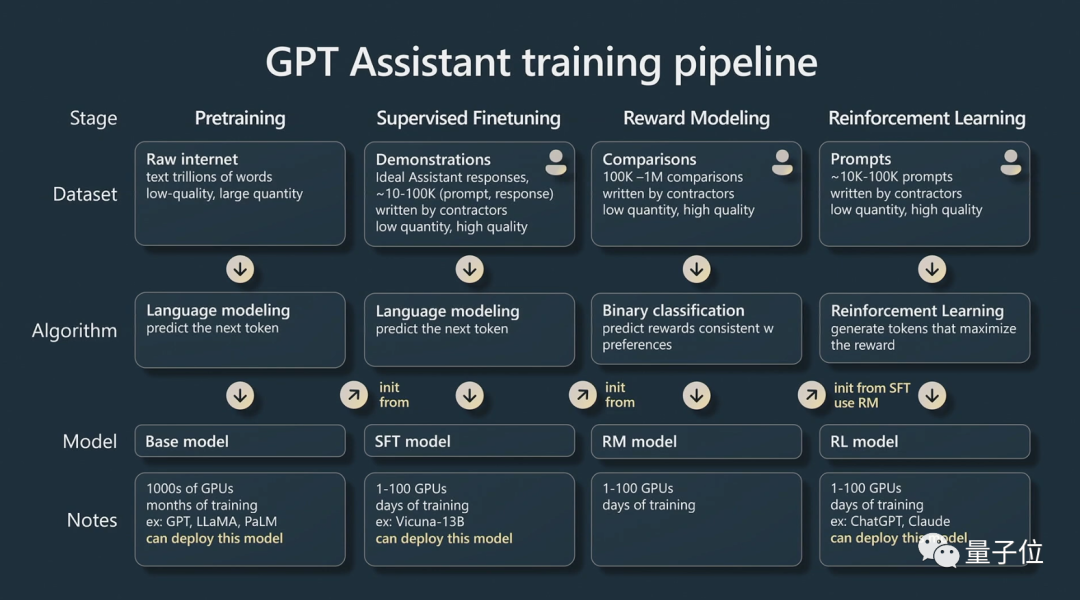

How to train a GPT Assistant?

Karpathy's talk was divided into two main parts.

In Part One, he covered how to train a "GPT Assistant".

Karpathy described the four training stages of an AI assistant:

pretraining, supervised fine-tuning, reward modeling, and reinforcement learning.

Each stage requires its own dataset.

The pretraining stage consumes an enormous amount of computing resources and a very large collection of data: a base model is trained on a huge unlabeled (unsupervised) dataset.
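Pretraining optimizes one simple objective: predict the next token, scored by the average negative log-likelihood (cross-entropy) of each actual next token. The sketch below illustrates that loss with a toy count-based bigram model standing in for a Transformer and whitespace splitting standing in for a real tokenizer; both substitutions are assumptions made purely for brevity.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token (toy stand-in for a Transformer)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_prob(counts, prev, nxt, vocab_size, alpha=1.0):
    """Add-alpha smoothed P(nxt | prev), so unseen pairs never get zero probability."""
    row = counts.get(prev, Counter())
    return (row[nxt] + alpha) / (sum(row.values()) + alpha * vocab_size)


def next_token_loss(counts, tokens, vocab_size):
    """Average negative log-likelihood (cross-entropy) of each actual next token."""
    nll = [
        -math.log(next_token_prob(counts, prev, nxt, vocab_size))
        for prev, nxt in zip(tokens, tokens[1:])
    ]
    return sum(nll) / len(nll)


corpus = "the cat sat on the mat the cat ate".split()  # whitespace split as a toy tokenizer
model = train_bigram(corpus)
vocab_size = len(set(corpus))
loss = next_token_loss(model, "the cat sat".split(), vocab_size)
print(round(loss, 4))  # 1.2425, i.e. the mean of -ln(1/3) and -ln(1/4)
```

Training a real base model means adjusting billions of weights so that this same number goes down across trillions of tokens.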

Karpathy supplemented this with more examples.

Then comes the fine-tuning stage.

Fine-tuning this base model with supervised learning on a smaller, labeled dataset produces an assistant model that can answer questions.
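Supervised fine-tuning keeps the same next-token objective but trains on prompt/response pairs. A common practice (an assumption here, not something the article details) is to count the loss only on the response tokens. The sketch below builds one such training example; the `<user>`, `<assistant>`, and `<end>` markers and the whitespace tokenizer are hypothetical placeholders.

```python
from typing import List, Tuple


def build_sft_example(prompt: str, response: str) -> Tuple[List[str], List[int]]:
    """Return (tokens, loss_mask); mask is 1 only where the model should learn to predict.

    The <user>/<assistant>/<end> markers are hypothetical formatting tokens.
    """
    prompt_tokens = ["<user>"] + prompt.split() + ["<assistant>"]
    response_tokens = response.split() + ["<end>"]
    tokens = prompt_tokens + response_tokens
    # Prompt tokens are context only; response tokens (including <end>) are trained on.
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask


tokens, mask = build_sft_example("What is 2 + 2?", "2 + 2 = 4.")
for tok, m in zip(tokens, mask):
    print(f"{m} {tok}")
```

Masking the prompt means the model is taught how to answer, not how to imitate the questions.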

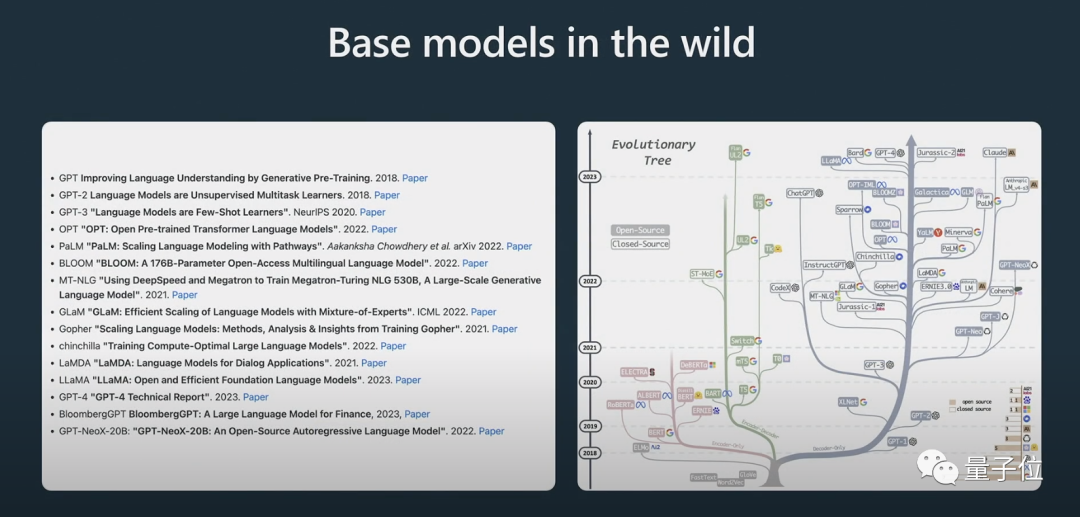

He also showed how a number of models evolved; many readers will already have seen the "evolutionary tree" picture above.

Karpathy considers Meta's LLaMA series the best open-source models currently available (OpenAI has not open-sourced anything about GPT-4).

It is important to be clear that a base model is not an assistant model.

Although a base model can solve problems, its answers are not reliable, whereas an assistant model gives dependable answers. The supervised fine-tuned assistant model is trained on top of the base model and does better than it at generating replies and understanding text structure.
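A base model only continues documents, which is why it is not an assistant. A common workaround is to format the request as a document that already looks like a helpful conversation, so that the most likely continuation is an assistant-style answer. The template below is a hypothetical illustration of that idea; the header wording and the `Human:`/`Assistant:` labels are assumptions.

```python
def base_model_prompt(question: str) -> str:
    """Wrap a question in a document whose natural continuation is a helpful answer.

    The header text and the Human:/Assistant: labels are illustrative assumptions.
    """
    header = (
        "The following is a conversation between a human and a knowledgeable, "
        "helpful AI assistant that answers accurately and concisely.\n\n"
    )
    # One worked exchange shows the base model the pattern to continue.
    example = "Human: What is the capital of France?\nAssistant: Paris.\n\n"
    return header + example + f"Human: {question}\nAssistant:"


print(base_model_prompt("How many legs does a spider have?"))
```

This works only as far as the model picks up the pattern, which is one reason such answers are less dependable than those of a fine-tuned assistant.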

Reinforcement learning is another key process when training language models.

Training relies on high-quality, human-annotated data: human judgments are used to build a reward model, which then serves as the objective for improving the model. Reinforcement learning then increases the probability of tokens in highly rewarded completions and decreases the probability of tokens in poorly rewarded ones.
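In the reward-modeling step, labelers compare completions for the same prompt, and the reward model is trained so that the preferred completion gets the higher score. A widely used formulation (from InstructGPT; assumed here rather than quoted from the talk) is the pairwise loss -log(sigmoid(r_chosen - r_rejected)). The sketch computes it for scalar rewards; the reward values are made up.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)); small when the preferred completion scores higher."""
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-(r_chosen - r_rejected))


# Hypothetical reward-model scores for two completions of the same prompt.
print(pairwise_reward_loss(2.0, 0.5))  # ranked correctly: small loss
print(pairwise_reward_loss(0.5, 2.0))  # ranked wrongly: larger loss
```

Only the difference between the two scores matters, so the reward model learns a relative ranking rather than an absolute grade.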

Human judgment is critical to improving AI models when it comes to creative tasks, and models can be trained more effectively by incorporating human feedback.

Reinforcement learning from human feedback (RLHF) then yields an RLHF model.
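The RL step can be pictured as weighting the ordinary language-modeling objective by reward: tokens in completions the reward model likes become more likely, and tokens in completions it dislikes become less likely. The sketch below is a bare REINFORCE-style objective with a mean-reward baseline. Production RLHF (for example the PPO setup used for InstructGPT) adds clipping and a KL penalty, which are omitted; all numbers are hypothetical.

```python
from typing import List


def reinforce_loss(token_logprobs: List[List[float]], rewards: List[float]) -> float:
    """Reward-weighted negative log-likelihood over sampled completions.

    token_logprobs[i] holds log p(token) for each token of completion i;
    rewards[i] is the reward model's score for that completion.
    In a real trainer the log-probs come from the policy and gradients flow through them.
    """
    baseline = sum(rewards) / len(rewards)  # mean reward as a simple baseline
    total = 0.0
    for logps, reward in zip(token_logprobs, rewards):
        advantage = reward - baseline
        # Minimizing -advantage * log p raises p when advantage > 0 and lowers it when < 0.
        total += -advantage * sum(logps)
    return total / len(rewards)


# Two hypothetical sampled completions: per-token log-probs and reward-model scores.
logprobs = [[-0.5, -0.7], [-1.0, -0.6]]
rewards = [1.0, -1.0]
print(reinforce_loss(logprobs, rewards))
```

Subtracting the baseline means only completions that are better or worse than average push probabilities around; if every sample gets the same reward, nothing changes.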

Once the models are trained, the next question is how to use them effectively to solve problems.

How to use the model better?

In Part 2, Karpathy discusses prompting strategies, fine-tuning, the rapidly evolving tool ecosystem, and future expansion.

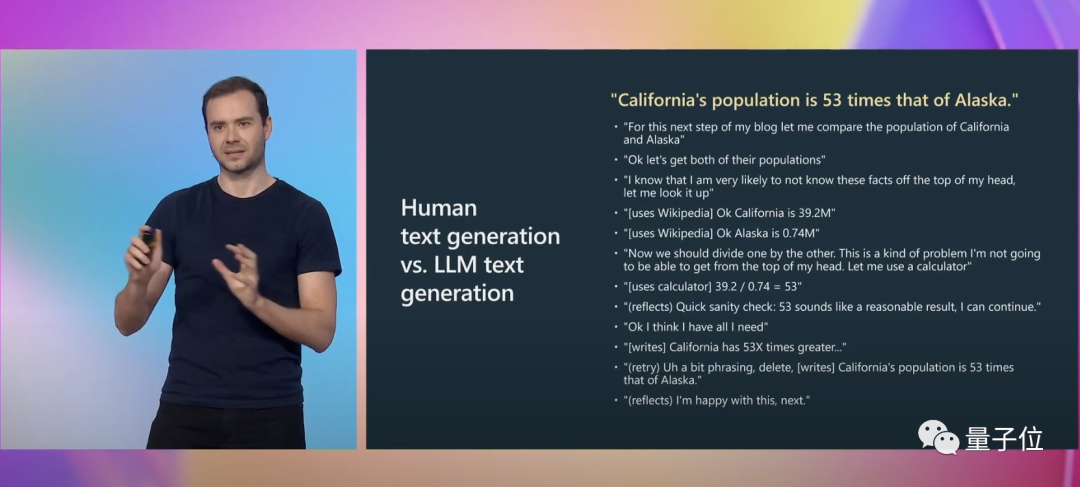

Karpathy illustrated this with a concrete example:

When we write, we go through a lot of mental activity, including checking whether what we have written is accurate. For GPT, all of this is merely a sequence of tokens.

Prompting can make up for this cognitive difference.

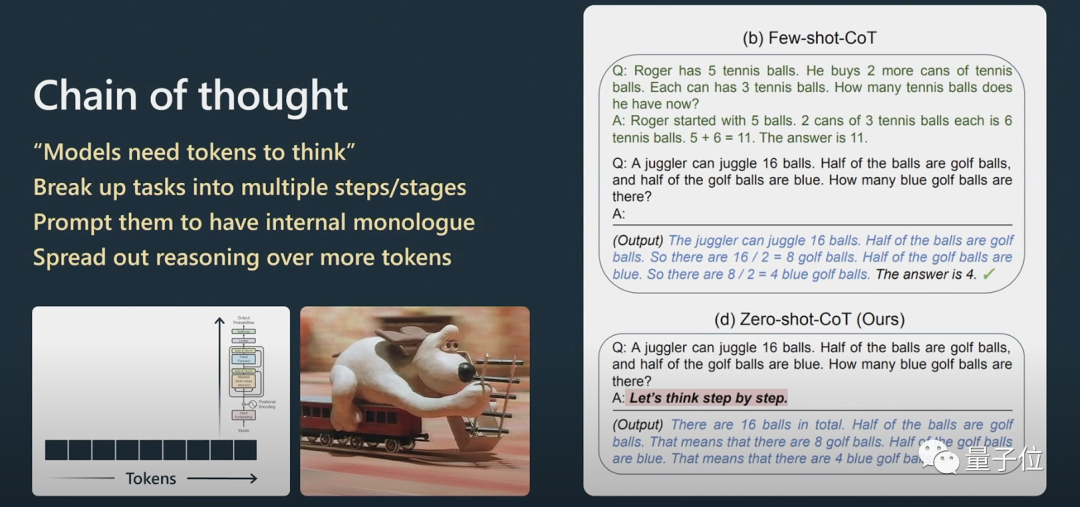

Karpathy went on to explain how chain-of-thought prompts work.

For reasoning problems, if you want a Transformer to perform better, you need to let it work through the information step by step rather than throwing a very complex problem at it all at once.

If you give it a few examples, it will imitate their template, and the final result will be better.
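Both ideas can be combined in one prompt: a few worked examples whose answers spell out the intermediate steps, followed by the new question and a cue such as "Let's think step by step." The helper and the example below are hypothetical illustrations, not a prescribed format.

```python
from typing import List, Tuple


def few_shot_cot_prompt(examples: List[Tuple[str, str, str]], question: str) -> str:
    """Build a prompt from (question, reasoning, answer) examples, then the new question."""
    parts = []
    for q, reasoning, answer in examples:
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.\n")
    # The model imitates the template above, so it should write out steps before answering.
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n".join(parts)


demo = [(
    "Roger has 5 balls and buys 2 cans of 3 balls each. How many balls does he have?",
    "He starts with 5. Two cans of 3 is 6. 5 + 6 = 11.",
    "11",
)]
print(few_shot_cot_prompt(demo, "A shelf holds 4 rows of 7 books. How many books?"))
```

Each generated reasoning token gives the model more computation to spend before it commits to an answer.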

The model can only produce its answer one token after another, in sequence. If what it generates is wrong, you can tell it so in a prompt and have it regenerate.

If you don't ask it to check its work, it won't check on its own.
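This suggests an explicit generate-then-check loop in the glue code around the model. In the sketch below, `llm` is a hypothetical callable that takes a prompt string and returns text; no particular API is implied, and the "reply OK" convention for the checker is an assumption. A scripted fake model stands in for a real one so the control flow can run.

```python
from typing import Callable


def answer_with_self_check(llm: Callable[[str], str], question: str, max_rounds: int = 3) -> str:
    """Ask, then explicitly ask the model to check itself; regenerate if it finds a problem."""
    answer = llm(f"Question: {question}\nAnswer:")
    for _ in range(max_rounds):
        verdict = llm(
            f"Question: {question}\nProposed answer: {answer}\n"
            "Check the answer carefully. Reply OK if it is correct, otherwise explain the error."
        )
        if verdict.strip().upper().startswith("OK"):
            break
        # Feed the critique back and request a corrected answer.
        answer = llm(
            f"Question: {question}\nPrevious answer: {answer}\nProblem: {verdict}\n"
            "Write a corrected answer:"
        )
    return answer


# Hypothetical scripted model: a wrong first answer, a critique, a fix, then approval.
scripted = iter(["41", "Wrong: 17 * 3 is 51.", "51", "OK"])


def fake_llm(prompt: str) -> str:
    return next(scripted)


result = answer_with_self_check(fake_llm, "What is 17 * 3?")
print(result)  # 51
```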

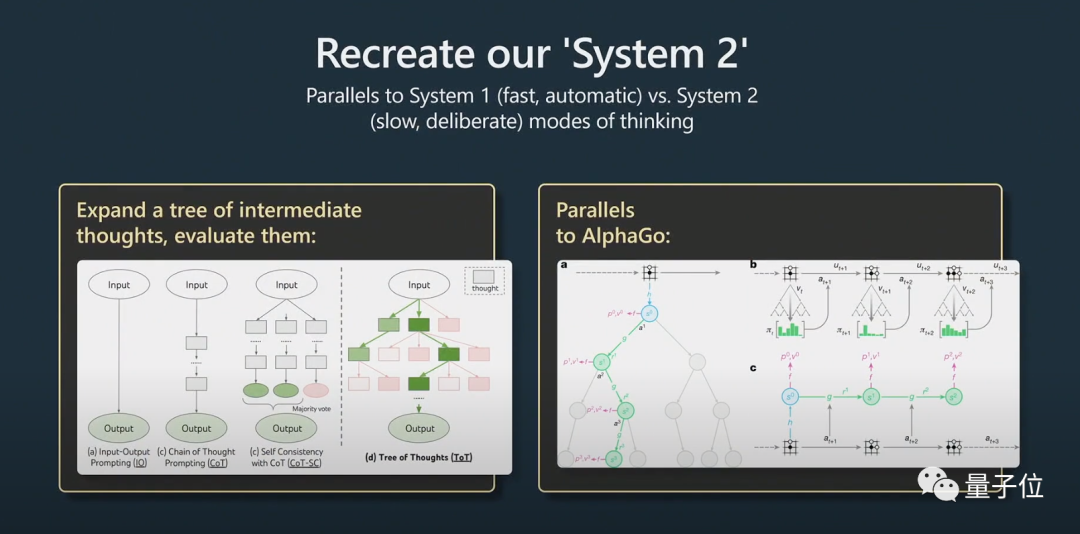

This touches on the distinction between System 1 and System 2.

Daniel Kahneman, winner of the Nobel Prize in economics, proposed in "Thinking, Fast and Slow" that the human cognitive system consists of two subsystems, System 1 and System 2. System 1 relies mainly on intuition, while System 2 is the system of logical analysis.

In layman's terms, System 1 is a fast, automatic process, while System 2 is the slow, deliberate part.

This idea also appears in the recent popular paper "Tree of Thoughts".

Deliberate thinking here means not simply giving an answer to a question; it looks more like a set of prompts strung together with Python glue code. To scale this up, the model has to maintain multiple prompts and run a tree search algorithm over them.
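The control flow can be sketched as a breadth-first search that expands several partial solutions ("thoughts") at each step and keeps only the most promising ones, roughly like the BFS variant in the Tree of Thoughts paper. In the paper, both proposing and scoring thoughts are done by prompting the language model; here `propose` and `score` are hypothetical toy functions (choosing numbers that should sum to 7) so that the search itself can run.

```python
from typing import Callable, List, Tuple

State = Tuple[int, ...]


def tree_search(
    root: State,
    propose: Callable[[State], List[State]],
    score: Callable[[State], float],
    depth: int,
    beam_width: int,
) -> State:
    """Breadth-first tree search that keeps only the best `beam_width` states per level."""
    frontier = [root]
    for _ in range(depth):
        # Expand every kept state into its candidate next "thoughts".
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        # Evaluate and prune: retain only the most promising partial solutions.
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=score)


# Hypothetical toy task: pick numbers from {1, 2, 3} so that they sum to TARGET.
TARGET = 7


def propose(state: State) -> List[State]:
    return [state + (k,) for k in (1, 2, 3)]


def score(state: State) -> float:
    return -abs(TARGET - sum(state))  # an LLM "evaluator" prompt would go here


best = tree_search((), propose, score, depth=3, beam_width=2)
print(best, sum(best))  # (3, 3, 1) 7
```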

Karpathy believes that this idea is very similar to AlphaGo:

When AlphaGo plays Go, it has to decide where to place the next stone. Initially it learned by imitating human players.

In addition, it runs a Monte Carlo tree search to explore multiple potential strategies: it evaluates many possible moves and keeps only the better ones. I think this approach is somewhat the text equivalent of what AlphaGo does.
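The selection step at the heart of Monte Carlo Tree Search balances moves that have scored well so far against moves that have rarely been tried. The sketch below uses the classic UCT (UCB1) rule; AlphaGo itself used a variant that also weighs a policy network's prior, so this illustrates the general idea rather than AlphaGo's exact formula. The exploration constant and the visit statistics are assumptions.

```python
import math
from typing import Dict, Tuple


def uct_score(value_sum: float, visits: int, parent_visits: int, c: float = math.sqrt(2)) -> float:
    """Average value (exploitation) plus a bonus for rarely tried moves (exploration)."""
    if visits == 0:
        return math.inf  # always try an unvisited move at least once
    exploit = value_sum / visits
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def select_move(children: Dict[str, Tuple[float, int]]) -> str:
    """Pick the child with the highest UCT score; children map move -> (value_sum, visits)."""
    parent_visits = sum(visits for _, visits in children.values())
    return max(children, key=lambda move: uct_score(*children[move], parent_visits))


print(select_move({"A": (6.0, 10), "B": (1.0, 2), "C": (0.0, 0)}))  # C: untried moves go first
print(select_move({"A": (6.0, 10), "B": (1.0, 2)}))  # B: fewer visits earn a bigger bonus
```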

In this regard, Karpathy also mentioned AutoGPT:

I don't think it works very well at the moment, and I don't recommend using it in practical applications. But I think we may be able to learn from how it evolves over time.

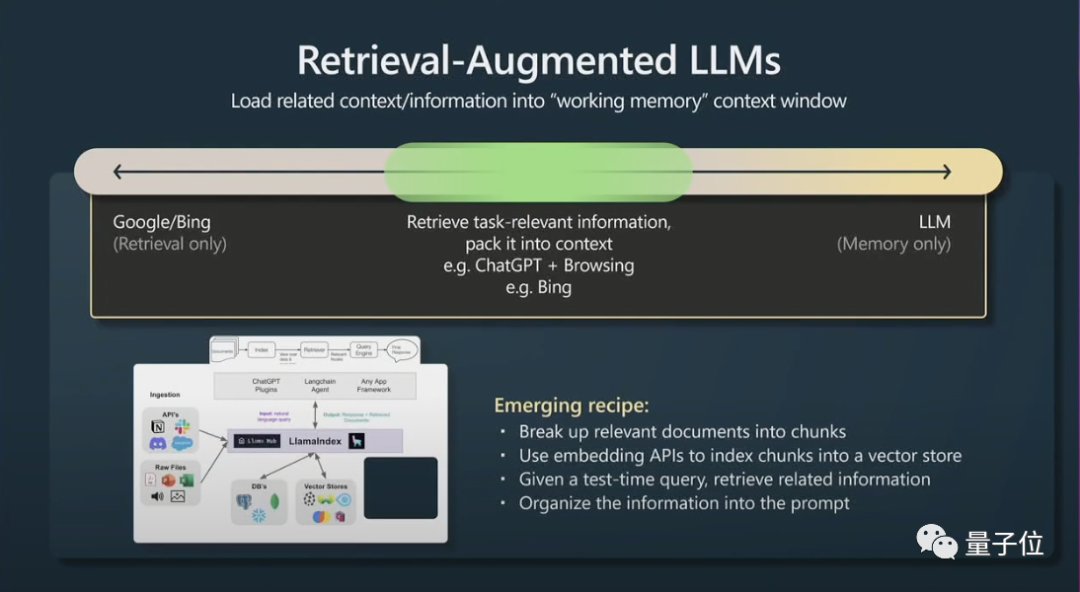

Another trick is retrieval-augmented generation combined with effective prompting.

The contents of the context window are the Transformer's working memory at runtime. If you can load task-relevant information into the context, the model will perform very well, because it has immediate access to that information.

In short, relevant data is indexed so that it can be retrieved efficiently and handed to the model.

Transformers perform better when they also have primary documents to reference.
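A retrieval-augmented pipeline can be sketched in three steps: split reference material into chunks, retrieve the chunks most similar to the question, and paste them into the prompt ahead of the question. Real systems typically use learned embeddings and a vector store; the word-count vectors and cosine similarity below are a deliberately simple stand-in, and the documents, prompt wording, and function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def vectorize(text: str) -> Counter:
    """Bag-of-words counts; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(chunks: List[str], question: str, k: int = 2) -> List[str]:
    """Return the k chunks most similar to the question."""
    q = vectorize(question)
    return sorted(chunks, key=lambda c: cosine(vectorize(c), q), reverse=True)[:k]


def build_rag_prompt(chunks: List[str], question: str, k: int = 2) -> str:
    """Place the retrieved chunks in the prompt so they sit in the model's working memory."""
    context = "\n".join(f"- {c}" for c in retrieve(chunks, question, k))
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {question}\nAnswer:"


docs = [  # hypothetical reference material
    "Refunds are processed within 5 business days.",
    "The office is open from 9 am to 5 pm on weekdays.",
    "Support tickets can be filed through the help portal.",
]
print(build_rag_prompt(docs, "When is the office open?", k=1))
```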

Finally, Karpathy briefly discussed constrained prompting and fine-tuning of large language models.

Large language models can be improved through constrained prompting and fine-tuning. Constrained prompting enforces a template on the model's output, while fine-tuning adjusts the model's weights to improve performance.
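One way to enforce a template is constrained decoding: at each step, tokens that would break the template are masked out before the most likely remaining token is chosen. The toy scorer, vocabulary, and greedy decoder below are hypothetical and are not the API of any particular library.

```python
from typing import Callable, Dict, List, Set


def constrained_greedy_decode(
    score_next: Callable[[List[str]], Dict[str, float]],
    allowed_per_step: List[Set[str]],
) -> List[str]:
    """Greedy decoding where step i may only emit tokens in allowed_per_step[i]."""
    output: List[str] = []
    for allowed in allowed_per_step:
        scores = score_next(output)
        # Mask: drop every token the template does not allow at this position.
        candidates = {tok: s for tok, s in scores.items() if tok in allowed}
        if not candidates:
            raise ValueError(f"no allowed token at step {len(output)}")
        output.append(max(candidates, key=candidates.get))
    return output


def toy_scores(prefix: List[str]) -> Dict[str, float]:
    """Hypothetical model that ignores the prefix and 'prefers' chatty text over JSON."""
    return {"Sure!": 3.0, "{": 1.0, '"age":': 0.8, "thirty": 0.6, "30": 0.5, "}": 0.4}


# Template: { "age": <integer> }
template = [{"{"}, {'"age":'}, {str(n) for n in range(130)}, {"}"}]
print(" ".join(constrained_greedy_decode(toy_scores, template)))  # { "age": 30 }
```

Without the mask, greedy decoding would start with "Sure!"; with it, the output is guaranteed to match the template.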

Karpathy's recommendation: use large language models in low-stakes applications, always pair them with human oversight, treat them as a source of inspiration and suggestions, and think of them as copilots rather than fully autonomous agents.

About Andrej Karpathy

Dr. Andrej Karpathy's first job after graduating was researching computer vision at OpenAI.

Later, Musk, one of OpenAI's co-founders, was so taken with Karpathy that he hired him at Tesla. This put Musk at odds with OpenAI, and Musk was eventually pushed out. At Tesla, Karpathy was responsible for Autopilot, FSD, and other projects.

In February of this year, seven months after leaving Tesla, Karpathy rejoined OpenAI.

He recently tweeted that he is very interested in the development of the open-source large language model ecosystem, which he says shows signs of an early Cambrian explosion.

Links:

[1] https://www.youtube.com/watch?v=xO73EUwSegU (talk video)

[2] https://arxiv.org/pdf/2305.10601.pdf ("Tree of Thoughts" paper)

Reference links:

[1] https://twitter.com/altryne/status/1661236778458832896

[2] https://www.reddit.com/r/MachineLearning/comments/13qrtek/n_state_of_gpt_by_andrej_karpathy_in_msbuild_2023/

[3] https://www.wisdominanutshell.academy/state-of-gpt/
