How to configure Nginx rate limiting

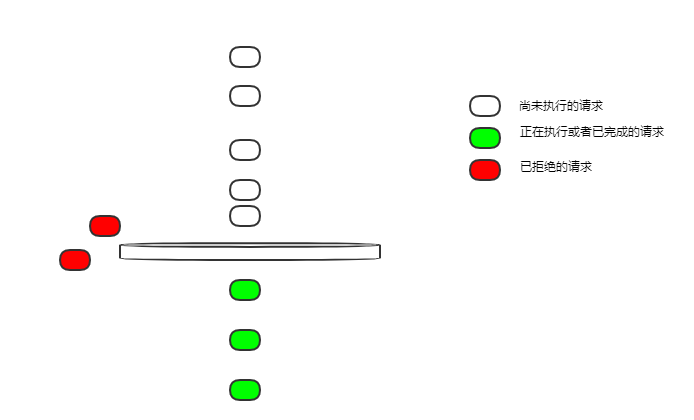

Empty Bucket

We start with the simplest rate limiting configuration:

    limit_req_zone $binary_remote_addr zone=ip_limit:10m rate=10r/s;

    server {
        location /login/ {
            limit_req zone=ip_limit;
            proxy_pass http://login_upstream;
        }
    }

$binary_remote_addr: requests are limited per client IP address;

zone=ip_limit:10m: the rate limiting zone is named ip_limit and may use 10 MB of memory to record the limiting state of each IP;

rate=10r/s: the rate limit is 10 requests per second;

location /login/: the limit is applied to login requests.

The rate limit is 10 requests per second. If 10 requests arrive at an idle nginx at the same time, can they all be executed?

A leaky bucket releases requests at a uniform rate. What does a constant 10r/s mean? One request is released every 100ms.

Under this configuration the bucket has no capacity, so every request that cannot be released right away is rejected (with a 503 response by default).

So if 10 requests arrive at the same time, only one request can be executed, and the others will be rejected.

This is not very friendly. In most business scenarios, we hope that these 10 requests can be executed.
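The single accepted request described above can be reproduced with a small model. This is a minimal sketch in Python, assuming a request passes only when at least 1/rate seconds have elapsed since the last accepted one. The function name `admit_without_burst` and the millisecond timestamps are illustrative, and the code is not nginx's actual implementation.

```python
def admit_without_burst(arrivals_ms, rate_per_s=10):
    """Decide, per request, whether a leaky bucket with no burst accepts it.

    arrivals_ms: sorted arrival times in milliseconds (hypothetical input).
    Simplified model: one request may pass every 1000 / rate_per_s ms.
    """
    interval_ms = 1000 / rate_per_s
    last_accepted = None
    decisions = []
    for now in arrivals_ms:
        if last_accepted is None or now - last_accepted >= interval_ms:
            decisions.append(True)    # released immediately
            last_accepted = now
        else:
            decisions.append(False)   # nothing can wait, so it is rejected
    return decisions


# Ten requests hitting an idle server at the same instant.
simultaneous = admit_without_burst([0] * 10)
# The same ten requests spaced 100 ms apart.
spaced = admit_without_burst(range(0, 1000, 100))
print(simultaneous.count(True), spaced.count(True))
```

Under this model only one of the ten simultaneous requests is accepted, while all ten pass when they arrive 100 ms apart.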

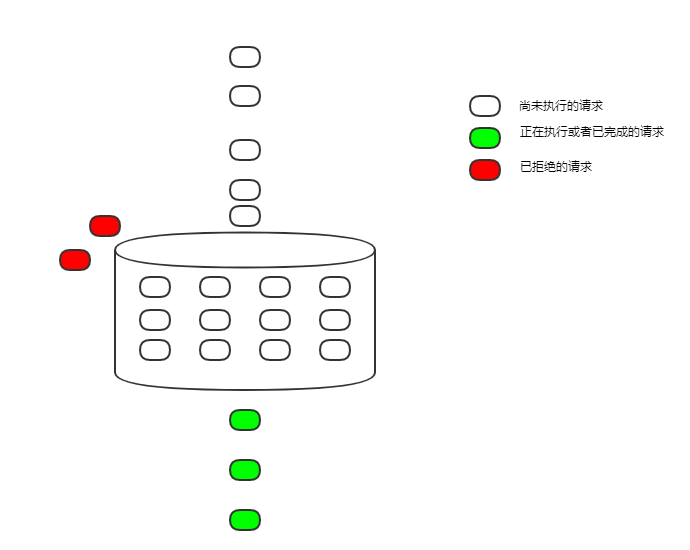

burst

Let’s change the configuration to solve the problem from the previous section:

    limit_req_zone $binary_remote_addr zone=ip_limit:10m rate=10r/s;

    server {
        location /login/ {
            limit_req zone=ip_limit burst=12;
            proxy_pass http://login_upstream;
        }
    }

burst=12: the size of the leaky bucket is set to 12.

Although it is logically called a leaky bucket, it works as a FIFO queue that temporarily holds requests that cannot be executed yet.

The leak rate is still one request every 100ms, but requests that arrive together and cannot be executed yet are queued first. New requests are rejected only when the queue is full.

In this way, the leaky bucket not only limits the rate but also smooths out traffic peaks and troughs.

Under such a configuration, if 10 requests arrive at the same time, they are executed in sequence, one every 100ms.

Although every request is executed, queuing greatly increases latency, which is still unacceptable in many scenarios.
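To put a number on that latency, here is a minimal sketch in Python. It assumes the first request is forwarded at once, up to `burst` further requests wait in the queue, and one queued request is released every 1/rate seconds. The function name `queue_waits_ms` is hypothetical, and the model is a simplification rather than nginx's own code.

```python
def queue_waits_ms(simultaneous, rate_per_s=10, burst=12):
    """Waiting time of each request when `simultaneous` requests arrive at once.

    Simplified model (an assumption, not nginx's code): the first request
    is forwarded at once, up to `burst` more wait in a FIFO queue, and one
    queued request is released every 1000 / rate_per_s milliseconds.
    Returns the wait in ms for accepted requests and None for rejected ones.
    """
    interval_ms = 1000 // rate_per_s
    waits = []
    for position in range(simultaneous):
        if position <= burst:
            waits.append(position * interval_ms)
        else:
            waits.append(None)        # queue is full, request is refused
    return waits


waits = queue_waits_ms(10)
print(waits)                     # waits of the ten requests, in ms
print(sum(waits) / len(waits))   # average wait, in ms
```

For the ten simultaneous requests of the example, the last one waits 900 ms and the average wait is 450 ms in this model.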

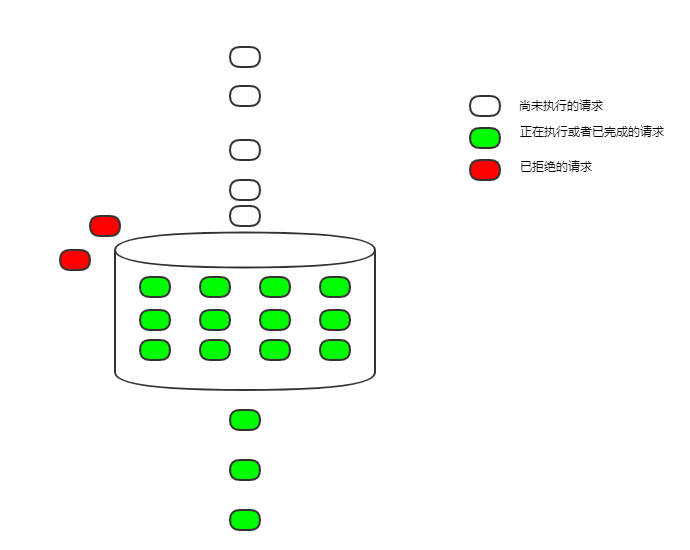

nodelay

We modify the configuration again to remove the extra latency caused by queuing:

    limit_req_zone $binary_remote_addr zone=ip_limit:10m rate=10r/s;

    server {
        location /login/ {
            limit_req zone=ip_limit burst=12 nodelay;
            proxy_pass http://login_upstream;
        }
    }

nodelay: brings forward the moment a request starts executing. Previously a request was delayed until it leaked out of the bucket; now it starts executing as soon as it enters the bucket.

A request is either executed immediately or rejected. Requests are no longer delayed by throttling.

Because slots in the bucket are freed at a constant rate and the bucket size is fixed, on average 10 requests are still executed per second, so the purpose of rate limiting is still achieved.

But this also has a disadvantage: the rate is limited, but not uniformly. Taking the above configuration as an example, if 12 requests arrive at the same time, all 12 are executed immediately. After that, new requests can enter the bucket only as fast as slots are freed, so one request is executed every 100ms. If no requests arrive for a while and the bucket empties, 12 concurrent requests may again be executed at the same time.

In most cases, this uneven flow is not a big problem. However, nginx also provides a parameter, delay, that controls how many requests are executed without delay.
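The burst followed by a steady rate can be illustrated with a small simulation. The sketch below is a simplified model in Python, not nginx's source code: it assumes the bucket level drains at the configured rate, each accepted request occupies one slot, and a request is rejected when it would push the level above `burst`. The name `nodelay_accepts` and the traffic pattern are illustrative.

```python
def nodelay_accepts(arrivals_ms, rate_per_s=10, burst=12):
    """Return the arrival times accepted by a burst bucket with nodelay.

    Simplified model (an assumption, not nginx's code): `excess` counts
    occupied bucket slots in thousandths of a request. It drains at
    rate_per_s, each accepted request adds one slot, and a request is
    rejected when it would push the level above `burst`.
    """
    excess = 0
    last = None
    accepted = []
    for now in arrivals_ms:
        if last is None:
            level = 0                              # idle bucket
        else:
            drained = rate_per_s * (now - last)    # thousandths drained
            level = max(excess - drained + 1000, 0)
        if level > burst * 1000:
            continue                               # rejected immediately
        excess, last = level, now
        accepted.append(now)                       # executed immediately
    return accepted


# A client sending one request every 10 ms (100 r/s) for 10 seconds.
accepted = nodelay_accepts(range(0, 10_000, 10))
first_second = sum(1 for t in accepted if t < 1000)
last_second = sum(1 for t in accepted if t >= 9000)
print(first_second, last_second)
```

In this model the first second lets the initial burst through on top of the steady rate, while later seconds settle at 10 accepted requests, which matches the uneven but bounded flow described above.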

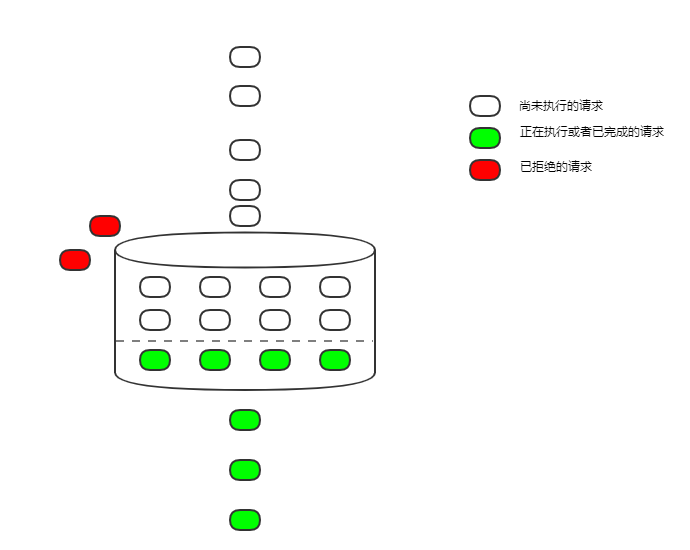

    limit_req_zone $binary_remote_addr zone=ip_limit:10m rate=10r/s;

    server {
        location /login/ {
            limit_req zone=ip_limit burst=12 delay=4;
            proxy_pass http://login_upstream;
        }
    }

delay=4: requests are delayed starting from the 5th request in the bucket.

In this way, by adjusting the delay parameter you control how many requests may be executed concurrently, which evens out the request flow. Limiting this number is still necessary for resource-intensive services.
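The effect of the delay parameter on a burst can be sketched as follows. This is a simplified Python model under stated assumptions, not nginx's implementation: the first request occupies no slot, each later request takes the next slot in the bucket, slots up to `delay` run immediately, and slots above it wait as if queued. The function name `burst_delays_ms` is hypothetical.

```python
def burst_delays_ms(simultaneous, rate_per_s=10, burst=12, delay=4):
    """Delay applied to each of `simultaneous` requests arriving at once.

    Simplified model (an assumption, not nginx's code): the first request
    occupies no slot, every later one takes the next slot in the bucket.
    Slots up to `delay` are executed immediately, slots above it wait as
    if queued, and slots above `burst` are rejected (None).
    """
    interval_ms = 1000 // rate_per_s
    delays = []
    for slot in range(simultaneous):
        if slot > burst:
            delays.append(None)                       # bucket is full
        elif slot <= delay:
            delays.append(0)                          # runs immediately
        else:
            delays.append((slot - delay) * interval_ms)
    return delays


print(burst_delays_ms(12))
# [0, 0, 0, 0, 0, 100, 200, 300, 400, 500, 600, 700]
```

In this model, setting `delay` equal to `burst` gives the nodelay behaviour, and `delay=0` gives plain queuing, so the parameter moves between the two configurations discussed earlier.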
