AI Contract Theory ⑤: As generative AI races ahead under a thousand sails, how can rules 'steer' it?

21st Century Business Herald reporters Cai Shuyue and Guo Meiting, interns Tan Yanwen and Mai Zihao, reporting from Shanghai and Guangzhou

Editor’s note:

In the first months of 2023, major companies rushed to develop large models, explored the commercialization of GPT, and bet on computing infrastructure... Just as the Age of Discovery that began in the 15th century brought explosive growth in human exchange, trade, and wealth, a spatial revolution is again sweeping the world. At the same time, change brings challenges to the existing order, such as data leakage, personal privacy risks, copyright infringement, and false information... In addition, the post-humanist crisis brought by AI is already on the table. What attitude should people take toward the myths that arise when humans and machines blend?

At this moment, seeking consensus on AI governance and shaping a new order have become issues that all countries face. Nancai Compliance Technology Research Institute is launching a series of reports on AI contract theory, analyzing Chinese and foreign regulatory models, the allocation of subject responsibility, corpus data compliance, AI ethics, industry development, and other dimensions, with a view to offering ideas for AI governance and ensuring responsible innovation.

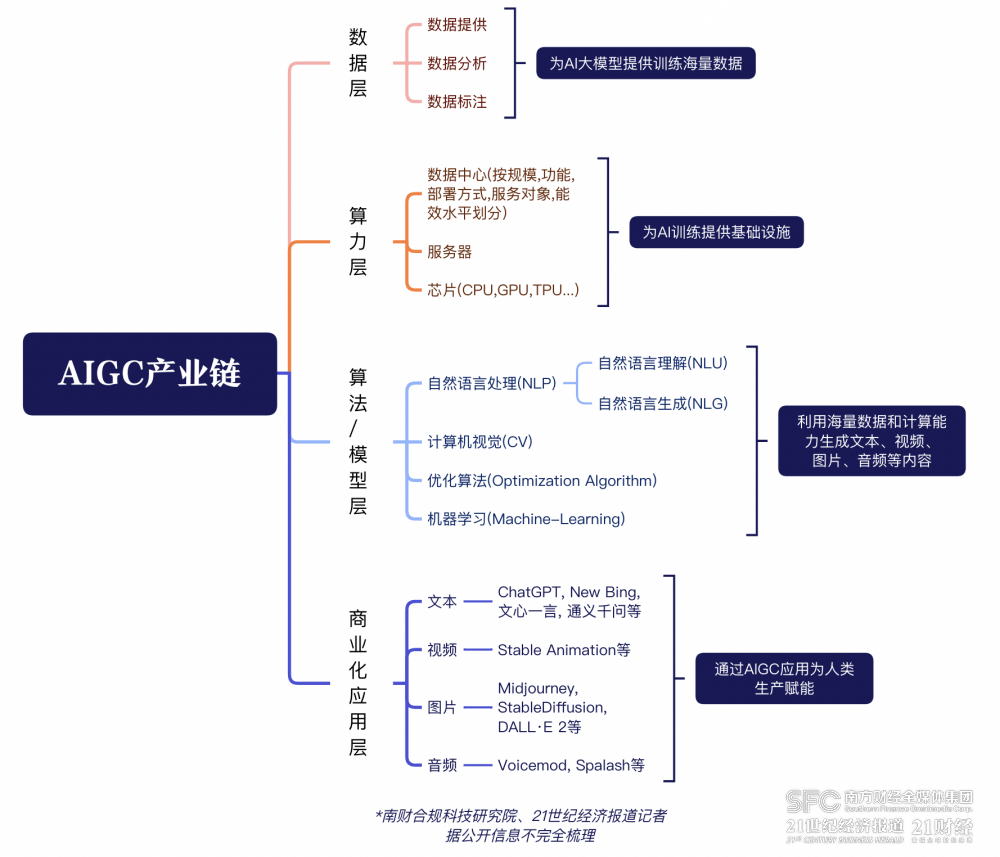

The rise of generative AI technology has led to today's "battle of a hundred models", and the industry chain map of this technology has begun to take shape.

(AIGC industrial chain map. Drawing/Nancai Compliance Technology Research Institute, 21st Century Business Herald reporter)

Before generative AI becomes a common technology, every participant in the production chain must consider how to make it a "controllable" tool.

In late March this year, an open letter titled "Pause Large-Scale Artificial Intelligence Experiments" was released, signed by Tesla CEO Elon Musk, Apple co-founder Steve Wozniak, and more than a thousand entrepreneurs and scholars.

The letter said that in recent months artificial intelligence laboratories around the world have been locked in an out-of-control race to develop and deploy ever more powerful digital minds, yet no one, including the developers of the technology, "can truly understand, predict or fully control this technology."

The Yuanshi Culture Laboratory of the School of Journalism and Communication at Tsinghua University also pointed out in its "AIGC Development Research" report that AIGC's strong involvement in the global industrial chain will broadly replace work such as programming, graphic design, and customer service, and will put a ceiling on labor costs, so third-world industrial chains will suffer a huge impact.

This means that AIGC, backed by massive computing power, may become a sharp blade that severs the global industrial chains of multinational companies, and may also become a dagger that cuts through the illusion of the "global village."

Therefore, with the rapid development of AIGC, putting the generative AI technology behind it into a regulatory cage and clarifying the responsibilities of all parties in the industry chain has become an urgent proposition for countries around the world to deal with.

Regulatory policy review: draw a clear bottom line for industrial research and development

At present, China is already on the path to regulating generative AI technology. In April this year, the Cyberspace Administration of China issued the "Measures for the Administration of Generative Artificial Intelligence Services (Draft for Comments)" (hereinafter referred to as the "Measures"), which is China's first regulatory document targeting generative AI technology.

Generally speaking, the "Measures" build on the existing deep synthesis regulatory framework and refine the "Internet Information Service Deep Synthesis Management Regulations", the "Internet Information Service Algorithm Recommendation Management Regulations", the "Network Audio and Video Service Management Regulations", and the "Regulations on Ecological Governance of Network Information Content". In addition to the general obligations of personal information protection, artificial intelligence service providers are also required to perform further obligations such as security assessment, algorithm filing, and content labeling.

Regarding these policy documents, Xiao Sa, senior partner at Beijing Dacheng Law Firm, told a 21st Century Business Herald reporter that relevant companies should take care to align with the requirements of existing regulations on algorithm recommendation services, deep synthesis services, and other artificial intelligence services. They should strive for internal compliance, combine technical and legal expertise to propose creative compliance solutions, and win more institutional space for industrial development.

Most of the industry supports the "Measures" and the other rules regulating the development of artificial intelligence technology that have been introduced one after another. Wei Chaoqun, senior product director at Liangfengtai, shared his views in an interview with 21st Century Business Herald reporters. He believes that with generative AI technology only just getting started, putting relevant management measures in place is crucial to the healthy development of the entire industry and will do a great deal to promote it.

"On the one hand, the promulgation of the "Measures" means that the entire industry has clear operating specifications, which can guide enterprises through a complete R&D process. On the other hand, it also sets an R&D bottom line for the entire industry, including what can be done and what cannot be done," Wei Chaoqun pointed out.

For example, Article 17 of the "Measures" requires artificial intelligence service providers to "provide necessary information that can affect user trust and choice, including descriptions of the source, scale, type, quality, etc. of pre-training and optimized training data, manual labeling rules, the scale and type of manually labeled data, basic algorithms and technical systems, etc.", so as to govern an artificial intelligence technology that involves large amounts of data and fast-changing rules.

However, some people believe that the current domestic laws, regulations and policy documents related to artificial intelligence still need to be further improved.

Xiao Sa said in the interview that although the "Measures" respond to the risks and impacts brought by generative artificial intelligence, a close reading of the text shows that its provisions on responsible subjects, scope of application, compliance obligations, and other aspects are relatively broad.

For example, Article 5 of the "Measures" stipulates that those who use generative artificial intelligence products to provide services (that is, the responsible subjects) should bear the responsibilities of content producers.

The text of the article states that organizations and individuals who use generative artificial intelligence products to provide services such as chat and text, image, and sound generation, including those who support others in generating text, images, sounds, etc. by providing programmable interfaces and other means, bear the responsibility of the producer of the content generated by the product. However, the "Measures" have not yet elaborated on the specific legal responsibilities that service providers should bear.

Development Difficulties: How to Balance Regulation and Technology

How to improve the artificial intelligence supervision system under the premise of technological innovation and development, and strengthen its connection and coordination with data compliance and algorithm governance, is an issue that needs to be solved urgently.

In particular, clarifying the responsible entities at each link of the AIGC industrial chain and creating "responsible" AI technology is one of the key points that regulators need to pay close attention to.

Beyond the distribution of subject responsibilities addressed in Article 5 of the "Measures", the EU's recently revised "Artificial Intelligence Act" also addresses the distribution of responsibilities along the artificial intelligence value chain: any distributor, importer, deployer, or other third party should be regarded as a provider of a high-risk artificial intelligence system and must perform the corresponding obligations. Examples include indicating their name and contact information on the high-risk artificial intelligence system, providing data specifications or dataset-related information, and keeping logs.

Pei Yi, assistant professor at Beijing Institute of Technology Law School, also told 21st Century Business Herald reporters that enterprises are the key entities providing AI services. On the one hand, they need to ensure transparent data collection and processing: clearly inform data subjects of the purposes of collection and processing, obtain the necessary consent or authorization, and implement appropriate data security and privacy protection measures to ensure the confidentiality and integrity of data. On the other hand, data sharing must also be compliant. When conducting multi-party data sharing or data transactions, enterprises should ensure compliant data use rights and authorization mechanisms and comply with applicable data protection laws and regulations.

21st Century Business Herald reporters observed that some artificial intelligence companies are already clarifying their obligations as responsible entities.

For example, OpenAI has opened a dedicated "Security Portal" for users. On this page, users can browse the company's compliance documents, including information on backup, deletion, and encryption of data at rest under "Data Security", as well as code analysis, credential management, and more under "App Security".

(OpenAI's "Security Portal" page. Source/OpenAI official website)

The privacy policy published on the official website of the AI painting tool Midjourney also gives specific explanations of the scenarios and purposes for which user data is shared, retained, and transferred. It also lists in detail the 11 types of personal information, such as identifiers, business information, and biometric information, that the application needs to collect in the course of providing services to users.

It is worth mentioning that the head of legal affairs at an emerging technology company in Shanghai told 21st Century Business Herald reporters that the terms of service for the company's artificial intelligence-related business are currently being drafted, and that some of the rules on the distribution of responsibilities refer to OpenAI's approach.

On the other hand, as providers of generative AI services, enterprises also need to pay attention to internal compliance. Xiao Sa pointed out that AIGC-related companies rely on massive data and complex algorithms, and their application scenarios are complex and diverse. Such companies can easily run into various risks, and relying entirely on external supervision is very difficult. Related companies must therefore strengthen their internal compliance management for AIGC.

On the one hand, regulatory agencies should take the comprehensive rollout of corporate compliance reform as an opportunity to actively explore compliance reform for companies in the network and digital field, implement third-party supervision and evaluation mechanisms, establish and improve institutional mechanisms for compliance management, and effectively prevent Internet crimes. On the other hand, it is also necessary to actively explore regulatory paths that use ex-post compliance rectification to promote ex-ante compliance building, and to encourage network regulatory authorities and Internet companies to jointly study and formulate data compliance guidelines to ensure the healthy development of the digital economy.

"The most important task of the regulatory authorities is to draw the bottom line. Among them, 'tech ethics' and 'national security' are two bottom lines that cannot be given up. Within the bottom line, the industry can be given as much tolerance and room for development as possible, so that technology does not become timid and restricted in its development for the sake of compliance," Pei Yi told 21st Century Business Herald reporters.

Coordinator: Wang Jun

Reporters: Guo Meiting, Cai Shuyue, Tan Yanwen, Mai Zihao

Drawing: Cai Shuyue

For more content, please download 21 Finance APP

The above is the detailed content of AI Contract Theory ⑤: Generative AI is racing with thousands of sails, how to use rules to 'steer'. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1376

1376

52

52

Chinese mathematician Terence Tao leads the White House Generative AI Working Group, and Li Feifei will speak at the group

May 25, 2023 am 10:36 AM

Chinese mathematician Terence Tao leads the White House Generative AI Working Group, and Li Feifei will speak at the group

May 25, 2023 am 10:36 AM

The Generative AI Working Group established by the President's Council of Advisors on Science and Technology is designed to help assess key opportunities and risks in the field of artificial intelligence and provide advice to the President on ensuring that these technologies are developed and deployed as fairly, safely, and responsibly as possible. AMD CEO Lisa Su and Google Cloud Chief Information Security Officer Phil Venables are also members of the working group. Chinese-American mathematician and Fields Medal winner Terence Tao. On May 13, local time, Chinese-American mathematician and Fields Medal winner Terence Tao announced that he and physicist Laura Greene will co-lead the Generative Artificial Intelligence Working Group of the U.S. Presidential Council of Advisors on Science and Technology (PCAST) .

From 'human + RPA' to 'human + generative AI + RPA', how does LLM affect RPA human-computer interaction?

Jun 05, 2023 pm 12:30 PM

From 'human + RPA' to 'human + generative AI + RPA', how does LLM affect RPA human-computer interaction?

Jun 05, 2023 pm 12:30 PM

Image source@visualchinesewen|Wang Jiwei From "human + RPA" to "human + generative AI + RPA", how does LLM affect RPA human-computer interaction? From another perspective, how does LLM affect RPA from the perspective of human-computer interaction? RPA, which affects human-computer interaction in program development and process automation, will now also be changed by LLM? How does LLM affect human-computer interaction? How does generative AI change RPA human-computer interaction? Learn more about it in one article: The era of large models is coming, and generative AI based on LLM is rapidly transforming RPA human-computer interaction; generative AI redefines human-computer interaction, and LLM is affecting the changes in RPA software architecture. If you ask what contribution RPA has to program development and automation, one of the answers is that it has changed human-computer interaction (HCI, h

Why is generative AI sought after by various industries?

Mar 30, 2024 pm 07:36 PM

Why is generative AI sought after by various industries?

Mar 30, 2024 pm 07:36 PM

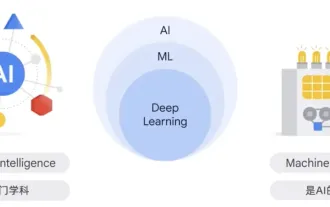

Generative AI is a type of human artificial intelligence technology that can generate various types of content, including text, images, audio and synthetic data. So what is artificial intelligence? What is the difference between artificial intelligence and machine learning? Artificial intelligence is the discipline, a branch of computer science, that studies the creation of intelligent agents, which are systems that can reason, learn, and perform actions autonomously. At its core, artificial intelligence is concerned with the theories and methods of building machines that think and act like humans. Within this discipline, machine learning ML is a field of artificial intelligence. It is a program or system that trains a model based on input data. The trained model can make useful predictions from new or unseen data derived from the unified data on which the model was trained.

Say goodbye to design software to generate renderings in one sentence, generative AI subverts the field of decoration and decoration, with 28 popular tools

Jun 10, 2023 pm 03:33 PM

Say goodbye to design software to generate renderings in one sentence, generative AI subverts the field of decoration and decoration, with 28 popular tools

Jun 10, 2023 pm 03:33 PM

▲This picture was generated by AI. Kujiale, Sanweijia, Dongyi Risheng, etc. have already taken action. The decoration and decoration industry chain has introduced AIGC on a large scale. What are the applications of generative AI in the field of decoration and decoration? What impact does it have on designers? One article to understand and say goodbye to various design software to generate renderings in one sentence. Generative AI is subverting the field of decoration and decoration. Using artificial intelligence to enhance capabilities improves design efficiency. Generative AI is revolutionizing the decoration and decoration industry. What impact does generative AI have on the decoration and decoration industry? What are the future development trends? One article to understand how LLM is revolutionizing decoration and decoration. These 28 popular generative AI decoration design tools are worth trying. Article/Wang Jiwei In the field of decoration and decoration, there has been a lot of news related to AIGC recently. Collov launches generative AI-powered design tool Col

Watch: What is the potential of applying generative AI to network automation?

Aug 17, 2023 pm 07:57 PM

Watch: What is the potential of applying generative AI to network automation?

Aug 17, 2023 pm 07:57 PM

Generative artificial intelligence (GenAI) is expected to become a compelling technology trend by 2023, bringing important applications to businesses and individuals, including education, according to a new report from market research firm Omdia. In the telecom space, use cases for GenAI are mainly focused on delivering personalized marketing content or supporting more sophisticated virtual assistants to enhance customer experience. Although the application of generative AI in network operations is not obvious, EnterpriseWeb has developed an interesting concept. Validation, demonstrating the potential of generative AI in the field, the capabilities and limitations of generative AI in network automation One of the early applications of generative AI in network operations was the use of interactive guidance to replace engineering manuals to help install network elements, from

Which technology giant is behind Haier and Siemens' generative AI innovation?

Nov 21, 2023 am 09:02 AM

Which technology giant is behind Haier and Siemens' generative AI innovation?

Nov 21, 2023 am 09:02 AM

Gu Fan, General Manager of the Strategic Business Development Department of Amazon Cloud Technology Greater China In 2023, large language models and generative AI will "surge" in the global market, not only triggering "an overwhelming" follow-up in the AI and cloud computing industry, but also vigorously Attract manufacturing giants to join the industry. Haier Innovation Design Center created the country's first AIGC industrial design solution, which significantly shortened the design cycle and reduced conceptual design costs. It not only accelerated the overall conceptual design by 83%, but also increased the integrated rendering efficiency by about 90%, effectively solving Problems include high labor costs and low concept output and approval efficiency in the design stage. Siemens China's intelligent knowledge base and intelligent conversational robot "Xiaoyu" based on its own model has natural language processing, knowledge base retrieval, and big language training through data

Tencent Hunyuan upgrades model matrix, launching 256k long text model on the cloud

Jun 01, 2024 pm 01:46 PM

Tencent Hunyuan upgrades model matrix, launching 256k long text model on the cloud

Jun 01, 2024 pm 01:46 PM

The implementation of large models is accelerating, and "industrial practicality" has become a development consensus. On May 17, 2024, the Tencent Cloud Generative AI Industry Application Summit was held in Beijing, announcing a series of progress in large model development and application products. Tencent's Hunyuan large model capabilities continue to upgrade. Multiple versions of models hunyuan-pro, hunyuan-standard, and hunyuan-lite are open to the public through Tencent Cloud to meet the model needs of enterprise customers and developers in different scenarios, and to implement the most cost-effective model solutions. . Tencent Cloud releases three major tools: knowledge engine for large models, image creation engine, and video creation engine, creating a native tool chain for the era of large models, simplifying data access, model fine-tuning, and application development processes through PaaS services to help enterprises

Transformative Trend: Generative Artificial Intelligence and Its Impact on Software Development

Feb 26, 2024 pm 10:28 PM

Transformative Trend: Generative Artificial Intelligence and Its Impact on Software Development

Feb 26, 2024 pm 10:28 PM

The rise of artificial intelligence is driving the rapid development of software development. This powerful technology has the potential to revolutionize the way we build software, with far-reaching impacts on every aspect of design, development, testing and deployment. For companies trying to enter the field of dynamic software development, the emergence of generative artificial intelligence technology provides them with unprecedented development opportunities. By incorporating this cutting-edge technology into their development processes, companies can significantly increase production efficiency, shorten product time to market, and launch high-quality software products that stand out in the fiercely competitive digital market. According to a McKinsey report, it is predicted that the generative artificial intelligence market size is expected to reach US$4.4 trillion by 2031. This forecast not only reflects a trend, but also shows the technology and business landscape.