Normally we use a Redis cache to reduce the load on the database. When a large number of users access the system, each request first queries the cache; if the data is not in the cache, it queries the database and then writes the result to the cache. When data in the database changes, the change must also be synchronized to Redis, and during this synchronization MySQL and Redis must be kept consistent. A short delay while synchronizing is acceptable, but in the end MySQL and the cache must become consistent.

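```java
// The logic we usually use with Redis (cache-aside read).
// RedisUtils, StringUtils and getValueForDb are the article's own helpers.
public String getValue(String key) {
    // Query Redis first
    String value = RedisUtils.get(key);
    if (!StringUtils.isEmpty(value)) {
        return value; // cache hit
    }
    // Cache miss: get the data from the database
    value = getValueForDb(key);
    if (!StringUtils.isEmpty(value)) {
        // Write the value back so later reads hit the cache
        RedisUtils.set(key, value);
    }
    return value; // may be empty if the db has no value for this key either
}
```

The delayed double delete strategy is a common way to keep database storage and cached data consistent in distributed systems, but it provides only eventual consistency, not strong consistency. In fact, no solution of this kind completely prevents stale (dirty) data in Redis; each one only narrows the window in which stale data can appear. Eliminating it entirely requires synchronization locks combined with corresponding handling in the business logic.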

When we update data in the database, we also need to synchronize the data cached in Redis, and there are generally two ways to do this:

The first solution: update the database first, then delete the cache.

The second solution: delete the cache first, then update the database.

However, both solutions are prone to the following problems under concurrent requests:

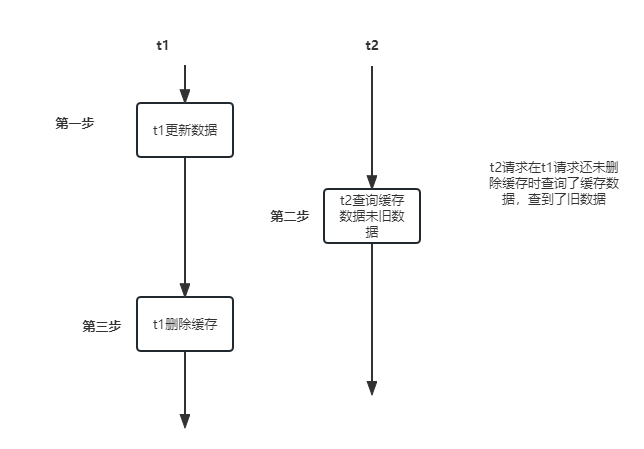

Drawback of the first solution: after request 1 has updated the database but before it has deleted the cache, request 2 queries the cache and still receives the old data, because the entry has not been deleted yet. Once request 1 deletes the cache, subsequent requests miss the cache, read the latest data from the database, and write it back to the cache, so the impact is relatively small.

The t1 thread updates the db first;

The t2 thread hits the cache and returns the old data;

Suppose that after updating the db, the t1 thread takes 5 milliseconds to delete the cache key. Any thread that queries within those 5 milliseconds still gets the old cached value. After that, the query finds the cache empty, reads the latest result from the db, and synchronizes it back to Redis.
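The interleaving above can be replayed deterministically in a single thread. This is a minimal sketch that assumes two in-memory maps as hypothetical stand-ins for Redis (`cache`) and MySQL (`db`); no real Redis client is involved. It shows that t2 reads the stale value inside the window, while t3, arriving after the delete, reloads the new one.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical single-threaded replay of "update the db, then delete the cache".
// The maps stand in for Redis ("cache") and MySQL ("db").
public class UpdateThenDeleteTrace {
    static final Map<String, String> db = new HashMap<>();
    static final Map<String, String> cache = new HashMap<>();

    // Cache-aside read: on a miss, load from db and write the value back.
    static String read(String key) {
        String value = cache.get(key);
        if (value == null) {
            value = db.get(key);
            if (value != null) cache.put(key, value);
        }
        return value;
    }

    // Returns {what t2 read inside the window, what t3 read after the delete}.
    static String[] replay() {
        db.clear();
        cache.clear();
        db.put("k", "old");
        cache.put("k", "old");

        db.put("k", "new");             // t1: update the db first
        String t2 = read("k");          // t2: cache hit, still "old"
        cache.remove("k");              // t1: delete the cache key
        String t3 = read("k");          // t3: miss, reloads "new" from the db
        return new String[] {t2, t3};
    }

    public static void main(String[] args) {
        String[] seen = replay();
        System.out.println("t2 saw " + seen[0] + ", t3 saw " + seen[1]);
    }
}
```

Running `main` prints `t2 saw old, t3 saw new`: the stale read is confined to the gap between the db update and the cache delete.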

Short delays like this are common in real projects, and their impact on the business is usually very limited. But what happens if deleting the cache fails?

1. Keep retrying. If this happens inside an HTTP interface, the retries slow down the response, and callers of the interface may hit a response timeout.
2. Alternatively, perform the delete asynchronously through a message queue (MQ).
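The two options can be combined: retry a bounded number of times so the request stays fast, then hand the key off for asynchronous retry. This is a sketch under stated assumptions: `deleteFn` is a hypothetical hook that returns `true` when the Redis delete succeeded, and the in-memory `BlockingQueue` merely stands in for a real message queue.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Predicate;

// Sketch of "retry a few times, then hand off to a queue".
// retryQueue is an in-memory stand-in for a real MQ; deleteFn is hypothetical.
public class CacheDeleteRetry {
    static final BlockingQueue<String> retryQueue = new LinkedBlockingQueue<>();

    static boolean deleteWithRetry(String key, Predicate<String> deleteFn, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (deleteFn.test(key)) {
                return true;            // deleted synchronously
            }
        }
        // Bounded retries keep the request fast; leftover work goes async.
        retryQueue.offer(key);
        return false;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated delete that fails twice, then succeeds.
        Predicate<String> flaky = k -> ++calls[0] >= 3;
        System.out.println(deleteWithRetry("user:1", flaky, 3));      // true
        System.out.println(deleteWithRetry("user:2", k -> false, 3)); // false
        System.out.println(retryQueue);                               // [user:2]
    }
}
```

A separate consumer would then take keys from the queue and retry the delete outside the request path, so a slow or failing Redis does not stall the HTTP response.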

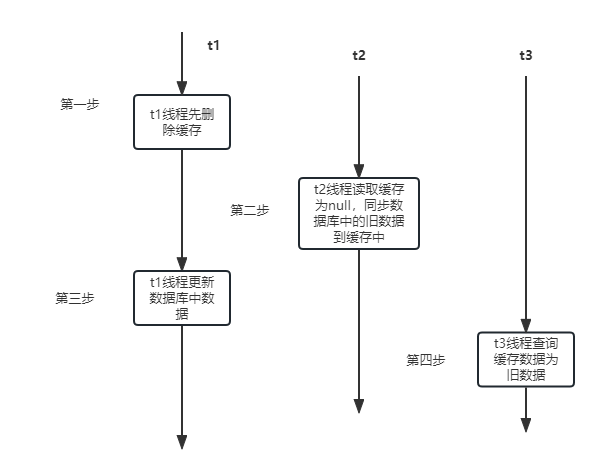

Drawback of the second solution: after request 1 clears the cache but before it updates the database, request 2 queries the database, gets the old data, and writes it into Redis. This leaves the database and Redis inconsistent.

The t1 thread deletes the cache first;

The t2 thread finds the cache empty and synchronizes the db data (still old) to the cache;

The t1 thread updates the data in the db;

The t3 thread reads the old data from the cache.
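Replaying this interleaving the same way shows why it is worse than the first case: the stale value is written back after the delete, so it stays in the cache even though the db now holds the new value. As before, this sketch assumes hypothetical in-memory maps in place of Redis and MySQL.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical single-threaded replay of "delete the cache, then update the db".
// "cache" stands in for Redis and "db" for MySQL.
public class DeleteThenUpdateTrace {
    static final Map<String, String> db = new HashMap<>();
    static final Map<String, String> cache = new HashMap<>();

    // Cache-aside read: on a miss, load from db and write the value back.
    static String read(String key) {
        String value = cache.get(key);
        if (value == null) {
            value = db.get(key);
            if (value != null) cache.put(key, value);
        }
        return value;
    }

    // Returns {what t2 read, what t3 read, what the db finally holds}.
    static String[] replay() {
        db.clear();
        cache.clear();
        db.put("k", "old");
        cache.put("k", "old");

        cache.remove("k");              // t1: delete the cache first
        String t2 = read("k");          // t2: miss, caches "old" from the db
        db.put("k", "new");             // t1: update the db
        String t3 = read("k");          // t3: hit, gets the stale "old"
        return new String[] {t2, t3, db.get("k")};
    }

    public static void main(String[] args) {
        String[] seen = replay();
        System.out.println("t2=" + seen[0] + " t3=" + seen[1] + " db=" + seen[2]);
    }
}
```

Running `main` prints `t2=old t3=old db=new`; the stale entry persists until something deletes or expires it, which is the gap the second delete in the delayed double delete strategy is meant to close.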

Delayed double delete: clear the cache before performing the update, then, after the update completes, wait for a delay N and clear the cache again. The cache is deleted twice, with a delay before the second deletion.

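```java
RedisUtils.del(key);   // delete the cache first
updateDB(user);        // update the data in the db
Thread.sleep(N);       // wait N milliseconds (throws InterruptedException)
RedisUtils.del(key);   // delete the cache key again
```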
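The `Thread.sleep(N)` above blocks the request thread for the whole delay. A common variation, swapped in here and not taken from the original, is to schedule the second delete on a `ScheduledExecutorService` so the caller returns immediately. This minimal sketch assumes in-memory `ConcurrentHashMap`s as hypothetical stand-ins for Redis and MySQL, with `delayMillis` playing the role of N.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// In-memory sketch of delayed double delete with a scheduled second delete.
// "cache" and "db" are hypothetical stand-ins for Redis and MySQL.
public class DelayedDoubleDelete {
    static final Map<String, String> db = new ConcurrentHashMap<>();
    static final Map<String, String> cache = new ConcurrentHashMap<>();
    static final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();

    static ScheduledFuture<?> update(String key, String value, long delayMillis) {
        cache.remove(key);                      // first delete
        db.put(key, value);                     // update the database
        // Second delete runs after the delay without blocking the caller.
        return scheduler.schedule(() -> { cache.remove(key); },
                delayMillis, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) throws Exception {
        db.put("k", "old");
        ScheduledFuture<?> second = update("k", "new", 200);
        // Simulate a concurrent reader that loaded "old" before the db write
        // and writes it back after the first delete.
        cache.put("k", "old");
        second.get();                           // wait for the second delete
        System.out.println(cache.get("k"));     // null: stale entry evicted
        scheduler.shutdown();
    }
}
```

In a real deployment the scheduled delete would itself need the retry or MQ fallback discussed earlier, since it can fail just like the first delete, and an in-process scheduler loses pending deletes if the process restarts.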

The delay N must be longer than the time a concurrent read request needs to read the database and write the result back to Redis. If the delay is too short, request 1 performs its second delete before request 2 has written the old value into the cache, so the stale value survives the second delete and the inconsistency remains.

Estimate N by measuring, while the business program is running, how long the logic that reads the data and writes the cache takes. The strategy is called "delayed double deletion" because, after the cached value is deleted the first time, it is deleted again after a delay.
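Turning that estimate into a number can be as simple as taking the slowest observed read-and-write-back duration and adding a safety margin. In this sketch the sample timings and the 1.5x safety factor are made-up assumptions for illustration, not measurements; using the maximum rather than an average is a deliberately conservative choice.

```java
import java.util.Arrays;

// Sketch: pick the double-delete delay N from observed durations of the
// read-from-db-then-write-cache path. Samples and safety factor are assumptions.
public class DelayEstimate {
    static long estimateDelayMillis(long[] readAndWriteBackMillis, double safetyFactor) {
        // Use the slowest observed run so that most concurrent reads finish in time.
        long worst = Arrays.stream(readAndWriteBackMillis).max().orElse(0L);
        return (long) Math.ceil(worst * safetyFactor);
    }

    public static void main(String[] args) {
        long[] samples = {12, 18, 25, 40};   // hypothetical timings in ms
        System.out.println(estimateDelayMillis(samples, 1.5)); // 60
    }
}
```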

The above is the detailed content of How to use redis delayed double delete strategy. For more information, please follow other related articles on the PHP Chinese website!
