How to migrate SQL Server sensorless system to MySQL

1. Architecture Overview

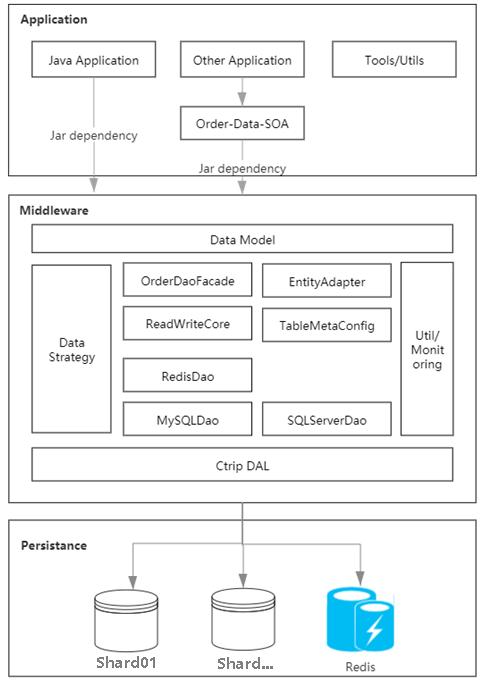

Through the analysis of the bottlenecks of the existing system, we found that the core defects are concentrated in the scattered order data cache, which leads to inconsistency at each end of the data, and each order application is directly connected to the database, causing scalability. Poor sex. Through practice, we wrote middleware to abstract and unify the data access layer, and built an order cache based on the database deployment architecture mirror to uniformly manage hot data, solving the differences between different ends.

Figure 1.1 Storage system architecture diagram

2. Application scenarios

1. New single-second level synchronization of each end

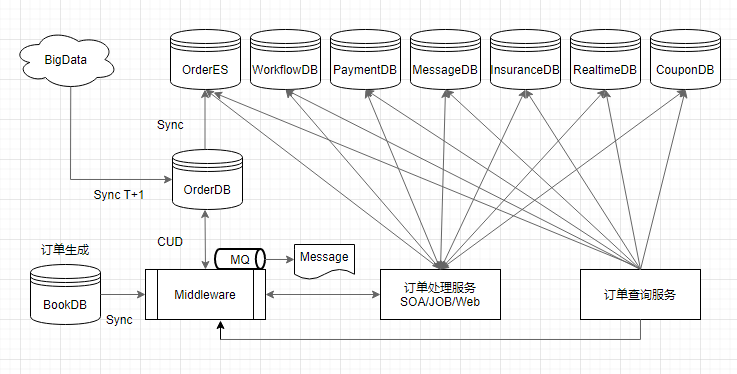

The speed from order submission to visibility at each end is one of the core indicators of storage services. We have optimized the main links of the data chain, covering new order synchronization, real-time message push, query index construction, and offline archiving of the data platform. Wait for the main links to ensure that the data arrival speed in the large system is within 3 seconds, that is, the user can jump to the My-Carry list immediately after placing an order.

When a new user creates an order, the synchronization service serves as the data link entrance to write the user order data into the order database through the middleware. At this time, the middleware completes the construction of the order cache at the same time;

When the order After completing the warehousing behavior and hotspot data construction, the order message is thrown and output to each subsystem in real time;

When the new order is completed, the ES index of the order details is immediately constructed to provide retrieval support for third parties;

Finally, the data platform T 1 implements the archiving of daily data for use by various offline businesses such as BI.

Figure 2.1 Data link

2. Automatic order issuance and workbench

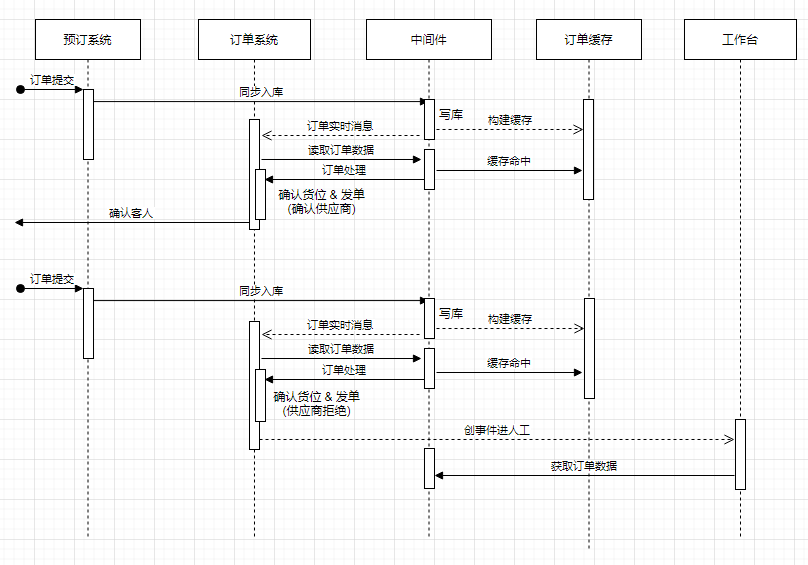

Support for customers, merchants, and employee workbench It is the basic role of the order storage system. The data link in Figure 2.1 plays an indispensable role in connecting automatic order issuance and the workbench after the new order is submitted. Automatic ordering is the process of sending order details to the merchant as quickly as possible after the customer submits the order to verify inventory and confirm the order. The workbench assists employees in the process of obtaining orders and processing manual events in a timely manner.

Figure 2.2 Relationship between issuing orders and workbench based on storage system (abbreviated details)

3. Query and data analysis

Based on order data as the core, it is mainly divided into two business lines: online query and data analysis. Taking detailed query as an example, access QPS remains at a high level all year round. During holiday peaks, it is easy to cause query bottlenecks. The root cause will be in this period after the review. During the sub-architecture upgrade, we made adjustments to optimize high availability in related scenarios.

Online queries are mainly based on order caching. When orders are submitted, a hotspot cache is built to relieve query pressure, and it can be valid for a long period of time according to configured time parameters.

Non-online query scenarios, real-time message push and delivery combined with Hive data warehouse T 1, any occasion that requires long-term order data (such as real-time reports) can access order messages in real time calculate. When conducting large-scale data analysis, offline BI will use Hive tables and perform data synchronization through low-frequency access from the database during the low peak period in the early morning every day.

As mentioned above, we protect the access to the main order database behind the troika of order cache, real-time messaging, and Hive data warehouse, and decouple it from the business as much as possible.

3. System Upgrade Practice

In the process of upgrading Ctrip's core storage system, what needs to be done throughout the entire process is live migration, and all operations must be done on the data link. The goal of each application being transparent and lossless. Our design comprehensively analyzes the characteristics of the group's data links. The order cache system provides database mirroring to reduce the direct coupling between the application and the database. Then, the middleware is used to transparently remove the physical relationship between the data originating from SQLServer/MySQL and provide the underlying layer to the application. Operation space for live migration.

Combined with the process design of lossless migration, it focuses on the visibility and controllability of each database traffic, supports traffic distribution strategies at the full database, Shard level, table level, and CRUD operation level, and provides sufficient support for underlying data migration. means of implementation. The data warehouse connection design focuses on solving the synchronization problem between the tens of billions of offline data on the data platform and the dual database online period, as well as solving the data problems that arise during full access to MySQL.

The following will be divided into three parts to share the experiences we learned in this process.

1. Distributed order caching

With the development of business, the number of users and visits are increasing, and the pressure on order system applications and servers is also increasing. Before order caching was introduced, each application connected to the database independently, causing the queried data to be unable to be shared between applications, and there was an upper limit on the number of DB queries and connections per second. However, the hotel's core transaction link was based on DB storage, and there was a single Risk of point failure.

After data analysis, the order system typically reads more and writes less. In order to share hot query data and reduce DB load, an effective way is to introduce cache, as shown in Figure 3.1. When the user's request comes, the cache is queried first. , if there is cached data, the result will be returned directly; if there is no hit in the cache, the DB will be queried, and the DB result data will be verified according to the configuration policy. If the verification passes, the DB data will be written to the cache for subsequent query use, otherwise it will not be written to the cache. , and finally return the DB query results.

Figure 3.1 Basic design of order cache

Regarding the hardware overhead after introducing new cache components, it can be reduced by converging the original scattered hardware resources of each application. The total cost, but centralized management will also bring usability challenges and data consistency issues, so it is necessary to fully conduct capacity assessment, traffic estimation and cache table value analysis of the existing system. Only cache hot data tables with high access volume. Through appropriate cache structure design, data compression and cache elimination strategies, we can maximize the cache hit rate and make a good trade-off between cache capacity, hardware cost and availability.

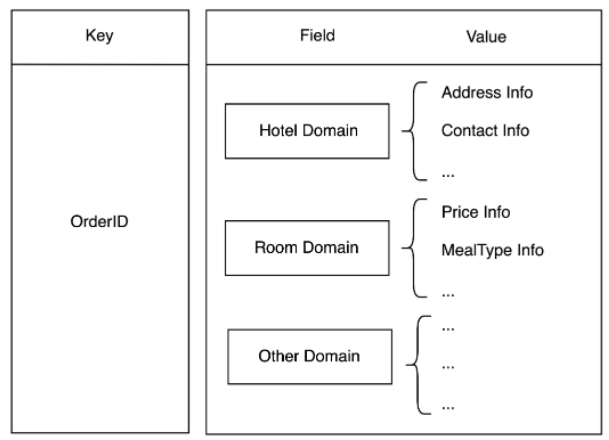

The traditional cache design is that one database table record corresponds to one cache data. In the order system, it is very common to query multiple tables for one order. If a traditional design is adopted, the number of Redis accesses in a user query increases with the number of tables. This design has large network IO and is time-consuming. long. When taking stock of the table dimension traffic data, we found that some tables are often queried together, and less than 30% of the tables have more than 90% of the query traffic. In terms of business, they can be divided into the same abstract domain model, and then stored based on the hash structure, such as Figure 3.2 uses the order number as the key, the field name as the field, and the field data as the value.

In this way, whether it is a single table or multi-table query, each order only needs to access Redis once, which reduces the key, reduces the number of multi-table queries, and improves performance. At the same time, value is compressed based on protostuff, which also reduces the storage space of Redis and the subsequent network traffic overhead.

Figure 3.2 Brief description of domain-based storage structure

2. Lossless migration process

How to achieve lossless hot migration is the entire project The most challenging place. Our pre-work is to first complete the development of middleware, with the purpose of separating database and business layer applications, so that process design can be carried out. Secondly, the abstract Dao layer implements domainization, and the data domain layer provides data services to applications. Under the domain, two databases, SQLServer and MySQL, are adapted and packaged uniformly. On this basis, lossless thermal migration can be implemented for the following process design.

SQLServer and MySQL dual databases are online, implementing dual writing, primary writing SQLServer, and synchronously writing secondary MySQL. If the SQLServer operation fails, the entire operation fails and the dual-writing transaction is rolled back.

Add a synchronization job between SQLServer and MySQL to query the data changed in the latest time window of SQLServer in real time to verify the consistency of entries in MySQL. The differences can be tracked to ensure double writing. This is especially useful when there are unexpected inconsistencies between the two sides during this period, especially when there is still direct access to the SQL Server application.

The middleware is designed with a configuration system that supports any major query dimension. It can accurately direct the data source to SQLServer or MySQL according to the configuration, and can control whether to load it into the order cache after reading. . The initial setting is to only load the SQLServer data source to avoid cache data jumps caused by data inconsistencies between the two databases. In the initial stage, grayscale can be set up and a small number of non-core tables can be connected directly to MySQL for verification to ensure reliability. Once the expectation of late data consistency is achieved, the order cache can be loaded against the specified database at will.

After ensuring data consistency when querying data, the traffic policy supports single write to the database based on any controllable dimension in Figure 3.3. In actual projects, single-write is mainly implemented in table dimensions. When a specified table is configured with single-write MySQL, all CRUD behaviors involving the table are directed to MySQL, including the cache load source.

Finally, the order messages sent externally are unified through the middleware. All messages are sent based on the CUD operation of the middleware and have nothing to do with the physical database. In this way, the data source of the message is transparent and can be linked to the above All process operations and data links remain consistent.

Figure 3.3 Introduction to the operation process

3. Data warehouse connection

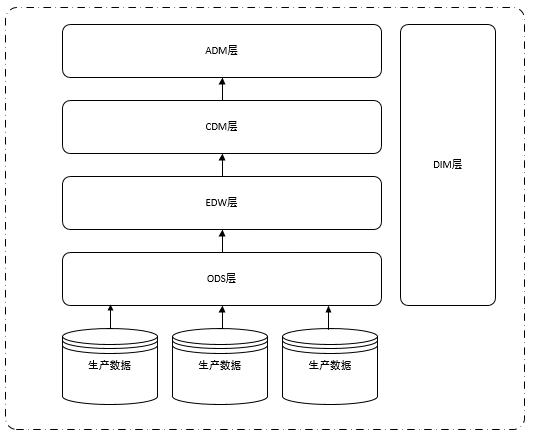

In order to facilitate the understanding of production data to data The migration of warehouse ODS layer data is transparent to downstream users. Here is a brief introduction to the hierarchical system of conventional data warehouses. Usually the data warehouse is mainly divided into five layers: ODS (original data layer), DIM (dimension), EDW (enterprise data warehouse), CDM (common model layer), ADM (application model layer),

As shown below:

Figure 3.4 Data warehouse hierarchical structure

As can be seen from Figure 3.4, each layer of the data warehouse relies on the data of the ODS layer. In order not to affect all applications of the data platform, we only need to convert the original order library The ODS layer data source can be migrated from SQLServer to the MySQL library.

It can be seen intuitively from the figure that migration only needs to change the data source. It is not very troublesome, but in order to ensure the data quality, we have done a lot of preparatory work, such as: DBA synchronizes production data to production in advance. MySQL database, MySQL data real-time synchronization, data consistency verification on both sides of production, MySQL side data synchronization to the ODS layer, ODS layer data consistency verification, and original ODS layer synchronization job data source switching, etc.

Among them, the data consistency check on both sides of the production and the data consistency check on the ODS layer of the data warehouse are the most complex and the longest time consuming. You must ensure that each table and each field must be consistent before switching. data source. However, in actual operation, it cannot be completely consistent. According to the actual situation, appropriately handle the time type, floating point value precision and decimal places, etc.

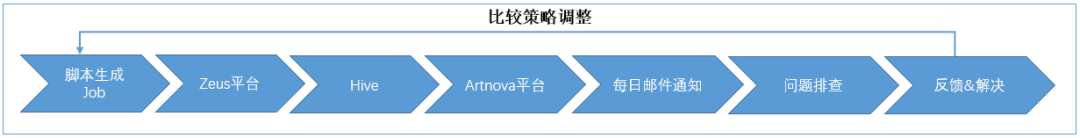

The following is an introduction to the overall process:

First, for online data consistency verification, we developed an online synchronization job to compare SQLServer data with MySQL data. , when inconsistencies are found, the MySQL data is updated based on the SQLServer data to ensure the consistency of the data on both sides.

Secondly, for offline data consistency verification, we work with data warehouse colleagues to synchronize MySQL side data to the ODS layer (use the database name to distinguish whether it is a SQLServer or MySQL table), and combine scheduled tasks with The tasks on the SQL Server side should be as consistent as possible in time. After the data on both sides was prepared, we developed an offline data verification script generator. Based on the data warehouse metadata, a synchronization job was generated for each table and deployed to the scheduling platform.

The synchronization task will rely on the ODS layer synchronization data on both sides. After the T1 data synchronization is completed, a consistency check will be performed, the inconsistent order numbers will be recorded in the inconsistent details table, and the inconsistent data amount will be counted. The results are saved to the statistics table. Then we create a report on the self-service reporting platform and send the daily statistics of inconsistent tables and the amount of inconsistencies to the mailbox. We troubleshoot the inconsistent tables every day to find problems, adjust the comparison strategy, and update the comparison job. The general process is as follows:

Figure 3.5 Overall consistency verification process

Finally, as the online and offline data gradually become consistent, we will The data source of the original SQLServer synchronized to the ODS layer job was switched to MySQL. Some students here may have questions: Why not directly use the tables in the ODS layer on the MySQL side? The reason is that according to statistics, there are thousands of jobs that rely on the original ODS layer table. If the dependent job is switched to the MySQL side ODS table, the modification workload will be very heavy, so we directly switch the original ODS layer synchronization data source. into MySQL.

In actual operation, all data sources cannot be cut at once. We do it in three batches. We first find a dozen less important tables as the first batch. After cutting, we run for two weeks and collect Feedback on downstream data issues. The first batch of samples were successfully analyzed two weeks later, and we did not receive data problems in downstream reports, which proves the reliability of the sample data quality. Then divide the remaining hundreds of tables into two batches according to their importance and continue cutting until they are finished.

At this point, we have completed the migration of the order database from SQLServer to MySQL at the data warehouse layer.

4. Compilation of core issues

In fact, no matter how thorough the analysis and design is, it is inevitable to encounter various challenges during the execution process. We have summarized some classic problems. Although these large and small problems were finally solved through technical means and the goals were achieved, I believe that you readers must have better solutions. We are happy to learn and make progress together.

1. How to fine-grained monitoring of SQLServer & MySQL traffic migration

The order system involves a large number of applications and tables. One application corresponds to 1 to n tables, and one table corresponds to 1 to n applications are typical many-to-many relationships. As shown in Figure 4.1, for upper-layer applications, switching from one SQLServer database to another MySQL database, the basic process is divided into at least the following steps according to the operation process chapter:

From single Writing SQLServer becomes dual-writing SQLServer and MySQL

From single-read SQLServer to single-reading MySQL

From dual-writing SQLServer and MySQL to Write MySQL alone

Offline SQLServer

In the switching process, the double writing, single reading and single writing processes are interlocking and dependent on each other step by step. As a supporting design monitoring method, it is necessary to confirm that the previous operation achieves the expected effect before proceeding to the next one. If you skip or proceed to the next step rashly without switching cleanly, for example, if you start reading MySQL data before the double-write is completely consistent, it may result in no data being found or dirty data being found! Then it is necessary to monitor the reading and writing of each CRUD operation, and achieve 360-degree visual traffic segmentation control without blind spots during the migration process. What you see is what you get. The specific method is as follows:

All applications are connected to the middleware, and CRUD is controlled by the middleware to read and write which table in which DB according to the configuration;

The detailed information of each read and write operation is written to ES and displayed visually on Kibana and Grafana, and through DBTrace, you can know which DB each SQL is executed on;

-

Gradually configure the dual-write DB according to the application level, compare, repair and record the DB differences on both sides in real time through the synchronization job, and then verify the final inconsistency in the dual-write through offline T 1, and so on until the dual-write is consistent;

After the double writes are consistent, gradually switch from reading SQLServer to reading MySQL. Through ES monitoring and DBTrace, it is confirmed that there is no SQLServer reading at all, which means that the single reading of MySQL is completed. Taking into account the auto-incrementing primary key situation, We use table dimensions and write to SQL Server in batches until all tables are written to MySQL alone.

To sum up, the basic solution is to use middleware to serve as a pipeline for all connected applications, observe the traffic distribution by displaying the behavior of the application layer in real time, and combine it with the company database Trace's visualization tool verifies that the application's traffic switching behavior is consistent with the actual QPS and load fluctuation of the database to supervise the migration task.

2. How to solve the DB consistency problem during double writing

The hotel’s order database has a history of about 20 years, accumulated over the years, and is directly or indirectly relied upon by multiple teams across departments and within the hotel. Order database SQLServer, if you want to switch to MySQL, you must first solve the dual-write DB consistency problem. The inconsistency is mainly reflected in the following two points:

When dual-write, only SQLServer is actually written , Missing writing to MySQL;

Double writing to SQLServer and MySQL is successful. When concurrency, unreliable network, GC, etc. occur, the MySQL data may be inconsistent with SQLServer.

Regarding the guarantee of double-write data consistency, we use the synchronization job to align the SQL Server data, and pull the DB data on both sides for comparison based on the last update time. If they are inconsistent, Repair MySQL data and write inconsistent information to ES for subsequent troubleshooting of root causes.

But also because of the introduction of additional jobs to operate MySQL data, new problems have been brought about, that is, when multiple tables are double-written, because the time is doubled, the job finds that SQLServer has data but MySQL does not, and it immediately Fixed MySQL data causing double write failure. Therefore, a failover mechanism is added when the double-write part fails. By throwing messages, a new round of comparison and repair work is triggered until the DB data on both sides are completely consistent.

Although the synchronized Job and Failover message mechanisms can make the data ultimately consistent, there is a second-level interval after all, and the data on both sides are inconsistent. In addition, for various scenarios of many applications, it is inevitable that there will be omissions and single writes. SQLServer. These missed writes to MySQL cannot be found through DBTrace because it cannot be determined that a CUD operation is only written to SQLServer and not to MySQL. So is there a way to find out the scenario of missing MySQL in advance? We did find out one thing, that is to change the database connection string, use the new connection string for the application connected to the middleware, and then find out all operations using the old connection string. SQLServer's SQL can accurately locate the traffic that misses MySQL.

In the end, we gradually reduced the double-write DB inconsistency rate from 2/100,000 to almost 0. Why is it almost? Because some differences in DB characteristics will naturally cause the data to be inconsistent. This will be discussed in detail in subsequent content.

3. Handling the data out-of-synchronization problem caused by the introduction of order cache

After the cache is introduced, it involves writing or updating the cache. Common practices in the industry are divided into the following categories:

Write to DB first and then cache

Write cache first and then write DB

Delete first Cache and then write DB

Write DB first and then delete cache

No longer compare the advantages and disadvantages of various methods, it may be used in specific implementation Double delete cache or delayed double delete cache. We adopt a scheme of writing to the DB first and then deleting the cache. For data-sensitive tables, delayed double deletion will be performed. The background synchronization job regularly compares, repairs and records the differences between the database data and the Redis data. Although the design can ensure the final consistency. performance, but there were still a lot of data inconsistencies in the early stages. Mainly reflected in the following aspects:

There are scenarios where the application is not connected to the middleware, and after performing CUD operations on the DB, the cache is not deleted;

The delay in deleting the cache after writing the DB results in reading dirty cache data, such as unreliable network, GC, etc. causing the delay in deleting the cache;

The failure to delete the cache after writing the DB results in reading Caching dirty data, such as during Redis master-slave switching, can only be read but not written.

In order to solve the cache consistency problem, as shown in Figure 4.2, we added optimistic locking and CUD construction markers based on the original cache and DB to limit the simultaneous loading of data under concurrency. The behavior of caches overwriting each other, and the perception that CUD operations are being performed on the currently checked data. The last writer winning mechanism based on optimistic locking can be used to realize Query traffic to directly connect to the DB and solve the competition problem when these two scenarios are not ended. In the end, our cache inconsistency rate was controlled from 2 parts per million to 3 parts per 10 million.

Figure 4.2 Cache consistency solution

Figure 4.2 When the query misses the cache, or there is currently an optimistic lock or construction mark for the data, when Query the directly connected DB and release the automatic loading function of cached data until the relevant transaction is completed.

4. How to calibrate the existing order data at one time

At the beginning of the project, we made a one-time preparation of the last N years of data for MySQL, which resulted in the following problems that cannot be calibrated during the double-write stage: Data of two scenarios:

Because the production order database is preset to retain nearly N years of data, the Job responsible for cleaning the backup has existed for N years before MySQL is connected to the middleware. This batch of data cannot be overwritten by the policy and cleared.

It takes a long time to connect all applications to the middleware. The data may be inconsistent before the middleware is double-written. It is necessary to apply all the middleware and double-write all tables before rewriting the previous data. The data is repaired in one go.

In response to the first point, we developed a MySQL data cleaning job. Since the order database has multiple shards, the total number of core threads is set internally in the job based on the actual number of shards. Each thread is separately Responsible for cleaning the designated tables in the corresponding Shard, and running multiple servers in parallel to distribute tasks for cleaning. Through speed control, efficiency is ensured without affecting the load of the production database.

Regarding the second point, after all application interface middleware and all tables have been double-written, the existing order data can be repaired by adjusting the start timestamp of the online synchronization job scan. When repairing, special attention should be paid to the fact that the scanned data must be processed in slices according to time periods to prevent too much data from being loaded, causing the order database server CPU to be too high.

5. Some differences in database features

If we want to perform live migration of databases in a huge system, we must have a deep understanding of the similarities and differences between different databases in order to be effective Solve the problem. Although MySQL and SQL Server are both popular relational databases and both support standardized SQL queries, there are still some differences in details. Let’s take a closer look at the problems faced during migration.

1) Auto-increment key problem

In order to avoid even greater risks of data repair caused by inconsistent auto-increment serial numbers, you should ensure that the two databases share the same auto-increment serial number. . Therefore, each should not be allowed to perform auto-increment operations. Therefore, when data is double-written, we write the auto-increment id generated by SQLServer back into the MySQL auto-increment column. When data is written to MySQL alone, MySQL is used to directly generate the auto-increment id value.

2) Date accuracy issue

In order to ensure data consistency after double writing, the data on both sides must be consistent checked, the types are Date, DateTime, Timestamp Due to the inconsistent storage accuracy of the fields, special processing is required during comparison, and the data is intercepted to seconds for comparison.

3) XML field problem

SQL Server supports the XML data type, but MySQL 5.7 does not support the XML type. After using varchar(4000) instead, I encountered a case where the MySQL data writing failed, but the synchronization job could write the SQLServer data back to MySQL normally. After analysis, the program will write uncompressed XML strings when writing. The SQLServer XML type will automatically compress and store it, but MySQL will not. As a result, the write operation with a length exceeding 4000 will fail, and the length after SQLServer compression is less than 4000. , and can write back to MySQL normally. To this end, we propose countermeasures, including compressing and verifying the length before writing, intercepting non-important fields before storing them, optimizing the storage structure of important fields or changing field types.

The following lists some common points to note during the migration process.

5. Early Warning Practice

Our early warning practice is not limited to monitoring requirements during project advancement. How to scan data periodically in tens of billions of data Abnormalities in writing, review of the consistency rate of double-write data during the completion of the project, how to real-time monitor and early warning the normal trend of order writing volume on each shard of the order library, and how to regularly accept/verify the high availability of the entire system will be discussed in the following pages. describe.

1. Tens of billions of data difference verification warnings

To meet the requirements of order data migration from SQLServer to MySQL database, data quality is a necessary condition for migration. If data consistency does not meet the requirements, it will not be transparent. Migration, so designing a reasonable verification plan is related to the progress of migration. For data verification, we divide it into two types: online and offline:

Online data verification and early warning

During the migration, we synchronized the job, calculated the inconsistent data, wrote the inconsistent tables and fields into ElasticSearch, and then used Kibana to create a monitoring dashboard of the amount of inconsistent data and the proportion of inconsistent tables. , through the monitoring dashboard, we can monitor in real time which tables have high data inconsistencies, and then use DBA tools to find out which applications have performed CUD operations on the tables based on the table names, and further locate applications and codes that have missed middleware.

In actual operations, we did find a large number of applications that were not connected to the middleware and transformed them. As more and more applications are connected to the middleware, data consistency gradually improves. From the monitoring dashboard The amount of inconsistency seen is also slowly decreasing. However, the consistency has never been reduced to zero. The reason is caused by the concurrency of applications and synchronization jobs. This is also the most troublesome problem.

Perhaps some students may wonder, since double writing, why not stop the synchronization job? The reason is that SQL Server is the main writing method and the CUD range covered by the middleware is used as the benchmark. In addition to not guaranteeing 100% success in writing data to MySQL, there is no guarantee that the amount of data in the two databases is equal, so a consistent job is required. . Although the data cannot be completely consistent, inconsistencies can be further reduced through concurrent processing.

Our approach is to set a 5-second stable line when comparing consistency jobs (that is, data within 5 seconds of the current time is regarded as unstable data). If the order data timestamp is not within the stable line, When comparing outside the stable line, the order data will be calculated again to see if it is within the stable line. If it is confirmed that all the data is outside the stable line, the comparison operation will be performed. Otherwise, the comparison will be abandoned and the consistency calibration will be performed in the next schedule. test.

Offline data verification and early warning

The migration of the order database involves hundreds of tables and a large amount of offline data. The amount of order-related data in just one year reaches billions, which brings considerable challenges to offline data inspection. We wrote a data consistency script generator to generate a comparison script for each table and deploy it to the scheduling platform. The comparison script relies on the synchronization job on both sides of the upstream SQLServer and MySQL. After the upstream job is executed, the data comparison is automatically performed to compare the inconsistent data. The order number is written into the detailed table, and the amount of inconsistency is calculated based on the detailed table, which is issued in the form of a daily report. Tables with high data inconsistency are checked and resolved every day.

We typically continually troubleshoot and resolve inconsistencies, including fixing problems in comparison scripts and checking the quality of offline data. The verification of each field in each table of offline data is very complicated. We write a UDF function for comparison. The function of the UDF function is also very simple. It is to splice the non-primary key fields of each table to generate a new field. The tables on both sides When performing a full outer join, records with equal primary keys or logical primary keys should also generate new fields. As long as they are different, they will be regarded as inconsistent data. Here we should pay attention to date field interception, data accuracy and the processing of decimals with zeros at the end.

After more than three months of hard work, we identified all applications that were not connected to the middleware and connected all their CUD operations to the middleware. After turning on dual-write, the consistency of online and offline data gradually improved, reaching The goal of migrating data.

2. ALL Shard real-time total order volume monitoring

Every company is indispensable for monitoring order volume. Ctrip has a unified early warning platform Sitemon, which mainly monitors various order alarms , including hotels, air tickets, wireless, high-speed rail, and vacation. The system has the function of independent search and display based on online/offline, domestic/international and payment methods, as well as alerts for all types of orders.

During the migration of order data from SQL Server to MySQL, we sorted out nearly two hundred early warning strategies that relied on the order database. The relevant colleagues responsible for monitoring made a copy of the early warning strategies of the SQL Server data source and connected to the MySQL data source. . After all the monitoring alarms using MySQL as the data source are added, enable the alarm strategy. Once the order volume is abnormal, NOC will receive two notifications, one from SQLServer data alarm and one from MySQL alarm. If both sides are consistent, it means grayscale Verification passed. Otherwise, it fails and the MySQL monitoring problem needs to be investigated.

After a period of grayscale verification, the alarm data on both sides are consistent. As the SQLServer data table goes offline (that is, MySQL data is written alone), the early warning strategy using SQLServer as the data source also goes offline in time.

3. "Wandering Earth" Practical Operation

To ensure system security and improve the ability to respond to emergencies, necessary drills and stress tests must be conducted. To this end, we have developed a complete emergency plan and regularly organize emergency drills - The Wandering Earth. The drill items include core/non-core application circuit breaker, DB circuit breaker, Redis circuit breaker, core firewall, switch emergency switching, etc.

Take caching as an example. In order to ensure the high availability of the cache service, we will offline some nodes or machines or even cut off the entire Redis service during the drill to simulate cache avalanche, cache breakdown and other scenarios. According to the plan, before fusing, we will first cut off the application's Redis access, gradually reduce the Redis load, and then fusing Redis to test whether each application system can operate normally without Redis.

In the first drill, when Redis was disconnected, the number of application errors increased sharply, so we decisively stopped the drill and rolled back while looking for the cause of the problem. Since the Redis operations of some applications are not uniformly managed and are not controlled by middleware, when Redis is blown, the application immediately becomes abnormal. In response to this situation, we analyzed and connected the order cache access port of the error reporting application to the middleware. On the other hand, we strengthened the weak dependence between the middleware and Redis, supported one-click disconnection of Redis operations, and improved various Metric monitoring. In the second drill, the Redis circuit breaker was successful and all business systems ran normally with full traffic access to MySQL. In the latest Wandering Earth exercise, after rounds of fault injection such as computer room network blocking and non-core application blocking, our system achieved very good expected results.

In this way, in drill after drill, we discovered problems, summarized experience, optimized the system, improved emergency plans, step by step improved the system's ability to cope with sudden failures, and ensured business continuity and data integrity. sex. Provide underlying data support to protect the entire hotel order system.

6. Future planning

1. Order cache manual control desk

Although we have a complete monitoring board and early warning system, for things like circuit breaker drills, automated fault drills, Hardware failures and maintenance, as well as unpredictable problems in advance, if the core developers fail to respond to the operation on site in time, the system cannot fully autonomously degrade, which may lead to some performance degradation, such as increased response time, etc. In the future, we plan to add manual control dashboards. After authorization, NOC or TS can be allowed to perform targeted operations. For example, if all or part of the Redis cluster is down, the faulty Redis shards can be cut with one click, or based on the planned unavailability time period of Redis. Setting the cutting time in advance can ensure the controllability of the system to the greatest extent.

2. Automatic downgrade of middleware

Since manual control can be performed, we also consider monitoring some core indicators in the future. For example, during Redis master-slave switching, the normal situation is at the second level. However, we have also experienced situations where some Redis cannot be written for more than 10 seconds. At this time, we can monitor the amount of dirty data that is inconsistent between the cache and the database. We can also apply some strategies by monitoring the abnormal response time threshold when Redis fails, so that The middleware automatically downgrades and cuts off these faulty hosts to ensure the basic stability of the service, and then gradually tries to recover after detecting that the cluster indicators are stable.

3. Middleware access to Service Mesh

The current order team uses middleware in the form of JAR. The middleware shields the underlying differences in the database and operates Redis to achieve more complex functions. , naturally has the ability to access Service Mesh. After access, the underlying upgrade will be faster and less intrusive, the call will be lighter, the grid integration with the framework will be better, and the cloud will be more convenient, which can better support Ctrip's internationalization strategy. Target.

The above is the detailed content of How to migrate SQL Server sensorless system to MySQL. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL is an open source relational database management system. 1) Create database and tables: Use the CREATEDATABASE and CREATETABLE commands. 2) Basic operations: INSERT, UPDATE, DELETE and SELECT. 3) Advanced operations: JOIN, subquery and transaction processing. 4) Debugging skills: Check syntax, data type and permissions. 5) Optimization suggestions: Use indexes, avoid SELECT* and use transactions.

How to open phpmyadmin

Apr 10, 2025 pm 10:51 PM

How to open phpmyadmin

Apr 10, 2025 pm 10:51 PM

You can open phpMyAdmin through the following steps: 1. Log in to the website control panel; 2. Find and click the phpMyAdmin icon; 3. Enter MySQL credentials; 4. Click "Login".

MySQL: An Introduction to the World's Most Popular Database

Apr 12, 2025 am 12:18 AM

MySQL: An Introduction to the World's Most Popular Database

Apr 12, 2025 am 12:18 AM

MySQL is an open source relational database management system, mainly used to store and retrieve data quickly and reliably. Its working principle includes client requests, query resolution, execution of queries and return results. Examples of usage include creating tables, inserting and querying data, and advanced features such as JOIN operations. Common errors involve SQL syntax, data types, and permissions, and optimization suggestions include the use of indexes, optimized queries, and partitioning of tables.

How to use single threaded redis

Apr 10, 2025 pm 07:12 PM

How to use single threaded redis

Apr 10, 2025 pm 07:12 PM

Redis uses a single threaded architecture to provide high performance, simplicity, and consistency. It utilizes I/O multiplexing, event loops, non-blocking I/O, and shared memory to improve concurrency, but with limitations of concurrency limitations, single point of failure, and unsuitable for write-intensive workloads.

Why Use MySQL? Benefits and Advantages

Apr 12, 2025 am 12:17 AM

Why Use MySQL? Benefits and Advantages

Apr 12, 2025 am 12:17 AM

MySQL is chosen for its performance, reliability, ease of use, and community support. 1.MySQL provides efficient data storage and retrieval functions, supporting multiple data types and advanced query operations. 2. Adopt client-server architecture and multiple storage engines to support transaction and query optimization. 3. Easy to use, supports a variety of operating systems and programming languages. 4. Have strong community support and provide rich resources and solutions.

MySQL's Place: Databases and Programming

Apr 13, 2025 am 12:18 AM

MySQL's Place: Databases and Programming

Apr 13, 2025 am 12:18 AM

MySQL's position in databases and programming is very important. It is an open source relational database management system that is widely used in various application scenarios. 1) MySQL provides efficient data storage, organization and retrieval functions, supporting Web, mobile and enterprise-level systems. 2) It uses a client-server architecture, supports multiple storage engines and index optimization. 3) Basic usages include creating tables and inserting data, and advanced usages involve multi-table JOINs and complex queries. 4) Frequently asked questions such as SQL syntax errors and performance issues can be debugged through the EXPLAIN command and slow query log. 5) Performance optimization methods include rational use of indexes, optimized query and use of caches. Best practices include using transactions and PreparedStatemen

MySQL and SQL: Essential Skills for Developers

Apr 10, 2025 am 09:30 AM

MySQL and SQL: Essential Skills for Developers

Apr 10, 2025 am 09:30 AM

MySQL and SQL are essential skills for developers. 1.MySQL is an open source relational database management system, and SQL is the standard language used to manage and operate databases. 2.MySQL supports multiple storage engines through efficient data storage and retrieval functions, and SQL completes complex data operations through simple statements. 3. Examples of usage include basic queries and advanced queries, such as filtering and sorting by condition. 4. Common errors include syntax errors and performance issues, which can be optimized by checking SQL statements and using EXPLAIN commands. 5. Performance optimization techniques include using indexes, avoiding full table scanning, optimizing JOIN operations and improving code readability.

Monitor Redis Droplet with Redis Exporter Service

Apr 10, 2025 pm 01:36 PM

Monitor Redis Droplet with Redis Exporter Service

Apr 10, 2025 pm 01:36 PM

Effective monitoring of Redis databases is critical to maintaining optimal performance, identifying potential bottlenecks, and ensuring overall system reliability. Redis Exporter Service is a powerful utility designed to monitor Redis databases using Prometheus. This tutorial will guide you through the complete setup and configuration of Redis Exporter Service, ensuring you seamlessly build monitoring solutions. By studying this tutorial, you will achieve fully operational monitoring settings