How to implement Redis data sharding

Introduction to Twemproxy

Twitter's Twemproxy is currently the most widely used on the market and is mostly used for redis cluster services. Since redis is single-threaded, and the official cluster is not very stable and widely used. Twemproxy is a proxy sharding mechanism. As a proxy, Twemproxy can accept access from multiple programs, forward it to each Redis server in the background according to routing rules, and then return to the original route. This solution well solves the problem of the carrying capacity of a single Redis instance. Of course, Twemproxy itself is also a single point and needs to use Keepalived as a high-availability solution (or LVS). Through Twemproxy, multiple servers can be used to horizontally expand the redis service, which can effectively avoid single points of failure. Although using Twemproxy requires more hardware resources and has a certain loss in redis performance (about 20% in the twitter test), it is quite cost-effective to improve the HA of the entire system. In fact, twemproxy not only implements the redis protocol, but also implements the memcached protocol. What does it mean? In other words, twemproxy can not only proxy redis, but also proxy memcached.

Advantages of Twemproxy:

1) Exposing an access node to the outside world reduces program complexity.

2) Supports automatic deletion of failed nodes. You can set the time to reconnect the node and delete the node after setting the number of connections. This method is suitable for cache storage, otherwise the key will be lost;

3) Support setting HashTag. Through HashTag, you can set two KEYhash to the same instance.

4) Multiple hash algorithms, and the weight of the backend instance can be set.

5) Reduce the number of direct connections with redis: maintain a long connection with redis, set the number of each redis connection between the agent and the backend, and automatically shard it to multiple redis instances on the backend.

6) Avoid single point problems: Multiple proxy layers can be deployed in parallel, and the client automatically selects the available one.

7) High throughput: connection multiplexing, memory multiplexing, multiple connection requests, composed of redis pipelining and unified requests to redis.

Disadvantages of Twemproxy:

1) It does not support operations on multiple values, such as taking the subintersection and complement of sets, etc.

2) Redis transaction operations are not supported.

3) The applied memory will not be released. All machines must have large memory and need to be restarted regularly, otherwise client connection errors will occur.

4) Dynamic addition and deletion of nodes is not supported, and a restart is required after modifying the configuration.

5) When changing nodes, the system will not reallocate existing data. If you do not write your own script for data migration, some keys will be lost (the key itself exists on a certain redis, but the key has been hashed) other nodes, causing "loss").

6) The weight directly affects the hash result of the key. Changing the node weight will cause some keys to be lost.

7) By default, Twemproxy runs in a single thread, but most companies that use Twemproxy will conduct secondary development on their own and change it to multi-threading.

Generally speaking, twemproxy is still very reliable. Although there is a loss in performance, it is still relatively worthwhile. It has been tested for a long time and is widely used. For more detailed information, please refer to the official documentation. In addition, twemproxy is only suitable for static clusters and is not suitable for scenarios that require dynamic addition and deletion of nodes and manual load adjustment. If we use it directly, we need to do development and improvement work. https://github.com/wandoulabs/codis This system is based on twemproxy and adds functions such as dynamic data migration. The specific usage requires further testing.

Twemproxy usage architecture

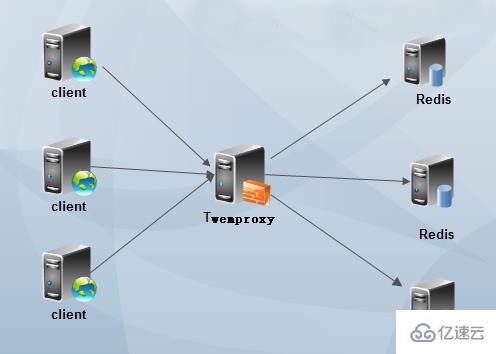

First type: single-node Twemproxy

ps: Saves hardware resources, but is prone to single points of failure.

Second type: Highly available Twemproxy

PS: One-half of the resources are wasted, but the node is highly available.

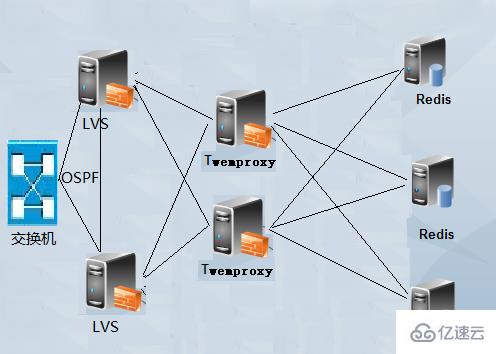

Third type: Load balancing Twemproxy

PS: If you are a large-scale Redis or Memcached application scenario, you can do Twemproxy load balancing scenario , that is, adding LVS nodes on the basis of high-availability Twemproxy, and using LVS (Linux virtual server) to perform load balancing of Twemproxy. LVS is a four-layer load balancing technology and has very powerful proxy capabilities. For details, please see the LVS chapter of this blog. introduce. But when you use LVS, the problem of Twemproxy and single point of failure arises. At this time, you need to make high availability for LVS. Using OSPF routing technology, LVS can also achieve load balancing. And this architecture is the architecture I am currently using in my work.

In addition, no matter which of the above architecture methods is used, Redis's single point of failure problem cannot be avoided, and Redis persistence cannot avoid hardware failure problems. If you must ensure the uninterruptibility of Redis data access, then you should use Redis cluster mode. Cluster mode currently has good support for JAVA and is widely used in work.

Install Twemproxy

1. Download Twemproxy

Please rewrite the following statement into one sentence: Clone the twemproxy repository to your local machine using the following command: git clone https://github.com/twitter/twemproxy.git After rewriting: Use the following command on your local computer: git clone https://github.com/twitter/twemproxy.git to clone the twemproxy repository

2. Install Twemproxy

Twemproxy needs to use Autoconf for compilation and configuration. GNU Autoconf is a tool for creating configuration scripts for compiling, installing and packaging software under the Bourne shell. Autoconf is not restricted by programming languages and is commonly used in C, C++, Erlang and Objective-C. A configure script controls the installation of a software package on a specific system. By running a series of tests, the configure script generates the makefile and header files from the template and adjusts the package as needed to make it suitable for the specific system. Autoconf, along with Automake and Libtool, form the GNU Build System. Autoconf was written by David McKenzie in the summer of 1991 to support his programming work at the Free Software Foundation. Autoconf has incorporated improved code written by multiple people and has become the most widely used free compilation and configuration software.

Let’s start using autoreconf to compile and configure twemproxy:

[root@www twemproxy]# autoreconfconfigure.ac:8: error: Autoconf version 2.64 or higher is required configure.ac:8: the top level autom4te: /usr/bin/m4 failed with exit status: 63 aclocal: autom4te failed with exit status: 63 autoreconf: aclocal failed with exit status: 63 [root@www twemproxy]# autoconf --versionautoconf (GNU Autoconf) 2.63

It prompts that the version of autoreconf is too low. The autoconf 2.63 version is used above, so let’s download the autoconf 2.69 version for compilation and installation. Note that if you are CentOS6, then your default version is 2.63. If you are CentOS7, then your default version should be 2.69. If you are Debian8 or Ubuntu16, then your default version should also be 2.69. Anyway, if an error is reported when executing autoreconf, it means that the version is old and needs to be compiled and installed.

Compile and install Autoconf

[root@www ~]# wget http://ftp.gnu.org/gnu/autoconf/autoconf-2.69.tar.gz[root@www ~]# tar xvf autoconf-2.69.tar.gz[root@www ~]# cd autoconf-2.69[root@www autoconf-2.69]# ./configure --prefix=/usr[root@www autoconf-2.69]# make && make install[root@www autoconf-2.69]# autoconf --versionautoconf (GNU Autoconf) 2.69

Compile and install Twemproxy

[root@www ~]# cd /root/twemproxy/[root@www twemproxy]# autoreconf -fvi[root@www twemproxy]# ./configure --prefix=/etc/twemproxy CFLAGS="-DGRACEFUL -g -O2" --enable-debug=full[root@www twemproxy]# make && make install

If autoreconf -fvi reports the following error, you need to install the libtool tool and need to rely on libtool (if it is CentOS, use yum directly Just install libtool. If it is Debian, just use apt-get install libtool.)

autoreconf: Entering directory `.'

autoreconf: configure.ac: not using Gettext

autoreconf: running: aclocal --force -I m4

autoreconf: configure.ac: tracing

autoreconf: configure.ac: adding subdirectory contrib/yaml-0.1.4 to autoreconf

autoreconf: Entering directory `contrib/yaml-0.1.4'autoreconf: configure.ac: not using Autoconf

autoreconf: Leaving directory `contrib/yaml-0.1.4'

autoreconf: configure.ac: not using Libtool

autoreconf: running: /usr/bin/autoconf --force

configure.ac:36: error: possibly undefined macro: AC_PROG_LIBTOOL

If this token and others are legitimate, please use m4_pattern_allow.

See the Autoconf documentation.

autoreconf: /usr/bin/autoconf failed with exit status: 1Twemproxy adds configuration file

[root@www twemproxy]# mkdir /etc/twemproxy/conf[root@www twemproxy]# cat /etc/twemproxy/conf/nutcracker.ymlredis-cluster: listen: 0.0.0.0:22122 hash: fnv1a_64 distribution: ketama timeout: 400 backlog: 65535 preconnect: true redis: true server_connections: 1 auto_eject_hosts: true server_retry_timeout: 60000 server_failure_limit: 3 servers: - 172.16.0.172:6546:1 redis01 - 172.16.0.172:6547:1 redis02

Configuration option introduction:

redis-cluster: Give this configuration segment a name, there can be multiple configuration segments;

listen: Set monitoring IP and port;

hash: specific hash function, supports md5, crc16, crc32, finv1a_32, fnv1a_64, hsieh, murmur, jenkins, etc. More than ten kinds, generally choose fnv1a_64 , the default is also fnv1a_64;

Rewrite: Use the hash_tag function to calculate the hash value of the key based on a certain part of the key. Hash_tag consists of two characters, one is the beginning of hash_tag, and the other is the end of hash_tag. Between the beginning and end of hash_tag is the part that will be used to calculate the hash value of the key, and the calculated result will be used to select the server. For example: if hash_tag is defined as "{}", then the hash values with key values of "user:{user1}:ids" and "user:{user1}:tweets" are based on "user1" and will eventually be mapped to Same server. And "user:user1:ids" will use the entire key to calculate the hash, which may be mapped to different servers.

Distribution: Specify the hash algorithm. This hash algorithm determines how the hashed keys above are distributed on multiple servers. The default is "ketama" consistent hashing. ketama: The ketama consistent hash algorithm will construct a hash ring based on the server and allocate hash ranges to the nodes on the ring. The advantage of ketama is that after a single node is added or deleted, the cached key values in the entire cluster can be reused to the greatest extent. Modula: Modula is very simple. It takes the modulo based on the hash value of the key value and selects the corresponding server based on the modulo result. Random: random means that no matter what the hash of the key value is, a server is randomly selected as the target of the key value operation.

timeout: Set the timeout of twemproxy. When timeout is set, if no response is received from the server after the timeout, the timeout error message SERVER_ERROR Connection time out will be sent to the client

Backlog: The length of the listening TCP backlog (connection waiting queue), the default is 512.

preconnect: Specify whether twemproxy will establish connections with all redis when the system starts. The default is false, a Boolean value;

redis: Specify whether this configuration section should be used as a proxy for Redis. If redis is true without adding redis, you can act as a proxy for the memcached cluster (this is the only difference between Twemproxy as a redis or memcached cluster proxy);

redis_auth: If your backend Redis has authentication turned on, then You need to specify the authentication password for redis_auth;

server_connections: The number of connections between twemproxy and each redis server. The default is 1. If it is greater than 1, user commands may be sent to different connections, which may cause the actual execution of the command. The order is inconsistent with the one specified by the user (similar to concurrency);

auto_eject_hosts: Whether to eject the node when it cannot respond. The default is true, but it should be noted that after the node is ejected, because the number of machines decreases, the machine hash position changes. , will cause some keys to fail to hit, but if the program connection is not eliminated, an error will be reported;

server_retry_timeout: Controls the time interval for server connection, in milliseconds. It takes effect when auto_eject_host is set to true. The default is 30000 millisecond;

server_failure_limit:Redis连续超时的次数,超过这个次数就视其为无法连接,如果auto_eject_hosts设置为true,那么此Redis会被移除;

servers:一个pool中的服务器的地址、端口和权重的列表,包括一个可选的服务器的名字,如果提供服务器的名字,将会使用它决定server的次序,从而提供对应的一致性hash的hash ring。否则,将使用server被定义的次序,可以通过两种字符串格式指定’host:port:weight’或者’host:port:weight name’。一般都是使用第二种别名的方式,这样当其中某个Redis节点出现问题时,可以直接添加一个新的Redis节点但服务器名字不要改变,这样twemproxy还是使用相同的服务器名称进行hash ring,所以其他数据节点的数据不会出现问题(只有挂点的机器数据丢失)。

PS:要严格按照Twemproxy配置文件的格式来,不然就会有语法错误;另外,在Twemproxy的配置文件中可以同时设置代理Redis集群或Memcached集群,只需要定义不同的配置段即可。

启动twemproxy (nutcracker)

刚已经加好了配置文件,现在测试下配置文件:

[root@www twemproxy]# /etc/twemproxy/sbin/nutcracker -tnutcracker: configuration file 'conf/nutcracker.yml' syntax is ok

说明配置文件已经成功,现在开始运行nutcracker:

[root@www ~]# /etc/twemproxy/sbin/nutcracker -c /etc/twemproxy/conf/nutcracker.yml -p /var/run/nutcracker.pid -o /var/log/nutcracker.log -d选项说明: -h, –help #查看帮助文档,显示命令选项;-V, –version #查看nutcracker版本;-c, –conf-file=S #指定配置文件路径 (default: conf/nutcracker.yml);-p, –pid-file=S #指定进程pid文件路径,默认关闭 (default: off);-o, –output=S #设置日志输出路径,默认为标准错误输出 (default: stderr);-d, –daemonize #以守护进程运行;-t, –test-conf #测试配置脚本的正确性;-D, –describe-stats #打印状态描述;-v, –verbosity=N #设置日志级别 (default: 5, min: 0, max: 11);-s, –stats-port=N #设置状态监控端口,默认22222 (default: 22222);-a, –stats-addr=S #设置状态监控IP,默认0.0.0.0 (default: 0.0.0.0);-i, –stats-interval=N #设置状态聚合间隔 (default: 30000 msec);-m, –mbuf-size=N #设置mbuf块大小,以bytes单位 (default: 16384 bytes);

PS:一般在生产环境中,都是使用进程管理工具来进行twemproxy的启动管理,如supervisor或pm2工具,避免当进程挂掉的时候能够自动拉起进程。

验证是否正常启动

[root@www ~]# ps aux | grep nutcrackerroot 20002 0.0 0.0 19312 916 ? Sl 18:48 0:00 /etc/twemproxy/sbin/nutcracker -c /etc/twemproxy/conf/nutcracker.yml -p /var/run/nutcracker.pid -o /var/log/nutcracker.log -d root 20006 0.0 0.0 103252 832 pts/0 S+ 18:48 0:00 grep nutcracker [root@www ~]# netstat -nplt | grep 22122tcp 0 0 0.0.0.0:22122 0.0.0.0:* LISTEN 20002/nutcracker

Twemproxy代理Redis集群

这里我们使用第一种方案在同一台主机上测试Twemproxy代理Redis集群,一个twemproxy和两个Redis节点(想添加更多的也可以)。Twemproxy就是用上面的配置了,下面只需要增加两个Redis节点。

安装配置Redis

在安装Redis之前,需要安装Redis的依赖程序tcl,如果不安装tcl在Redis执行make test的时候就会报错的哦。

[root@www ~]# yum install -y tcl[root@www ~]# wget https://github.com/antirez/redis/archive/3.2.0.tar.gz[root@www ~]# tar xvf 3.2.0.tar.gz -C /usr/local[root@www ~]# cd /usr/local/[root@www local]# mv redis-3.2.0 redis[root@www local]# cd redis[root@www redis]# make[root@www redis]# make test[root@www redis]# make install

配置两个Redis节点

[root@www ~]# mkdir /data/redis-6546[root@www ~]# mkdir /data/redis-6547[root@www ~]# cat /data/redis-6546/redis.confdaemonize yes pidfile /var/run/redis/redis-server.pid port 6546bind 0.0.0.0 loglevel notice logfile /var/log/redis/redis-6546.log [root@www ~]# cat /data/redis-6547/redis.confdaemonize yes pidfile /var/run/redis/redis-server.pid port 6547bind 0.0.0.0 loglevel notice logfile /var/log/redis/redis-6547.log

PS:简单提供两个Redis配置文件,如果开启了Redis认证,那么在twemproxy中也需要填写Redis密码。

启动两个Redis节点

[root@www ~]# /usr/local/redis/src/redis-server /data/redis-6546/redis.conf[root@www ~]# /usr/local/redis/src/redis-server /data/redis-6547/redis.conf[root@www ~]# ps aux | grep redisroot 23656 0.0 0.0 40204 3332 ? Ssl 20:14 0:00 redis-server 0.0.0.0:6546 root 24263 0.0 0.0 40204 3332 ? Ssl 20:16 0:00 redis-server 0.0.0.0:6547

验证Twemproxy读写数据

首先twemproxy配置项中servers的主机要配置正确,然后连接Twemproxy的22122端口即可测试。

[root@www ~]# redis-cli -p 22122127.0.0.1:22122> set key vlaue OK 127.0.0.1:22122> get key"vlaue"127.0.0.1:22122> FLUSHALL Error: Server closed the connection 127.0.0.1:22122> quit

上面我们set一个key,然后通过twemproxy也可以获取到数据,一切正常。但是在twemproxy中使用flushall命令就不行了,不支持。

然后我们去找分别连接两个redis节点,看看数据是否出现在某一个节点上了,如果有,就说明twemproxy正常运行了。

[root@www ~]# redis-cli -p 6546127.0.0.1:6546> get key (nil) 127.0.0.1:6546>

由上面的结果我们可以看到,数据存储到6547节点上了。目前没有很好的办法明确知道某个key存储到某个后端节点了。

如何Reload twemproxy?

Twemproxy没有为启动提供脚本,只能通过命令行参数启动。所以,无法使用对twemproxy进行reload的操作,在生产环境中,一个应用无法reload(重载配置文件)是一个灾难。当你对twemproxy进行增删节点时如果直接使用restart的话势必会影响线上的业务。所以最好的办法还是reload,既然twemproxy没有提供,那么可以使用kill命令带一个信号,然后跟上twemproxy主进程的进行号即可。

kill -SIGHUP PID

注意,PID就是twemproxy master进程。

The above is the detailed content of How to implement Redis data sharding. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

How to build the redis cluster mode

Apr 10, 2025 pm 10:15 PM

How to build the redis cluster mode

Apr 10, 2025 pm 10:15 PM

Redis cluster mode deploys Redis instances to multiple servers through sharding, improving scalability and availability. The construction steps are as follows: Create odd Redis instances with different ports; Create 3 sentinel instances, monitor Redis instances and failover; configure sentinel configuration files, add monitoring Redis instance information and failover settings; configure Redis instance configuration files, enable cluster mode and specify the cluster information file path; create nodes.conf file, containing information of each Redis instance; start the cluster, execute the create command to create a cluster and specify the number of replicas; log in to the cluster to execute the CLUSTER INFO command to verify the cluster status; make

How to clear redis data

Apr 10, 2025 pm 10:06 PM

How to clear redis data

Apr 10, 2025 pm 10:06 PM

How to clear Redis data: Use the FLUSHALL command to clear all key values. Use the FLUSHDB command to clear the key value of the currently selected database. Use SELECT to switch databases, and then use FLUSHDB to clear multiple databases. Use the DEL command to delete a specific key. Use the redis-cli tool to clear the data.

How to read redis queue

Apr 10, 2025 pm 10:12 PM

How to read redis queue

Apr 10, 2025 pm 10:12 PM

To read a queue from Redis, you need to get the queue name, read the elements using the LPOP command, and process the empty queue. The specific steps are as follows: Get the queue name: name it with the prefix of "queue:" such as "queue:my-queue". Use the LPOP command: Eject the element from the head of the queue and return its value, such as LPOP queue:my-queue. Processing empty queues: If the queue is empty, LPOP returns nil, and you can check whether the queue exists before reading the element.

How to use the redis command

Apr 10, 2025 pm 08:45 PM

How to use the redis command

Apr 10, 2025 pm 08:45 PM

Using the Redis directive requires the following steps: Open the Redis client. Enter the command (verb key value). Provides the required parameters (varies from instruction to instruction). Press Enter to execute the command. Redis returns a response indicating the result of the operation (usually OK or -ERR).

How to use redis lock

Apr 10, 2025 pm 08:39 PM

How to use redis lock

Apr 10, 2025 pm 08:39 PM

Using Redis to lock operations requires obtaining the lock through the SETNX command, and then using the EXPIRE command to set the expiration time. The specific steps are: (1) Use the SETNX command to try to set a key-value pair; (2) Use the EXPIRE command to set the expiration time for the lock; (3) Use the DEL command to delete the lock when the lock is no longer needed.

How to read the source code of redis

Apr 10, 2025 pm 08:27 PM

How to read the source code of redis

Apr 10, 2025 pm 08:27 PM

The best way to understand Redis source code is to go step by step: get familiar with the basics of Redis. Select a specific module or function as the starting point. Start with the entry point of the module or function and view the code line by line. View the code through the function call chain. Be familiar with the underlying data structures used by Redis. Identify the algorithm used by Redis.

How to solve data loss with redis

Apr 10, 2025 pm 08:24 PM

How to solve data loss with redis

Apr 10, 2025 pm 08:24 PM

Redis data loss causes include memory failures, power outages, human errors, and hardware failures. The solutions are: 1. Store data to disk with RDB or AOF persistence; 2. Copy to multiple servers for high availability; 3. HA with Redis Sentinel or Redis Cluster; 4. Create snapshots to back up data; 5. Implement best practices such as persistence, replication, snapshots, monitoring, and security measures.

How to use the redis command line

Apr 10, 2025 pm 10:18 PM

How to use the redis command line

Apr 10, 2025 pm 10:18 PM

Use the Redis command line tool (redis-cli) to manage and operate Redis through the following steps: Connect to the server, specify the address and port. Send commands to the server using the command name and parameters. Use the HELP command to view help information for a specific command. Use the QUIT command to exit the command line tool.