Double-write consistency between Redis and MySQL refers to scenarios where cache and database are used to store data at the same time (mainly when there is high concurrency) , How to ensure the data consistency between the two (the content is the same or as close as possible).

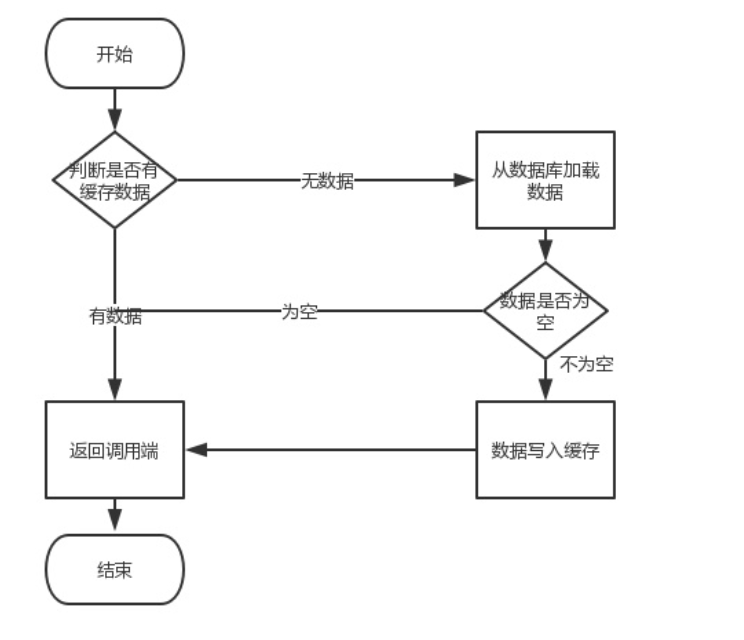

Normal business process:

Reading is not a problem, the problem lies in the write operation (update). Several problems may arise at this time. We need to update the database first and then perform caching operations. When dealing with the cache, you should consider whether to update the cache or delete the cache, or update the cache first and then update the database.

To summarize, should you operate the cache first and then the database, or operate the database first and then the cache?

Let’s continue with these questions.

First let’s talk about the operation cache, which includes two types: update cache and delete cache. How to choose?

Assume that the database is updated first (because operating the cache first and then operating the database is a big problem, which will be discussed later)

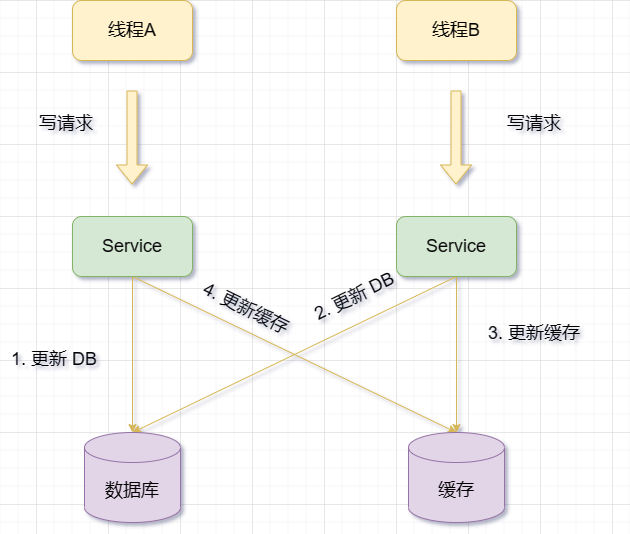

Update cache

Update the database first, then update the cache.

When two requests modify the same data at the same time, old data may exist in the cache because their order may be reversed. Subsequent read requests will read old data, and only when the cache is invalidated can the correct value be obtained from the database.

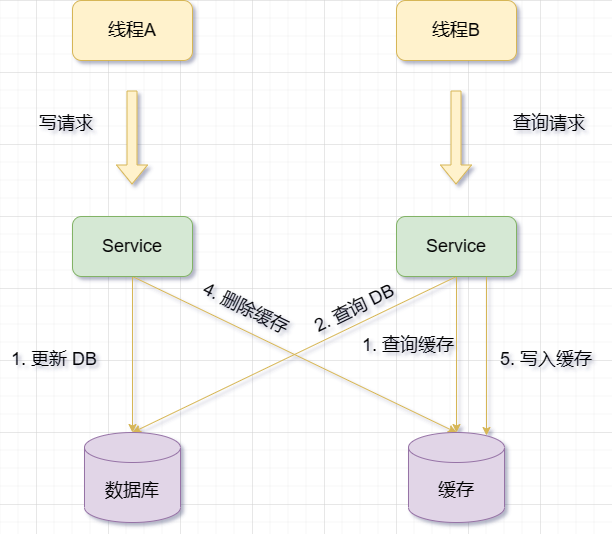

Delete cache

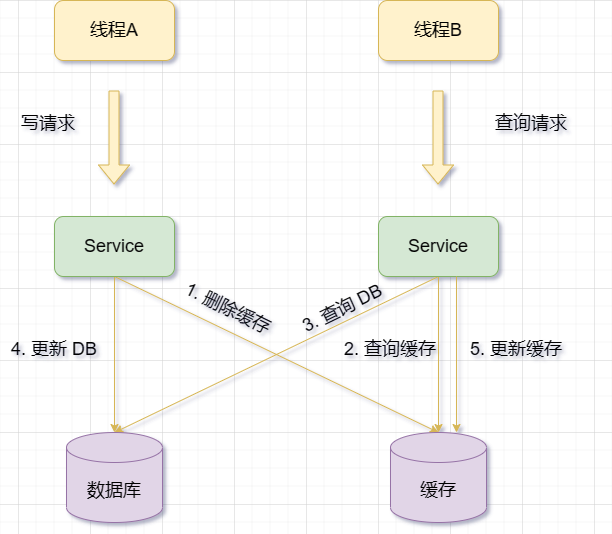

Update the database first, then delete the cache.

When the cache fails, request B may query data from the database and obtain the old value. At this time, A is requested to update the database, write the new value to the database, and delete the cache. And request B writes the old value into the cache, resulting in dirty data

#It can be seen from the above that the requirements for dirty data are more than the requirements for updating the cache, and the following must be met Several conditions:

Cache invalidation

##Concurrency of read requests and write requests

The time to update the database and delete the cache is shorter than the time to read the database and write the cache

Note: I don’t really understand this. Simply speaking, the probability of occurrence is low, but if there is a network delay, etc., won’t it also happen? I hope someone with good intentions can clear up the confusion, but I don’t understand it anyway.Therefore, when choosing to delete the cache, you need to combine other technologies to optimize performance and consistency. For example:

ComparisonIn the update cache, the cache is updated every time, but the data in the cache may not be

read immediately Taking will result in a lot of infrequently accessed data being stored in the cache, which wastes cache resources. And in many cases, the value written to the cache does not correspond one-to-one with the value in the database. It is very likely that the database is first queried, and then a value is obtained through a series of "calculations" before the value is written. into the cache.

It can be seen that thisupdate cache scheme not only has low cache utilization, but also causes a waste of machine performance. So we generally consider Deleting the cache

Update the cache first and then update the databaseWhen updating the data,But there are some problems:Write the new data to the cache first (Redis ), and then write the new data into the database (MySQL)

The cache update is successful, but The database update failed, resulting in data inconsistency

Example: The user modified his nickname. The system first writes the new nickname into the cache and then updates it. database. However, during the process of updating the database, abnormal situations such as network failure or database downtime occurred, resulting in the nickname in the database not being modified. In this way, the nickname in the cache will be inconsistent with the nickname in the database.

The cache update is successful, but the database update is delayed, causing other requests to read old data

Example: When the user places an order for a product, the system first writes the order status into the cache and then updates the database. However, during the process of updating the database, due to large concurrency or other reasons, the writing speed of the database is slower than the writing speed of the cache. In this way, other requests will read the order status as paid from the cache, but read the order status as unpaid from the database.

The cache update was successful, but other requests queried the cache and database before the database was updated, and the old data was written back to the cache, overwriting the new data

Example: User A modified his avatar and uploaded it to the server. The system first writes the new avatar address into the cache and returns it to user A for display. Then update the new avatar address to the database. But during this process, user B visited user A's personal homepage and read the new avatar address from the cache. Cache invalidation may be due to the cache expiration policy or other reasons, such as a restart operation, causing the cache to be cleared or expired. At this time, user B visits user A's personal homepage again, reads the old avatar address from the database, and writes it back to the cache. This may cause the avatar address in the cache to not match the address in the database.

A lot has been said above, but the summary is that the cache update was successful, but the database was not updated (update failed) , resulting in the cache storing the latest value and the data inventory storing the old value. value. If the cache fails, the old value in the database will be obtained.

I also got confused later. Since the problem was caused by the failure of database update, can I solve the problem of data inconsistency as long as I ensure that the database update is successful? When the database update fails, Keep retrying to update the database until the database update is completed.

Later I found that I was too naive, and there were many problems, such as:

If the reason for the database update failure is database downtime or network failure, then you keep Retrying to update the database may cause more stress and delays, or even make database recovery difficult.

If the reason for database update failure is data conflict or business logic error, then your constant retrying to update the database may cause data loss or data confusion, and even affect other users' data. .

If you keep retrying to update the database, then you need to consider how to ensure the idempotence and order of retries, and how to handle exceptions that occur during the retry process.

So, this method is not a good solution.

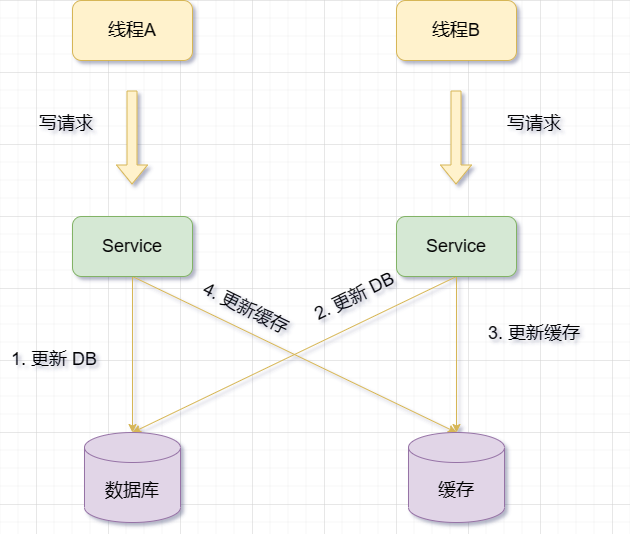

When there is an update operation, update the database data first, and then update the corresponding cache data

However, this solution also has some problems and risks, such as:

If the database is updated successfully, but the cache update fails, the old data will be retained in the cache. There is already new data in the database, that is, dirty data.

If another request queries the same data between updating the database and updating the cache, and the cache is found to exist, the old data will be read from the cache. This will also cause inconsistency between the cache and the database.

Therefore, when using the update cache operation, No matter who comes first or who comes last, if an exception occurs in the latter, it will have an impact on the business. (Still the picture above)

So how to handle exceptions to ensure data consistency

The source of these problems is caused by multi-thread concurrency, so the simplest method is to add a lock (distributed lock). If two threads want to modify the same data, each thread must apply for a distributed lock before making changes. Only the thread that has obtained the lock is allowed to update the database and cache. The thread that cannot obtain the lock will return failure and wait for the next retry. . The reason for this is to limit only one thread to operating data and cache to prevent concurrency issues.

But locking is time-consuming and labor-intensive, so it is definitely not recommended. Moreover, every time the cache is updated, the data in the cache may not be read immediately. This may cause a lot of infrequently accessed data to be stored in the cache, which wastes cache resources. And in many cases, the value written to the cache does not correspond one-to-one with the value in the database. It is very likely that the database is first queried, and then a value is obtained through a series of "calculations" before the value is written. into the cache.

It can be seen that this scheme of updating the database and updating the cache not only has low cache utilization, but also causes a waste of machine performance.

So at this time we need to consider another option: Delete the cache

When When there is an update operation, delete the corresponding cache data first, and then update the database data

However, this solution also has some problems and risks, such as:

If the database update fails after deleting the cache, the cache will be lost and the data will need to be reloaded from the database during the next query, which increases database pressure and response time.

If between deleting the cache and updating the database, there are other requests for the same data and it is found that the cache does not exist, then the old data will be read from the database and Write to cache. This will cause inconsistency between the cache and the database.

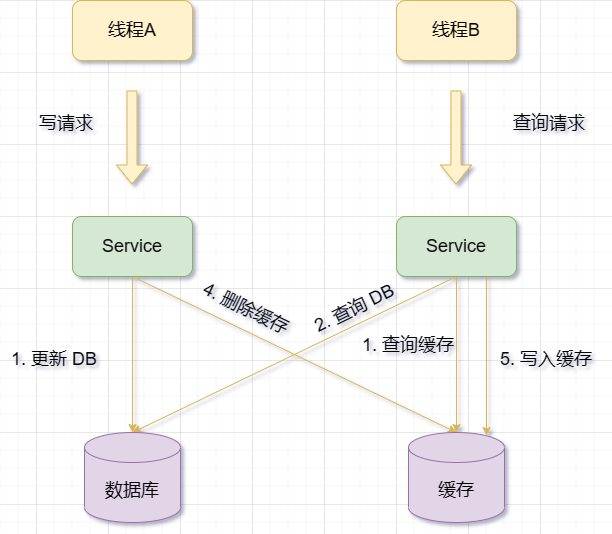

When there is an update operation, update the database data first, and then Delete cache

I actually said it above, let me repeat it again

When the cache fails, request B may query data from the database and get the old value. At this time, A is requested to update the database, write the new value to the database, and delete the cache. And request B writes the old value into the cache, resulting in dirty data

#It can be seen from the above that the requirements for dirty data are more than the requirements for updating the cache, and the following must be met Several conditions:

Cache invalidation

##Concurrency of read requests and write requests

The time to update the database and delete the cache is shorter than the time to read the database and write the cache

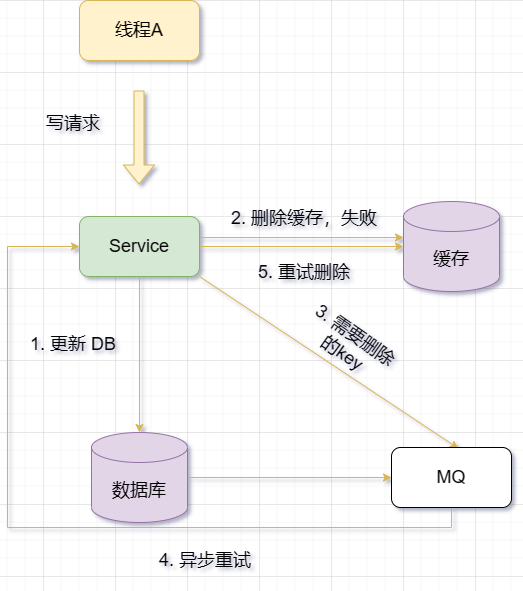

Here are some common methods to ensure double-write consistencySolution1. Try againAs mentioned above, when the second step operation fails, I will try again and try to remedy it as much as possible, but the cost of retrying is too high, so I won’t repeat what I said above. 2. Asynchronous retrySince the retry method takes up resources, I will do it asynchronously. When deleting or updating the cache, if the operation fails, an error is not returned immediately. Instead, the cache retry operation is triggered through some mechanisms (such as message queues, scheduled tasks, binlog subscriptions, etc.). Although this method can avoid the performance loss and blocking problems when retrying the cache synchronously, it will prolong the time when the cache and database data are inconsistent. 2.1 Use message queue to implement retry

#Message queue ensures reliability: Messages written to the queue will not be lost until they are successfully consumed (Don’t worry about restarting the project)

The message queue ensures successful message delivery: The downstream pulls the message from the queue and deletes the message only after successful consumption, otherwise it will Continue to deliver messages to consumers (in line with our retry requirements)

Use the message queue to retry asynchronously The cache situation means that when the information changes, the database is updated first, and then the cache is deleted. If the deletion is successful, everyone is happy. If the deletion fails, the key that needs to be deleted is sent to the message queue. In addition, a consumer thread will retrieve the key to be deleted from the message queue and delete or update the Redis cache based on the key. If the operation fails, it is resent to the message queue and retried.

Note: You can also send it directly to the message queue without trying to delete it first, and let the message queueFor example, if there is a user information table, Want to store user information in Redis. The following are steps that can be performed, taking the solution using message queue asynchronous retry caching as an example:

When user information changes, update the database first and return the successful result to the front end.

Try to delete the cache. If successful, the operation will end. If failed, a message will be generated for the operation of deleting or updating the cache (for example, including key and operation type) and sent to the message queue ( Such as using Kafka or RabbitMQ).

Another consumer thread subscribes to and obtains these messages from the message queue, and deletes or updates the corresponding information in Redis based on the message content.

If the cache is deleted or updated successfully, the message will be removed (discarded) from the message queue to avoid repeated operations.

If deleting or updating the cache fails, implement a failure strategy, such as setting a delay time or a retry limit, and then resend the message to the message queue for retry.

If the retry fails after a certain number of times, an error message will be sent to the business layer and logged.

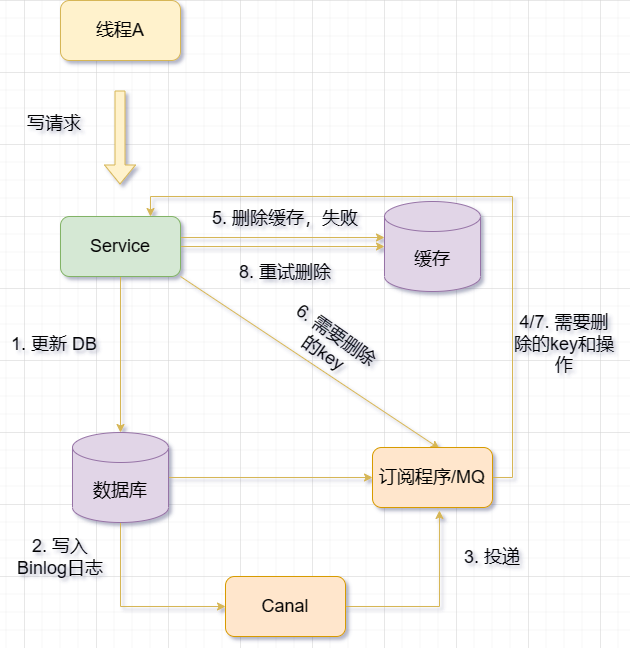

The basic idea of using binlog to achieve consistency isUse binlog logs to record database change operations, and then use the main Synchronize or restore data from replication or incremental backup.

For example, if we have a master database and a slave database, we can enable binlog on the master database and set the slave database as its replication node. In this way, when any change operation occurs on the master database, it will send the corresponding binlog log to the slave database, and the slave database will perform the same operation based on the binlog log to ensure data consistency.

In addition, if we need to restore data before a certain point in time, we can also use binlog logs to achieve this. First, we need to find the most recent full backup file before the corresponding time point and restore it to the target database. Then, we need to find all incremental backup files (ie binlog log files) before the corresponding time point and apply them to the target database in order. In this way, we can restore the data state before the target time point.

Use Binlog to update/delete the Redis cache in real time. Using Canal, the service responsible for updating the cache is disguised as a MySQL slave node, receives Binlog from MySQL, parses the Binlog, obtains real-time data change information, and then updates/delete the Redis cache based on the change information;

MQ Canal strategy delivers the Binlog data received by Canal Server directly to MQ for decoupling, and uses MQ to asynchronously consume Binlog logs for data synchronization;

MySQL's binlog log records database change operations, such as inserts, updates, deletes, etc. The binlog log has two main functions, one is master-slave replication, and the other is incremental backup.

Master-slave replication is the process of achieving data synchronization by synchronizing data from a master database to one or more slave databases. The master database will send its own binlog log to the slave database, and the slave database will perform the same operation based on the binlog log to ensure data consistency. By implementing this approach, data availability and reliability can be enhanced, and load balancing and failure recovery can be achieved.

Incremental backup refers to regular backup of database changes based on full backup. Full backup refers to completely backing up the entire database data to a file. The purpose is to merge the latest changes in the database with previous backups in order to restore to the latest state. Doing so can not only save the space and time occupied by backup, but also make it easy to restore data to any point in time.

At this point, we can conclude that in order to ensure the consistency of the database and cache, it is recommended to adopt the "update the database first, then delete the cache" solution, and cooperate with "Message Queue" or "Subscribe Change Log" ” way to do it.

Our focus is to update the database first and then delete the cache. What if I want to delete the cache first and then update the database?

Recall what I said before: delete the cache first, and then update the database. It will cause the problem of the old value overwriting the cache. That’s easy to handle. We just delete the old value and it’s over. Delayed double deletion This is the principle. The basic idea is:

Delete the cache first

and then update the database

Sleep for a period of time (determined according to system conditions)

Delete the cache again

In order to avoid that after updating the database, other threads will read the This measure was taken by expiring cached data and writing it back to the cache resulting in data inconsistency.

For example: Suppose there is a user information table, one of which is user points. Now there are two threads A and B operating on user points at the same time:

Thread A wants to add 100 points to the user

Thread B wants Reduce 50 points to the user

If the delayed double deletion strategy is used, the execution process of threads A and B may be as follows:

Thread A first deletes the user information in the cache

Thread A then reads the user information from the database and finds that the user points are 1000

Thread A adds 100 to the user points, which becomes 1100, and update to the database

Thread A sleeps for 5 seconds (assuming this time is enough for the database to synchronize)

Thread A deletes the cache again The user information in

Thread B first deletes the user information in the cache

Thread B then reads the user information from the database and finds that the user The points are 1100 (because thread A has already updated)

Thread B subtracts 50 from the user's points to 1050, and updates it to the database

Thread B sleeps for 5 seconds (assuming this time is enough for the database to synchronize)

Thread B deletes the user information in the cache again

The final result is: the user points in the database are 1050, and there is no user information in the cache. The next time the user information is queried, it will be read from the cache first, instead of being obtained from the database and written to the cache. This ensures data consistency.

Delayed double deletion is suitable for high concurrency scenarios, especially for situations where data modification operations are frequent and query operations are few. This can reduce the pressure on the database and improve performance while ensuring the eventual consistency of the data. Delayed double deletion is also suitable for scenarios where the database has a master-slave synchronization delay, because it can avoid reading the old cached data and writing it back to the cache after updating the database but before synchronization is completed from the slave database.

Note: This sleep time = the time it takes to read business logic data is several hundred milliseconds. In order to ensure that the read request ends, the write request can delete cached dirty data that may be brought by the read request.

The above is the detailed content of How to solve the double-write consistency problem between Redis and MySQL. For more information, please follow other related articles on the PHP Chinese website!