What are the eight classic problems of Redis?

1. Why use Redis

The blogger believes that the main considerations for using redis in the project are performance and concurrency. Of course, redis also has other functions such as distributed locks, but if it is just for other functions such as distributed locks, there are other middleware (such as zookpeer, etc.) instead, and it is not necessary to use redis. Therefore, this question is mainly answered from two perspectives: performance and concurrency.

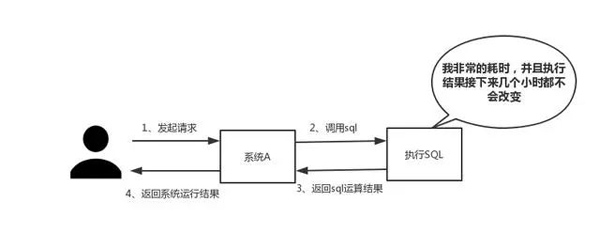

Answer: As shown below, divided into two points

(1) Performance

When the execution time is required When the SQL is very long and the results do not change frequently, we recommend storing the results in the cache. In this way, subsequent requests will be read from the cache, so that requests can be responded to quickly.

Digression: I suddenly want to talk about this standard of rapid response. In fact, depending on the interaction effect, there is no fixed standard for this response time. But someone once told me: "In an ideal world, our page jumps need to be resolved in an instant, and in-page operations need to be resolved in an instant. In order to provide the best user experience, operations that take more than one second should be provided Progress prompts and allows for suspension or cancellation at any time."

So how much time is an instant, an instant, or a snap of a finger?

According to the records of "Maha Sangha Vinaya"

One moment is one thought, twenty thoughts are one moment, twenty moments are one snap of fingers, twenty snaps of fingers are one Luoyu, twenty Luo Preliminary prediction is one moment, one day and one night is thirty moments.

So, after careful calculation, an instant is 0.36 seconds, an instant is 0.018 seconds, and a flick of the finger is 7.2 seconds long.

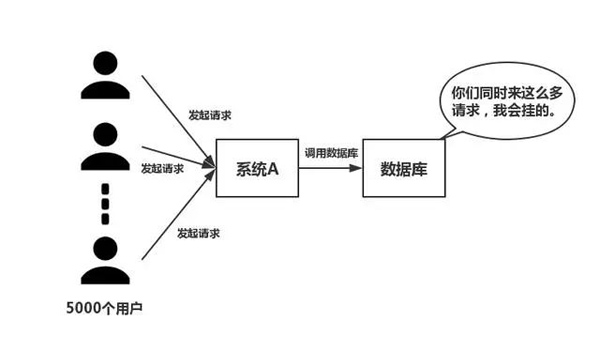

(2) Concurrency

As shown in the figure below, in the case of large concurrency, all requests directly access the database, and a connection exception will occur in the database. In this case, using Redis buffering operations is necessary so that the request first accesses Redis instead of directly accessing the database.

2. What are the disadvantages of using Redis

Analysis: Everyone has been using redis for so long. This issue must be understood. Basically, you will encounter some problems when using redis, and there are only a few common ones.

Answer: Mainly four questions

(1) Cache and database double-write consistency issue

(2) Cache avalanche Problem

(3) Cache breakdown problem

(4) Cache concurrency competition problem

This I personally feel that these four problems are relatively common in projects. The specific solutions are given below.

You can refer to: "Cache avalanche, cache penetration, cache warm-up, cache update, cache downgrade and other issues! 》

3. Why is single-threaded Redis so fast?

Analysis: This question is actually an investigation of the internal mechanism of redis. According to the blogger's interview experience, many people actually do not understand the single-threaded working model of Redis. Therefore, this issue should still be reviewed.

Answer: Mainly the following three points

(1) Pure memory operation

(2) Single-thread operation, avoiding frequent context switching

(3) Using a non-blocking I/O multiplexing mechanism

Digression: We now have to talk about the I/O multiplexing mechanism in detail, because of this statement It's so popular that most people don't understand what it means. The blogger gave an analogy: Xiaoqu opened an express store in City S, responsible for express delivery services in the city. Due to limited funds, Xiaoqu first hired a group of couriers, but later found that there was insufficient funds and could only buy a car for express delivery.

Business Method 1

Whenever a customer delivers a courier, Xiaoqu will assign a courier to keep an eye on it, and then the courier will drive to deliver the courier. Slowly Xiaoqu discovered the following problems with this business method

Dozens of couriers basically spent their time robbing cars, and most of the couriers were in trouble. In the idle state, whoever grabs the car can deliver the express

As the number of express delivery increases, there are more and more couriers. Xiaoqu finds that the express delivery store is getting more and more crowded. , there is no way to hire new couriers

Coordination among couriers is very time-consuming

Based on the above shortcomings, Xiaoqu learned from the experience and proposed The following business method is adopted

Business method two

Xiaoqu only hires one courier. Xiaoqu will mark the express delivery sent by the customer according to the delivery location and place it in the same place. In the end, the courier picked up the packages one at a time, then drove to deliver the package, and then came back to pick up the next package after delivery.

Comparison

Comparing the above two business methods, is it obvious that the second one is more efficient and better? In the above metaphor:

Every courier ------------------> Every thread

Each express-------------------->Each socket (I/O stream)

Delivery location of express delivery-------------->Different status of socket

Customer delivery request-------------->Request from client

Xiaoqu’s business method- ------------->Code running on the server

A car--------------- ------->Number of CPU cores

So we have the following conclusions

1. The first business method is the traditional concurrency model. Each I /O streams (Express) are managed by a new thread (Expressor).

2. The second management method is I/O multiplexing. There is only a single thread (a courier) that manages multiple I/O streams by tracking the status of each I/O stream (the delivery location of each courier).

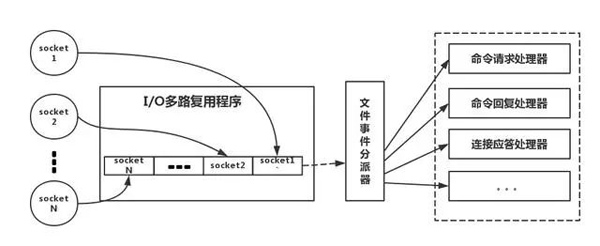

The following is an analogy to the real redis thread model, as shown in the figure

Refer to the above figure, to put it simply, it is. During operation, our Redis client will create sockets with different event types. On the server side, there is an I/0 multiplexing program that puts it into a queue. Then, the file event dispatcher takes it from the queue in turn and forwards it to different event processors.

It should be noted that for this I/O multiplexing mechanism, redis also provides multiplexing function libraries such as select, epoll, evport, kqueue, etc. You can learn about it by yourself.

4. Redis data types, and usage scenarios of each data type

Analysis: Do you think this question is very basic? In fact, I think so too. However, according to interview experience, at least 80% of people cannot answer this question. It is recommended that after using it in the project, you can memorize it by analogy to gain a deeper understanding instead of memorizing it by heart. Basically, a qualified programmer will use all five types.

Answer: There are five types in total

(1) String

This is actually very common, involving the most basic get/set operations, value can be String or number. Generally, some complex counting functions are cached.

(2)hash

The value here stores a structured object, and it is more convenient to operate a certain field in it. When bloggers do single sign-on, they use this data structure to store user information, use cookieId as the key, and set 30 minutes as the cache expiration time, which can simulate a session-like effect very well.

(3)list

Using the data structure of List, you can perform simple message queue functions. In addition, we can also use the lrange command to implement Redis-based paging function. This method has excellent performance and user experience.

(4)set

Because set is a collection of non-repeating values. Therefore, the global deduplication function can be implemented. Why not use the Set that comes with the JVM for deduplication? Because our systems are generally deployed in clusters, it is troublesome to use the Set that comes with the JVM. Is it too troublesome to set up a public service just to do global deduplication?

In addition, by using operations such as intersection, union, and difference, you can calculate common preferences, all preferences, and your own unique preferences.

(5) sorted set

sorted set has an additional weight parameter score, and the elements in the set can be arranged according to score. You can make a ranking application and take TOP N operations. In addition, in an article titled "Analysis of Distributed Delayed Task Schemes", it is mentioned that sorted set can be used to implement delayed tasks. The last application is to do range searches.

5. Redis expiration strategy and memory elimination mechanism

The importance of this issue is self-evident, and it can be seen whether Redis is used. For example, if you can only store 5 GB of data in Redis, but you write 10 GB of data, 5 GB of the data will be deleted. How did you delete it? Have you thought about this issue? Also, your data has set an expiration time, but when the time is up, the memory usage is still relatively high. Have you thought about the reason?

Answer:

redis uses regular deletion inertia Delete policy.

Why not use a scheduled deletion strategy?

Scheduled deletion uses a timer to monitor the key, and it will be automatically deleted when it expires. Although the memory is released in time, it consumes a lot of CPU resources. Since under high concurrent requests, the CPU needs to focus on request processing rather than key value deletion operations, we gave up adopting this strategy

How does regular deletion and lazy deletion work?

Delete regularly. Redis checks every 100ms by default to see if there are expired keys. If there are expired keys, delete them. It should be noted that redis does not check all keys every 100ms, but randomly selects them for inspection (if all keys are checked every 100ms, wouldn't redis be stuck)? If you only use a periodic deletion strategy, many keys will not be deleted after the expiration time.

So, lazy deletion comes in handy. That is to say, when you get a key, redis will check whether the key has expired if it has an expiration time set? If it expires, it will be deleted at this time.

Is there no other problem with regular deletion and lazy deletion?

No, if the key is not deleted during regular deletion. Then you did not request the key immediately, which means that lazy deletion did not take effect. In this way, the memory of redis will become higher and higher. Then the memory elimination mechanism should be adopted.

There is a line of configuration in redis.conf

# maxmemory-policy volatile-lru

This configuration is configured with the memory elimination strategy (What, you haven’t configured it? OK Reflect on yourself)

1) noeviction: When the memory is not enough to accommodate the newly written data, the new write operation will report an error. No one should use it.

When the memory space is insufficient to store new data, the allkeys-lru algorithm will remove the least recently used key from the key space. Recommended, currently used in projects.

3) allkeys-random: When the memory is insufficient to accommodate newly written data, a key is randomly removed from the key space. No one should be using it. If you don’t want to delete it, at least use Key and delete it randomly.

4) volatile-lru: When the memory is insufficient to accommodate newly written data, in the key space with an expiration time set, the least recently used key is removed. Generally, this method is only used when redis is used as both cache and persistent storage. Not recommended

5) volatile-random: When the memory is insufficient to accommodate newly written data, a key is randomly removed from the key space with an expiration time set. Still not recommended

6) volatile-ttl: When the memory is not enough to accommodate newly written data, in the key space with an expiration time set, keys with earlier expiration times will be removed first. Not recommended

ps: If the expire key is not set and the prerequisites are not met; then the behavior of volatile-lru, volatile-random and volatile-ttl strategies is basically the same as noeviction (not deleted) .

6. Redis and database double-write consistency issue

In distributed systems, consistency issues are common problems. This problem can be further distinguished into eventual consistency and strong consistency. If the database and cache are double-written, there will inevitably be inconsistencies. To answer this question, first understand a premise. That is, if there are strong consistency requirements for the data, it cannot be cached. Everything we do can only guarantee eventual consistency. The solution we proposed can actually only reduce the probability of inconsistent events, but cannot completely eliminate them. Therefore, data with strong consistency requirements cannot be cached.

Here is a brief introduction to the detailed analysis in the article "Analysis of Distributed Database and Cache Double-Write Consistency Scheme". First, adopt a correct update strategy, update the database first, and then delete the cache. Provide a backup measure, such as using a message queue, in case deletion of the cache fails.

7. How to deal with cache penetration and cache avalanche problems

Small and medium-sized traditional software companies rarely encounter these two problems, to be honest. If there are large concurrent projects, the traffic will be around millions. These two issues must be considered deeply.

Answer: As shown below

Cache penetration, that is, the hacker deliberately requests data that does not exist in the cache, causing all requests to be sent to the database, thus Database connection exception.

Solution:

When the cache fails, use a mutex lock to acquire the lock first. Once the lock is acquired, then request the database. If the lock is not obtained, then sleep for a period of time and try again

(2) Use an asynchronous update strategy, and return directly regardless of whether the key has a value. Save a cache expiration time in the value value. Once the cache expires, a thread will be started asynchronously to read the database and update the cache. Cache preheating (loading the cache before starting the project) operation is required.

Provide an interception mechanism that can quickly determine whether the request is valid. For example, use Bloom filters to internally maintain a series of legal and valid keys. Quickly determine whether the Key carried in the request is legal and valid. If it is illegal, return directly.

Cache avalanche, that is, the cache fails in a large area at the same time. At this time, another wave of requests comes, and as a result, the requests are all sent to the database, resulting in an abnormal database connection.

Solution:

(1) Add a random value to the cache expiration time to avoid collective failure.

(2) Use a mutex lock, but the throughput of this solution drops significantly.

(3) Double buffering. We have two caches, cache A and cache B. The expiration time of cache A is 20 minutes, and there is no expiration time for cache B. Do the cache warm-up operation yourself. Then break down the following points

I Read the database from cache A, if there is any data, it will be returned directly

II A has no data, and it will be returned directly from cache A B reads the data, returns directly, and starts an update thread asynchronously.

III The update thread updates cache A and cache B at the same time.

8. How to solve the problem of concurrent key competition in Redis

Analysis: This problem is roughly that there are multiple subsystems setting a key at the same time. What should we pay attention to at this time? Have you ever thought about it? After checking the Baidu search results in advance, the blogger found that almost all answers recommended using the redis transaction mechanism. The blogger does not recommend using the redis transaction mechanism. Because our production environment is basically a redis cluster environment, data sharding operations are performed. When a single task involves multiple key operations, these keys are not necessarily stored on the same Redis server. Therefore, the transaction mechanism of redis is very useless.

Answer: As shown below

(1) If you operate on this key, the order is not required

In this case, prepare a distributed For locks, everyone grabs the lock. Once you grab the lock, just do the set operation. It’s relatively simple.

(2) If you operate this key, the required sequence

Assume there is a key1, system A needs to set key1 to valueA, system B needs to set key1 to valueB, and system C needs to set key1 to valueB. key1 is set to valueC.

It is expected that the value of key1 will change in the order of valueA-->valueB-->valueC. At this time, we need to save a timestamp when writing data to the database. Assume that the timestamp is as follows

System A key 1 {valueA 3:00}

System B key 1 {valueB 3:05}

System C key 1 {valueC 3: 10}

Imagine that if system B obtains the lock first, it will set the value of key1 to {valueB 3:05}. When system A acquires the lock, if it finds that the timestamp of valueA it stores is earlier than the timestamp stored in the cache, system A will not perform the set operation. And so on.

Other methods, such as using a queue and turning the set method into serial access, can also be used. In short, be flexible.

The above is the detailed content of What are the eight classic problems of Redis?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

How to build the redis cluster mode

Apr 10, 2025 pm 10:15 PM

How to build the redis cluster mode

Apr 10, 2025 pm 10:15 PM

Redis cluster mode deploys Redis instances to multiple servers through sharding, improving scalability and availability. The construction steps are as follows: Create odd Redis instances with different ports; Create 3 sentinel instances, monitor Redis instances and failover; configure sentinel configuration files, add monitoring Redis instance information and failover settings; configure Redis instance configuration files, enable cluster mode and specify the cluster information file path; create nodes.conf file, containing information of each Redis instance; start the cluster, execute the create command to create a cluster and specify the number of replicas; log in to the cluster to execute the CLUSTER INFO command to verify the cluster status; make

How to clear redis data

Apr 10, 2025 pm 10:06 PM

How to clear redis data

Apr 10, 2025 pm 10:06 PM

How to clear Redis data: Use the FLUSHALL command to clear all key values. Use the FLUSHDB command to clear the key value of the currently selected database. Use SELECT to switch databases, and then use FLUSHDB to clear multiple databases. Use the DEL command to delete a specific key. Use the redis-cli tool to clear the data.

How to use the redis command

Apr 10, 2025 pm 08:45 PM

How to use the redis command

Apr 10, 2025 pm 08:45 PM

Using the Redis directive requires the following steps: Open the Redis client. Enter the command (verb key value). Provides the required parameters (varies from instruction to instruction). Press Enter to execute the command. Redis returns a response indicating the result of the operation (usually OK or -ERR).

How to read redis queue

Apr 10, 2025 pm 10:12 PM

How to read redis queue

Apr 10, 2025 pm 10:12 PM

To read a queue from Redis, you need to get the queue name, read the elements using the LPOP command, and process the empty queue. The specific steps are as follows: Get the queue name: name it with the prefix of "queue:" such as "queue:my-queue". Use the LPOP command: Eject the element from the head of the queue and return its value, such as LPOP queue:my-queue. Processing empty queues: If the queue is empty, LPOP returns nil, and you can check whether the queue exists before reading the element.

How to use redis lock

Apr 10, 2025 pm 08:39 PM

How to use redis lock

Apr 10, 2025 pm 08:39 PM

Using Redis to lock operations requires obtaining the lock through the SETNX command, and then using the EXPIRE command to set the expiration time. The specific steps are: (1) Use the SETNX command to try to set a key-value pair; (2) Use the EXPIRE command to set the expiration time for the lock; (3) Use the DEL command to delete the lock when the lock is no longer needed.

How to read the source code of redis

Apr 10, 2025 pm 08:27 PM

How to read the source code of redis

Apr 10, 2025 pm 08:27 PM

The best way to understand Redis source code is to go step by step: get familiar with the basics of Redis. Select a specific module or function as the starting point. Start with the entry point of the module or function and view the code line by line. View the code through the function call chain. Be familiar with the underlying data structures used by Redis. Identify the algorithm used by Redis.

How to solve data loss with redis

Apr 10, 2025 pm 08:24 PM

How to solve data loss with redis

Apr 10, 2025 pm 08:24 PM

Redis data loss causes include memory failures, power outages, human errors, and hardware failures. The solutions are: 1. Store data to disk with RDB or AOF persistence; 2. Copy to multiple servers for high availability; 3. HA with Redis Sentinel or Redis Cluster; 4. Create snapshots to back up data; 5. Implement best practices such as persistence, replication, snapshots, monitoring, and security measures.

How to use the redis command line

Apr 10, 2025 pm 10:18 PM

How to use the redis command line

Apr 10, 2025 pm 10:18 PM

Use the Redis command line tool (redis-cli) to manage and operate Redis through the following steps: Connect to the server, specify the address and port. Send commands to the server using the command name and parameters. Use the HELP command to view help information for a specific command. Use the QUIT command to exit the command line tool.