Andrew Ng's ChatGPT class went viral: AI gave up writing words backwards, but understood the whole world

Who would have expected ChatGPT to still be making silly mistakes today?

Andrew Ng pointed one out in his latest class:

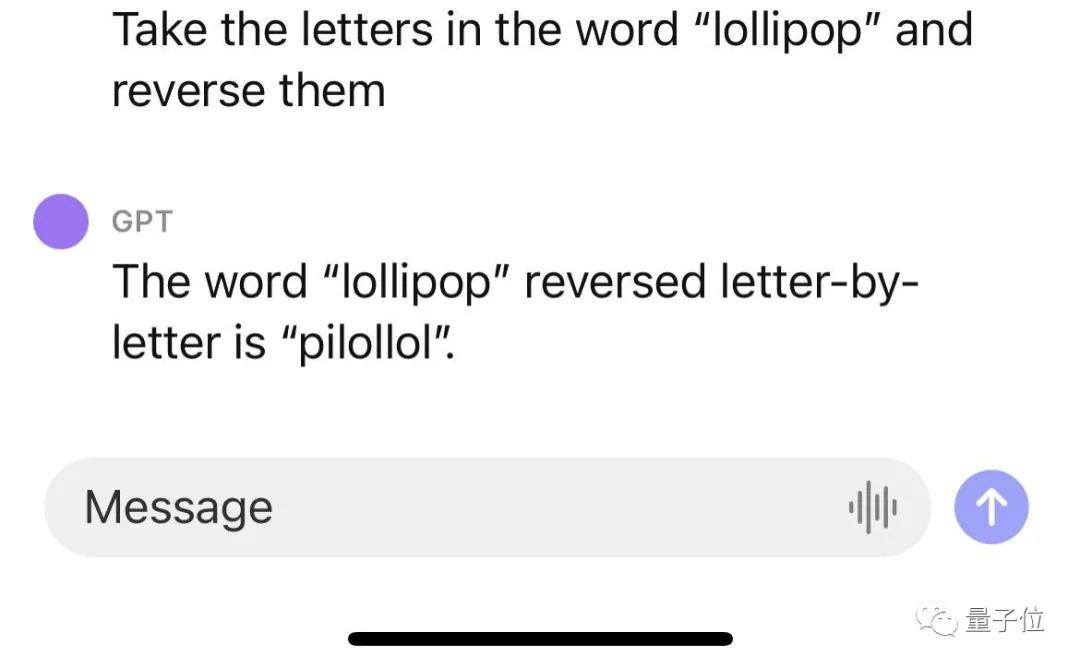

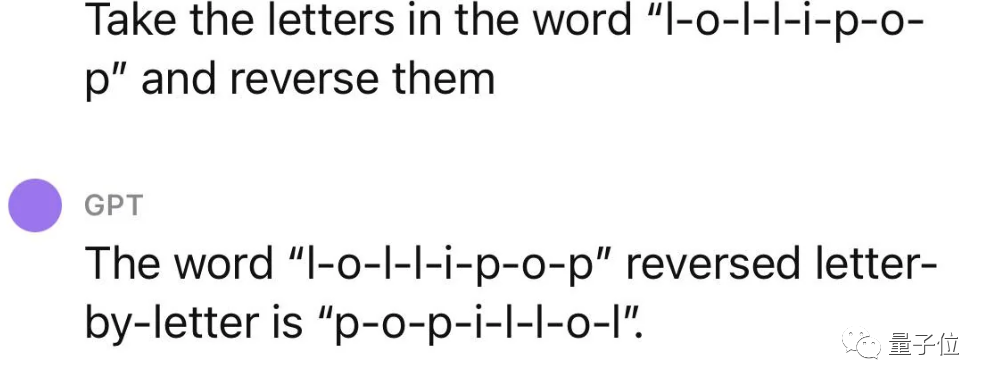

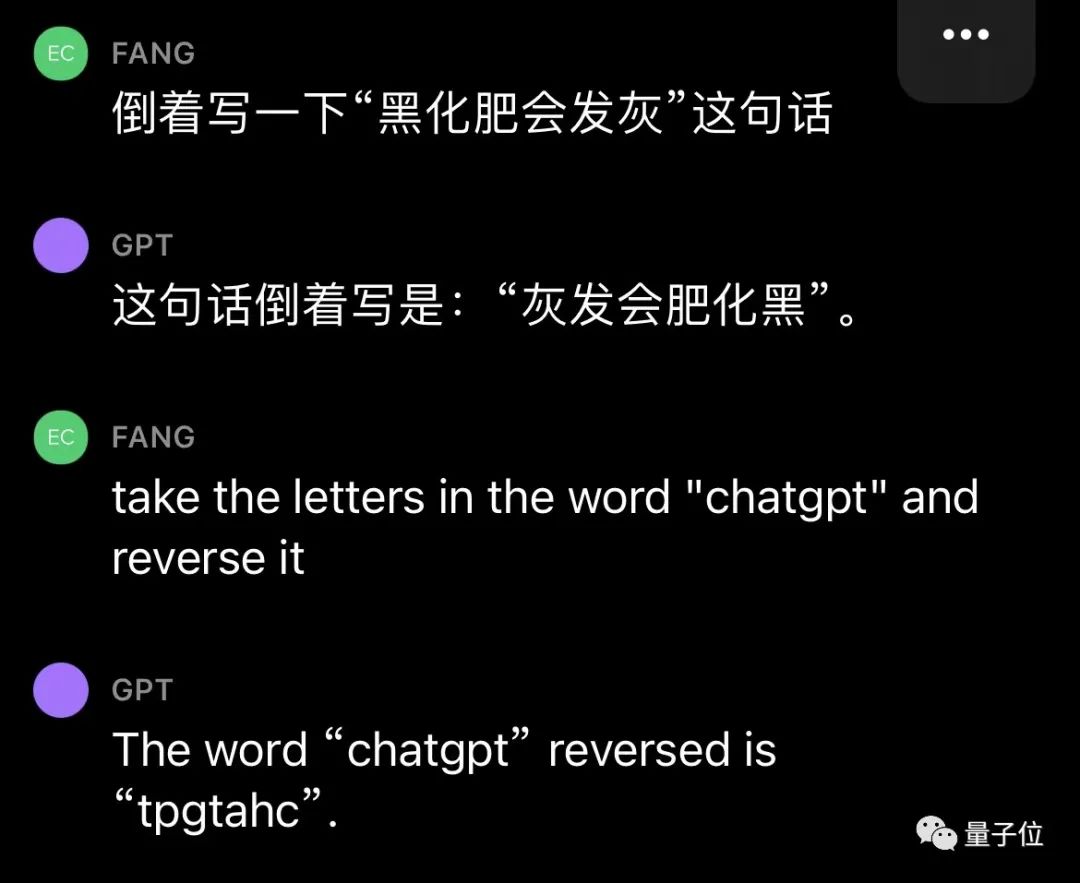

ChatGPT cannot reverse words!

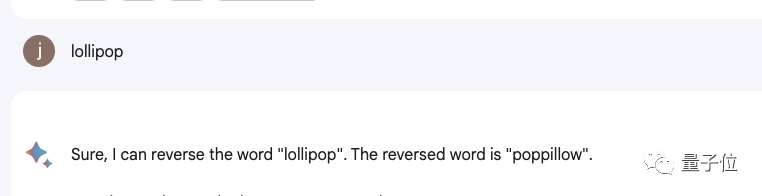

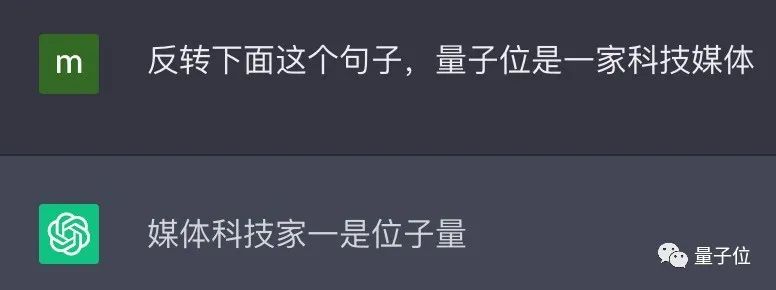

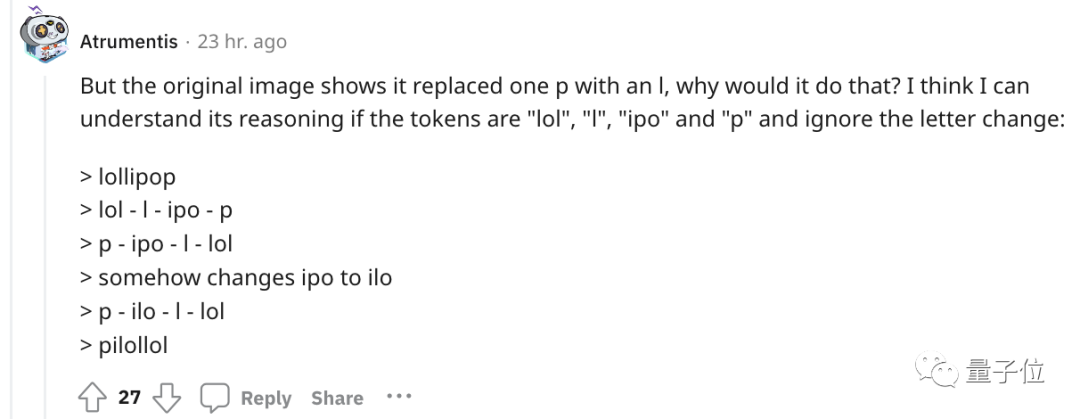

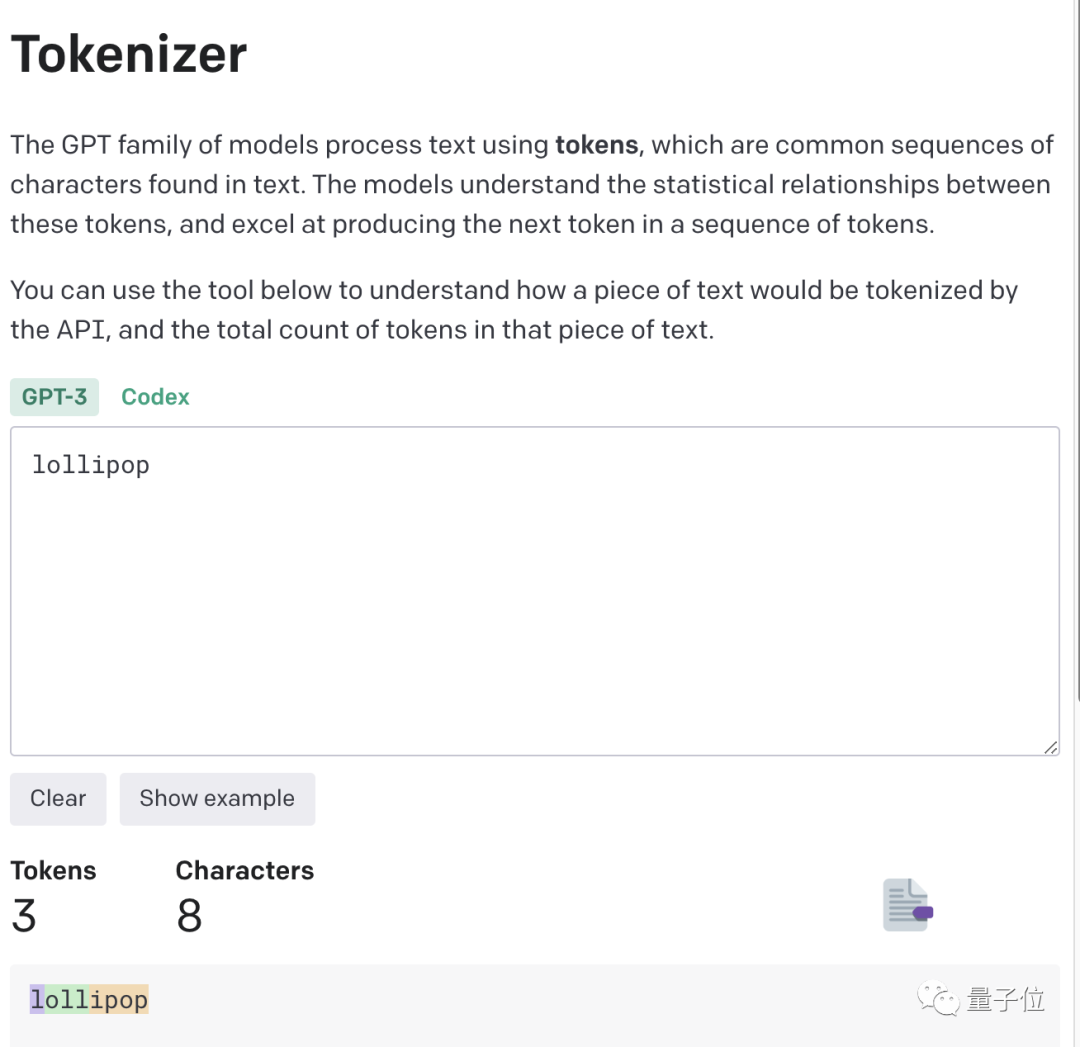

For example, ask it to reverse the word "lollipop" and the output is "pilollol", which is completely scrambled.

That is indeed a bit surprising.

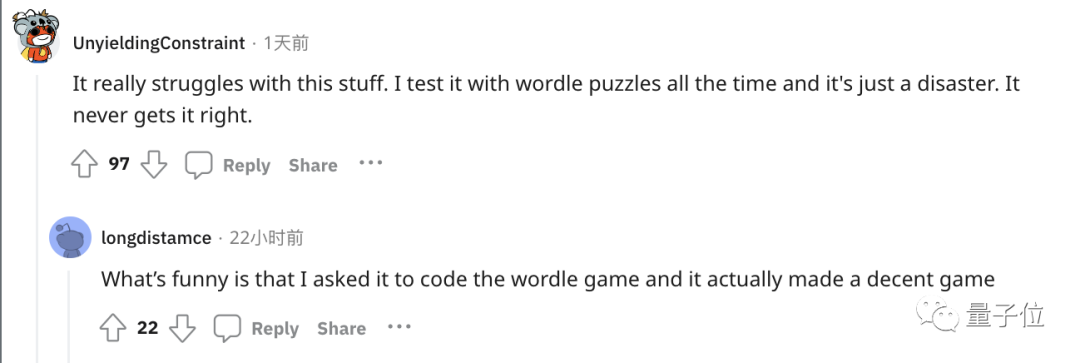

So surprising, in fact, that when someone who had taken the class posted about it on Reddit, the post immediately drew a crowd and quickly reached 6k views.

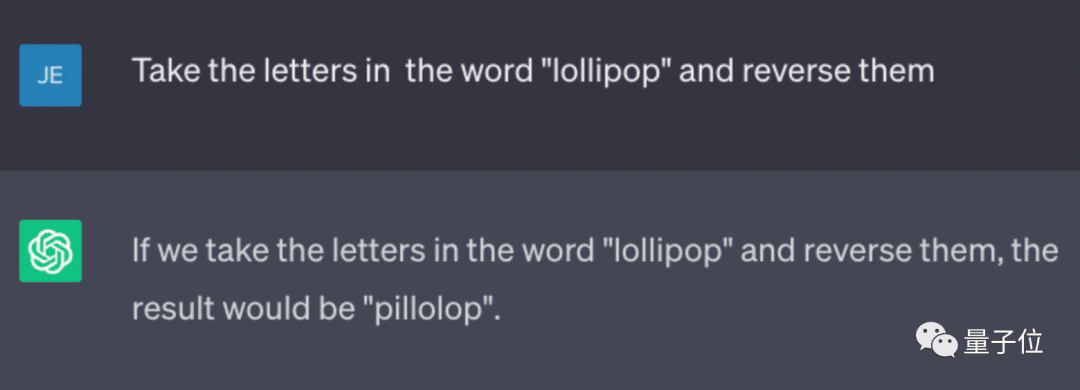

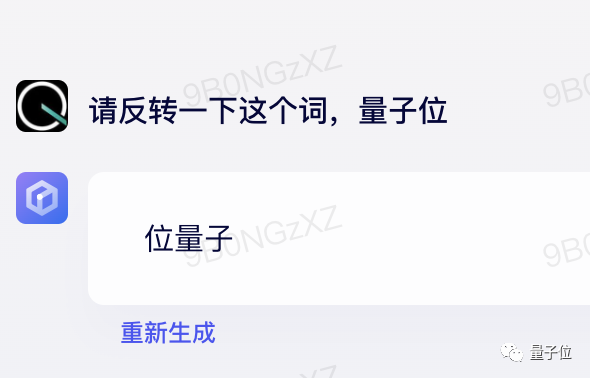

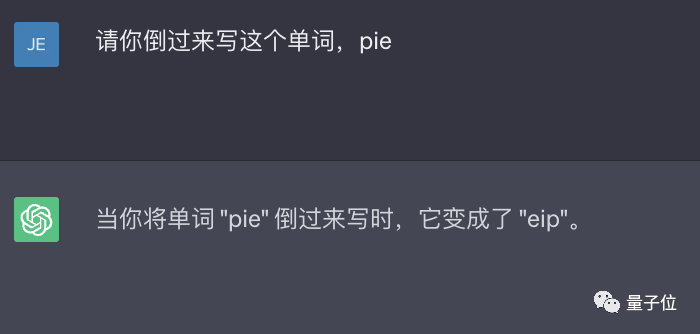

This is not a one-off bug, either. Reddit users found that ChatGPT really cannot complete this task, and our own tests gave the same result.

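The cause lies in tokens, the units into which a large model splits text before processing it. OpenAI gives these rules of thumb for English text:

- 1 token ≈ 4 English characters ≈ three-quarters of a word;

- 100 tokens ≈ 75 words;

- 1-2 sentences ≈ 30 tokens;

- 1 paragraph ≈ 100 tokens; 1,500 words ≈ 2,048 tokens.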
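The rules of thumb above can be turned into a rough estimator. This is a minimal sketch, not a real tokenizer: the function names are made up for illustration, and the ratios are only the approximations listed above, so actual token counts will differ from model to model.

```python
import math

# Approximate ratios taken from the rules of thumb above (English text only).
CHARS_PER_TOKEN = 4       # 1 token is roughly 4 English characters
WORDS_PER_TOKEN = 0.75    # 1 token is roughly three-quarters of a word


def estimate_tokens_from_chars(text: str) -> int:
    """Rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_tokens_from_words(text: str) -> int:
    """Rough token estimate based on whitespace-separated word count."""
    return math.ceil(len(text.split()) / WORDS_PER_TOKEN)


if __name__ == "__main__":
    sample = "ChatGPT cannot reverse words"
    print(estimate_tokens_from_chars(sample))
    print(estimate_tokens_from_words(sample))
```

The two estimates will not always agree with each other, which is expected: both are approximations, useful for budgeting rather than for exact counts.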

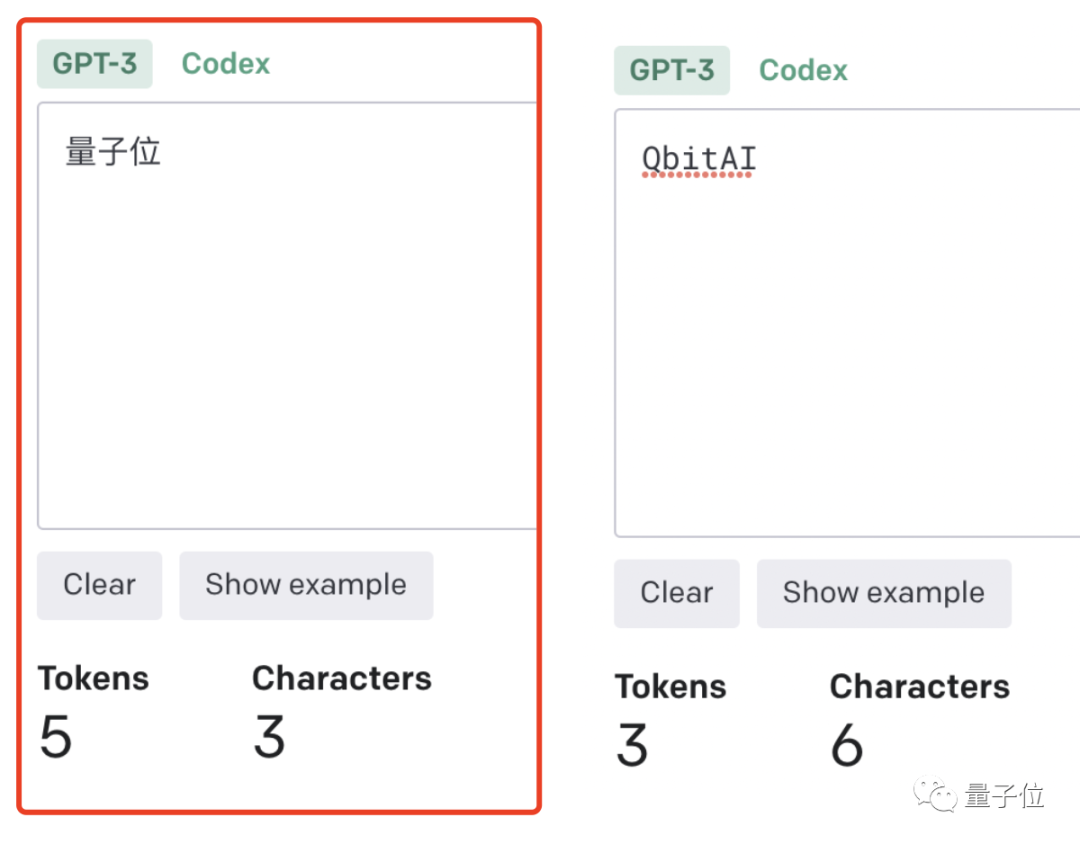

The higher the token-to-character (or token-to-word) ratio, the higher the processing cost. This is why tokenizing Chinese is more expensive than tokenizing English.

A token can be thought of as the way a large model perceives human language. The approach is simple, and it greatly reduces memory and time complexity.

But tokenizing words has a drawback: it can make it hard for the model to learn meaningful input representations. The most obvious symptom is that the model fails to grasp what a word means.

Transformer models were optimized to deal with this. For example, a complex, uncommon word is split into one meaningful token and one independent token.

"annoyingly", for instance, is split into two parts, "annoying" and "ly": the former keeps its own meaning, while the latter is a common suffix.
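To make the "annoying" + "ly" split concrete, here is a minimal sketch of subword splitting by greedy longest match. It is a simplification for illustration, not the algorithm any particular model uses, and `TOY_VOCAB` is a hypothetical two-entry vocabulary invented for this example.

```python
def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Split a word into the longest vocabulary pieces, left to right."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it is found in the vocabulary.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # Nothing matched: fall back to a single character.
            end = start + 1
        tokens.append(word[start:end])
        start = end
    return tokens


# Hypothetical vocabulary, for illustration only.
TOY_VOCAB = {"annoying", "ly"}

if __name__ == "__main__":
    print(subword_tokenize("annoyingly", TOY_VOCAB))  # ['annoying', 'ly']
```

Because the model receives pieces like these rather than individual letters, a task that depends on letter order, such as reversing a word, is harder than it looks.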

This is also what makes ChatGPT and other large-model products so impressive today: they understand human language very well.

As for a task as small as word reversal, there are naturally workarounds.

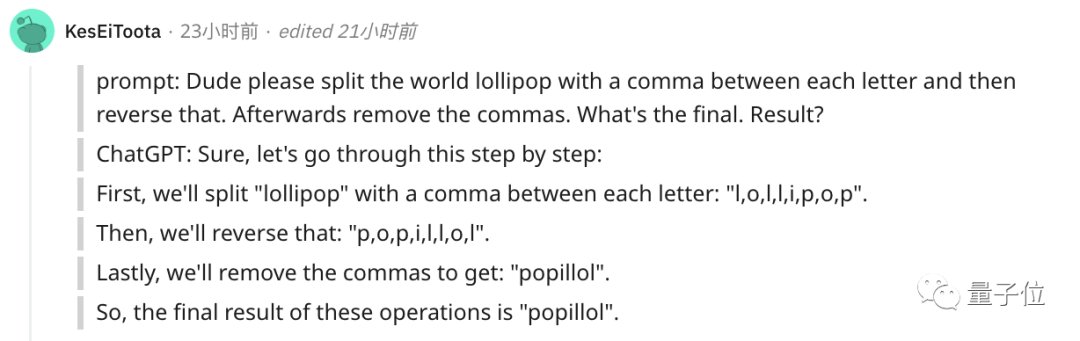

The simplest and most direct way is to separate the letters of the word yourself.

Or you can have ChatGPT work step by step, first splitting the word into individual letters.

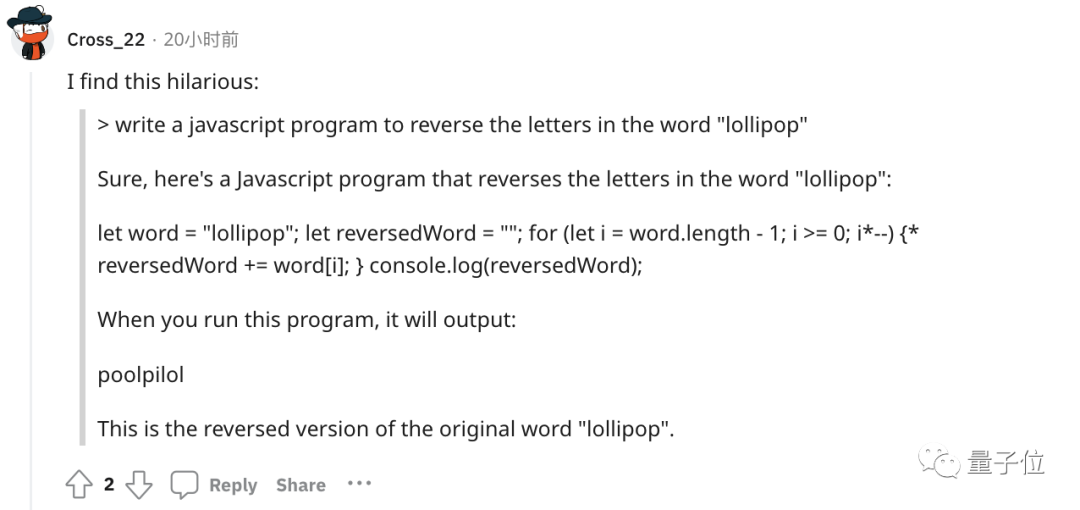

Or have it write a program that reverses the letters, in which case the program's output is correct.
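Two of the workarounds above are easy to sketch in code: spelling the word out letter by letter before putting it in a prompt, and reversing the letters with a program instead of asking the model. The function names here are illustrative, not taken from the class.

```python
def spell_out(word: str, sep: str = "-") -> str:
    """Separate the letters so each one can be handled on its own."""
    return sep.join(word)


def reverse_word(word: str) -> str:
    """Reverse the letters of a word with ordinary string slicing."""
    return word[::-1]


if __name__ == "__main__":
    print(spell_out("lollipop"))     # l-o-l-l-i-p-o-p
    print(reverse_word("lollipop"))  # popillol
```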

Alternatively, use GPT-4: in our tests it did not have this problem.

△ GPT-4 in our test

In short, the token is the cornerstone of AI's understanding of natural language.

As the bridge between AI and human language, the token is becoming more and more important.

It has become both a key factor in model performance and the billing unit for large models.

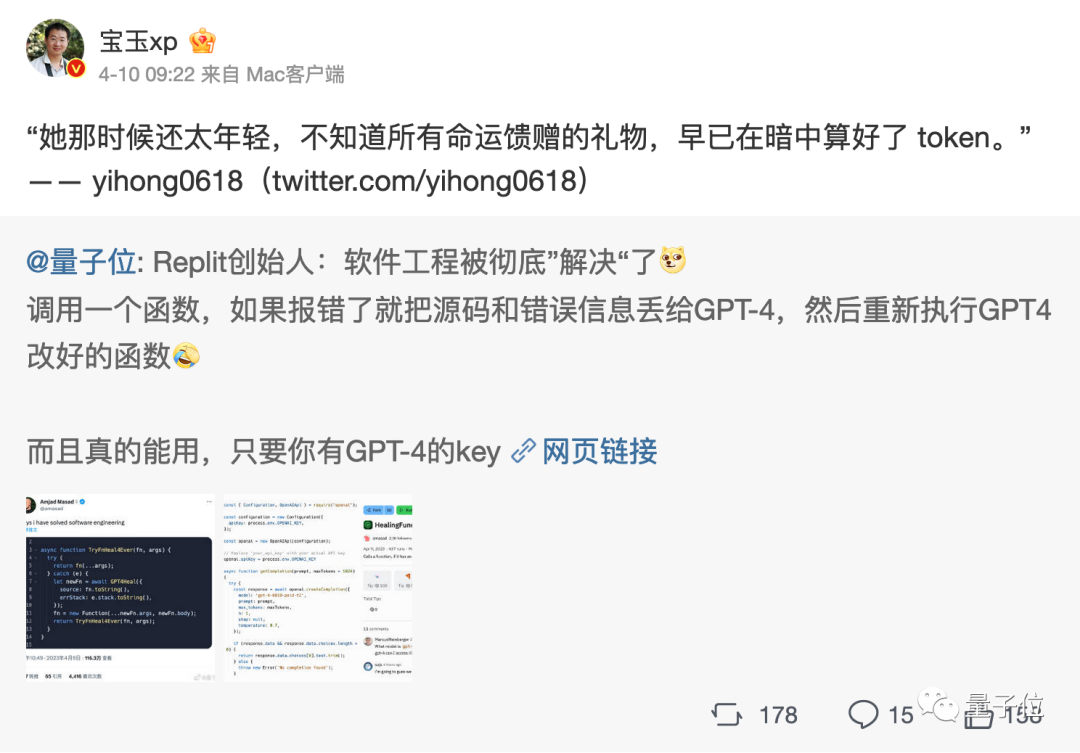

There is even token literature

As mentioned above, tokens help a model capture fine-grained semantic information such as word meaning, word order, and grammatical structure. In sequence modeling tasks (language modeling, machine translation, text generation, and so on), position and order matter a great deal.

Only when the model accurately understands the position and context of each token in the sequence can it predict what comes next and produce reasonable output.

The quality and quantity of tokens therefore have a direct impact on how well a model performs.

Since the start of this year, more and more large-model releases have emphasized token counts. For example, leaked details about Google's PaLM 2 mentioned that it was trained on 3.6 trillion tokens.

Many big names in the industry have also said that tokens really are crucial!

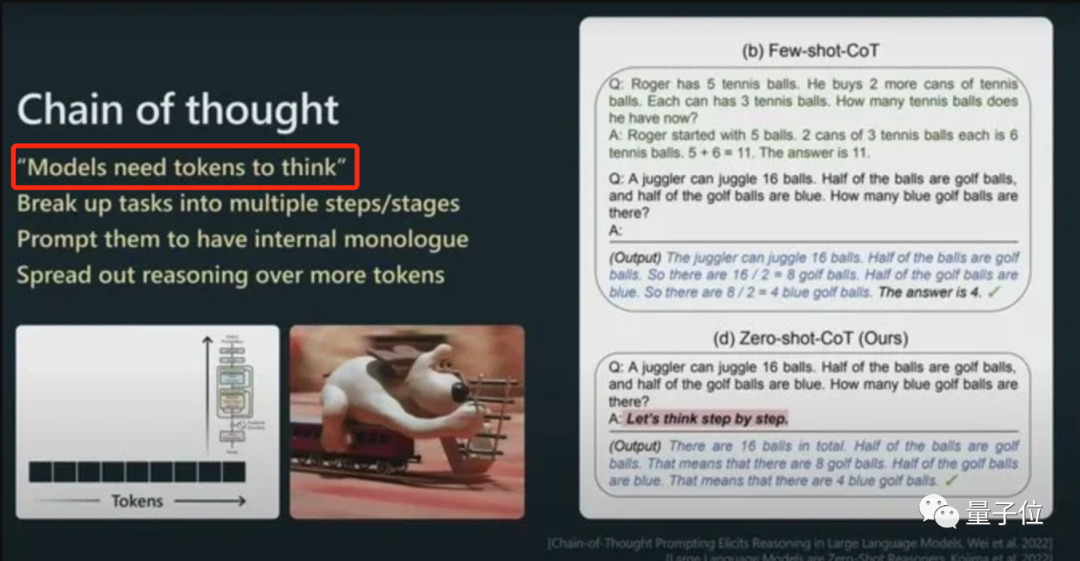

Andrej Karpathy, the AI scientist who moved from Tesla to OpenAI this year, said in a talk:

More tokens let a model think better.

He also stressed that a model's performance is not determined by parameter count alone.

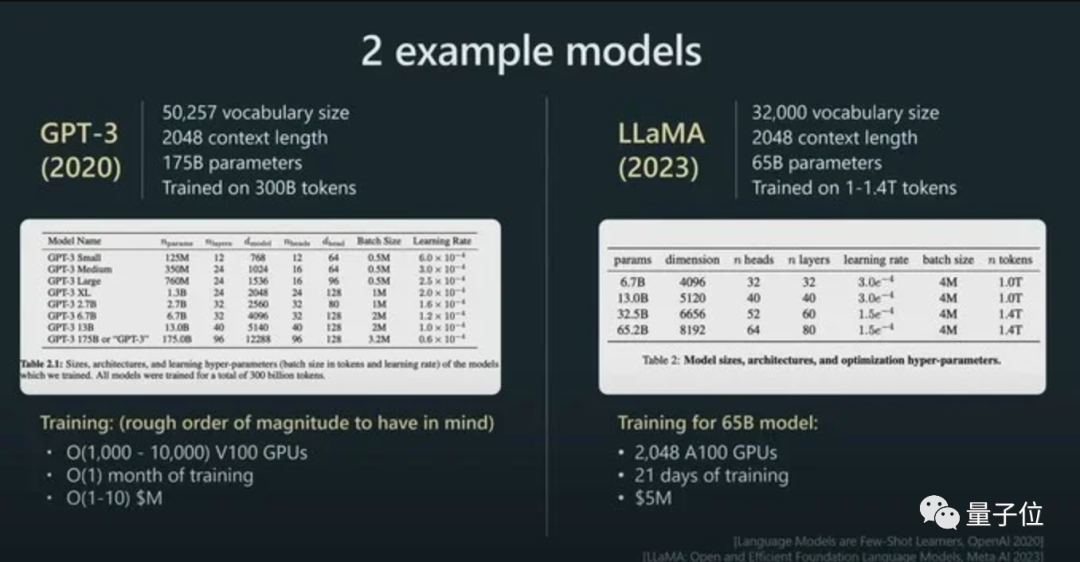

For example, LLaMA has far fewer parameters than GPT-3 (65B vs. 175B), but because it was trained on more tokens (1.4T vs. 300B), LLaMA is the more powerful model.
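One way to read those figures is as training tokens per parameter. The short calculation below uses only the numbers quoted above; the ratio itself is an illustrative lens added here, not a metric stated in the talk.

```python
def tokens_per_parameter(training_tokens: float, parameters: float) -> float:
    """Training tokens seen per model parameter."""
    return training_tokens / parameters


# Figures quoted above: LLaMA 65B on 1.4T tokens, GPT-3 175B on 300B tokens.
llama_ratio = tokens_per_parameter(1.4e12, 65e9)
gpt3_ratio = tokens_per_parameter(300e9, 175e9)

if __name__ == "__main__":
    print(f"LLaMA: {llama_ratio:.1f} tokens per parameter")  # about 21.5
    print(f"GPT-3: {gpt3_ratio:.1f} tokens per parameter")   # about 1.7
```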

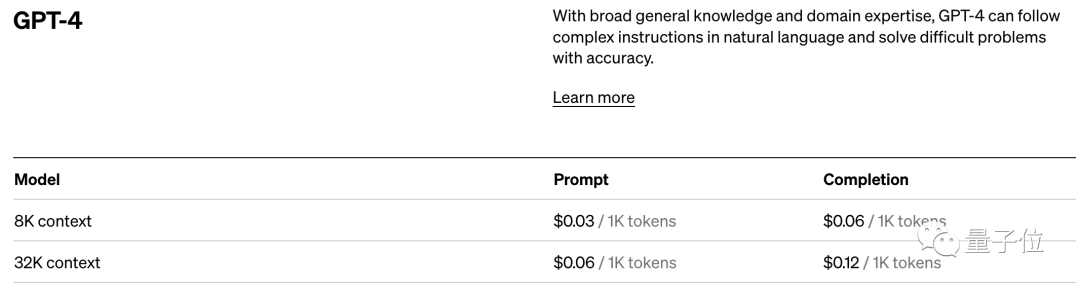

Besides directly affecting model performance, the token is also the billing unit for AI models.

Take OpenAI's pricing as an example. It charges per 1K tokens, and the price varies by model and by token type.
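Per-1K-token billing works out as in the sketch below. The prices in the example are placeholders chosen for illustration, not OpenAI's actual rates, and splitting the bill into prompt tokens and completion tokens is an assumption about what "different types of tokens" means here.

```python
def request_cost(prompt_tokens: int, completion_tokens: int,
                 prompt_price_per_1k: float,
                 completion_price_per_1k: float) -> float:
    """Cost of one request when each token type is billed per 1K tokens."""
    prompt_cost = prompt_tokens / 1000 * prompt_price_per_1k
    completion_cost = completion_tokens / 1000 * completion_price_per_1k
    return prompt_cost + completion_cost


if __name__ == "__main__":
    # Placeholder prices, NOT real rates: 0.001 and 0.002 per 1K tokens.
    cost = request_cost(1500, 500, 0.001, 0.002)
    print(f"{cost:.4f}")  # 0.0025
```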

In short, once you step into the world of large AI models, the token is a concept you cannot avoid.

It has even spawned its own "token literature"...

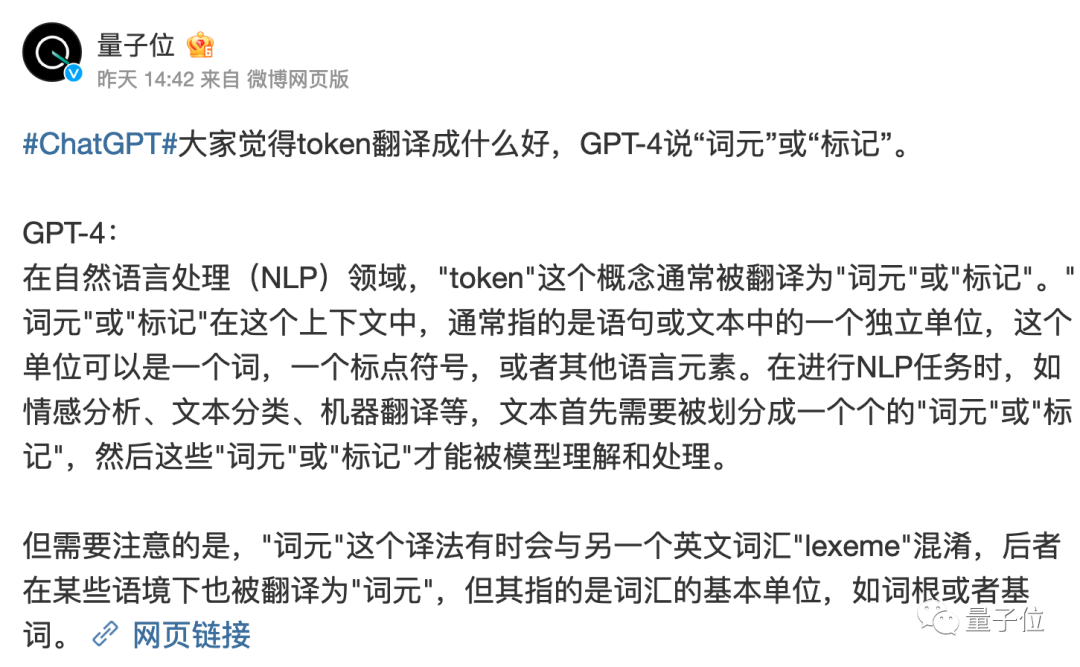

One thing worth mentioning: how "token" should be translated into Chinese has not been settled yet.

A literal translation of "token" always sounds a bit odd.

GPT-4 thinks "word element" or "tag" would be better. What do you think?

Reference links:

[1] https://www.reddit.com/r/ChatGPT/comments/13xxehx/chatgpt_is_unable_to_reverse_words/

[2] https://help.openai.com/en/articles/4936856-what-are-tokens-and-how-to-count-them

[3] https://openai.com/pricing
