GPT-3 may be open-sourced! Sam Altman reveals OpenAI urgently needs GPUs, and GPT-4's multimodal capabilities will arrive next year

After the hearing, Sam Altman took his team on a European "tour."

In a recent interview, Altman was candid: OpenAI's progress will have to wait until GPU supply catches up.

He discussed OpenAI's API and product plans, which drew a lot of attention.

Many netizens said they liked Altman's candor.

It is worth noting that GPT-4's multimodal capabilities should be available to most Plus users in 2024, provided OpenAI has enough GPUs.

The supercomputer that Microsoft spent US$1.2 billion building for OpenAI is far from enough to supply the compute needed to run GPT-4. After all, GPT-4 is rumored to have 100 trillion parameters, although OpenAI has not disclosed the figure.

Altman also revealed that GPT-3 is part of OpenAI's open-source plans.

It is unclear whether this interview revealed too many of OpenAI's "secrets"; the original write-up has since been deleted, so save this while you can.

Key points

The interview was hosted by Raza Habib, CEO of the AI development platform Humanloop, and brought together Altman and 20 other developers.

The discussion touched on practical developer issues as well as larger questions about OpenAI's mission and the social impact of AI.

The following are the key points:

1. OpenAI urgently needs GPUs

2. OpenAI's near-term roadmap: GPT-4's multimodal capabilities will open up in 2024

3. ChatGPT plugins will not come to the API in the near future

4. OpenAI will build only ChatGPT, its "killer application," with the goal of making it a super-smart work assistant

5. GPT-3 is part of the open-source plan

6. The scaling laws of model performance still hold

Next, let's look at what Sam Altman said on each of these six points.

OpenAI currently relies heavily on GPUs

Every topic in the interview came back to one thing: "OpenAI is too short of GPUs."

This has delayed many of their short-term plans.

Currently, many OpenAI customers complain about the reliability and speed of the API. Sam Altman explained that the main reason is the GPU shortage.

OpenAI was the first customer of NVIDIA's DGX-1 supercomputer.

As for context length, the 32k-token context window cannot yet be rolled out to more people.

OpenAI has not yet overcome the underlying technical obstacles. Context windows of 100k to 1M tokens look plausible this year, but anything beyond that would require a research breakthrough.

The Fine-Tuning API is also currently limited by GPU availability.

OpenAI does not yet use efficient fine-tuning methods such as Adapters or LoRA, so fine-tuning is very compute-intensive to run and manage.

However, they will provide better support for fine-tuning in the future. OpenAI may even host a marketplace of community-contributed models.
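To see why parameter-efficient methods matter for the compute cost described above, it helps to count trainable parameters. The sketch below assumes a single square weight matrix of hypothetical size 4096 x 4096 and a hypothetical LoRA rank of 8, and compares updating the full matrix with LoRA's low-rank update (two small matrices whose product is added to the frozen weight). It illustrates the general technique only and says nothing about how OpenAI's systems actually work.

```python
def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable parameters when a dense d_in x d_out weight matrix is updated directly."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters for a LoRA update W + B @ A, with A: rank x d_in and B: d_out x rank."""
    return rank * (d_in + d_out)


# Hypothetical layer size and rank, chosen only for illustration.
d = 4096
r = 8
full = full_finetune_params(d, d)
lora = lora_params(d, d, r)
print(f"full: {full:,}  lora: {lora:,}  ratio: {full / lora:.0f}x")
# prints "full: 16,777,216  lora: 65,536  ratio: 256x"
```

Fewer trainable parameters means far less gradient and optimizer state to keep per fine-tuned model, which is a large part of why such methods are cheaper to run and manage; the forward pass still uses the full frozen weights.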

Finally, dedicated capacity provision is also limited by GPU availability.

Earlier this year, netizens revealed that OpenAI was quietly launching a new developer platform, Foundry, which lets customers run the company's newer machine learning models on dedicated capacity.

The product is "designed for cutting-edge customers running larger workloads." To use the service, customers must be willing to pay $100k upfront.

However, as the disclosed images show, the instances are not cheap.

Running a lightweight version of GPT-3.5 costs $78,000 for a three-month commitment and $264,000 for a one-year commitment.

This also shows, from another angle, how expensive GPU compute is.
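Using only the two Foundry prices quoted above, a quick calculation shows the effective monthly rate of each commitment and how much the longer one saves. The dollar figures come from the article; the helper function is just an illustration.

```python
# Figures quoted above for a lightweight GPT-3.5 instance on Foundry (USD).
THREE_MONTH_TOTAL = 78_000
ONE_YEAR_TOTAL = 264_000


def monthly_cost(total_usd: float, months: int) -> float:
    """Average cost per month for a commitment of the given length."""
    return total_usd / months


short_term = monthly_cost(THREE_MONTH_TOTAL, 3)  # 26,000 per month
long_term = monthly_cost(ONE_YEAR_TOTAL, 12)     # 22,000 per month
discount = 1 - long_term / short_term            # fraction saved per month, about 15.4%

print(f"3-month: ${short_term:,.0f}/mo, 1-year: ${long_term:,.0f}/mo, discount: {discount:.1%}")
# prints "3-month: $26,000/mo, 1-year: $22,000/mo, discount: 15.4%"
```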

OpenAI near-term roadmap

Altman shared the tentative near-term roadmap for the OpenAI API:

2023:

· Fast and cheap GPT-4: This is OpenAI's top priority.

In general, OpenAI's goal is to drive down the "cost of intelligence" as far as possible, so they will keep working to lower the cost of their APIs.

· Longer context windows: In the near future, context windows may support up to 1 million tokens.

· Fine-tuning API: The fine-tuning API will be extended to the latest models, but its exact form will depend on what developers actually want.

· Memory API: Today, most tokens are spent re-sending the earlier conversation with every request. In the future, there will be a version of the API that remembers the conversation history.

2024:

· Multimodal capabilities: GPT-4 demonstrated powerful multimodal capabilities at launch, but this feature cannot be extended to everyone until GPU supply catches up.
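The "Memory API" item describes a cost that grows with conversation length. As a rough illustration, the sketch below counts the input tokens a client sends when every request re-transmits the full history, versus a hypothetical stateful API that only receives the newest turn. The fixed 200 tokens per turn is an assumption, model replies are folded into each turn for simplicity, and the interview does not say how a stateful API would actually be billed.

```python
def tokens_sent_stateless(turn_tokens: list[int]) -> int:
    """Each request re-sends every earlier turn plus the new one."""
    total = 0
    history = 0
    for t in turn_tokens:
        history += t      # the conversation grows by this turn
        total += history  # the whole history is transmitted again
    return total


def tokens_sent_stateful(turn_tokens: list[int]) -> int:
    """Hypothetical API that keeps history server-side: only the new turn is sent."""
    return sum(turn_tokens)


turns = [200] * 10  # hypothetical: ten turns of 200 tokens each
print(tokens_sent_stateless(turns), tokens_sent_stateful(turns))
# prints "11000 2000"
```

The stateless total grows roughly quadratically with the number of turns, while the stateful total grows linearly, which is why re-sending context dominates token usage in long conversations.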

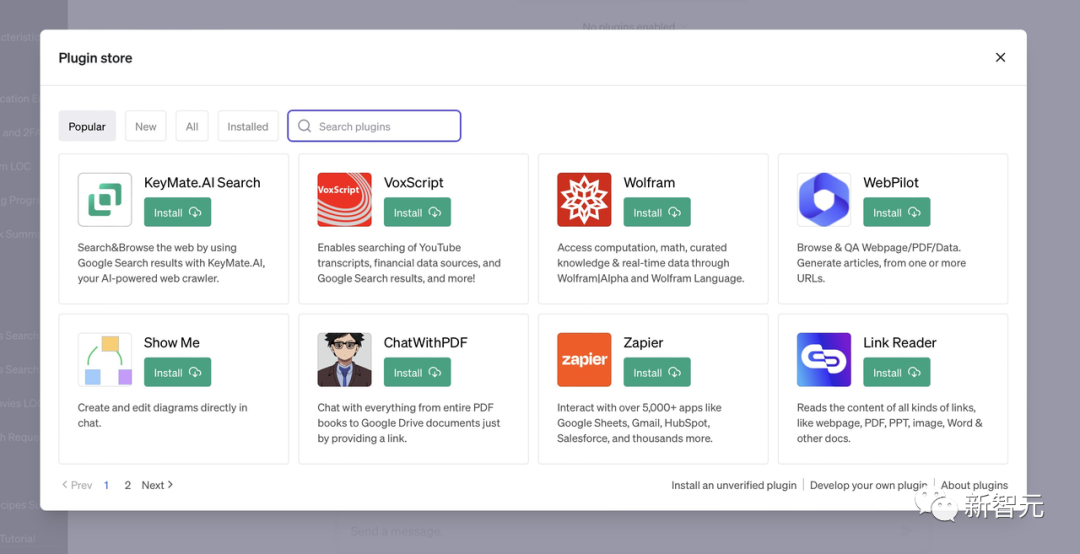

Plugins "have no PMF" (product-market fit) and will not come to the API in the short term

Many developers are very interested in accessing ChatGPT plugins through the API, but Sam said these plugins won't be released anytime soon.

"Apart from Browsing, the plugin system has not yet found PMF."

He also pointed out that many people want to put their products into ChatGPT, when what they really need is to put ChatGPT into their products.

Apart from ChatGPT, OpenAI will not release any more products

Every move OpenAI makes puts developers on edge.

Many developers said they were nervous about building applications on the OpenAI API when OpenAI might release products that compete with them.

Altman said that OpenAI will not release any more products beyond ChatGPT.

In his view, a great company has one "killer application," and ChatGPT is going to be that record-breaking application.

ChatGPT's vision is to become a super-smart work assistant. OpenAI will stay out of many other GPT use cases.

Regulation is necessary, but so is open source

While Altman calls for regulating future models, he does not believe existing models are dangerous.

He believes that regulating or banning existing models would be a huge mistake.

In the interview, he reiterated his belief in the importance of open source and said that OpenAI is considering open-sourcing GPT-3.

Part of the reason OpenAI has not open-sourced it yet is that he doubts how many individuals and companies have the capability to host and serve large models.

The "scaling laws" of model performance still hold

Recently, many articles have claimed that the era of giant AI models is over. However, that does not accurately reflect what Altman meant.

OpenAI's internal data shows that the scaling laws for model performance still hold: making models larger will continue to improve performance.

However, OpenAI has already scaled up its models millions of times over in just a few years, and it cannot sustain that rate of expansion.

This doesn't mean OpenAI will stop trying to make models bigger; it means they will probably double or triple in size each year rather than grow by multiple orders of magnitude.

The fact that scaling laws continue to hold has important implications for the timeline of AGI development. The scaling hypothesis is that we probably already have most of the pieces needed to build AGI, and most of the remaining work will be scaling existing methods to larger models and larger datasets.

If the era of scaling were over, we should probably expect AGI to be much further away. The continued validity of scaling laws strongly suggests that the timeline to AGI will be shorter.
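The gap between scaling "millions of times in a few years" and growing a few-fold per year is easy to quantify with compound growth. The sketch below assumes a constant yearly multiplier of 2x or 3x (an illustrative simplification, not an OpenAI projection) and computes both the five-year growth and the number of years needed to reach another 1,000,000x increase at that pace.

```python
import math


def years_to_scale(factor: float, growth_per_year: float) -> float:
    """Years needed to grow by `factor` when size multiplies by `growth_per_year` annually."""
    # Solve growth_per_year ** years == factor for years.
    return math.log(factor) / math.log(growth_per_year)


for g in (2, 3):
    five_year = g ** 5  # compound growth after five years
    years = years_to_scale(1e6, g)
    print(f"{g}x/year: {five_year}x after 5 years, {years:.1f} years to reach 1,000,000x")
```

Even at 3x per year, another million-fold increase would take more than a decade, which is why the pace of scaling has to slow even though the scaling laws themselves still hold.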

Hot comments from netizens

Some netizens joked:

OpenAI: "We must protect our moat through regulation." Also OpenAI: "Meta is peeing in our moat, which must mean our models need to be open source too."

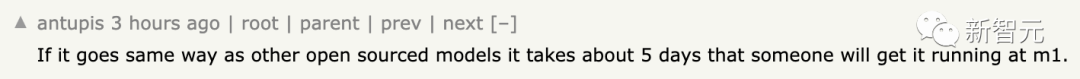

Some said that if GPT-3 really is open-sourced, then, as happened with LLaMA, it will be running on M1 chips within about five days.

Community developers could help OpenAI work around the GPU bottleneck, provided OpenAI open-sources its models. Within days, developers would have them running on CPUs and edge devices.

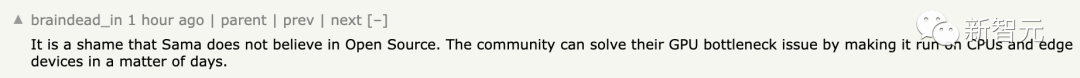

On the GPU shortage, some suspect that OpenAI has cash-flow problems and simply cannot afford enough GPUs.

Others, however, said there is an obvious lack of supply. Unless there is a revolution in chip manufacturing, there will likely always be an undersupply relative to consumer GPUs.

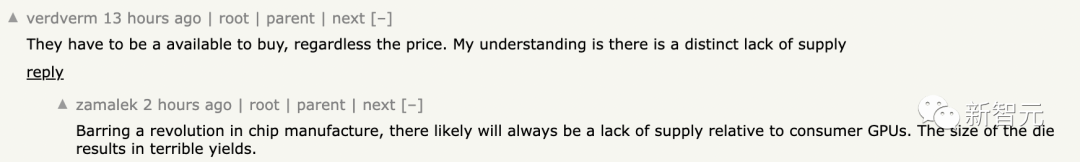

Some netizens wondered whether Nvidia is still undervalued, since the step change in demand for compute may last for years...

Nvidia just joined the trillion-dollar club, and nearly unlimited demand for computing power could lead to a world where chip fabs are worth more than $2 trillion.

Reference:

https://www.php.cn/link/c55d22f5c88cc6f04c0bb2e0025dd70b

https://www.php.cn/link/d5776aeecb3c45ab15adce6f5cb355f3

The above is the detailed content of GPT-3 plans to be open source! Sam Altman revealed that there is an urgent need for GPUs, and GPT-4 multi-modal capabilities will be available next year. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Ten recommended open source free text annotation tools

Mar 26, 2024 pm 08:20 PM

Ten recommended open source free text annotation tools

Mar 26, 2024 pm 08:20 PM

Text annotation is the work of corresponding labels or tags to specific content in text. Its main purpose is to provide additional information to the text for deeper analysis and processing, especially in the field of artificial intelligence. Text annotation is crucial for supervised machine learning tasks in artificial intelligence applications. It is used to train AI models to help more accurately understand natural language text information and improve the performance of tasks such as text classification, sentiment analysis, and language translation. Through text annotation, we can teach AI models to recognize entities in text, understand context, and make accurate predictions when new similar data appears. This article mainly recommends some better open source text annotation tools. 1.LabelStudiohttps://github.com/Hu

15 recommended open source free image annotation tools

Mar 28, 2024 pm 01:21 PM

15 recommended open source free image annotation tools

Mar 28, 2024 pm 01:21 PM

Image annotation is the process of associating labels or descriptive information with images to give deeper meaning and explanation to the image content. This process is critical to machine learning, which helps train vision models to more accurately identify individual elements in images. By adding annotations to images, the computer can understand the semantics and context behind the images, thereby improving the ability to understand and analyze the image content. Image annotation has a wide range of applications, covering many fields, such as computer vision, natural language processing, and graph vision models. It has a wide range of applications, such as assisting vehicles in identifying obstacles on the road, and helping in the detection and diagnosis of diseases through medical image recognition. . This article mainly recommends some better open source and free image annotation tools. 1.Makesens

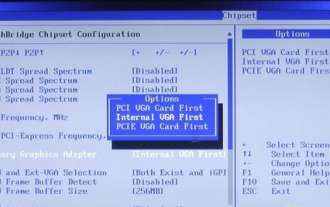

How to turn off win10gpu shared memory

Jan 12, 2024 am 09:45 AM

How to turn off win10gpu shared memory

Jan 12, 2024 am 09:45 AM

Friends who know something about computers must know that GPUs have shared memory, and many friends are worried that shared memory will reduce the number of memory and affect the computer, so they want to turn it off. Here is how to turn it off. Let's see. Turn off win10gpu shared memory: Note: The shared memory of the GPU cannot be turned off, but its value can be set to the minimum value. 1. Press DEL to enter the BIOS when booting. Some motherboards need to press F2/F9/F12 to enter. There are many tabs at the top of the BIOS interface, including "Main, Advanced" and other settings. Find the "Chipset" option. Find the SouthBridge setting option in the interface below and click Enter to enter.

Do I need to enable GPU hardware acceleration?

Feb 26, 2024 pm 08:45 PM

Do I need to enable GPU hardware acceleration?

Feb 26, 2024 pm 08:45 PM

Is it necessary to enable hardware accelerated GPU? With the continuous development and advancement of technology, GPU (Graphics Processing Unit), as the core component of computer graphics processing, plays a vital role. However, some users may have questions about whether hardware acceleration needs to be turned on. This article will discuss the necessity of hardware acceleration for GPU and the impact of turning on hardware acceleration on computer performance and user experience. First, we need to understand how hardware-accelerated GPUs work. GPU is a specialized

News says AMD will launch new RX 7700M / 7800M laptop GPU

Jan 06, 2024 pm 11:30 PM

News says AMD will launch new RX 7700M / 7800M laptop GPU

Jan 06, 2024 pm 11:30 PM

According to news from this site on January 2, according to TechPowerUp, AMD will soon launch notebook graphics cards based on Navi32 GPU. The specific models may be RX7700M and RX7800M. Currently, AMD has launched a variety of RX7000 series notebook GPUs, including the high-end RX7900M (72CU) and the mainstream RX7600M/7600MXT (28/32CU) series and RX7600S/7700S (28/32CU) series. Navi32GPU has 60CU. AMD may make it into RX7700M and RX7800M, or it may make a low-power RX7900S model. AMD is expected to

Beelink EX graphics card expansion dock promises zero GPU performance loss

Aug 11, 2024 pm 09:55 PM

Beelink EX graphics card expansion dock promises zero GPU performance loss

Aug 11, 2024 pm 09:55 PM

One of the standout features of the recently launched Beelink GTi 14is that the mini PC has a hidden PCIe x8 slot underneath. At launch, the company said that this would make it easier to connect an external graphics card to the system. Beelink has n

Recommended: Excellent JS open source face detection and recognition project

Apr 03, 2024 am 11:55 AM

Recommended: Excellent JS open source face detection and recognition project

Apr 03, 2024 am 11:55 AM

Face detection and recognition technology is already a relatively mature and widely used technology. Currently, the most widely used Internet application language is JS. Implementing face detection and recognition on the Web front-end has advantages and disadvantages compared to back-end face recognition. Advantages include reducing network interaction and real-time recognition, which greatly shortens user waiting time and improves user experience; disadvantages include: being limited by model size, the accuracy is also limited. How to use js to implement face detection on the web? In order to implement face recognition on the Web, you need to be familiar with related programming languages and technologies, such as JavaScript, HTML, CSS, WebRTC, etc. At the same time, you also need to master relevant computer vision and artificial intelligence technologies. It is worth noting that due to the design of the Web side

AMD FSR 3.1 launched: frame generation feature also works on Nvidia GeForce RTX and Intel Arc GPUs

Jun 29, 2024 am 06:57 AM

AMD FSR 3.1 launched: frame generation feature also works on Nvidia GeForce RTX and Intel Arc GPUs

Jun 29, 2024 am 06:57 AM

AMD delivers on its initial March ‘24 promise to launch FSR 3.1 in Q2 this year. What really sets the 3.1 release apart is the decoupling of the frame generation side from the upscaling one. This allows Nvidia and Intel GPU owners to apply the FSR 3.