Everyone still remembers how novel ChatGPT's code generation ability seemed when it first came out.

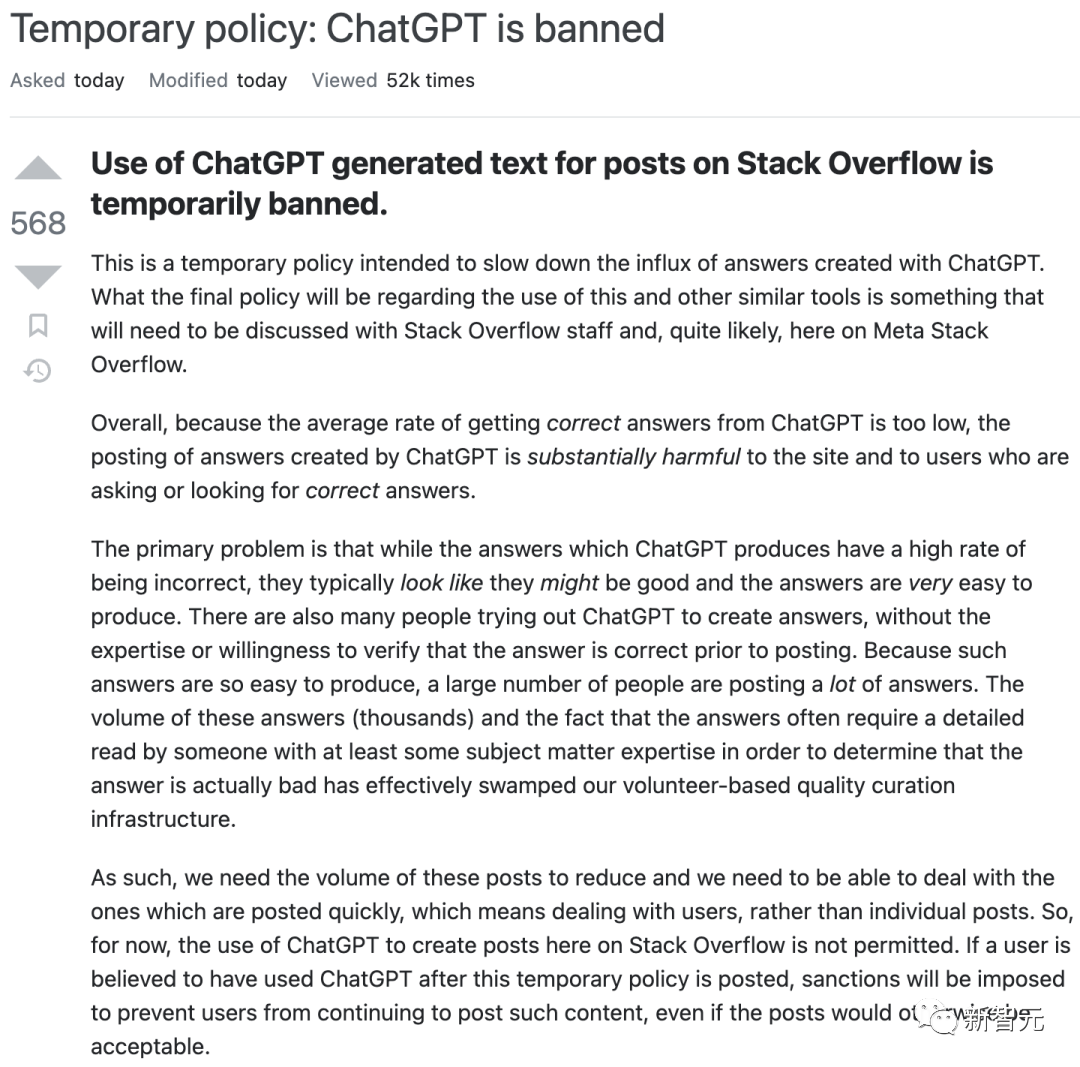

However, as all kinds of plausible-looking but hard-to-verify answers flooded in, Stack Overflow was forced to issue a ban overnight: no ChatGPT!

Specifically, Stack Overflow moderators were allowed to suspend accounts suspected of posting AI-generated content, such as ChatGPT output, and to delete the posts directly.

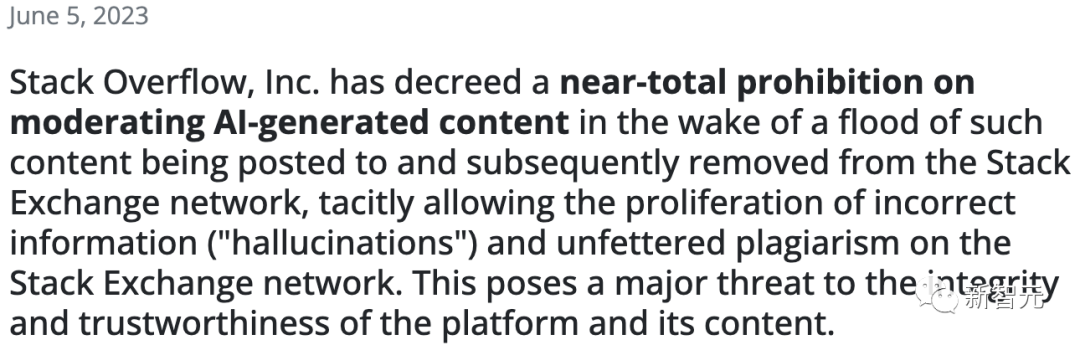

However, Stack Overflow recently issued a new rule:

Given that we currently cannot accurately identify AI-generated content and that the rate of "false positives" is very high, overly aggressive suspensions could cost the site a large number of contributing users. Moderators may therefore suspend an account only when there is genuine, verifiable evidence. Neither subjective guesses, such as those based on writing style, nor the output of GPT detectors may be used as a standard.

As soon as this rule was published, the moderators were up in arms!

In their view, this is simply blatant acquiescence to the unchecked spread of LLM hallucinations, an open destruction of the community's clean environment, a loss of morality, and a distortion of human nature...

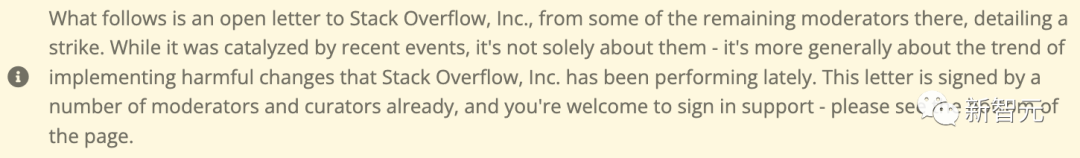

Some of the moderators have gone on strike in anger.

Some moderators and users wrote an open letter angrily protesting the new rules, which they say will completely destroy Stack Overflow, the holy place for code Q&A in everyone's hearts.

They expressed indignation that AI-generated content would "pose a major threat to the integrity and credibility of the platform and content." The company's decision, they said, will undermine Stack Overflow's goal of being a "high-quality information base."

In the past, moderators could delete a post or suspend the account behind it as long as they believed the post was generated by AI.

Now the standard has become very strict, and the methods moderators used in the past no longer apply.

These moderators believe this means that AI-generated content can now be published on Stack Overflow regardless of what the community thinks about it.

From now on, when we open Stack Overflow, we are likely to see a screenful of incorrect information and plagiarized content.

In addition, the new policy will also deprive the Stack Exchange community of room to define its own policies.

Unfortunately, the company and the moderators have so far failed to establish good direct communication. The only option left to the moderators is to go on strike and stop reviewing posts for the platform.

In their view, their strike is a last resort to save the community "from a complete loss of value."

The open letter reads as follows:

After a large amount of AI-generated content was posted and subsequently removed, Stack Overflow issued a new "ban," this time on moderating such content, allowing incorrect information and unchecked plagiarism to flourish on Stack Exchange. This poses a significant threat to the integrity and credibility of the platform and its content.

The undersigned are volunteer moderators, contributors, and users of Stack Overflow and Stack Exchange.

Starting today, we are going on a general strike against Stack Overflow and Stack Exchange to protest the changes to policies and platform rules that Stack Overflow, the company, has imposed and will impose on us.

Our efforts to bring about change through proper channels were ignored, and our concerns were disregarded. Now, as a last resort, we are withdrawing from the platform into which we have invested over a decade of care and volunteer effort.

We firmly believe in Stack Exchange's core mission: to provide a high-quality repository of information in a question-and-answer format. Recent actions by Stack Overflow, Inc. directly undermine that goal.

Specifically, moderators are no longer allowed to delete answers on the grounds that they are AI-generated, except in certain cases. This allows almost any AI-generated answer to be freely published without regard to the established community consensus on such content.

This, in turn, allows incorrect information (commonly known as "hallucinations") and plagiarism to flourish on the platform.

As Stack Overflow, Inc. has pointed out before, this undermines our trust in the platform.

Additionally, the policy details sent directly to moderators differ significantly from the publicly outlined guidelines, and moderators are prohibited from sharing these details in public.

These policies ignore the leeway previously given to each Stack Exchange community to decide on its own policies, were imposed without consulting the community, and overturn the community consensus, and the company has refused to reconsider.

Until this matter is resolved to our satisfaction, we will suspend our activities, including but not limited to the following:

· Raising and handling flags

· Running SmokeDetector, the anti-spam bot

· Closing or voting to close posts

· Deleting or voting to delete posts

· Reviewing tasks in the review queues

· Running bots that assist with review, such as those detecting plagiarism, low-quality answers, and rude comments

Until Stack Overflow, Inc. retracts this new policy, addresses moderators' concerns, and allows moderators to effectively enforce established policies against AI-generated answers, we are calling for a general strike as a last-ditch effort to protect the Stack Exchange platform and its users from a complete loss of value.

At the same time, we also want to remind Stack Overflow that a network that relies entirely on volunteers cannot continue to ignore, abuse, and point the finger at these volunteers.

According to the new policy, Stack Overflow conducted a series of analyses of its current content review methods and found that the mechanisms used to identify AI-generated content were not accurate.

In other words, the determination of whether some content is generated by AI is not necessarily correct, and the responsible moderator or volunteer may make a wrong judgment.

The company is worried that such misjudgments will encourage bias against users from certain regions or countries, or drive away a large number of legitimate contributors.

To address this, the company requires moderators to apply a very strict standard of evidence when determining whether a user's content was generated by AI before suspending the account.

Under the new policy, the heuristics moderators have typically relied on, based on a user's writing style and behavior on the site, may no longer be used, because they are not considered "strict."

The company stated that the methods moderators use cannot deliver a verdict with 100% certainty.

The company also advises against GPT detectors: their false positive rate is unacceptably high, so they cannot serve as a reliable indicator.

In short, the new policy formally restricts the power of moderators. Only conclusions backed by rigorously reviewed evidence may be acted on, for example by suspending an account.

Any method that relies on intuition and guessing should no longer be used.

Why do we have to strike? A moderator's heartfelt grievances

A moderator posted on Stack Overflow and explained in detail why the moderators felt they had to go on strike.

They said that everyone is doing this, more or less, for the benefit of the entire community.

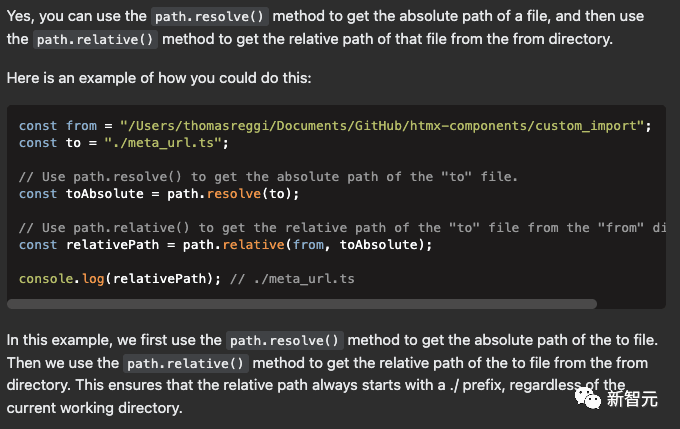

In late November 2022, with the launch of ChatGPT, a large number of users began posting AI-generated answers in droves.

Answers generated with ChatGPT look very similar to answers written by real users.

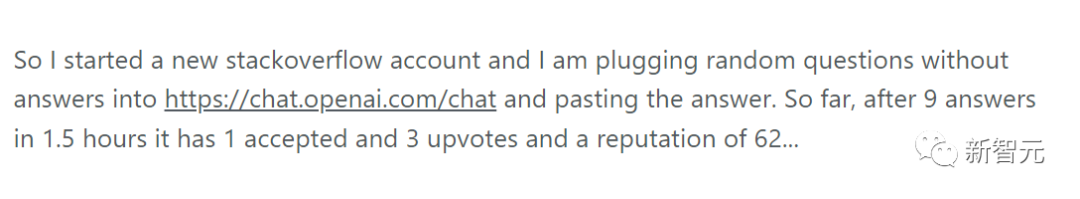

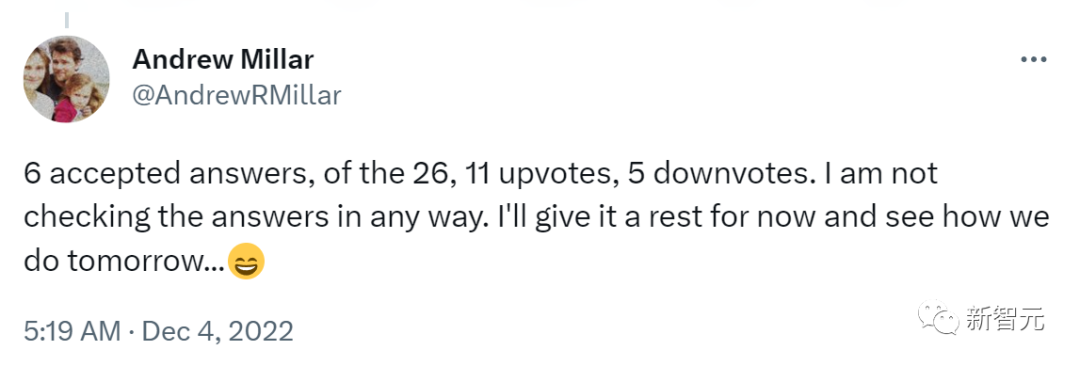

For example, the user below said that he first created a new account, then pasted random unanswered questions into ChatGPT and posted the generated answers.

Within an hour and a half, he posted 9 answers and received 1 accepted answer, 3 upvotes, and 62 reputation points.

These answers, copied and pasted at random from ChatGPT, quickly had a huge impact. The user said he was very much looking forward to what would happen next.

The original ban naturally made sense. Stack Overflow has relied on review by community volunteers to keep running since it was founded in 2008.

But answers generated by ChatGPT usually look plausible, and with countless users posting them at a frantic pace, they placed a huge burden on the volunteers.

Therefore, the community had to issue a ban.

The moderator said that after the ban was issued, the moderators worked hard, took a direct part in managing the community, and helped in every way to get the policy implemented.

Handling posts is also a laborious process.

The review team uses JavaScript scripts to help with review. When they need a tool, they write it themselves. They also extend the site's existing functionality.

Every day, moderators deal with a large number of posts and are sometimes subjected to abuse.

So when they received the new policy on Monday, the moderators were exhausted. After all, they are volunteers, not regular employees, and they have already given a great deal to the community...

What do the striking moderators want?

Ultimately, it comes down to solving problems.

Some issues need to be acknowledged by the company itself, and others need staff to discuss them openly so that it is clear what should be done.

The ChatGPT rules were developed jointly by the entire community and have widespread support.

Staff should not use only non-public channels (chat rooms and moderator teams) to make sweeping rule changes, because that means the majority of users will never be aware of them.

Moderators are required to handle flags according to a rule that has never been made public. In fact, everything on the site indicates that the old policy is still in effect.

As a result, moderators will keep receiving flags that they cannot handle in a way consistent with the new policy. Eventually, the community is bound to notice the discrepancy.

The few moderators who posted to explain the policy change before it was announced publicly actually ran the risk of losing their moderator privileges and their access to the moderator team. This is very unfair to the moderators.

Philippe wrote a post angrily criticizing the new regulations. He said that the company's stated worry about possible racial discrimination amounts to a bare accusation against the moderators. Obviously, no one wants to be accused of racism.

At worst, the new rules reflect a perception among employees that moderators and the community are at odds with each other.

The staff's wording of the policy was very vague (as noted earlier, the public and private versions differ), and they gave no specific examples of cases they felt had been handled incorrectly.

They want moderators to stop using GPT detectors as the sole criterion, which is certainly feasible. But when someone posts more than a dozen long answers within two hours, it is obvious that they did not write all of them themselves and are simply copying and pasting to answer questions.

Per policy, this is unacceptable, but how are moderators supposed to handle it?

Can moderators use detection tools without direct evidence of plagiarism?

However, the reality is that no one tells the moderators what exactly they should do...
