Detailed explanation of Transformer structure and its applications - GPT, BERT, MT-DNN, GPT-2

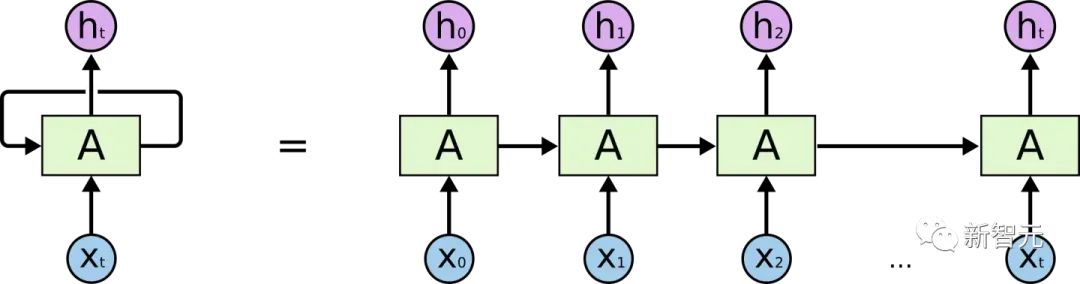

Before introducing the Transformer, let's review the structure of the RNN.

If you have some understanding of RNNs, you will know that they have two obvious problems:

- Efficiency: an RNN must process a sentence word by word, and the next word cannot be processed until the hidden state of the previous word has been computed.

- Long-distance dependencies: if information has to be passed over too many steps, gradients vanish or explode and early inputs are forgotten.

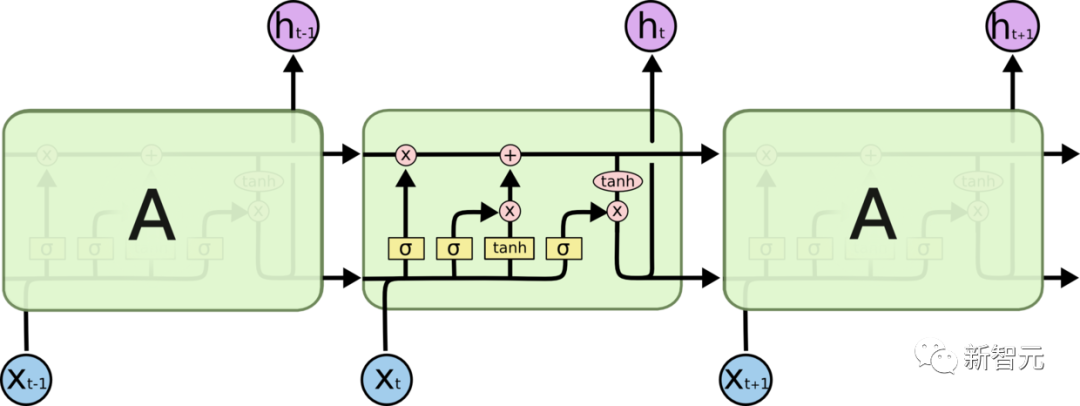

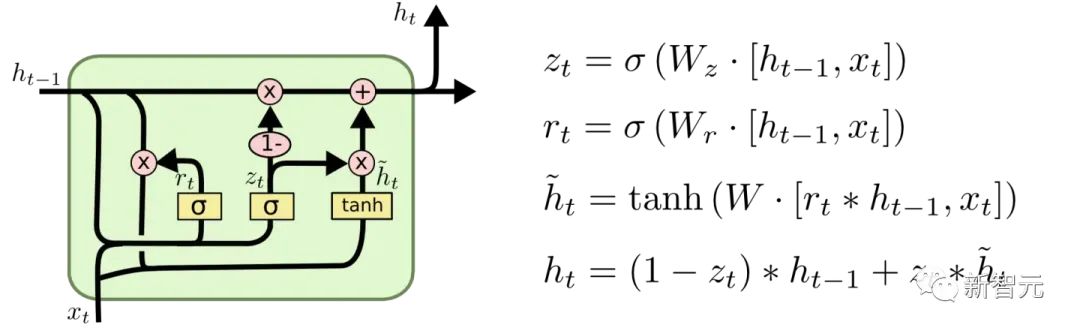

To alleviate the gradient and forgetting problems, various RNN cells have been designed. The two best known are LSTM and GRU.

LSTM (Long Short-Term Memory)

GRU (Gated Recurrent Unit)

However, to borrow a metaphor from a blogger, this is like changing the wheels of a carriage. Why not replace the carriage with a car?

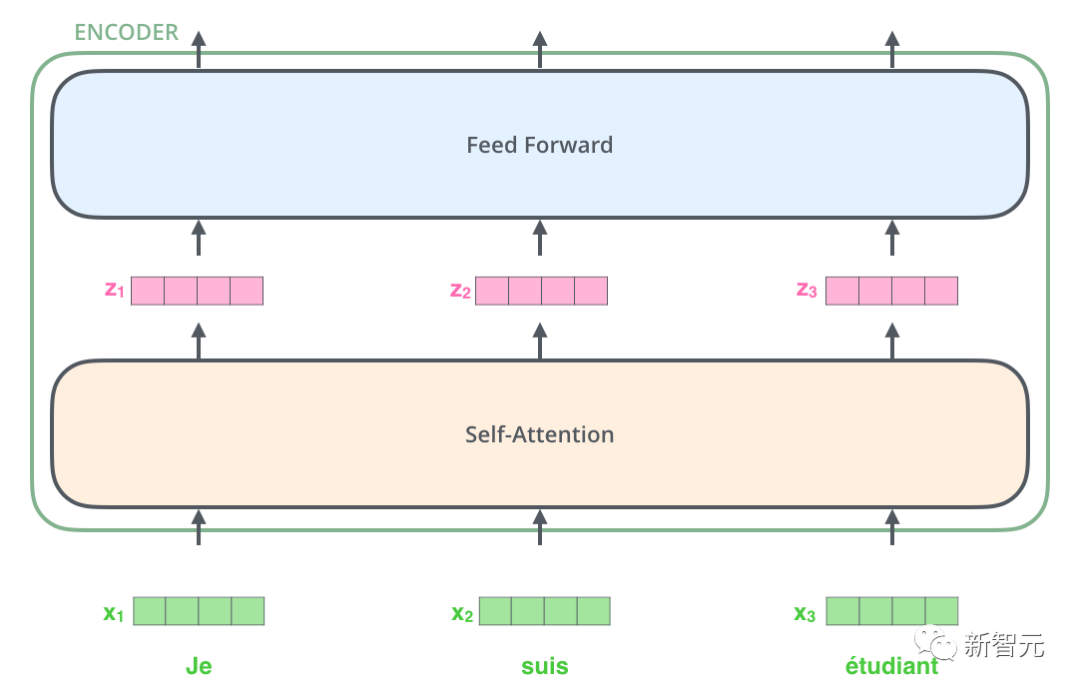

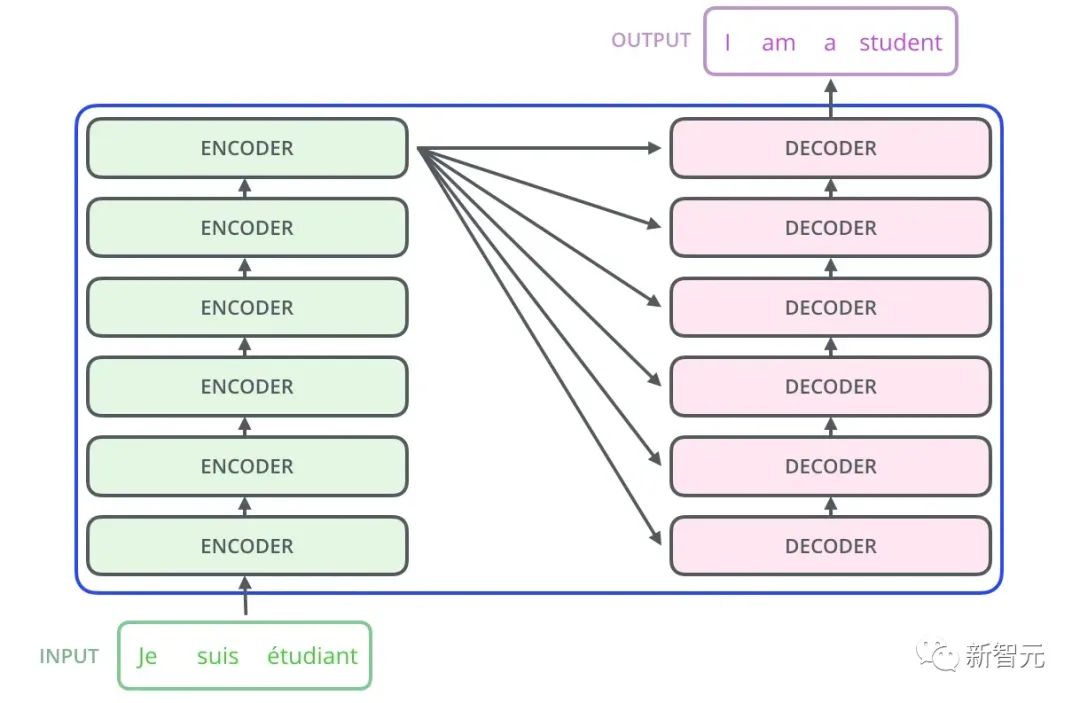

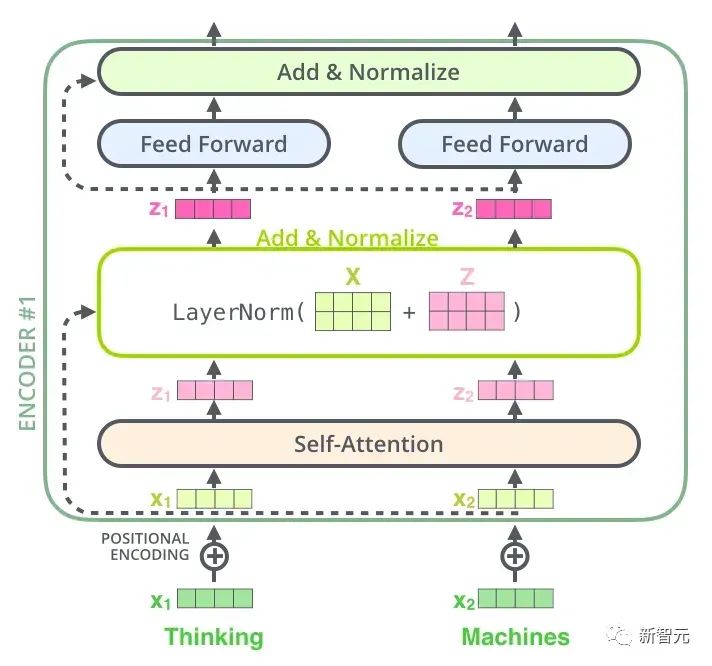

This brings us to the core structure of this article, the Transformer. The Transformer was proposed by Google Brain in 2017. It was designed around the weaknesses of the RNN, addresses its efficiency and long-distance problems, and outperforms RNNs on many tasks. The basic structure of the Transformer is shown in the figure below. It is an N-in, N-out structure: each Transformer unit is equivalent to one RNN layer, receives all the words of a sentence as input, and produces one output for each word. Unlike an RNN, however, the Transformer processes all the words in the sentence at the same time, and the operating distance between any two words is 1. This effectively solves the efficiency and distance problems of the RNN mentioned above.

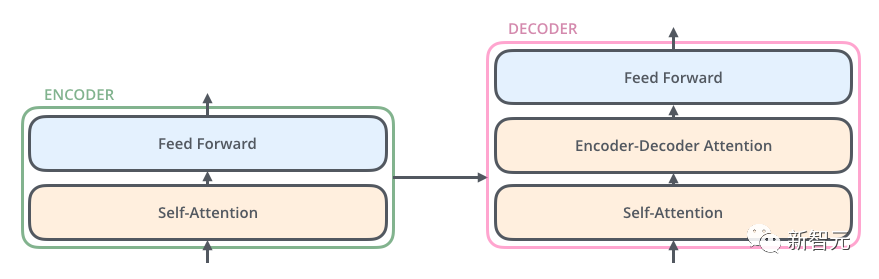

Each Transformer unit has two main sub-layers, the Self-Attention layer and the Feed Forward layer; their detailed structure is introduced later. The Transformer paper uses these units to build a Seq2Seq-style translation model and designs two different Transformer structures for the Encoder and the Decoder.

Compared with the Encoder Transformer, the Decoder Transformer has an additional Encoder-Decoder Attention layer, which receives the output of the Encoder as input. Stacking the units as shown in the figure below completes the Transformer Seq2Seq structure.

Here is an example of how to use this Transformer Seq2Seq model for translation:

- First, the Encoder encodes the sentence in the source language and obtains the memory.

- In the first decoding step, the input contains only a start-of-sentence token, indicating the beginning of the sentence.

- The decoder produces a single output from this single input, which is used to predict the first word of the sentence.

- In the second decoding step, the first output is appended to the input, so the input becomes the start-of-sentence token followed by the first predicted word. The process repeats until the whole sentence has been generated.
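The decoding steps above can be sketched as a simple greedy loop. This is only an illustration under stated assumptions: `encode` and `decode_step` are hypothetical stand-ins for a trained Encoder and Decoder, the token names `<sos>` and `<eos>` are made up for the example, and the toy "model" just looks words up in a small dictionary.

```python
# Hypothetical special tokens; real models define their own.
SOS, EOS = "<sos>", "<eos>"

def greedy_decode(encode, decode_step, source, max_len=20):
    """Encode once, then grow the decoder input one word at a time."""
    memory = encode(source)      # encoder output, computed a single time
    output = [SOS]               # first decoding step: only the start token
    for _ in range(max_len):
        next_word = decode_step(memory, output)
        if next_word == EOS:
            break
        output.append(next_word)  # append the new word to the decoder input
    return output[1:]             # drop the start token

# Toy stand-ins for a trained model (assumptions, for illustration only).
TOY_DICT = {"ich": "i", "liebe": "love", "dich": "you"}

def toy_encode(source):
    return [TOY_DICT[word] for word in source]

def toy_decode_step(memory, tokens):
    produced = len(tokens) - 1    # number of words generated so far
    return memory[produced] if produced < len(memory) else EOS

translation = greedy_decode(toy_encode, toy_decode_step, ["ich", "liebe", "dich"])
print(translation)  # ['i', 'love', 'you']
```

A real system would usually replace the greedy choice with beam search, but the loop structure stays the same.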

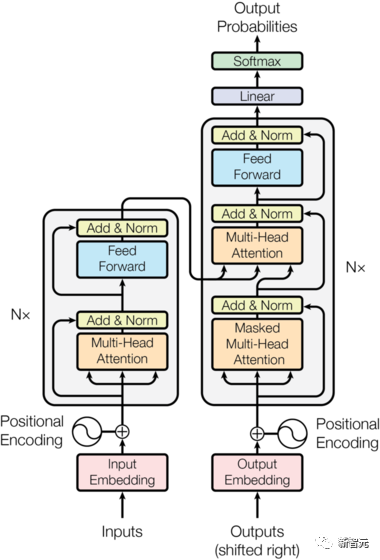

After understanding the general structure of the Transformer and how to use it for translation, let's look at its detailed structure:

The core components are the Self-Attention and Feed Forward networks mentioned above, but there are many other details. Next, we interpret the Transformer structure by structure.

Self Attention

Self Attention means that each word in a sentence attends to all the words in the sentence, including itself. The weight of every word with respect to the current word is computed, and the current word is then represented as the weighted sum of all words. Each Self Attention operation acts like a convolution or aggregation operation centered on each word. The specific operation is as follows:

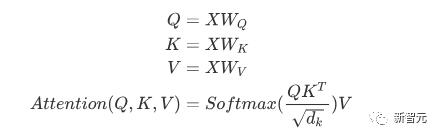

First, each word vector undergoes three linear transformations through the matrices Wq, Wk, and Wv, producing three vectors for each word: its query, key, and value. When performing Self Attention centered on a word, the query vector of that word is dotted with the key vector of every word, and the resulting scores are normalized into weights through Softmax. These weights are then used to compute the weighted sum of the value vectors of all words, which is the output for this word. The specific process is shown in the figure below:

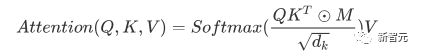

Before the Softmax, the scores are scaled by dividing by the square root of the key dimension dk, so the final Self Attention can be expressed in matrix form as

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

Finally, each Self Attention layer accepts n word vectors as input and outputs n aggregated vectors.
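To make the n-in, n-out behavior concrete, here is a minimal NumPy sketch of scaled dot-product Self Attention. The sizes and the random matrices are assumptions chosen for illustration; in a trained model, Wq, Wk, and Wv are learned parameters.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """X holds one word vector per row; returns one aggregated vector per word."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv         # query, key, value for every word
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)          # (n, n): query of word i against key of word j
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights              # weighted sum of the value vectors

rng = np.random.default_rng(0)
n, d_model, d_k = 4, 8, 8                    # illustrative sizes (assumptions)
X = rng.normal(size=(n, d_model))
Wq, Wk, Wv = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, Wq, Wk, Wv)
print(out.shape)  # (4, 8)
```

Row i of `weights` shows how much word i attends to every word in the sentence, which is the "weight of each word for this word" described above.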

As mentioned above, attention in the Encoder differs from attention in the Decoder. In the Encoder, Q, K, and V all come from the output of the previous layer. In the Decoder's Encoder-Decoder Attention, only Q comes from the output of the previous Decoder unit, while K and V both come from the output of the last Encoder layer. In other words, the Decoder computes weights from its current state and the Encoder's output, and then takes a weighted sum of the Encoder's encoding to obtain the state for the next layer.
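The difference in where Q, K, and V come from can be sketched as follows. The function name `encoder_decoder_attention`, the sizes, and the random weights are assumptions for illustration only.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def encoder_decoder_attention(dec_states, memory, Wq, Wk, Wv):
    Q = dec_states @ Wq   # queries come from the decoder side
    K = memory @ Wk       # keys come from the encoder output
    V = memory @ Wv       # values come from the encoder output
    weights = softmax(Q @ K.T / np.sqrt(K.shape[-1]))  # (m, n)
    return weights @ V    # one vector per decoder position

rng = np.random.default_rng(0)
d = 8
memory = rng.normal(size=(6, d))      # encoder output for a 6-word source sentence
dec_states = rng.normal(size=(3, d))  # decoder states for 3 target positions
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))

out = encoder_decoder_attention(dec_states, memory, Wq, Wk, Wv)
print(out.shape)  # (3, 8)
```

The output has one row per decoder position, even though every row is a mixture of encoder vectors.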

Masked Attention

Looking at the structure diagram above, we can find another difference between the Decoder and the Encoder: the input of each Decoder unit first passes through a Masked Attention layer. So what is the difference between Masked Attention and ordinary Attention?

The Encoder encodes the entire sentence, so it needs to consider the full context of each word, and every word can see all the words in the sentence during computation. The Decoder, like the decoder in Seq2Seq, lets each word see only the words before it (and itself), so it is a one-way Self-Attention structure.

Masked Attention is also simple to implement: before the Softmax step of ordinary Self Attention, combine the scores with a lower triangular matrix M, so that each position keeps only the scores for itself and earlier positions.
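One common way to apply the lower triangular matrix M is to set the masked-out scores to negative infinity so that they become zero after the Softmax. The sketch below assumes that approach; the text above does not specify the exact operation, so treat the details as an illustration.

```python
import numpy as np

def masked_softmax(scores):
    """Softmax over each row, keeping only position i and the positions before it."""
    n = scores.shape[-1]
    M = np.tril(np.ones((n, n), dtype=bool))   # lower triangular mask
    masked = np.where(M, scores, -np.inf)      # hide the "future" positions
    masked = masked - masked.max(axis=-1, keepdims=True)
    e = np.exp(masked)                         # exp(-inf) is exactly 0
    return e / e.sum(axis=-1, keepdims=True)

scores = np.zeros((3, 3))                      # equal raw scores, for readability
weights = masked_softmax(scores)
print(weights)
# Row 0 attends only to word 0, row 1 splits evenly over words 0 and 1,
# and row 2 splits evenly over all three words.
```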

Multi-Head Attention

Multi-Head Attention performs the Attention above h times and then concatenates the h outputs to obtain the final output. This greatly improves the stability of the algorithm and is used in many Attention-related works. In the Transformer implementation, to make Multi-Head efficient, W is expanded by a factor of h, and view (reshape) and transpose operations arrange the q, k, and v of the different heads of the same word so that all heads are computed at once. After the computation, another transpose and reshape concatenates the results, which amounts to processing all heads in parallel.
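The reshape and transpose trick can be sketched in NumPy as below. Each projection matrix holds the weights of all h heads side by side. The final output projection `Wo` and all sizes are assumptions for illustration; they are not taken from the text above.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(X, Wq, Wk, Wv, Wo, h):
    n, d_model = X.shape
    d_head = d_model // h

    def split_heads(T):
        # (n, d_model) -> (h, n, d_head): one slice of columns per head
        return T.reshape(n, h, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ Wq), split_heads(X @ Wk), split_heads(X @ Wv)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)    # (h, n, n), all heads at once
    heads = softmax(scores) @ V                            # (h, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)  # concatenate the heads again
    return concat @ Wo                                     # output projection (assumption)

rng = np.random.default_rng(0)
n, d_model, h = 5, 16, 4
X = rng.normal(size=(n, d_model))
Wq, Wk, Wv, Wo = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out = multi_head_attention(X, Wq, Wk, Wv, Wo, h)
print(out.shape)  # (5, 16)
```

Because the heads live on a leading batch axis, a single batched matrix product replaces a Python loop over heads.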

Position-wise Feed Forward Networks

In both the Encoder and the Decoder, the n vectors output by Attention (where n is the number of words) are each fed into the same fully connected layers, which forms a position-wise feed-forward network.
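As a rough sketch, the position-wise network can be written as two linear layers with a ReLU in between, which is the form used in the original Transformer paper. That form, the sizes, and the random weights below are assumptions, since the formula itself is not reproduced in this article.

```python
import numpy as np

def position_wise_ffn(X, W1, b1, W2, b2):
    """Apply the same two-layer network to every position (row) independently."""
    return np.maximum(0, X @ W1 + b1) @ W2 + b2

rng = np.random.default_rng(0)
n, d_model, d_ff = 5, 8, 32                 # illustrative sizes (assumptions)
X = rng.normal(size=(n, d_model))
W1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

Y = position_wise_ffn(X, W1, b1, W2, b2)

# "Position-wise" means that processing one word at a time gives the same result.
row_by_row = np.stack([position_wise_ffn(x, W1, b1, W2, b2) for x in X])
print(np.allclose(Y, row_by_row))  # True
```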

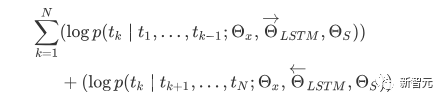

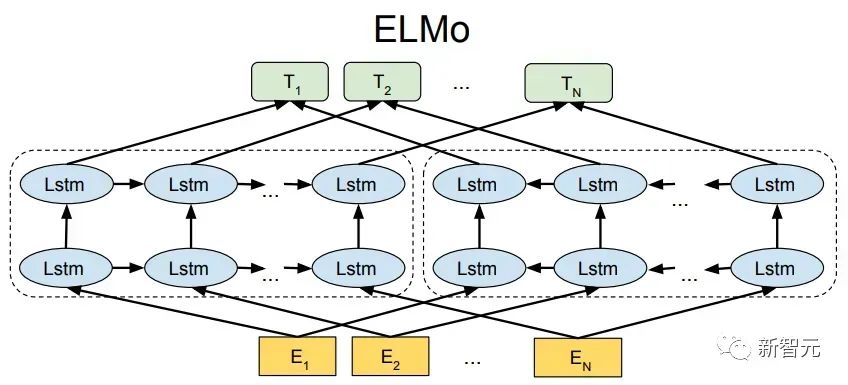

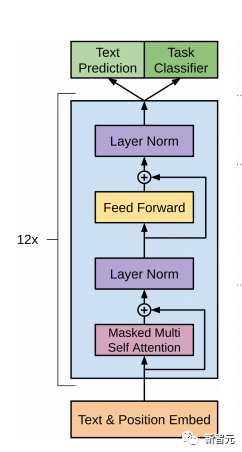

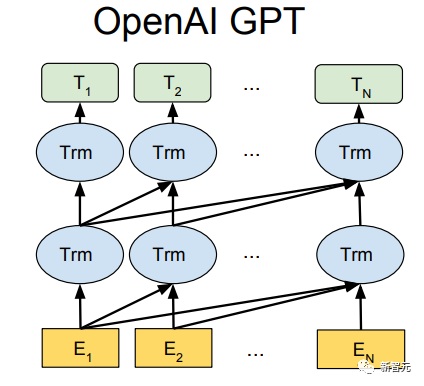

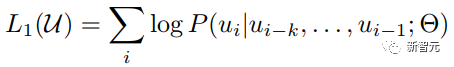

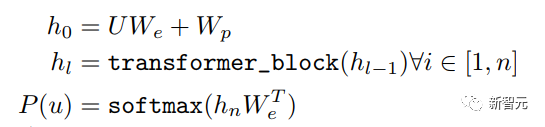

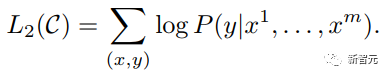

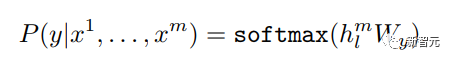

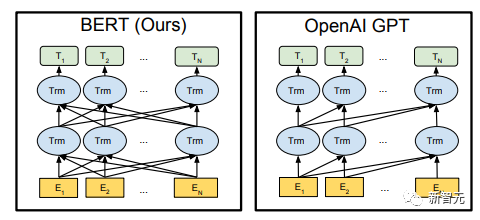

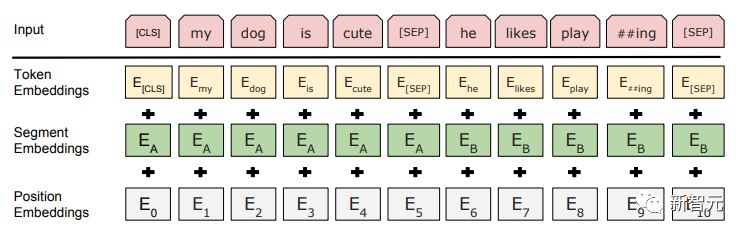

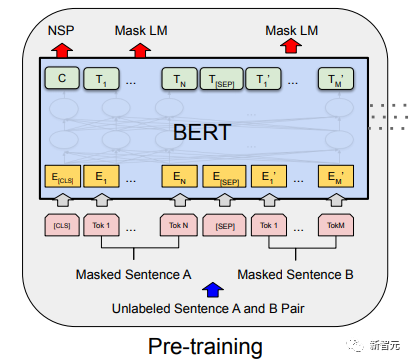

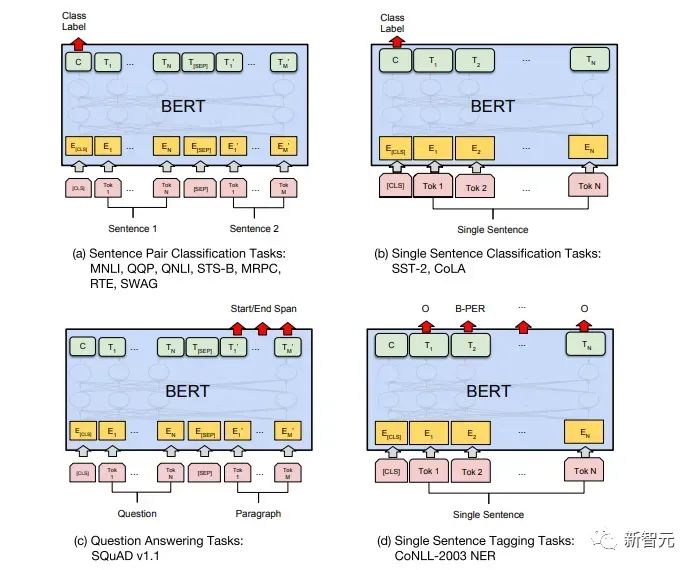

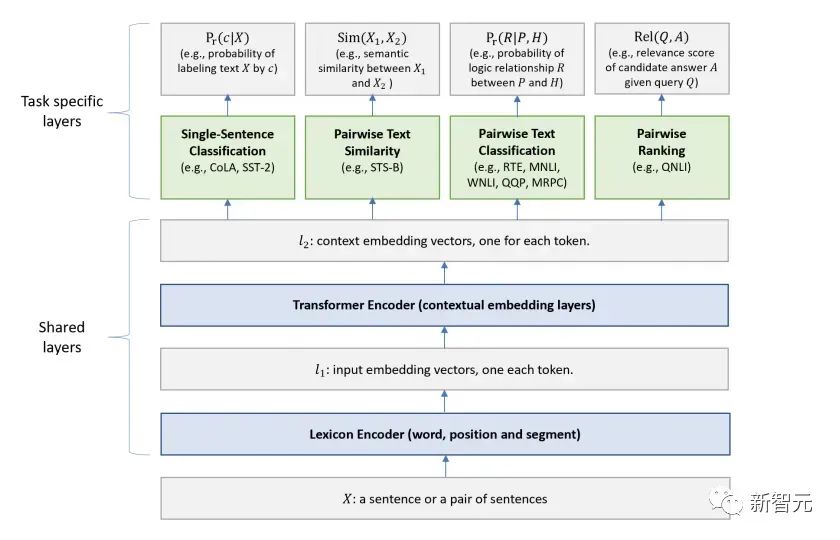

Add & Norm

Add & Norm is a residual connection followed by normalization: the input of a layer is added to the layer's output, and the result is normalized. Each Self Attention layer and each FFN layer in the Transformer is followed by an Add & Norm layer.

Positional Encoding

Since the Transformer has neither the recurrence of an RNN nor the convolution of a CNN, all words in a sentence are treated equally and there is no order relationship between them. In other words, it would suffer from the same shortcoming as the bag-of-words model. To solve this problem, the Transformer uses Positional Encoding: a fixed vector representing the position is added to each input word vector. The Positional Encoding used in the paper is as follows:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

where pos is the position of the word in the sentence and i indexes the dimensions of the word vector (dimensions 2i and 2i+1 form a sine/cosine pair). That is, if the word vectors of all the words are stacked as rows, a wave with a different phase and a gradually increasing wavelength is added to each column, which uniquely identifies each position.

Transformer workflow

The workflow of the Transformer is the combination of the sub-processes introduced above.

GitHub link: https://github.com/harvardnlp/annotated-transformer

Post Scriptum

Although the Transformer paper proposes a model for natural language translation, and many articles call that whole model the Transformer, we prefer to use the name Transformer for the Encoder or Decoder substructure that uses Self-Attention. The paper and source code also contain many other optimizations, such as a dynamic learning rate, Residual Dropout, and Label Smoothing, which are not covered here. Interested readers can consult the references to learn more.

One-way two-stage training model - OpenAI GPT

GPT (Generative Pre-Training) is a model proposed by OpenAI in 2018. It uses the Transformer to solve various natural language problems, such as classification, inference, question answering, and similarity.
GPT adopts the pre-training plus fine-tuning training mode, which makes it possible to exploit large amounts of unlabeled data and greatly improves results on these problems. GPT is one of the attempts to use the Transformer for a variety of natural language tasks. It has three main points:

- the pre-training method

- the one-way Transformer structure

- fine-tuning and the changes to the input data structure for different tasks

If you already understand the principle of the Transformer, understanding these three points is enough to understand GPT in depth.

Pre-Training training method

Many machine learning tasks require labeled datasets as input. But we are surrounded by large amounts of unlabeled data, such as text, images, and code. Labeling this data takes a lot of manpower and time, and labeling is far slower than data generation, so labeled data usually makes up only a small part of all available data. As computing power improves, the amount of data computers can process keeps growing. It would be a waste not to make good use of this unlabeled data.

This is why semi-supervised learning and the two-stage pre-training plus fine-tuning model are becoming more and more popular. The most common two-stage method is Word2Vec, which uses a large amount of unlabeled text to train word vectors that carry some semantic information, and then uses these word vectors as input to downstream machine learning tasks, which can greatly improve the generalization ability of the downstream model.

But Word2Vec has a problem: each word has only one embedding, so polysemy cannot be represented well.

ELMo was the first to provide contextual information for each word in the pre-training stage, using a bi-LSTM language model to bring contextual semantic information into the word vectors. Its objective consists of two language models, a left-to-right and a right-to-left LSTM-RNN.
They share the input word vectors X and the Softmax-layer weights S; that is, the outputs of the two directions of the bidirectional RNN are used to predict the next word in each direction (the next word on the right for the forward model, the previous word on the left for the backward model). The specific structure is shown in the figure below:

But ELMo uses an RNN to pre-train the language model. So how can the Transformer be used for pre-training?

One-way Transformer structure

OpenAI GPT uses a one-way Transformer to complete this pre-training task. What is a one-way Transformer? The Transformer paper notes that the Transformer Blocks used by the Encoder and the Decoder are different. The Decoder Block uses Masked Self-Attention, in which each word in the sentence can attend only to the words before it and to itself. This is a one-way Transformer. The Transformer structure used by GPT replaces the Self-Attention of the Encoder with Masked Self-Attention. The specific structure is shown in the figure below:

Because a one-way Transformer is used and only the preceding words can be seen, the language model predicts each word from the words before it.

The training process is actually very simple: the word vectors of the n words in the sentence (the first one being a start-of-sentence token) are added to the Positional Encoding and fed into the Transformer, and the output at each position is used to predict the next word.

Because of Masked Self-Attention, the word at each position cannot "see" the following words, so the "answer" is invisible at prediction time, which keeps the model sound. This is why OpenAI uses a one-way Transformer.

Fine-Tuning and changes in different input data structures

The second stage of training uses a small amount of labeled data to fine-tune the model parameters.

The output of the last word was not used in the previous stage. In this stage, that output serves as the input for downstream supervised learning.
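As a rough sketch of this step, the output vector at the last position can be passed through one extra linear layer and a Softmax to obtain class probabilities. The matrix `W_y`, the sizes, and the random "Transformer outputs" below are hypothetical stand-ins, not the actual GPT code.

```python
import numpy as np

def classify_from_last_token(hidden_states, W_y):
    """Use the Transformer output at the last position as the sentence representation."""
    h_last = hidden_states[-1]           # output vector of the last word
    logits = h_last @ W_y                # linear layer added for the downstream task
    logits = logits - logits.max()       # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum()               # class probabilities

rng = np.random.default_rng(0)
n, d_model, n_classes = 6, 8, 3          # illustrative sizes (assumptions)
hidden_states = rng.normal(size=(n, d_model))  # stand-in for the Transformer outputs
W_y = rng.normal(size=(d_model, n_classes))

probs = classify_from_last_token(hidden_states, W_y)
print(probs.shape)  # (3,)
```

Only the last position is used because, with Masked Self-Attention, it is the only position that has seen the whole sentence.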
To prevent Fine-Tuning from overfitting, the paper also proposes an auxiliary training objective, similar to a multi-task model or semi-supervised learning. Specifically, while the prediction for the last word is used for supervised learning, the unsupervised language-model training on the preceding words continues, so the final loss function becomes the supervised loss plus a weighted language-model loss.

For different tasks, the format of the input data needs to be modified:

GitHub link: https://github.com/openai/finetune-transformer-lm

Post Scriptum

OpenAI GPT made valuable explorations of the Transformer and the two-stage training method and achieved very good results, paving the way for the later BERT.

BERT

BERT (Bidirectional Encoder Representations from Transformers) is a Transformer-based natural language representation framework proposed by Google in 2018. It became a star model as soon as it was proposed. Like GPT, BERT adopts the pre-training plus fine-tuning method and achieves better results on tasks such as classification and labeling.

BERT and GPT are very similar. Both are two-stage Transformer-based models with a Pre-Training stage and a Fine-Tuning stage. In the Pre-Training stage, both train a general Transformer model in an unsupervised manner, and in the Fine-Tuning stage the parameters of this model are fine-tuned to adapt it to different downstream tasks.

Although BERT and GPT look very similar, their training objectives, model structures, and usage differ slightly.

Bidirectional Transformer

BERT uses a Transformer without the Mask, that is, exactly the structure of the Encoder Transformer in the Transformer paper:

In GPT, because a language model has to be trained, Pre-Training requires that only the current and previous words be visible when predicting the next word. This is why GPT abandoned the original two-way structure of the Transformer and adopted a one-way structure.

To obtain context from both directions instead of giving up the following context as GPT does, BERT uses a two-way Transformer. But then a normal language model can no longer be used for pre-training as in GPT, because the structure of BERT lets the output at every position see the entire sentence. Whatever you predict from that output, you will "see" the reference answer. This is the "see itself" problem. ELMo also uses a bidirectional RNN, but its two RNNs are independent, so it avoids this problem.

Pre-Training stage

If BERT wants to use a two-way Transformer, it has to give up the language model that GPT uses as the pre-training objective. Instead, BERT proposes a completely different pre-training method.

In a Transformer, we want the model to know both the preceding and the following context while making sure it knows nothing about the word to be predicted. The simplest way is not to tell the model about that word. That is, BERT removes some words from the input sentence, analyzes the sentence through the remaining context, and uses the output at each removed position to predict the removed word. This is like a cloze test, and the task is known as the Masked Language Model (MLM).

However, directly replacing a large number of words with a mask token causes problems, since the mask token does not appear in downstream tasks. BERT therefore uses the following strategy:

1. Randomly select 15% of the words in the input for prediction.
2. For 80% of the selected words, the input word vector is replaced with the mask token.
3. For 10% of the selected words, the input word vector is replaced with the word vector of another word.
4. The remaining 10% are left unchanged.

This is equivalent to telling the model: I may give you the answer, I may give you no answer, or I may give you a wrong answer. Your prediction is checked at every selected position either way.
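The 15% / 80% / 10% / 10% strategy can be sketched with the standard library alone. This is a simplified illustration, not BERT's actual preprocessing code: the token string `[MASK]`, the function name `mask_tokens`, and the toy vocabulary are assumptions, and each word is selected independently with probability 0.15 instead of selecting an exact 15% of the sentence.

```python
import random

MASK = "[MASK]"  # hypothetical name for the mask token

def mask_tokens(tokens, vocab, select_prob=0.15, seed=0):
    """Return (inputs, targets); targets[i] is None where no prediction is required."""
    rng = random.Random(seed)
    inputs = list(tokens)
    targets = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= select_prob:
            continue                        # not selected: left alone, not predicted
        targets[i] = tok                    # the model must predict the original word
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK                # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)   # 10%: replace with a random word
        # remaining 10%: keep the original word
    return inputs, targets

vocab = ["the", "cat", "sat", "on", "mat", "dog", "ran"]
tokens = [vocab[i % len(vocab)] for i in range(10000)]
inputs, targets = mask_tokens(tokens, vocab)

selected = [i for i, t in enumerate(targets) if t is not None]
masked = [i for i in selected if inputs[i] == MASK]
print(len(selected) / len(tokens))   # roughly 0.15
print(len(masked) / len(selected))   # roughly 0.8
```

The loss is computed only at positions where `targets[i]` is not `None`, regardless of which of the three replacements was applied.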
I will check your answer where there is BERT also proposed another pre-training method NSP, which is similar to MLM Performed simultaneously to form multi-task pre-training. This pre-training method is to input two consecutive sentences into the Transformer. The left sentence is preceded by a In order to distinguish the relationship between the two sentences, BERT not only adds Positional Encoding, but also adds a Segment Embedding that needs to be learned during pre-training to distinguish the two sentences. . In this way, BERT's input consists of the addition of three parts: word vector, position vector, and segment vector. Additionally, the two sentences are distinguished using the The overall Pre-Training diagram is as follows: Fine-Tuning stage BERT’s Fine-Tuning stage is not much different from GPT. Because of the use of a two-way Transformer, the auxiliary training target used by GPT in the Fine-Tuning stage, which is the language model, is abandoned. In addition, the output vector for classification prediction is changed from the output position of the last word of GPT to the position of GitHub link: https://github.com/google-research/bert Post Scriptum Personally believe that BERT is just a trade-off of the GPT model. In both stages, sentence context information can be obtained simultaneously, using a bidirectional Transformer model. But for this, we have to pay the price of losing the traditional language model, and instead use a more complex method such as MLM NSP for pre-training. Multi-task model - MT-DNN MT-DNN (Multi-Task Deep Neural Networks) still uses BERT’s two-stage training method and bidirectional Transformer. In the Pre-Training stage, MT-DNN is almost identical to BERT, but in the Fine-Tuning stage, MT-DNN adopts a multi-task fine-tuning method. 
It trains simultaneously on tasks such as single-sentence classification, text-pair similarity, text-pair classification, and question answering, all using the contextual Embedding output by the Transformer. The entire structure is shown below:

GitHub link: https://github.com/namisan/mt-dnn

One-way universal model - GPT-2

GPT-2 continues to use the one-way Transformer of the original GPT. The aim of the GPT-2 paper is to exploit the one-way Transformer as fully as possible and do what the two-way Transformer used by BERT cannot do: generate the following text from the preceding text.

The idea of GPT-2 is to abandon the Fine-Tuning process entirely and instead use a larger-capacity, more general language model trained without supervision to complete a variety of tasks. We do not need to define which tasks the model should perform at all, because the information carried by many labels already exists in the corpus. Just as a person who has read many books can summarize, answer questions, and continue writing articles based on what they have read.

Strictly speaking, GPT-2 may not be a multi-task model, but it does use the same model and the same parameters to complete different tasks.

Usually we train a dedicated model for a specific task: given an input, it returns the output for that task, which can be written as p(output | input).

A general model must instead take both an input and a task type and produce the output appropriate to both, which can be written as p(output | input, task).

For example, to translate a sentence I would need to design a translation model, and for a question answering system I would need to design a dedicated question answering model. But if a model is smart enough to continue whatever context it is given, we can distinguish the various problems by adding some identifiers to the input.
For example, we can ask it directly: ('Natural Language Processing', Chinese translation) to get the result we need, the Chinese translation of "Natural Language Processing". In my understanding, GPT-2 is more like an omniscient question answering system: given the identifier of a task, it can give appropriate answers for a wide range of fields and tasks. GPT-2 works in the zero-shot setting: it does not need to be told during training which tasks it should complete, and it can still give reasonable answers at prediction time.

So what did GPT-2 do to meet these requirements?

Broaden and enlarge the data set

The first step is to make the model well read. If there are not enough training samples, how can it reason? Previous work focused on one specific problem, so the datasets were relatively narrow. GPT-2 collects a larger and broader dataset while ensuring its quality by keeping only web pages with high-quality content. The result is WebText, a dataset of 8 million documents totaling 40 GB.

Expand network capacity

With that many books to read, the model needs more capacity, otherwise it cannot remember what is in them. To increase the capacity of the network and give it stronger learning potential, GPT-2 increases the number of stacked Transformer layers to 48 and the hidden dimension to 1600, bringing the number of parameters to 1.5 billion.

Adjust the network structure

GPT-2 increases the vocabulary to 50257, the maximum context size from GPT's 512 to 1024, and the batch size to 512. In addition, small adjustments were made to the Transformer: the normalization layer was moved to the input of each sub-block, an extra normalization layer was added after the last Self-Attention block, the initialization of the residual layers was changed, and so on.
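A back-of-the-envelope calculation shows how the figures above add up to roughly 1.5 billion parameters. The sketch assumes a standard Transformer block (four d×d attention matrices and a feed-forward layer with inner size 4×d) plus learned token and position embeddings, and it ignores biases and normalization parameters. These structural details are assumptions, not taken from the text above.

```python
def estimate_params(n_layer, d_model, vocab_size, n_ctx):
    """Rough parameter count; biases and normalization layers are ignored."""
    attention = 4 * d_model * d_model            # Wq, Wk, Wv and the output projection
    feed_forward = 2 * d_model * (4 * d_model)   # two linear layers, inner size 4*d_model
    per_layer = attention + feed_forward
    embeddings = vocab_size * d_model + n_ctx * d_model  # token + position embeddings
    return n_layer * per_layer + embeddings

total = estimate_params(n_layer=48, d_model=1600, vocab_size=50257, n_ctx=1024)
print(f"{total / 1e9:.2f} billion parameters")  # 1.56 billion parameters
```

Almost all of the parameters sit in the 48 stacked blocks; the embeddings contribute only about 80 million.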
GitHub link: https://github.com/openai/gpt-2

Post Scriptum

In fact, the most striking thing about GPT-2 is its extremely strong generation ability, which comes mainly from its data quality, its huge number of parameters, and its data size. The number of parameters is so large that the model used in the experiments was still underfitting; with further training, the results could improve further.

Summing up the developments in Transformer-based work above, I have also compiled some personal thoughts on trends in deep learning:

1. Supervised models are moving toward semi-supervised or even unsupervised learning

Data is growing much faster than it can be annotated, which leaves large amounts of unlabeled data. This unlabeled data is not without value. On the contrary, with the right "alchemy" you can extract unexpected value from it. How to use unlabeled data to improve task performance has become an increasingly important question.

2. From complex models with little data to simple models with lots of data

The fitting ability of deep neural networks is very powerful, and in principle a simple neural network is enough to fit any function. However, completing the same task with a simpler network structure is harder and demands more data. As data volume and quality increase, the demands on the model tend to decrease. The more data there is, the easier it is for the model to capture features consistent with the real-world distribution. Word2Vec is an example: its objective function is very simple, but because it is trained on a large amount of text, the resulting word vectors contain many interesting features.

3. From specialized models to general models

GPT, BERT, MT-DNN, and GPT-2 all use a pre-trained general model for downstream machine learning tasks without many modifications to the model itself. If a model is expressive enough and is trained on enough data, it becomes more general and needs little modification for specific tasks. The most extreme case is GPT-2, which trains a general multi-task model without even knowing the downstream tasks during training.

4. Higher requirements for data scale and quality

Although GPT, BERT, MT-DNN, and GPT-2 have topped the leaderboards one after another, I think that larger data contributed more to the performance gains than structural changes did. As models become more general and simpler, more effort will shift from designing complex, specialized models to obtaining, cleaning, and refining large amounts of high-quality data. Adjusting the data processing will have a greater effect than adjusting the model structure.

To sum up, the DL competition will sooner or later become a competition among large companies for resources and computing power. New topics may emerge within a few years: green AI, low-carbon AI, sustainable AI, and so on.
