With the help of large AI models, Zhangyue Technology sets off a new wave of content generation

News on June 6: In recent years, the rapid development of artificial intelligence (AI) has driven transformation and innovation across industries. In the digital era, content creation has become an important way for companies and individuals to attract user attention and build influence online. To meet user needs and improve the efficiency and quality of content creation, Zhangyue Technology has been actively exploring applications of AI in content generation and has achieved notable results.

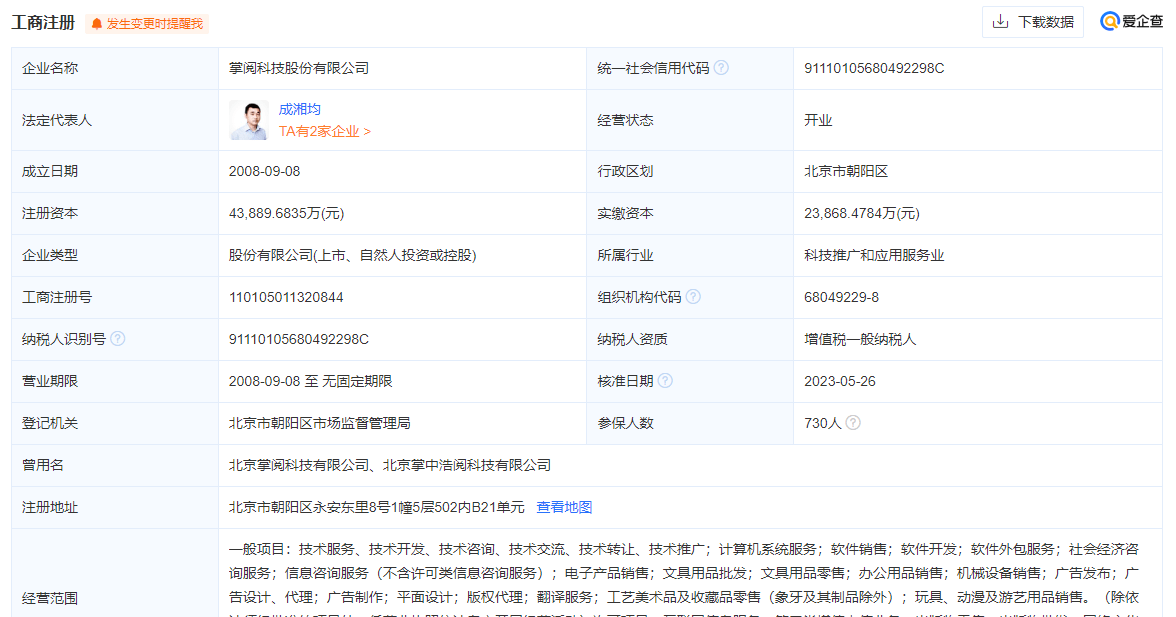

According to the Aiqicha app, Zhangyue Technology is a technology company focused on digital reading and content services, committed to improving the reading experience through technological innovation. Zhangyue Technology recently announced that it has officially integrated the mainstream large AI models on the market and is applying them to prompt engineering for content generation, offering a new experience to content creators and readers.

A large AI model is a deep-learning system that can imitate human writing styles and ways of expression. Trained on massive text corpora, such models can generate fluent, coherent, high-quality text, helping content creators quickly produce articles, short-video scripts, advertising copy and more.
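
Real large models use deep neural networks trained on enormous corpora, so nothing below reflects how they are actually built. As a deliberately tiny illustration of the underlying idea (learning from a corpus which words tend to follow which, then generating text one word at a time), here is a bigram word model in Python. The corpus and the function names `train_bigrams` and `generate` are hypothetical, and always picking the most frequent next word is a simplifying assumption.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus (toy stand-in for training)."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Greedily append the most frequent next word until no continuation exists."""
    words = [start.lower()]
    for _ in range(max_words - 1):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the corpus never showed any word after this one
        # most_common(1) returns [(word, count)]; ties keep first-seen order
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


if __name__ == "__main__":
    # Hypothetical three-sentence corpus for illustration only
    corpus = ["creators write stories", "creators write scripts", "readers enjoy stories"]
    print(generate(train_bigrams(corpus), "creators"))
```

On this corpus the call returns `"creators write stories"`. A large model replaces the word-count table with a neural network that conditions on far longer context, which is what lets it produce coherent paragraphs rather than short phrases.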

Zhangyue Technology's prompt engineering work relies mainly on large AI models for content generation. By applying these models at the initial prompting and drafting stages of the creative process, Zhangyue Technology can give creators a wealth of inspiration and creative directions, speeding up content production and improving its quality. This approach lets creators work more efficiently on content that meets user needs, while also improving the reading experience.
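
The article does not say which models Zhangyue Technology uses or how its prompts are written. The Python sketch below only illustrates the general two-stage pattern the paragraph describes: first ask a model for creative directions, then ask for a draft built on the direction a creator picks. The `Brief` fields, the prompt wording and the function names are all hypothetical, and sending the prompts to an actual model is left out because no specific model or API is named.

```python
from dataclasses import dataclass


@dataclass
class Brief:
    """Hypothetical creative brief supplied by a content creator."""
    content_type: str  # e.g. "short video script", "ad copy"
    topic: str
    audience: str
    tone: str
    max_words: int


def ideation_prompt(brief: Brief, n_ideas: int = 3) -> str:
    """Stage 1: ask the model for several creative directions, not finished text."""
    return (
        f"You are helping a writer plan a {brief.content_type} about {brief.topic}.\n"
        f"Target audience: {brief.audience}. Tone: {brief.tone}.\n"
        f"Propose {n_ideas} distinct creative directions, one per line, "
        "each under 25 words."
    )


def draft_prompt(brief: Brief, chosen_idea: str) -> str:
    """Stage 2: turn the direction the creator picked into a first draft."""
    return (
        f"Write a first draft of a {brief.content_type} about {brief.topic}.\n"
        f"Creative direction chosen by the writer: {chosen_idea}\n"
        f"Audience: {brief.audience}. Tone: {brief.tone}. "
        f"Length: at most {brief.max_words} words.\n"
        "Mark any factual claim the writer should verify with [CHECK]."
    )


if __name__ == "__main__":
    brief = Brief("short video script", "a new fantasy novel", "young adult readers", "playful", 150)
    print(ideation_prompt(brief))
```

Keeping the two stages separate leaves the creative decision with the human writer: the model proposes directions, the writer chooses one, and only then is a draft requested. The `[CHECK]` marker is one possible way to flag claims for human review, given the concerns about accuracy and originality discussed later in this article.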

Zhangyue Technology's move to integrate mainstream large AI models not only demonstrates its leading position in technological innovation but also brings new opportunities and challenges to the content creation industry as a whole. Large AI models are expected to substantially change both how content is created and how efficiently, allowing creators to better meet user needs and deliver personalized, targeted content.

Beyond improving the efficiency and quality of content generation, Zhangyue Technology's use of large AI models also helps drive innovation in the content field. AI gives creators more ideas and possibilities and encourages the emergence of innovative works. Large AI models can also support personalized recommendations and content promotion, helping companies deliver more precise marketing and user services.

However, applying large AI models also comes with challenges. First, training a model requires large amounts of data and computing resources, which places high demands on technology and infrastructure. Second, it remains an open question whether AI-generated content truly meets user needs, and how its originality and authority can be ensured. Finally, as AI technology continues to develop, the related legal, regulatory and ethical issues also need attention.

As a leader in the mobile reading industry, Zhangyue Technology should pay attention to data security, copyright protection and user privacy compliance when applying large AI models, and should establish sound oversight mechanisms and accountability systems. It should also work actively with relevant government departments and industry organizations to explore the development direction and application standards of AI technology, providing guidance and support for the healthy development of the industry.

By integrating mainstream large AI models and leveraging its strengths in content, Zhangyue Technology is setting off a new wave of content generation. Through these models, it can offer content creators a better creative experience and better tools, supporting development and innovation across the content creation industry. At the same time, this approach raises issues such as data security, copyright protection and ethics that must be thought through and resolved. Zhangyue Technology should lead the industry responsibly, promote the healthy development of AI technology, and open up new possibilities for content generation.

The above is the detailed content of With the help of large AI models, Zhangyue Technology sets off a new wave of content generation. For more information, please follow other related articles on the PHP Chinese website!
