Technology peripherals

Technology peripherals

AI

AI

The arithmetic ability is close to perfect score! The National University of Singapore releases Goat, which kills GPT-4 with only 7 billion parameters and initially supports 16-digit multiplication and division.

The arithmetic ability is close to perfect score! The National University of Singapore releases Goat, which kills GPT-4 with only 7 billion parameters and initially supports 16-digit multiplication and division.

The arithmetic ability is close to perfect score! The National University of Singapore releases Goat, which kills GPT-4 with only 7 billion parameters and initially supports 16-digit multiplication and division.

Although large-scale language models have shown superior performance in various natural language processing tasks, arithmetic questions are still a big difficulty, even for the most powerful GPT- 4 is also difficult to deal with basic arithmetic problems.

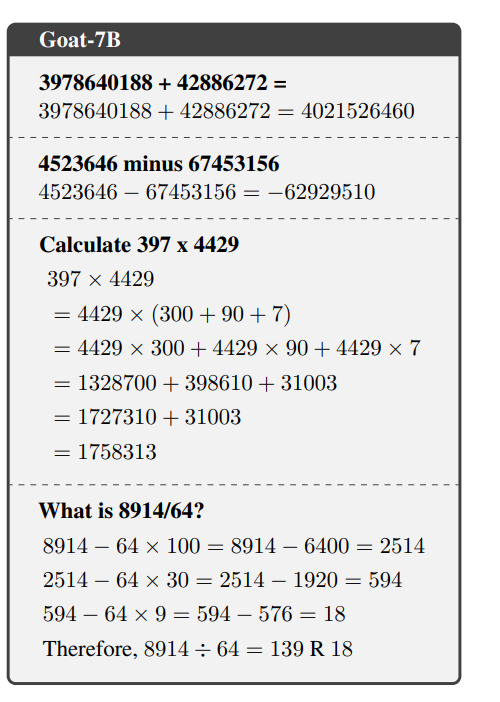

Recently, researchers from the National University of Singapore proposed Goat, a model dedicated to arithmetic. After fine-tuning on the basis of the LLaMA model, it achieved significantly better performance than GPT-4. Numeracy skills.

Paper link: https://arxiv.org/pdf/2305.14201.pdf

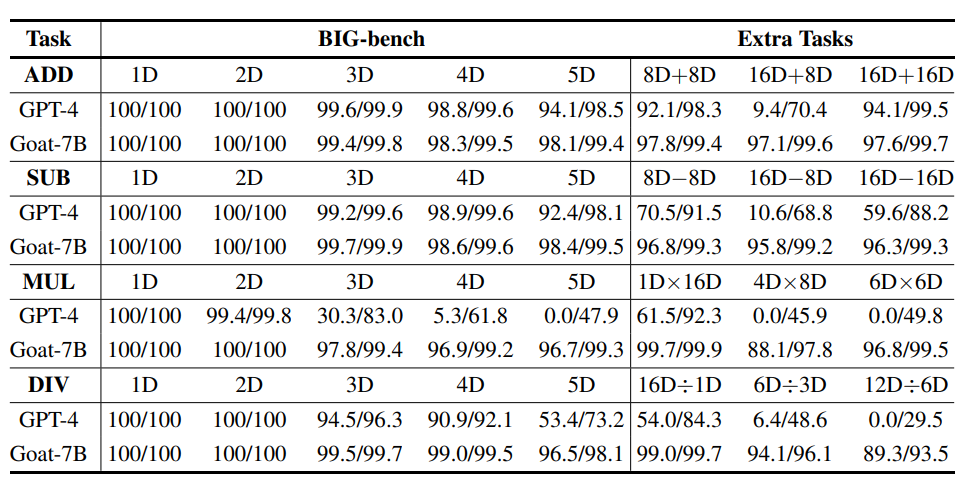

By fine-tuning a synthetic arithmetic dataset, Goat achieves state-of-the-art performance on the BIG-bench arithmetic subtask,

Goat Through supervised fine-tuning alone, it is possible to achieve near-perfect accuracy in large number addition and subtraction operations, surpassing all previous pre-trained language models, such as Bloom, OPT, GPT-NeoX, etc. Among them, the zero-sample Goat-7B achieved The accuracy even exceeds PaLM-540 after few-shot learning. The researchers attributed Goat's excellent performance to LLaMA's consistent word segmentation technology for numbers.

To solve more challenging tasks, such as large number multiplication and division, the researchers also proposed a method to classify tasks according to the learnability of arithmetic and then use Basic arithmetic principles break down non-learnable tasks, such as multi-digit multiplication and division, into a series of learnable tasks.

After comprehensive experimental verification, the decomposition steps proposed in the article can effectively improve arithmetic performance.

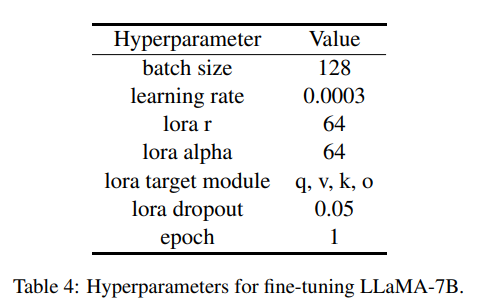

And Goat-7 B can be trained efficiently using LoRA on a 24 GB VRAM GPU, other researchers can repeat the experiment very easily, the model, the dataset and the python that generated the dataset The script will be open source soon.

Language model that can count

Language modelLLaMA It is a set of open source pre-trained language models that are trained on trillions of tokens using publicly available data sets and achieve state-of-the-art performance on multiple benchmarks.

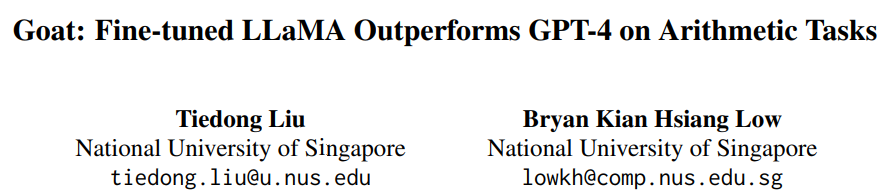

Previous research results show that tokenization is important for LLM’s arithmetic ability. However, commonly used tokenization techniques cannot represent numbers well. For example, numbers with too many digits may will be divided.

LLaMA chose to split the number into multiple tokens to ensure the consistency of digital representation. Researchers believe that the experiment The extraordinary arithmetic ability shown in the results is mainly due to LLaMA's consistent segmentation of numbers.

LLaMA chose to split the number into multiple tokens to ensure the consistency of digital representation. Researchers believe that the experiment The extraordinary arithmetic ability shown in the results is mainly due to LLaMA's consistent segmentation of numbers.

In experiments, other fine-tuned language models, such as Bloom, OPT, GPT-NeoX and Pythia, were unable to match LLaMA’s arithmetic capabilities.

Learnability of Arithmetic Tasks

Previously The researchers conducted a theoretical analysis of using intermediate supervision to solve composite tasks and showed that such tasks are not learnable but can be decomposed into a polynomial number of simple subtasks.

That is, unlearnable compound problems can be learned by using intermediate supervision or chain of steps (CoT).

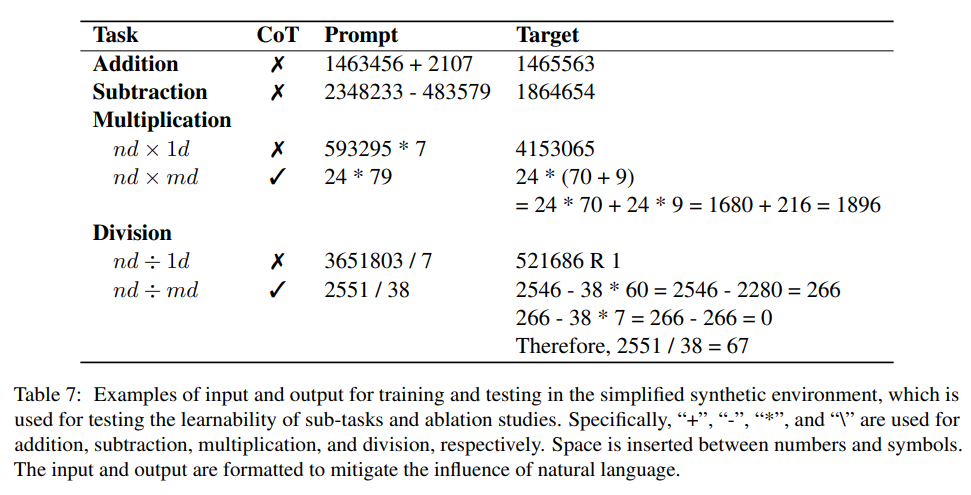

Based on this analysis, the researchers first experimentally classified learnable and non-learnable tasks.

In the context of arithmetic computing, learnable tasks generally refer to those tasks for which a model can be successfully trained to directly generate answers, thereby achieving a sufficiently high level within a predefined number of training epochs. Accuracy.

Non-learnable tasks are those for which a model has difficulty learning correctly and generating direct answers, even after extensive training.

While the exact reasons behind changes in task learnability are not fully understood, it can be hypothesized that it is related to the complexity of the underlying pattern and the size of working memory required to complete the task.

The researchers experimentally examined the feasibility of these tasks by fine-tuning the model specifically for each task in a simplified synthetic environment. Learning ability.

Learnable and non-learnable tasks

The results of task classification are also the same as human perception. Through practice, humans can calculate the addition and subtraction of two large numbers in their minds. Without hand calculation, they can directly go from left (most significant digit) to right. (least significant digit) Write the final numerical answer.

But mental arithmetic to solve multiplication and division of large numbers is a challenging task.

It can also be observed that the above classification results of the tasks are also consistent with the performance of GPT-4. In particular, GPT-4 is good at generating direct answers for large number addition and subtraction, and when it comes to Accuracy drops significantly when it comes to multi-bit multiplication and division tasks.

The inability of a powerful model like GPT-4 to directly solve non-learnable tasks may also indicate that generating direct answers for these tasks is extremely challenging even after extensive training .

It is worth noting that tasks that are learnable for LLaMA may not necessarily be learnable for other LLMs.

Additionally, not all tasks classified as unlearnable are completely impossible for the model to learn.

For example, multiplying a two-digit number by a two-digit number is considered a non-learnable task, but if the training set contains all possible 2-digit multiplication enumeration data, the model still Answers can be generated directly by overfitting the training set.

However, the entire process requires nearly 10 epochs to achieve an accuracy of about 90%.

By inserting the CoT proposed in the article before the final answer, the model can achieve quite good accuracy in two-digit multiplication after 1 epoch of training, which is also consistent with Previous studies have consistently concluded that the presence of intermediate supervision facilitates the learning process.

Addition and subtraction

#These two arithmetic operations are learnable, only through supervised fine-tuning, the model It demonstrates an extraordinary ability to accurately generate direct numerical answers.

Although the model was trained on a very limited subset of the additive data, this can be seen from the fact that the model achieved near-perfect accuracy on an unseen test set , the model successfully captures the basic patterns of arithmetic operations without using CoT

Multiplication

The researchers passed Experiments have verified that multiplication of n-digit numbers by one-digit number is learnable, but multi-digit multiplication cannot be learned.

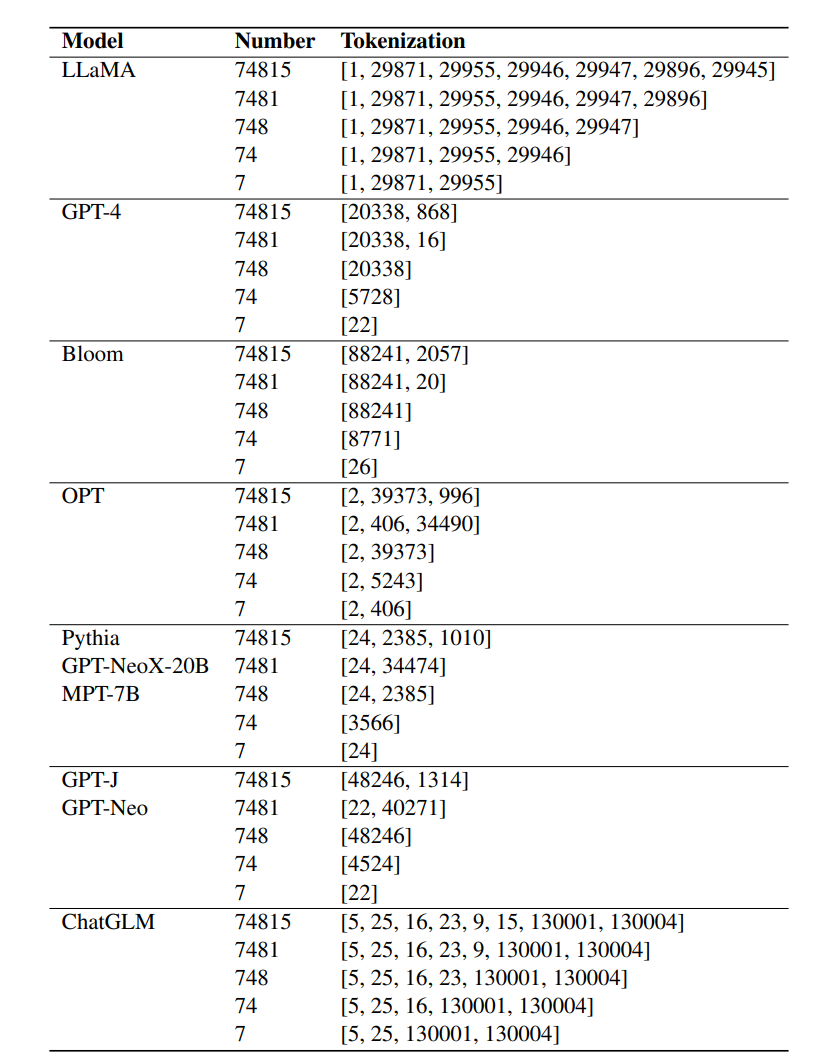

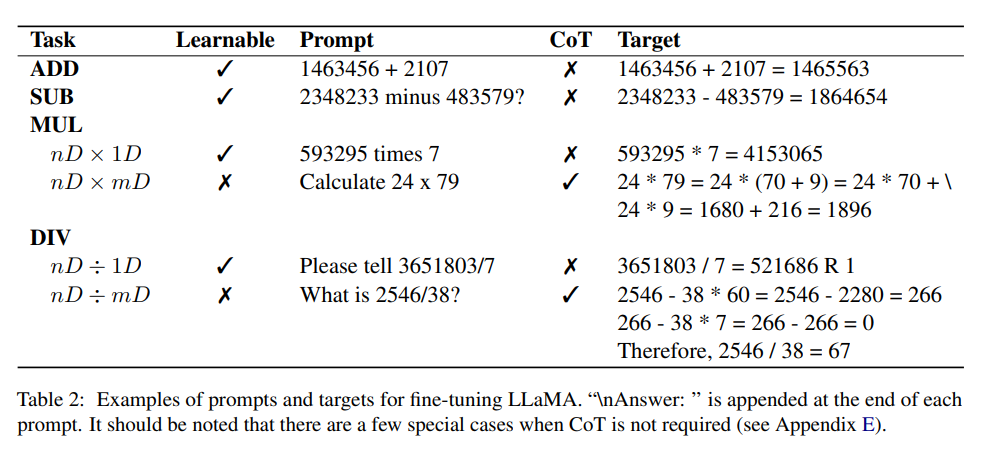

To overcome this problem, the researchers chose to fine-tune the LLM to generate CoT before generating the answer, breaking multi-digit multiplication into 5 learnable subtasks:

1. Extraction, extract arithmetic expressions from natural language instructions

2. Split, split the smaller of the two Small numbers are split into place values

3. Expansion, summation based on distributive expansion

4. Product, calculate each product simultaneously

5. Adding term by term, add the first two terms, copy the remaining terms, and get the final sum

Every mission is learnable.

Division

Similarly, it can be observed experimentally that dividing n digits by 1 digit can be learned , while multi-digit division is not learnable.

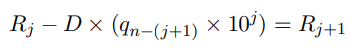

The researchers designed a new thinking chain prompt using the recursion equation that improves slow division.

The main idea is to subtract multiples of the divisor from the dividend until the remainder is less than the divisor.

Dataset

Design in the article The experiment is the addition and subtraction of two positive integers, each positive integer contains up to 16 digits, and the result of the subtraction operation may be a negative number.

In order to limit the maximum sequence length generated, the result of multiplication is a positive integer within 12 digits; in the division of two positive integers, the dividend is less than 12 digits, and the quotient is within 6 digits. .

The researchers used a Python script to synthesize a data set that generated approximately 1 million question-answer pairs. The answers contained the proposed CoT and the final numerical output, all of which were randomly generated. , which guarantees that the probability of duplicate instances is very low, but small numbers may be sampled multiple times.

Fine-tuning

To enable the model to solve arithmetic problems based on instructions and facilitate natural language question answering, the researchers Hundreds of instruction templates were generated using ChatGPT.

During the instruction tuning process, a template is randomly selected from the training set for each arithmetic input and LLaMA-7B is fine-tuned, similar to the method used in Alpaca.

Goat-7B can be fine-tuned using LoRA on a 24GB VRAM GPU and takes only about 1.5 hours to complete 100,000 samples on an A100 GPU fine-tuning and achieving near-perfect accuracy.

Experimental results

It seems unfair to compare the performance of Goat and GPT-4 on large multiplications and divisions, because GPT-4 generates answers directly, while Goat It relies on the design thinking chain, so when evaluating GPT-4, "Solve it step by step" is added at the end of each prompt

##However, it can be observed that although GPT-4 in some cases, the intermediate steps of long multiplication and division are wrong, the final answer is still correct, which means that GPT-4 does not use thinking Intermediate supervision of the chain to improve the final output.

Finally, the following 3 common errors were identified from the GPT-4 solution:

1. Alignment of corresponding numbers

2. Repeated numbers

3. The intermediate result of multiplying n digits by 1 digit is wrong

From the experiment It can be seen from the results that GPT-4 performs quite well on 8D 8D and 16D 16D tasks, but the calculation results on most 16D 8D tasks are wrong, although intuitively, 16D 8D should be relatively better than 16D 16D easy.

While the exact cause of this is unclear, one possible factor could be GPT-4's inconsistent number tokenization process, making it difficult to align between two numbers.

The above is the detailed content of The arithmetic ability is close to perfect score! The National University of Singapore releases Goat, which kills GPT-4 with only 7 billion parameters and initially supports 16-digit multiplication and division.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1390

1390

52

52

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

The world's most powerful open source MoE model is here, with Chinese capabilities comparable to GPT-4, and the price is only nearly one percent of GPT-4-Turbo

May 07, 2024 pm 04:13 PM

Imagine an artificial intelligence model that not only has the ability to surpass traditional computing, but also achieves more efficient performance at a lower cost. This is not science fiction, DeepSeek-V2[1], the world’s most powerful open source MoE model is here. DeepSeek-V2 is a powerful mixture of experts (MoE) language model with the characteristics of economical training and efficient inference. It consists of 236B parameters, 21B of which are used to activate each marker. Compared with DeepSeek67B, DeepSeek-V2 has stronger performance, while saving 42.5% of training costs, reducing KV cache by 93.3%, and increasing the maximum generation throughput to 5.76 times. DeepSeek is a company exploring general artificial intelligence

AI subverts mathematical research! Fields Medal winner and Chinese-American mathematician led 11 top-ranked papers | Liked by Terence Tao

Apr 09, 2024 am 11:52 AM

AI subverts mathematical research! Fields Medal winner and Chinese-American mathematician led 11 top-ranked papers | Liked by Terence Tao

Apr 09, 2024 am 11:52 AM

AI is indeed changing mathematics. Recently, Tao Zhexuan, who has been paying close attention to this issue, forwarded the latest issue of "Bulletin of the American Mathematical Society" (Bulletin of the American Mathematical Society). Focusing on the topic "Will machines change mathematics?", many mathematicians expressed their opinions. The whole process was full of sparks, hardcore and exciting. The author has a strong lineup, including Fields Medal winner Akshay Venkatesh, Chinese mathematician Zheng Lejun, NYU computer scientist Ernest Davis and many other well-known scholars in the industry. The world of AI has changed dramatically. You know, many of these articles were submitted a year ago.

Google is ecstatic: JAX performance surpasses Pytorch and TensorFlow! It may become the fastest choice for GPU inference training

Apr 01, 2024 pm 07:46 PM

Google is ecstatic: JAX performance surpasses Pytorch and TensorFlow! It may become the fastest choice for GPU inference training

Apr 01, 2024 pm 07:46 PM

The performance of JAX, promoted by Google, has surpassed that of Pytorch and TensorFlow in recent benchmark tests, ranking first in 7 indicators. And the test was not done on the TPU with the best JAX performance. Although among developers, Pytorch is still more popular than Tensorflow. But in the future, perhaps more large models will be trained and run based on the JAX platform. Models Recently, the Keras team benchmarked three backends (TensorFlow, JAX, PyTorch) with the native PyTorch implementation and Keras2 with TensorFlow. First, they select a set of mainstream

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Hello, electric Atlas! Boston Dynamics robot comes back to life, 180-degree weird moves scare Musk

Apr 18, 2024 pm 07:58 PM

Boston Dynamics Atlas officially enters the era of electric robots! Yesterday, the hydraulic Atlas just "tearfully" withdrew from the stage of history. Today, Boston Dynamics announced that the electric Atlas is on the job. It seems that in the field of commercial humanoid robots, Boston Dynamics is determined to compete with Tesla. After the new video was released, it had already been viewed by more than one million people in just ten hours. The old people leave and new roles appear. This is a historical necessity. There is no doubt that this year is the explosive year of humanoid robots. Netizens commented: The advancement of robots has made this year's opening ceremony look like a human, and the degree of freedom is far greater than that of humans. But is this really not a horror movie? At the beginning of the video, Atlas is lying calmly on the ground, seemingly on his back. What follows is jaw-dropping

KAN, which replaces MLP, has been extended to convolution by open source projects

Jun 01, 2024 pm 10:03 PM

KAN, which replaces MLP, has been extended to convolution by open source projects

Jun 01, 2024 pm 10:03 PM

Earlier this month, researchers from MIT and other institutions proposed a very promising alternative to MLP - KAN. KAN outperforms MLP in terms of accuracy and interpretability. And it can outperform MLP running with a larger number of parameters with a very small number of parameters. For example, the authors stated that they used KAN to reproduce DeepMind's results with a smaller network and a higher degree of automation. Specifically, DeepMind's MLP has about 300,000 parameters, while KAN only has about 200 parameters. KAN has a strong mathematical foundation like MLP. MLP is based on the universal approximation theorem, while KAN is based on the Kolmogorov-Arnold representation theorem. As shown in the figure below, KAN has

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

Target detection is a relatively mature problem in autonomous driving systems, among which pedestrian detection is one of the earliest algorithms to be deployed. Very comprehensive research has been carried out in most papers. However, distance perception using fisheye cameras for surround view is relatively less studied. Due to large radial distortion, standard bounding box representation is difficult to implement in fisheye cameras. To alleviate the above description, we explore extended bounding box, ellipse, and general polygon designs into polar/angular representations and define an instance segmentation mIOU metric to analyze these representations. The proposed model fisheyeDetNet with polygonal shape outperforms other models and simultaneously achieves 49.5% mAP on the Valeo fisheye camera dataset for autonomous driving

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

The latest video of Tesla's robot Optimus is released, and it can already work in the factory. At normal speed, it sorts batteries (Tesla's 4680 batteries) like this: The official also released what it looks like at 20x speed - on a small "workstation", picking and picking and picking: This time it is released One of the highlights of the video is that Optimus completes this work in the factory, completely autonomously, without human intervention throughout the process. And from the perspective of Optimus, it can also pick up and place the crooked battery, focusing on automatic error correction: Regarding Optimus's hand, NVIDIA scientist Jim Fan gave a high evaluation: Optimus's hand is the world's five-fingered robot. One of the most dexterous. Its hands are not only tactile

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

Project link written in front: https://nianticlabs.github.io/mickey/ Given two pictures, the camera pose between them can be estimated by establishing the correspondence between the pictures. Typically, these correspondences are 2D to 2D, and our estimated poses are scale-indeterminate. Some applications, such as instant augmented reality anytime, anywhere, require pose estimation of scale metrics, so they rely on external depth estimators to recover scale. This paper proposes MicKey, a keypoint matching process capable of predicting metric correspondences in 3D camera space. By learning 3D coordinate matching across images, we are able to infer metric relative