Apple's Vision Pro AR headset is here! Nonstop cheers at the venue, first hands-on photos, and a conversation with Cook

Author | Zhidongxi Editorial Department

Today, Apple reignited the tech world's enthusiasm with Apple Vision Pro, its “first spatial computing device”!

Throughout the keynote, Apple never said whether Vision Pro should be called a head-mounted display or glasses, or whether it counts as AR or VR.

This suggests that Apple does not necessarily accept the industry's current terminology. Apple may also believe these concepts cannot accurately define Vision Pro, which is why it introduced the idea of "the first spatial computing platform". Either way, Apple has carved out a new category. For simplicity, we will call it an AR headset in what follows.

Back to the event itself: Apple Park once again gathered fans from all over the world to witness Apple's new “iPhone moment”. The venue was packed early and buzzing with energy.

Apple set up a stage on its campus and once again held WWDC23 as a combination of an online livestream and an in-person event. In the pre-recorded keynote video, our old friend Tim Cook opened the show in his trademark short-sleeved shirt.

After all the software and hardware announcements, about 1 hour and 20 minutes into the keynote, Cook reappeared for the "One more thing" segment and announced Vision Pro, Apple's first spatial computing device.

After three years of anticipation, Apple has finally unveiled its first AR headset. "This is a revolutionary product," Cook said, and it is by far the most representative product of the Cook era.

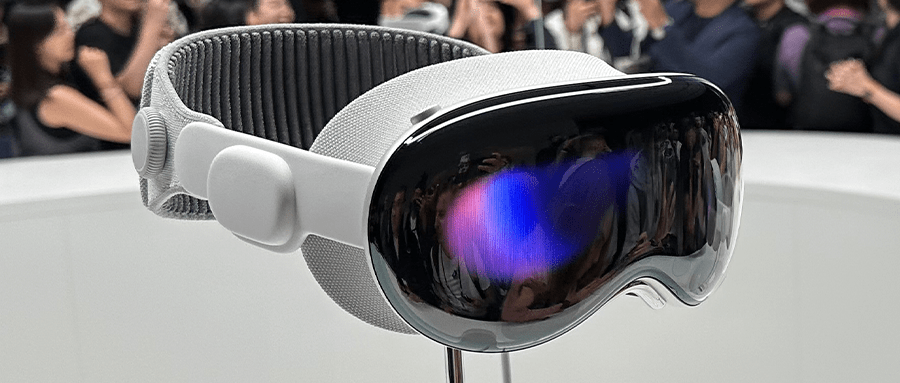

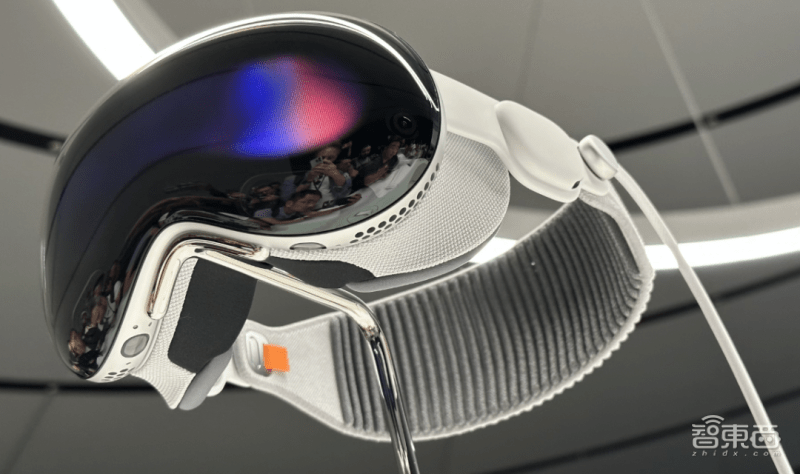

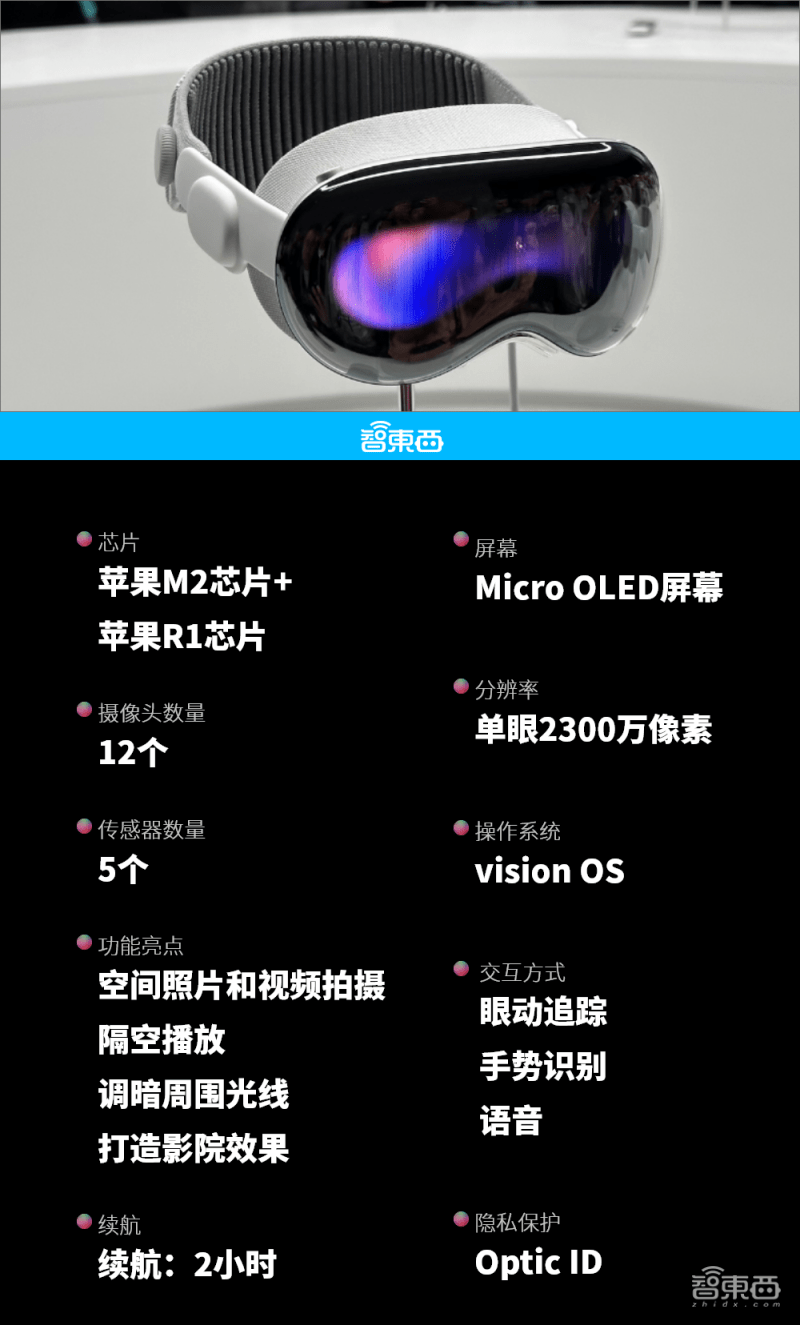

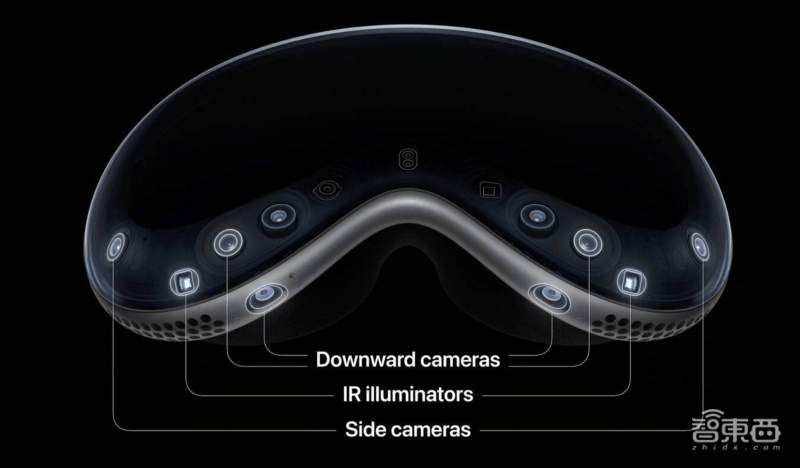

The Apple Vision Pro headset consists of a double-sided display (an outward-facing screen plus the internal displays), a portable battery, a headband, a light seal, and other components. It uses a dual-chip design built around the M2 and the newly debuted R1 chip, and carries 12 cameras, 5 sensors, and 6 microphones.

Vision Pro supports eye-tracking, voice, and gesture interaction, making it one of the few headsets in the industry that does away with handheld controllers. In terms of scenarios, Apple mainly focuses on four areas: video, work, gaming, and the home.

Notably, the "Pro" in the name marks this as a high-end headset, and its price of US$3,499 (about RMB 25,000) reflects that positioning. Perhaps at the next WWDC we will see a more affordable "consumer version" of the Vision headset.

Apple also released a 15-inch MacBook Air, the M2 Ultra chip, and new Mac Studio and Mac Pro models powered by M2 Ultra. With 134 billion transistors, M2 Ultra is the most powerful chip Apple has ever built for a personal computer, and Apple's in-house silicon now officially spans the entire Mac lineup. The "long-rumored" 15-inch MacBook Air not only has a larger screen but also keeps the "notch".

Without further ado, here are the highlights of the most important product, Vision Pro:

Apple M2 chip and Apple R1 chip

Micro-OLED displays with a total of 23 million pixels, more than 4K resolution per eye

12 cameras, 5 sensors

Custom three-element lenses for sharpness from every angle

Aviation-grade aluminum alloy frame; the front is a single piece of three-dimensionally formed laminated glass

visionOS, an operating system specially built for spatial computing

Hundreds of thousands of iPhone and iPad apps can be used directly

3D Photo and Video Shooting

AI-generated personal avatar that mimics facial expressions and hand movements

Viewing on a virtual giant screen of over 100 inches

More than 5,000 technology patents

Iris recognition (Optic ID)

Spatial Audio

▲Top Ten Highlights of Apple Vision Pro (chart: Zhidongxi)

Zhidongxi got up close with the first batch of real Vision Pro units at the launch site and had a warm exchange with our old friend, Apple CEO Tim Cook.

Zhang Guoren, editor-in-chief of Zhidongxi, who watched the launch at Apple's headquarters and saw Apple Vision Pro up close, notes that before the release many in the industry speculated that Apple Vision Pro would be a product for a niche or business (B2B) market, and an expensive one, at around the $3,000 level.

Judging by the result, this guess came close on price but was far off on positioning. The scenarios Apple showcased make it clear that Vision Pro is an out-and-out consumer product.

Apple Vision Pro's use cases cover at least movies, gaming, work, and the home, and it can also serve commercial markets. Still, this Vision Pro is a product that stacks up cutting-edge technology. As software and hardware keep maturing and technology costs fall, once a Vision product at the $1,000 (or RMB 10,000) level appears, the AR headset market is bound to truly take off.

From this perspective, the market trajectory of spatial computing devices led by Apple Vision Pro may not resemble the iPhone's runaway start but rather Tesla's high-profile playbook: first build top-tier electric cars, then follow with mass-market models (Model 3/Y). We hope this path will take less time.

Next, Zhidongxi walks you through the most noteworthy software and hardware upgrades of WWDC23 in one article, giving you the fastest and most complete take on this tech feast that has the whole world watching.

1. Apple Vision Pro redefines the headset: one system, two chips, three major functions, four major scenarios

After three years, we finally heard "One More Thing" again. As expected, it was Apple's first AR headset, Apple Vision Pro, priced at US$3,499 and expected to ship next year.

"Just as the Mac brought us into the era of personal computing and the iPhone brought us into the era of mobile computing, Vision Pro brings us into the era of spatial computing." With these words from Cook, the door to a new era of information interaction is slowly opening to everyone.

In fact, since as early as 2020, Qualcomm, another giant driving AR forward, has repeatedly said that spatial computing will be the focus of the next information era. That Apple and Qualcomm share this view points the way for the future of computing.

Around Vision Pro, Apple has introduced a string of firsts: Vision Pro is Apple's first 3D camera, visionOS is Apple's first operating system built for spatial computing, and R1 opens a new chip series, among others.

This time, Apple has once again "redefined" what a headset should be through one system, two chips, three major functions, and four major scenarios.

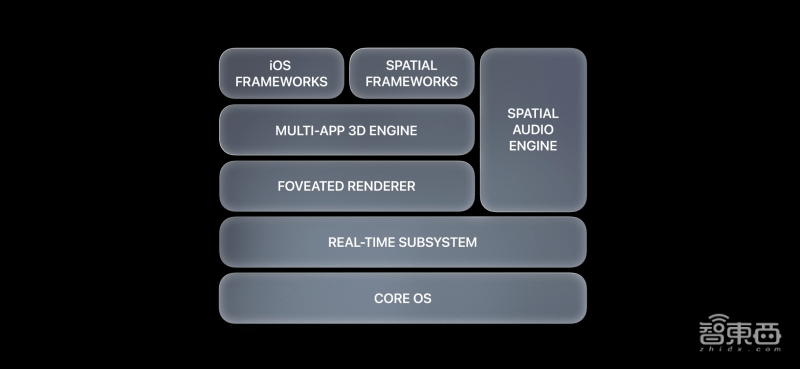

1. One system, visionOS: the first operating system built for spatial computing

visionOS is Apple's first operating system designed for spatial computing. It builds on innovations from Apple's three major platforms, macOS, iOS, and iPadOS, and supports 3D content engines.

2. Two chips, M2 and R1: R1 is purpose-built for real-time sensor processing

The Vision Pro headset uses a dual-chip design built around two key chips, M2 and R1. R1 is a chip Apple created specifically for the headset, and it launches a new chip series following the A, S, and M series.

The R1 chip is dedicated to processing real-time sensor input: it handles data from 12 cameras, 5 sensors, and 6 microphones, and streams new images to the displays within 12 milliseconds.

3. Three major functions: EyeSight, virtual digital humans, and spatial audio

EyeSight: the display comes alive when someone approaches, so using a headset no longer means "isolation"

Apple's approach to design is to make the product serve the design philosophy. In other words, once Apple decided that its AR headset must not create a sense of isolation between people, every product idea and design decision bent in that direction.

With EyeSight, whatever environment the user is in, as soon as someone approaches, the headset's outward-facing display automatically shows the wearer's eyes, restoring a "face" to the wearer and making conversation feel less strange.

Virtual digital human: instantly recreate “yourself”

Using multiple sensors and cameras on the front of the headset, Apple can instantly create a virtual digital version of the user, and this digital human has a sense of depth and volume.

Spatial audio: adapts to different spaces

The Vision Pro headset packs many refreshing new features. With audio ray tracing, it adapts its sound to different spaces to deliver better audio.

4. Four major scenarios: work, home, gaming, and movie watching

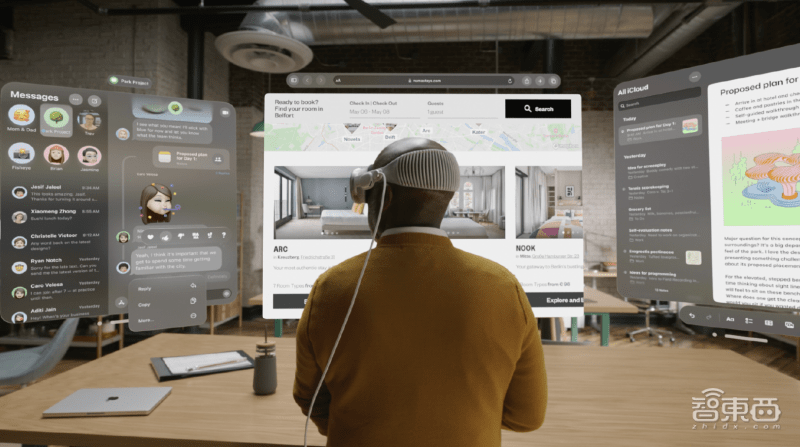

At the press conference, Apple introduced four application scenarios for AR headsets: work, home, gaming, and movie viewing.

If the functions above make you admire Apple's product design, these four application scenarios will make you want to buy one.

One of the most attractive of these is movie viewing:

Apple makes the most of spatial video on two fronts: capture and playback. For capture, the AR headset is Apple's first 3D camera and can capture spatial information in 360 degrees. Beyond spatial video, Vision Pro also supports capturing spatial Live Photos, redefining the way people record their lives.

For playback, Apple supports downloaded spatial videos as well as spatial videos you shoot yourself. When you open a panorama on the headset, it wraps around you.

In work scenarios, Apple focuses on large-screen productivity, collaboration, and everyday office software.

As many had predicted, common apps on iPhone and iPad, such as Notes and web browsing, have all been brought to Apple's AR headset.

Like Chinese VR and AR devices such as PICO and Nreal, Vision Pro focuses on an "infinite screen": multiple windows can be displayed at once, and users can freely resize each one.

Apple is also linking the AR headset with iPhones and Macs. According to Apple, when you open a Mac while wearing the headset, you only need to glance at it and the Mac's screen will automatically appear in the headset.

In home scenarios, Apple mainly focuses on audio, adjusting the listening experience to different spaces in the home.

Gaming has never been Apple's strength, but at this keynote Apple spent a few sentences explaining that the AR headset works with various game controllers and emphasizes big-screen gaming.

Apple also shared some basic specifications: Vision Pro's displays total 23 million pixels, giving each eye more than 4K resolution.

This double-sided display integrates multiple sensors and cameras. It is the most critical piece of hardware in Apple's AR headset and the foundation for a series of interactive functions.

For interaction, Vision Pro supports eye-tracking, voice, and gesture input, similar to most current high-end headsets such as PICO 4 Pro and Meta Quest Pro.

As for battery life, Apple says the headset can be used all day when plugged into power, and for up to 2 hours on its external battery. That is roughly on par with most headsets currently on the market.

2. Apple's transition to in-house chips is officially complete! The 134-billion-transistor M2 Ultra monster arrives

Although today's focus is Apple Vision Pro, the M2 Ultra chip and the two new Mac products are also noteworthy, and Apple introduced them first.

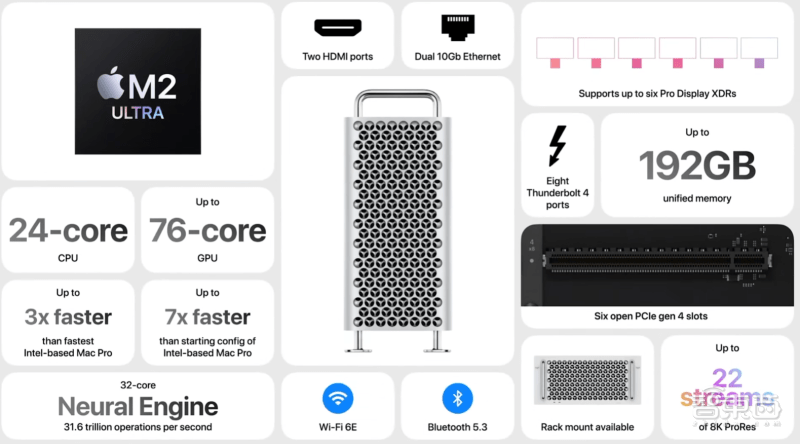

Today, Apple officially completed the Mac's transition to its own chips with the release of a new Mac Pro powered by M2 Ultra.

Apple's in-house chip empire now officially covers the entire Mac lineup.

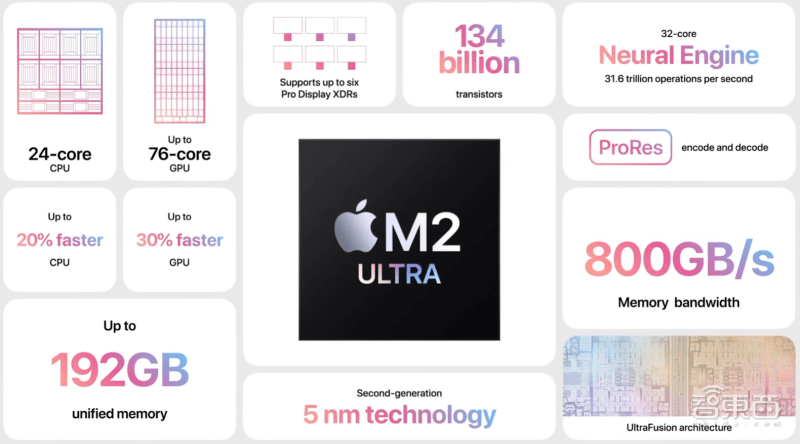

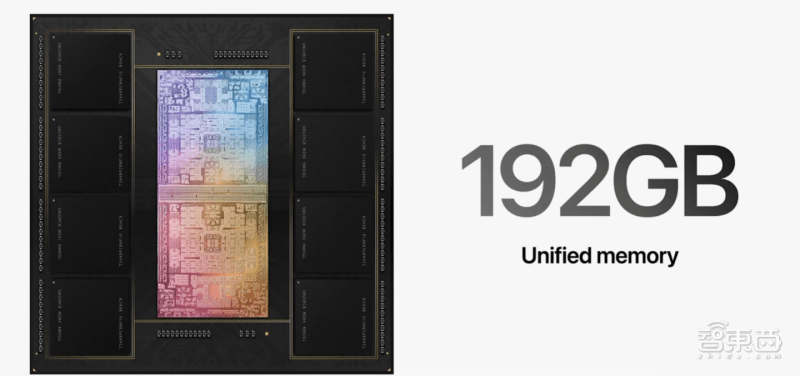

That's right, the "super-sized" member of Apple's M2 family is finally here. M2 Ultra is the most powerful chip Apple has ever made for a personal computer. Simply put, it uses "UltraFusion" technology to "glue" two M2 Max chips together.

M2 Ultra packs a formidable 24-core CPU and 76-core GPU, with CPU performance up to 20% faster and GPU performance up to 30% faster than M1 Ultra. Yet the most surprising part is not the core count: M2 Ultra supports up to 192GB of unified memory with up to 800GB/s of memory bandwidth. Its 32-core Neural Engine delivers 31.6 TOPS.

In addition, M2 Ultra has 134 billion transistors. You heard that right: it has entered the "hundred-billion" class, the ceiling of today's personal computer processors. It is still built on TSMC's second-generation 5nm process.
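Because M2 Ultra is described as two M2 Max chips joined by UltraFusion, most of its headline figures are simply twice the M2 Max's. The short Python sketch below checks that arithmetic. The M2 Max numbers in it (12 CPU cores, 38 GPU cores, a 16-core Neural Engine at 15.8 TOPS, 96GB of memory, 400GB/s, 67 billion transistors) are Apple's published top-configuration specs entered by hand as an assumption, not figures from this article, and the `fuse` helper is purely illustrative.

```python
# Assumed M2 Max top-configuration specs (entered by hand, not from this article).
M2_MAX = {
    "cpu_cores": 12,
    "gpu_cores": 38,
    "neural_engine_cores": 16,
    "neural_engine_tops": 15.8,
    "max_unified_memory_gb": 96,
    "memory_bandwidth_gb_per_s": 400,
    "transistors_billion": 67,
}


def fuse(die: dict[str, float], count: int = 2) -> dict[str, float]:
    """Hypothetical helper: scale every per-die figure by the number of dies joined."""
    return {key: value * count for key, value in die.items()}


m2_ultra = fuse(M2_MAX)
for key, value in m2_ultra.items():
    print(f"{key}: {value:g}")
```

The doubling only holds for counts and capacities. Real-world performance does not simply double, and the 20% CPU and 30% GPU figures above are generational comparisons with M1 Ultra rather than a result of this arithmetic.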

Apple has put M2 Ultra into both the new Mac Studio and the new Mac Pro, and thanks to the chip, both products see substantial performance gains across the board. For example, Apple says the Mac Studio with M2 Ultra is up to 6 times faster than the Intel-based iMac.

Apple specifically noted that M2 Ultra excels at large-scale machine learning tasks, outperforming even some discrete graphics cards, mainly thanks to the unified memory architecture of Apple's M-series chips.

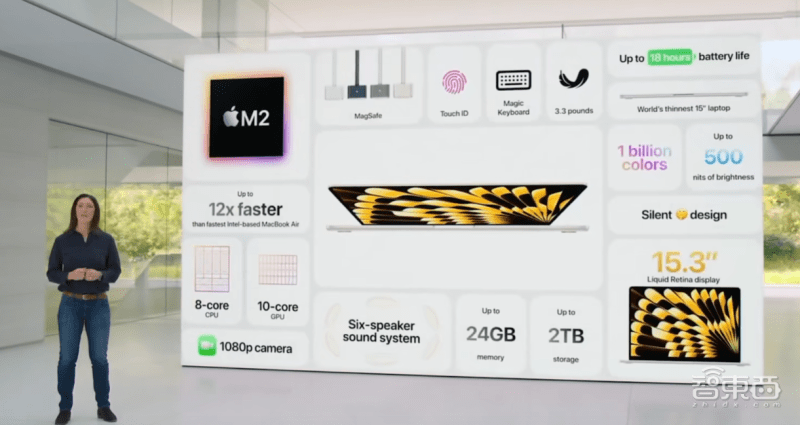

Besides the two new desktop Macs, Apple also officially released the "long-rumored" 15-inch MacBook Air. The screen is bigger, and the "notch" remains.

Despite the larger screen, the MacBook Air is the world's thinnest 15-inch laptop, weighs only 3.3 pounds, and offers up to 18 hours of battery life.

The 15-inch MacBook Air uses the Apple M2 chip and is up to 12 times faster than Intel-based MacBook Air models, with storage configurable up to 2TB.

The new MacBook Air's notched display reaches 500 nits of peak brightness, with bezels as narrow as 5 mm.

Overall, M2 Ultra is undoubtedly Apple's "trump card" chip this time, while the updates to the other Mac lines are comparatively routine upgrades.

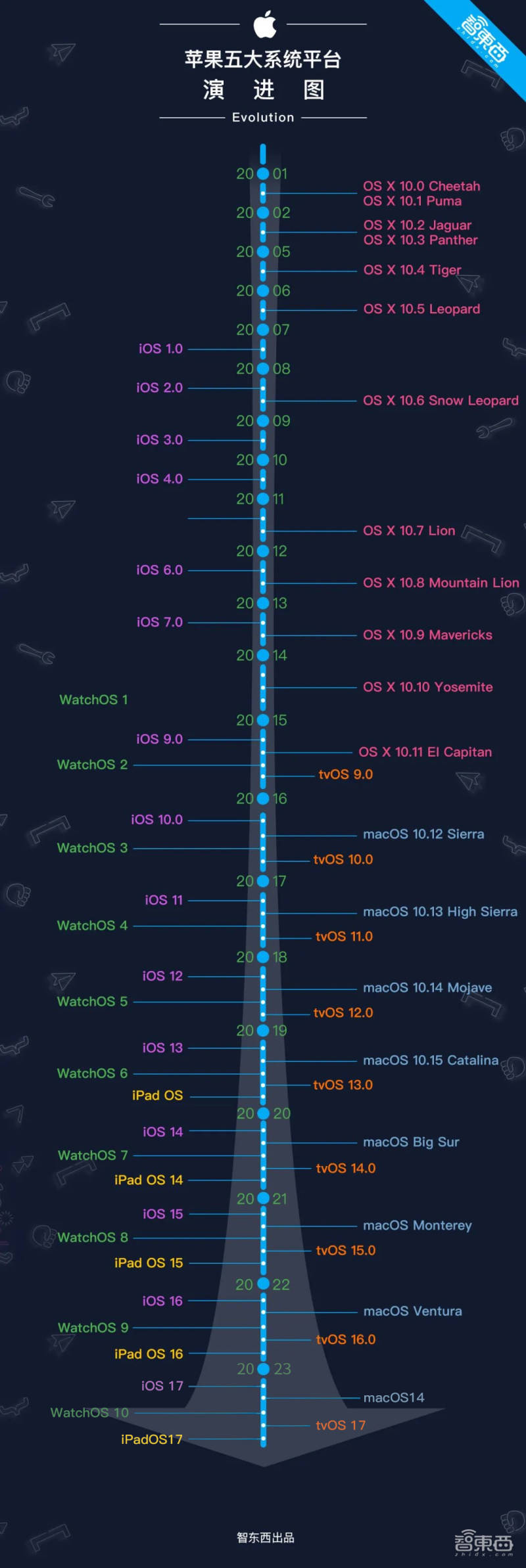

3. Five major software platforms upgraded, focusing on AI, personalization, natural interaction, and health

Next to Apple Vision Pro and M2 Ultra, Apple's upgrades to its five other major systems seem a bit underwhelming.

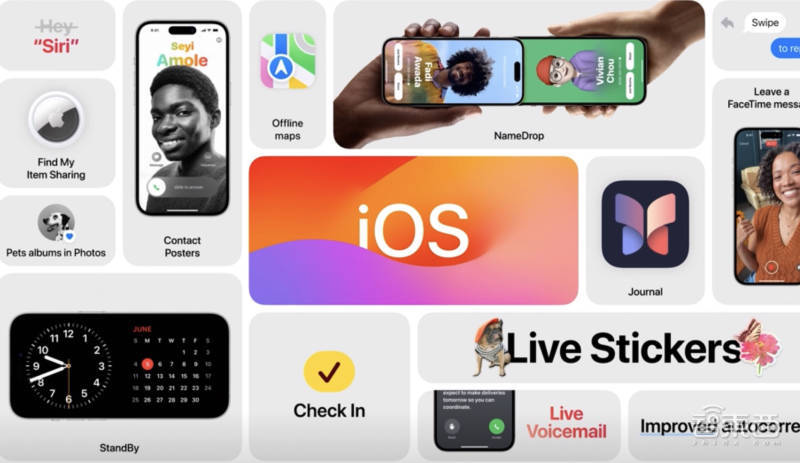

1. iOS 17: three major app upgrades, and no more "Hey" when calling Siri

The iOS 17 upgrade mainly focuses on communication, with upgrades to three major apps: Phone, Messages, and FaceTime.

The upgraded Phone app is no longer limited to a single call background: users can design their own call screen and even customize a different contact card for each friend. The new Phone app also transcribes voice messages into text, helping users decide whether to pick up.

Messages adds features such as catch-up and Check In. With Check In, you can let your friends know that you have arrived home safely.

FaceTime and the keyboard also received some minor upgrades, and the wake phrase for Siri has changed from "Hey Siri" to simply "Siri".

AirDrop has also been upgraded with a feature Apple calls NameDrop: bring two iPhones close together to exchange contact cards directly. AirDrop is also no longer limited by distance: once a transfer starts, it can finish even after the two people move apart.

iOS 17 also adds a new Journal app, which draws on what happened on the user's phone that day to suggest highlights, helping users record each day and each journey. The Journal app can also "remind" users to write every day, encouraging them to create.

2. iPadOS 17: all in on widgets, plus custom Live Photo "dynamic wallpapers"

One standout impression of iPadOS 17 is that Apple is fixated on widgets this year, on both the phone and the tablet.

In iPadOS 17, widgets are interactive: you can perform an app's functions directly from its widget on the home screen without opening the app, such as turning on lights or playing music.

Simply put, it reduces the number of steps users need to reach information.

OPPO, vivo, Xiaomi, and other Android users may see this and think, "That's it?" Indeed, Android has offered this for many generations.

Beyond widgets, personalization is another key upgrade in iPadOS 17. For example, you can customize the lock screen with a photo you like.

Notably, with the help of AI, multiple frames can be blended into a dynamic wallpaper, much like a Live Photo.

On the lock screen, iPadOS 17 adds Live Activities, letting you check flight information, track a food delivery, follow the scores of games you care about, or keep an eye on timers.

Apple has also brought the Health app to iPadOS 17 for the first time. The larger screen shows more detail and more health information, and the Health app can pull in data from third-party apps. In addition, HealthKit makes it easier for developers to build health features.

Apple also highlighted PDF support in the Notes app, especially for collaboration: multiple users can edit the same PDF, and changes sync in real time.

Overall, iPadOS 17 is still a refinement of existing features, with personalization, AI, and health as the keywords.

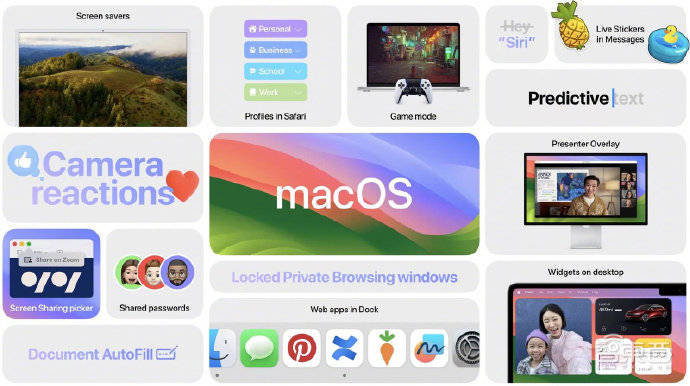

3. macOS Sonoma: a dedicated Game Mode and more convenient widgets

The new name of macOS this time is macOS Sonoma. Sonoma is a county in California, USA.

Widgets are also among the upgrades in macOS Sonoma. Mac widgets used to live only in Notification Center, but now they can be placed anywhere on the desktop, and their colors adapt to the wallpaper behind them.

Gaming has never been the Mac's strong point. This time macOS adds Game Mode, which gives games priority on the CPU and GPU and reduces latency for game accessories.

Japanese game designer Hideo Kojima, creator of the well-known game "Death Stranding", also appeared at the event. A Mac version of the game is on the way.

For video conferencing, macOS adds video overlay effects: the presenter's video can appear at various places on the screen, and Apple also provides interactive reactions, such as setting off fireworks behind you.

Safari can also turn a web page into a lightweight app that can be saved to the Dock with one click, so users can open it directly from the Dock.

4. watchOS 10: caring for your mental health as well as your physical health

watchOS 10 brings a redesigned interface, adds a Snoopy-themed watch face and a portrait watch face, and introduces the Smart Stack.

By scrolling, users can quickly get information from the Smart Stack, such as starting timers or checking workouts.

Apps in watchOS 10 have also changed. For example, the World Clock app's background changes with the time of day.

For cycling enthusiasts, Apple Watch can calculate Functional Threshold Power (FTP), the highest level of effort a rider can sustain for about an hour. Users can view their workout efforts and results directly.

For hikers, Apple Watch can add SOS waypoints to the hiking route map, helping you send an emergency distress signal in time to stay safe.

Where Apple Watch previously focused on covering more types of exercise, it now pays more attention to providing professional analysis for each one.

This time, Apple Watch cares not only about physical health but also about mental health. It helps users assess their state with a questionnaire and, combined with related data, alerts them to changes in their mood.

5. Audio and video upgrades: AI that “learns the user” becomes an important trend

For audio and video, Apple introduced Adaptive Audio for AirPods. Simply put, the earbuds adjust sound and noise cancellation based on the user's listening habits and the surrounding environment.

For example, when we talk to someone, the earbuds reduce the sound coming from both sides and boost the sound from in front. When we take a call, Adaptive Audio reduces surrounding noise for better call quality.

On the audio side, Apple's HomePod can learn user habits. For example, once it learns that you often listen to music in the kitchen, HomePod will automatically connect to your iPhone as soon as you walk into the kitchen, making connection more convenient.

Oh, and by the way, when you call Siri on HomePod in the future, you no longer need to say "Hey": you can just say "Siri" (this is an important change, and it will be on the test).

In addition, Apple has also improved the smoothness of the connection between the iPhone and the car system in CarPlay.

tvOS 17 mainly refreshes the interface this time, making it simpler. Users can also turn photos into animated TV screensavers.

FaceTime is also coming to Apple TV, so family members can sit in the living room and make video calls together. Video calls on the iPhone can also be handed off to Apple TV to continue.

On audio and video, Apple is clearly using a range of AI and underlying communication technologies to make device use feel more seamless and interactions with smart devices more natural. Although Apple did not announce anything related to large models, AI is ubiquitous across Apple devices.

Conclusion: The era of spatial computing is here

At tonight's keynote, Apple Vision Pro was undoubtedly the centerpiece. Across design, functionality, performance, display, audio, interaction, and applications, it delivers features and breakthroughs that push past the ceiling of the existing industry. Although the industry had been full of speculation beforehand, Apple Vision Pro still brought plenty of surprises, and it is bound to have a profound impact on the VR and AR industries.

By contrast, many of tonight's system updates are "optimized iterations" of existing features, while AI is used more and more across Apple's systems. With M2 Ultra and the new Mac Pro, Apple has also officially completed its transition to in-house chips.

As Cook said, Apple Vision Pro is just the beginning. Although Apple is not the first company to enter VR and AR, its series of technological innovations will undoubtedly make it an industry leader in the era of spatial computing. What new features will future Apple products bring? What waves will Apple's first AR product make in the industry? What key technologies lie behind the 5,000 patents in this product? Zhidongxi will keep digging, so stay tuned.
