Sterling Crispin, a former Apple AR engineer who worked on Vision Pro, has published a post describing his work at Apple. According to the post, Apple Vision Pro incorporates a large number of machine learning technologies, including AI models that predict your bodily and mental state, such as whether you are curious, whether your mind is wandering, or whether you are paying attention.

All of this is measured and inferred from the user's eye movements, heart rate, muscle activity, blood pressure, blood density in the brain, and other data.

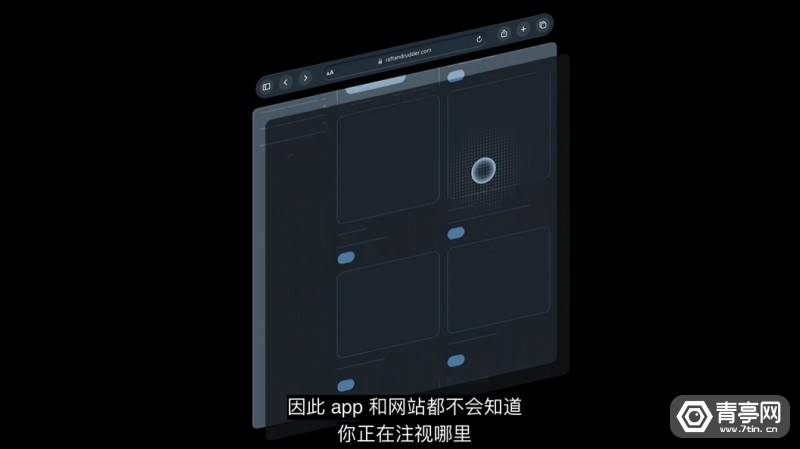

Mike Rockwell, head of the Apple Vision Pro project team, also said that eye gaze direction is highly sensitive personal data, and Apple has built privacy protection into Vision Pro accordingly. Third-party apps cannot obtain where your eyes are looking. Only after you "click" on something does the app receive the interaction, and even then it cannot see the direction of your gaze.

Apple Vision Pro Privacy Protection

The following is Sterling Crispin's original post:

When I was at Apple as a Neurotechnology Prototyping Fellow in the Technology Development Group, I spent 10% of my life contributing to the development of #VisionPro. It is the longest I have ever worked on a single effort. I'm proud and relieved that it has finally been announced. I've been working in AR and VR for 10 years, and in many ways this is the culmination of an entire industry in a single product. I'm grateful I helped make it happen, and I'm open to consulting and taking calls if you want to get into this space or refine your strategy.

The work I did supported the foundational development of Vision Pro, the mindfulness experiences, ▇▇▇▇▇▇ products, and more ambitious moonshot research in neurotechnology. For example, predicting that you'll click on something before you do, basically mind reading. I was there for 3.5 years and left at the end of 2021, so I'm excited to experience how everything came together over the past two years. I'm really curious what made the cut and what will be released later.

Specifically, I'm proud to have contributed to the initial vision, strategy and direction of the ▇▇▇▇▇▇ program for Vision Pro. The work I did on a small team helped green-light this product category, which I think could one day have a significant global impact.

Most of the work I did at Apple is under NDA and spanned a wide range of topics and approaches. But a few things have become public through patents, which I can cite and paraphrase below.

In general, a lot of the work I did involved detecting users' mental states from data about their body and brain while they were in immersive experiences.

So a user is in a mixed reality or virtual reality experience, and AI models try to predict whether you are curious, mind-wandering, scared, paying attention, remembering a past experience, or in some other cognitive state. These states can be inferred from measurements such as eye tracking, electrical activity in the brain, heartbeat and rhythm, muscle activity, blood density in the brain, blood pressure, skin conductance, and more.
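As a rough illustration of mapping several body signals to a cognitive-state label, the sketch below uses a nearest-centroid classifier. It is a deliberately simple stand-in, not the models described in the post or the patents; the three features (pupil diameter, heart rate, skin conductance), the sample values, and the labels "focused" and "relaxed" are all hypothetical.

```python
from math import dist
from statistics import mean, pstdev

# Hypothetical feature order: (pupil diameter mm, heart rate bpm, skin conductance microsiemens)


def fit_centroids(samples):
    """Fit a nearest-centroid model from (feature_vector, label) pairs.

    Each feature column is z-scored so that signals with large units
    (e.g. heart rate) do not dominate the distance computation.
    """
    columns = list(zip(*(x for x, _ in samples)))
    stats = [(mean(col), pstdev(col) or 1.0) for col in columns]

    def standardize(x):
        return [(v - m) / s for v, (m, s) in zip(x, stats)]

    centroids = {}
    for label in {lbl for _, lbl in samples}:
        points = [standardize(x) for x, lbl in samples if lbl == label]
        centroids[label] = [mean(c) for c in zip(*points)]
    return stats, centroids


def predict(x, stats, centroids):
    """Return the label whose centroid is closest to the standardized input."""
    z = [(v - m) / s for v, (m, s) in zip(x, stats)]
    return min(centroids, key=lambda lbl: dist(z, centroids[lbl]))


# Toy, made-up training data (illustrative only).
training = [
    ((3.8, 72, 4.0), "focused"),
    ((3.9, 75, 4.2), "focused"),
    ((3.0, 62, 2.0), "relaxed"),
    ((3.1, 60, 2.2), "relaxed"),
]
stats, centroids = fit_centroids(training)
print(predict((3.85, 74, 4.1), stats, centroids))  # focused
```

Nearest-centroid is chosen here only because it is short and transparent; a production system would use far richer models and much more data.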

Making a specific prediction possible involves a lot of tricks, which are detailed in the few patents I mentioned. One of the coolest results was predicting that a user will click on something before they actually do. That was a lot of work and something I'm proud of. Your pupil reacts before you click, in part because you expect something to happen after you click. So you can create biofeedback with a user's brain by monitoring their eye behavior and redesigning the UI in real time to create more of this anticipatory pupil response. It's a crude brain-computer interface via the eyes, but it's very cool. And I'd take that over invasive brain surgery any day.
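The pupil effect described above can be illustrated with a toy detector that compares recent pupil diameter against a rolling baseline. This is a hypothetical heuristic, not the method in Apple's patents; the function name, window lengths, and the 2-standard-deviation threshold are assumptions. A real system would also have to handle confounds such as changes in screen brightness, which move pupil size on their own.

```python
from statistics import mean, stdev


def anticipates_click(pupil_mm, baseline_len=20, recent_len=5, z_threshold=2.0):
    """Toy heuristic: return True when the mean of the most recent pupil-diameter
    samples sits more than `z_threshold` standard deviations above the
    preceding baseline window. All parameters are illustrative guesses."""
    if len(pupil_mm) < baseline_len + recent_len:
        return False  # not enough history yet
    baseline = pupil_mm[-(baseline_len + recent_len):-recent_len]
    recent = pupil_mm[-recent_len:]
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        # Perfectly flat baseline: any increase counts as dilation.
        return mean(recent) > mu
    return (mean(recent) - mu) / sigma > z_threshold


# Hypothetical stream: steady pupil, then a dilation just before a selection.
samples = [3.0, 3.1] * 10 + [3.6] * 5
print(anticipates_click(samples))  # True
```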

Other tricks for inferring cognitive state involved quickly flashing visuals or sounds to a user in ways they may not perceive, and then measuring their reaction.
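One standard way to measure a reaction to brief stimuli is event-related averaging: cut the recorded signal into short windows starting at each stimulus onset and average them. The sketch below is a generic illustration of that technique, swapped in because the post does not describe Apple's actual analysis; the signal values, onset indices, and window length are hypothetical.

```python
def evoked_response(signal, onsets, window):
    """Event-related averaging: average the `window` samples following each
    stimulus onset index. Epochs that would run past the end of the signal
    are skipped. Activity not time-locked to the stimulus tends to average
    out across many presentations, leaving the consistent response."""
    epochs = [signal[t:t + window] for t in onsets if t + window <= len(signal)]
    if not epochs:
        raise ValueError("no complete epochs to average")
    return [sum(values) / len(epochs) for values in zip(*epochs)]


# Hypothetical recording with brief stimuli at sample indices 2, 6 and 9
# (the last one is too close to the end to yield a full window).
signal = [0.0, 0.0, 1.0, 3.0, 0.0, 0.0, 1.0, 3.0, 0.0, 0.0]
print(evoked_response(signal, onsets=[2, 6, 9], window=2))  # [1.0, 3.0]
```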

Another patent details using machine learning and signals from the body and brain to predict how focused or relaxed you are, or how well you are learning, and then updating the virtual environment to enhance those states. So imagine an adaptive immersive environment that helps you learn, work, or relax by changing what you see and hear in the background.
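The adaptive-environment idea amounts to a closed feedback loop: estimate a state, adjust the environment, and measure again. A minimal sketch, assuming a proportional controller and a single hypothetical "background stimulation" setting, might look like this. The patent's actual update rule is not described in the post; the target, gain, and direction of adjustment here are arbitrary choices for illustration.

```python
def update_background(level, focus, target=0.7, gain=0.5):
    """One step of a toy proportional controller.

    `level` is a hypothetical background-stimulation setting in [0, 1]
    (e.g. amount of ambient sound or visual detail) and `focus` is an
    estimated focus score in [0, 1] from some upstream model. When focus
    falls below `target`, stimulation is reduced; when it is above, a
    little more is allowed. The result is clamped to [0, 1].
    """
    error = focus - target
    return max(0.0, min(1.0, level + gain * error))


level = 0.5
for focus in [0.4, 0.5, 0.6, 0.7]:  # hypothetical focus estimates over time
    level = update_background(level, focus)
    print(round(level, 2))
```

A proportional step is the simplest possible policy; it shows the loop structure without claiming anything about how the environment is actually adapted.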

All of these details are publicly available in the patents, which were carefully written not to give anything away. I was involved in a lot of other things, and hopefully more of it will see the light of day eventually.

Many people have waited a long time for this product. But it's still only one step forward on the road to VR, and it will take until the end of this decade for the industry to fully catch up with the grand vision of this technology.

Again, if your business is looking to enter this space or refine its strategy, I'm open to consulting work and taking calls. Mostly, I'm proud and relieved that this is finally announced. It's been over five years since I started working on this, and I spent a large part of my life on it, as did many other designers and engineers. I hope the whole is greater than the sum of its parts and that Vision Pro blows your mind.

Source: Sterling Crispin

The above is the full text of "Apple Vision Pro combines a large number of AI technologies for user status detection." For more, see related articles on the PHP Chinese website.