Singapore releases AI arithmetic model Goat, with capabilities above GPT-4

DoNews reported on June 7 that the biggest shortcoming of the current GPT-4 model is its arithmetic ability. Because the model's logical reasoning still needs improvement, GPT-4 cannot get the correct result even on calculation problems that many people consider relatively simple.

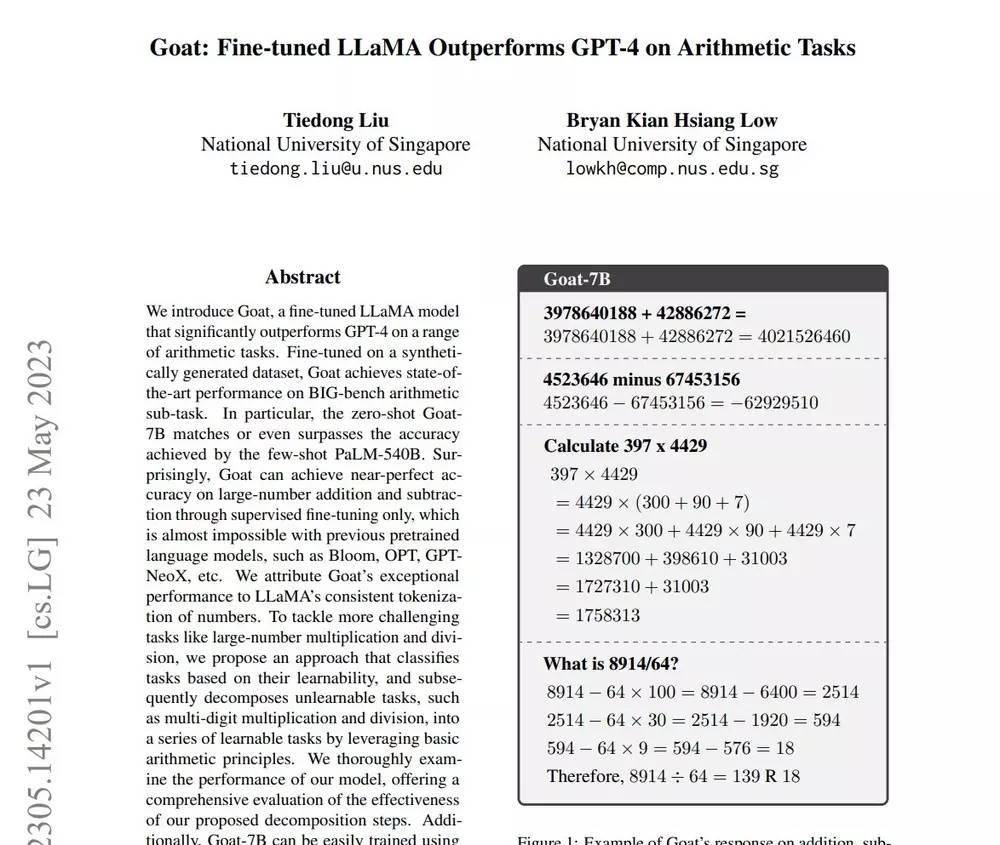

According to IT Home, researchers at the National University of Singapore recently released a model called Goat, designed specifically for arithmetic problems. The researchers stated that, after fine-tuning the LLaMA model, Goat achieved higher accuracy and better performance than GPT-4 on arithmetic.

The researchers proposed a new method that classifies arithmetic tasks according to their learnability, and then uses basic arithmetic principles to decompose unlearnable tasks into a series of learnable tasks (IT Home note: the calculation process of a complex task is broken down into simple steps), which are then fed to the AI model.
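To make the idea of decomposition concrete, here is a minimal sketch in Python. It is an illustration only, not the researchers' code: it assumes that multi-digit multiplication is the "unlearnable" task and that the distributive law is the basic arithmetic principle used to split it. The function names `place_value_terms` and `decompose_multiplication` are hypothetical.

```python
def place_value_terms(n: int) -> list[int]:
    """Split a non-negative integer into place-value terms, e.g. 24 -> [20, 4]."""
    s = str(n)
    return [int(d) * 10 ** (len(s) - i - 1) for i, d in enumerate(s) if d != "0"]


def decompose_multiplication(a: int, b: int) -> tuple[list[str], int]:
    """Rewrite a * b as a chain of simpler steps (illustrative assumption).

    Each step is either a product with a single non-zero digit times a power
    of ten, or an addition of two numbers.
    """
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")

    big, small = max(a, b), min(a, b)
    terms = place_value_terms(small)
    if not terms:  # the smaller operand is zero
        return [f"{a} * {b} = 0"], 0

    steps = []
    # Step 1: expand the smaller operand by place value.
    steps.append(f"{big} * {small} = {big} * ({' + '.join(str(t) for t in terms)})")

    # Step 2: apply the distributive law and evaluate each partial product.
    partials = [big * t for t in terms]
    steps.append(
        " + ".join(f"{big} * {t}" for t in terms)
        + " = "
        + " + ".join(str(p) for p in partials)
    )

    # Step 3: add the partial products one at a time.
    total = partials[0]
    for p in partials[1:]:
        steps.append(f"{total} + {p} = {total + p}")
        total += p
    return steps, total


if __name__ == "__main__":
    steps, result = decompose_multiplication(397, 24)
    for line in steps:
        print(line)
```

A chain of steps like this could serve as the intermediate text a model is trained to produce before the final answer. How the researchers actually format their training data is not described in the report.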

This new method allows the model to learn the pattern behind the answers and generalize the process to unseen data, rather than relying solely on memorizing calculations in its weights. As a result, it effectively improves arithmetic performance and, in a zero-shot setting, generates answers for large-number addition and subtraction with "near-perfect accuracy."

The researchers trained the model on a GPU with 24 GB of video memory and tested the final model on the BIG-bench arithmetic subtask. Its accuracy was outstanding, ahead of other models in the industry such as Bloom, GPT-NeoX, and OPT.

The accuracy of zero-shot Goat-7B even exceeded that of the few-shot PaLM-540B model, and far exceeded that of GPT-4 on large-number calculations.
