How to set up a high-availability Apache (HTTP) cluster on RHEL 9/8


Pacemaker is high-availability cluster software for Linux. It acts as the cluster resource manager: it keeps cluster resources available by failing them over between cluster nodes. Pacemaker uses Corosync for heartbeats and internal communication between cluster components. Corosync is also responsible for quorum (voting) in the cluster.
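As a rough illustration of the voting idea, a majority-based quorum with one vote per node needs floor(n/2) + 1 votes. The helper names below are hypothetical, and the sketch deliberately ignores Corosync tuning such as two-node mode:

```shell
# quorum_votes TOTAL: print the minimum number of votes needed for a
# majority, assuming every node has exactly one vote.
quorum_votes() {
    echo $(( $1 / 2 + 1 ))
}

# has_quorum TOTAL ONLINE: succeed if ONLINE nodes hold a majority of TOTAL.
has_quorum() {
    [ "$2" -ge "$(quorum_votes "$1")" ]
}

# Example: has_quorum 2 1 || echo "one surviving node has no majority"
```

With two nodes the majority is 2, so a single surviving node cannot reach it on its own. That is why two-node clusters need special handling of quorum loss.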

Prerequisites

Before we begin, make sure you have the following:

- Two RHEL 9/8 servers

- Red Hat Subscription or locally configured repository

- Access to both servers via SSH

- root or sudo permissions

- Internet connection

Lab details:

- Server 1: node1.example.com (192.168.1.6)

- Server 2: node2.example.com (192.168.1.7)

- VIP: 192.168.1.81

- Shared disk: /dev/sdb (2 GB)

Without further ado, let's walk through the steps.

1. Update the /etc/hosts file

Add the following entries in the /etc/hosts file on both nodes:

192.168.1.6 node1.example.com

192.168.1.7 node2.example.com
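If you script this step, it helps to make it idempotent so that re-running it does not duplicate entries. A minimal sketch, assuming a hypothetical helper named add_host_entry that takes the target file as its first argument:

```shell
# add_host_entry FILE IP HOSTNAME
# Append "IP HOSTNAME" to FILE unless HOSTNAME is already mapped there.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # Skip comment lines, then look for HOSTNAME as an exact hostname field.
    if awk -v n="$name" '
            $1 ~ /^#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run in a root shell on both nodes):
#   add_host_entry /etc/hosts 192.168.1.6 node1.example.com
#   add_host_entry /etc/hosts 192.168.1.7 node2.example.com
```

Passing the file as a parameter lets you try the function on a scratch copy before touching /etc/hosts.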

2. Install the Pacemaker high-availability packages

Pacemaker and the other required packages are not available in the default RHEL 9/8 package repositories, so we must enable the High Availability repository. Run the following subscription-manager command on both nodes.

For RHEL 9 server:

$ sudo subscription-manager repos --enable=rhel-9-for-x86_64-highavailability-rpms

For RHEL 8 server:

$ sudo subscription-manager repos --enable=rhel-8-for-x86_64-highavailability-rpms
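When the same script has to run on both RHEL 8 and RHEL 9 hosts, the repository ID can be derived from the release file instead of being hard-coded. A minimal sketch, assuming a hypothetical helper named ha_repo_id and the x86_64 repository IDs shown above:

```shell
# ha_repo_id [OS_RELEASE_FILE]
# Print the High Availability repository ID for RHEL 8 or 9.
ha_repo_id() {
    local os_release=${1:-/etc/os-release} major
    # Source the file in a subshell so its variables do not leak out.
    major=$( . "$os_release" && printf '%s' "${VERSION_ID%%.*}" ) || return 1
    case $major in
        8|9) printf 'rhel-%s-for-x86_64-highavailability-rpms\n' "$major" ;;
        *)   echo "unsupported release: $major" >&2; return 1 ;;
    esac
}

# Example:
#   sudo subscription-manager repos --enable="$(ha_repo_id)"
```

The function fails on any other major version rather than guessing a repository name.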

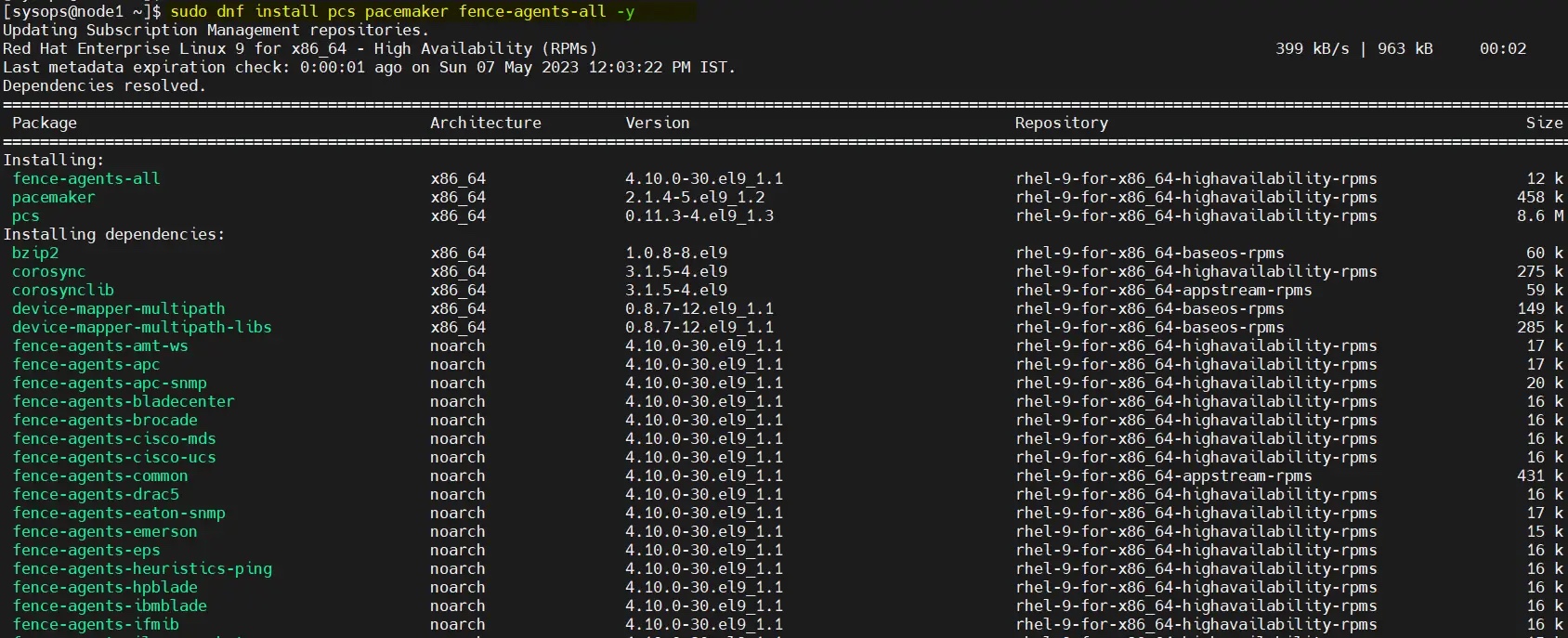

After enabling the repository, install the Pacemaker packages on both nodes:

$ sudo dnf install pcs pacemaker fence-agents-all -y


3. Allow high-availability ports in the firewall

To allow high availability ports in the firewall, run the following command on each node:

$ sudo firewall-cmd --permanent --add-service=high-availability

$ sudo firewall-cmd --reload

4. Set a password for the hacluster user and start the pcsd service

On both servers, set a password for the hacluster user with the following echo command:

$ echo "<Enter-Password>" | sudo passwd --stdin hacluster

Run the following commands on both servers to start and enable the pcsd service:

$ sudo systemctl start pcsd.service

$ sudo systemctl enable pcsd.service

5. Create a high-availability cluster

Authenticate both nodes with the pcs command. Run the following from either node; in my case, I'm running it on node1:

$ sudo pcs host auth node1.example.com node2.example.com

When prompted, authenticate as the hacluster user.

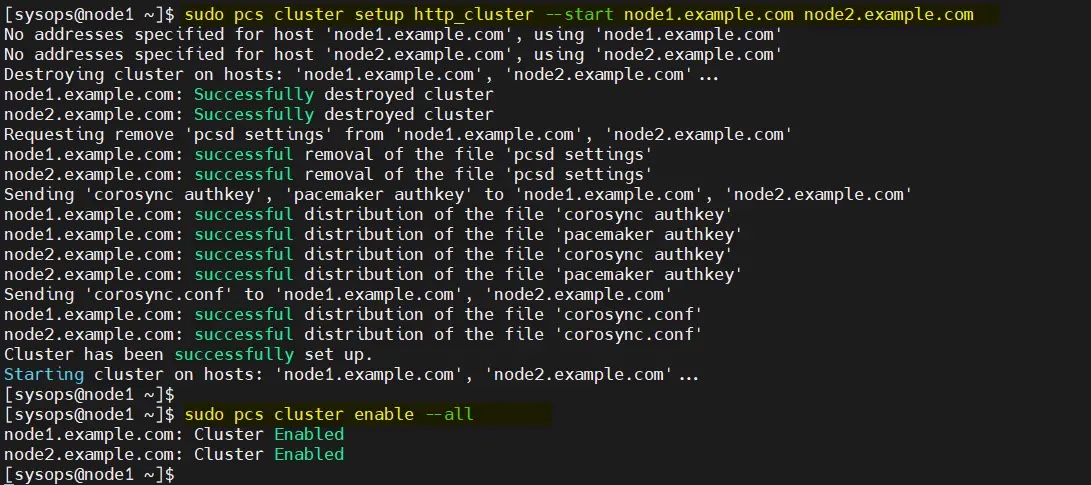

Use the following pcs cluster setup command to add the two nodes to the cluster. The cluster name I use here is http_cluster. Run the commands only on node1:

$ sudo pcs cluster setup http_cluster --start node1.example.com node2.example.com

$ sudo pcs cluster enable --all

The output of these two commands is as follows:

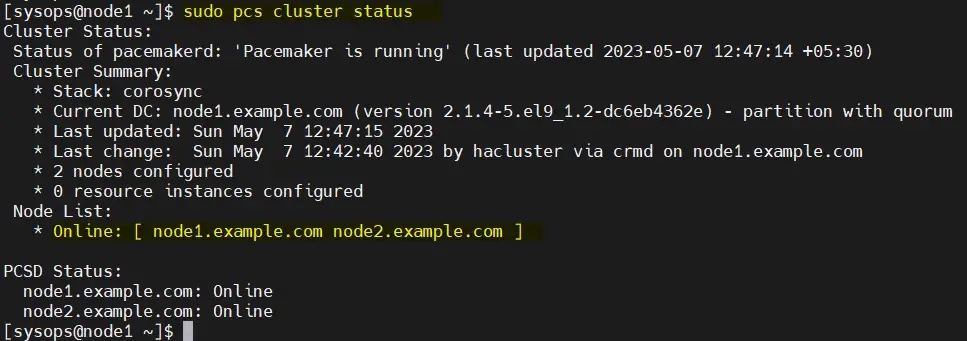

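Verify the initial cluster status from any node:

$ sudo pcs cluster status

Note: We have no fencing device in our lab, so we disable fencing. In a production environment, configuring fencing is strongly recommended.

$ sudo pcs property set stonith-enabled=false

$ sudo pcs property set no-quorum-policy=ignore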

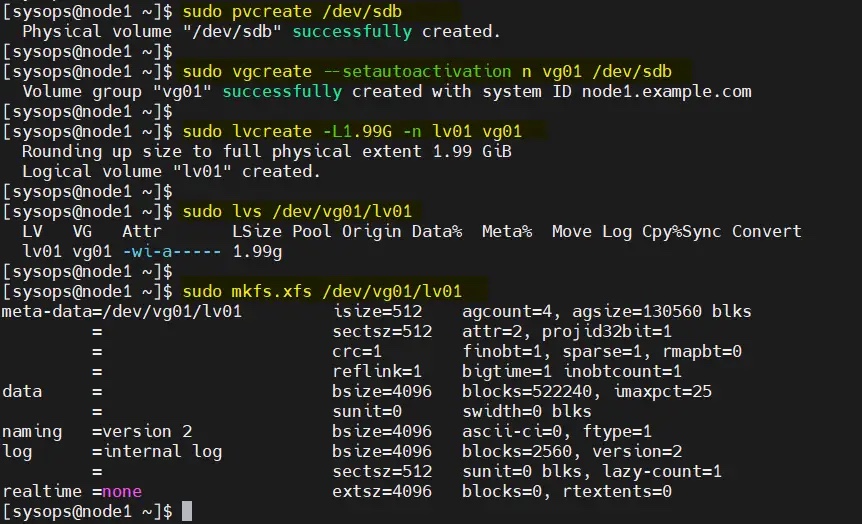

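6. Configure a shared volume for the cluster

A 2 GB shared disk (/dev/sdb) is attached to both servers. We will configure it as an LVM volume and format it with the XFS filesystem.

Before creating the LVM volume, edit the /etc/lvm/lvm.conf file on both nodes.

Change the parameter # system_id_source = "none" to system_id_source = "uname":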

$ sudo sed -i 's/# system_id_source = "none"/ system_id_source = "uname"/g' /etc/lvm/lvm.conf
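The sed command prints nothing when the pattern does not match, so it is worth confirming that the setting actually changed. A minimal sketch, assuming a hypothetical helper named enable_lvm_system_id that applies the same substitution to a given file and then verifies the result:

```shell
# enable_lvm_system_id FILE
# Apply the substitution above to FILE and verify that an uncommented
# system_id_source = "uname" line is present afterwards.
enable_lvm_system_id() {
    local file=$1 tmp
    tmp=$(mktemp) || return 1
    # Write to a temp file first, then copy back to keep FILE's permissions.
    sed 's/# system_id_source = "none"/ system_id_source = "uname"/g' "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    rm -f "$tmp"
    grep -Eq '^[[:space:]]*system_id_source[[:space:]]*=[[:space:]]*"uname"' "$file"
}

# Example (run in a root shell on both nodes):
#   enable_lvm_system_id /etc/lvm/lvm.conf || echo "setting not applied" >&2
```

A non-zero exit status means the expected commented-out line was not found and lvm.conf should be edited by hand.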

On node1, run the following commands in order to create the LVM volume:

$ sudo pvcreate /dev/sdb

$ sudo vgcreate --setautoactivation n vg01 /dev/sdb

$ sudo lvcreate -L1.99G -n lv01 vg01

$ sudo lvs /dev/vg01/lv01

$ sudo mkfs.xfs /dev/vg01/lv01

Add the shared device to the LVM devices file on the second cluster node (node2.example.com). Run the following command only on node2:

[sysops@node2 ~]$ sudo lvmdevices --adddev /dev/sdb

7. Install and configure the Apache web server (httpd)

Install the Apache web server (httpd) on both servers with the following dnf command:

$ sudo dnf install -y httpd wget

Allow the Apache ports in the firewall by running the following firewall-cmd commands on both servers:

$ sudo firewall-cmd --permanent --zone=public --add-service=http

$ sudo firewall-cmd --permanent --zone=public --add-service=https

$ sudo firewall-cmd --reload

Create a status.conf file on both nodes so that the Apache resource agent can obtain Apache's status:

$ sudo bash -c 'cat <<-END > /etc/httpd/conf.d/status.conf
<Location /server-status>
SetHandler server-status
Require local
</Location>
END'

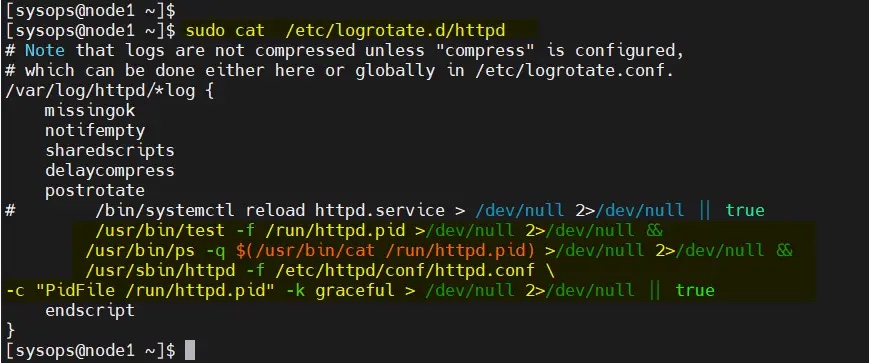

Modify /etc/logrotate.d/httpd on both nodes.

Replace the following line

/bin/systemctl reload httpd.service > /dev/null 2>/dev/null || true

with

/usr/bin/test -f /run/httpd.pid >/dev/null 2>/dev/null &&
/usr/bin/ps -q $(/usr/bin/cat /run/httpd.pid) >/dev/null 2>/dev/null &&
/usr/sbin/httpd -f /etc/httpd/conf/httpd.conf \
-c "PidFile /run/httpd.pid" -k graceful > /dev/null 2>/dev/null || true

Save and exit the file.

8. Create a sample web page for Apache

Run the following commands only on node1:

$ sudo lvchange -ay vg01/lv01

$ sudo mount /dev/vg01/lv01 /var/www/

$ sudo mkdir /var/www/html

$ sudo mkdir /var/www/cgi-bin

$ sudo mkdir /var/www/error

$ sudo bash -c ' cat <<-END >/var/www/html/index.html
<html>
<body>High Availability Apache Cluster - Test Page </body>
</html>
END'

$ sudo umount /var/www

Note: If SELinux is enabled, run the following command on both servers:

$ sudo restorecon -R /var/www

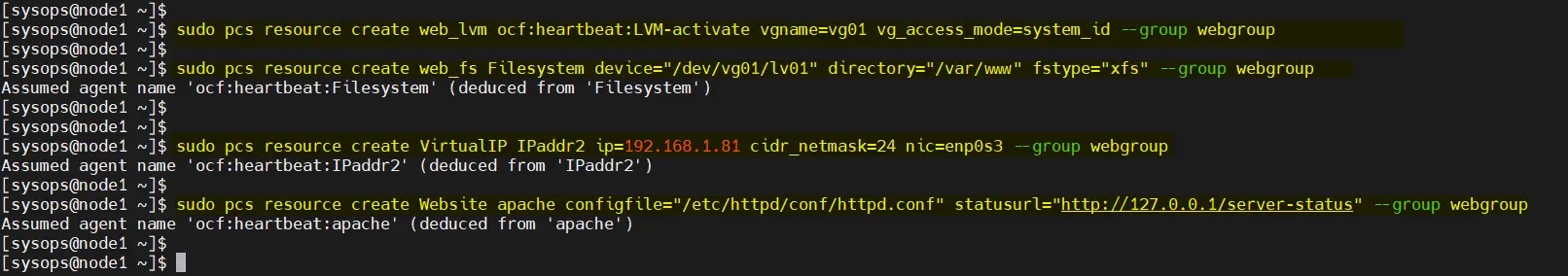

9. Create the cluster resources and resource group

Define the resource group and the cluster resources. In my case, we use webgroup as the resource group.

- web_lvm is the resource name for the shared LVM volume (/dev/vg01/lv01)

- web_fs is the name of the filesystem resource that will be mounted on /var/www

- VirtualIP is the VIP (IPaddr2) resource on network interface enp0s3

- Website is the resource for the Apache configuration file.

Run the following set of commands from any node.

$ sudo pcs resource create web_lvm ocf:heartbeat:LVM-activate vgname=vg01 vg_access_mode=system_id --group webgroup

$ sudo pcs resource create web_fs Filesystem device="/dev/vg01/lv01" directory="/var/www" fstype="xfs" --group webgroup

$ sudo pcs resource create VirtualIP IPaddr2 ip=192.168.1.81 cidr_netmask=24 nic=enp0s3 --group webgroup

$ sudo pcs resource create Website apache configfile="/etc/httpd/conf/httpd.conf" statusurl="http://127.0.0.1/server-status" --group webgroup
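Resources in a Pacemaker group start in the order they were added and stop in reverse, so the order above matters: volume, then filesystem, then IP address, then Apache. To adapt the commands to another VIP or network interface without retyping them, a dry-run generator can help. A minimal sketch, assuming a hypothetical helper named print_webgroup_cmds that only prints the commands and runs nothing:

```shell
# print_webgroup_cmds VIP NIC [GROUP]
# Print the four resource-creation commands in dependency order.
print_webgroup_cmds() {
    local vip=$1 nic=$2 group=${3:-webgroup}
    cat <<EOF
pcs resource create web_lvm ocf:heartbeat:LVM-activate vgname=vg01 vg_access_mode=system_id --group $group
pcs resource create web_fs Filesystem device="/dev/vg01/lv01" directory="/var/www" fstype="xfs" --group $group
pcs resource create VirtualIP IPaddr2 ip=$vip cidr_netmask=24 nic=$nic --group $group
pcs resource create Website apache configfile="/etc/httpd/conf/httpd.conf" statusurl="http://127.0.0.1/server-status" --group $group
EOF
}

# Example: print_webgroup_cmds 192.168.1.81 enp0s3
```

Review the printed commands before running them with sudo on one node.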

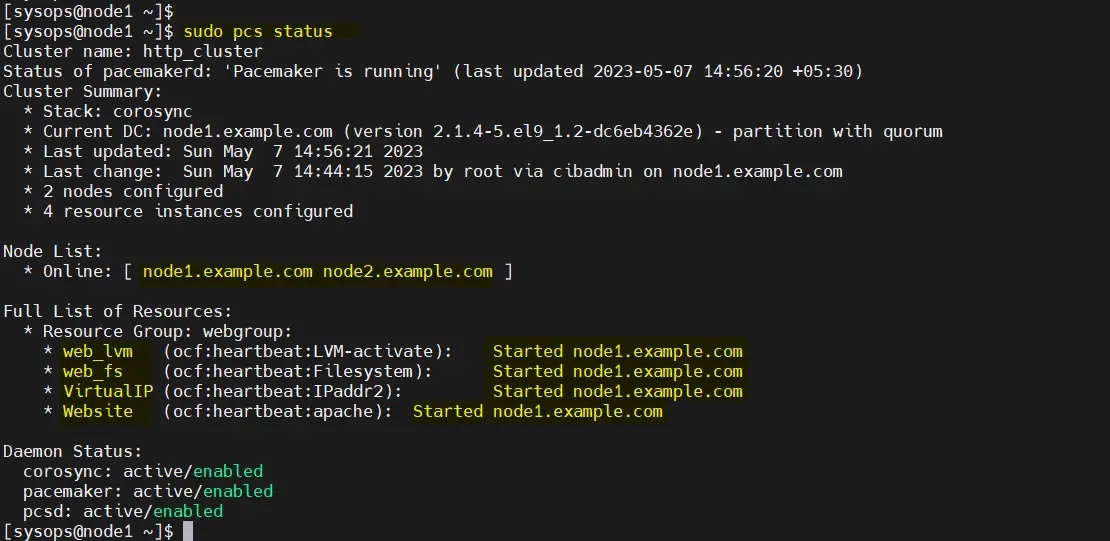

Now verify the cluster resource status:

$ sudo pcs status

Great, the output above shows that all resources are started on node1.

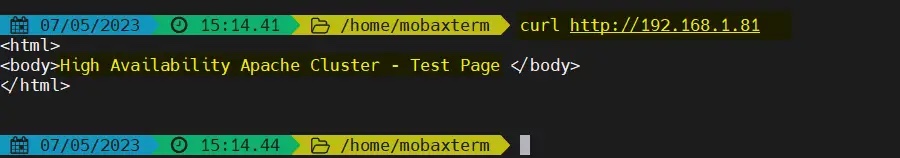

10. Test the Apache cluster

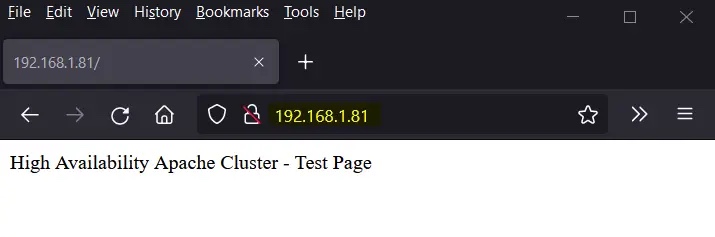

Try to access the web page using the VIP (192.168.1.81).

Use the curl command or a web browser to access the page:

$ curl http://192.168.1.81

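Or open http://192.168.1.81 in a web browser.

Perfect! The output above confirms that we can access the web page of our highly available Apache cluster.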
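A single curl call only shows the current state. During a failover the VIP can be unreachable for a short time while the resources move, so a small polling helper is handy for the test that follows. A minimal sketch, assuming a hypothetical helper named wait_for_http:

```shell
# wait_for_http URL [TRIES] [DELAY_SECONDS]
# Poll URL until curl succeeds or TRIES attempts have been made.
wait_for_http() {
    local url=$1 tries=${2:-30} delay=${3:-1} i=1
    while [ "$i" -le "$tries" ]; do
        # -f: treat HTTP errors as failures, -s: silent,
        # --max-time: bound each attempt to 2 seconds
        if curl -fs --max-time 2 -o /dev/null "$url"; then
            echo "reachable after $i attempt(s)"
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    echo "not reachable after $tries attempt(s)" >&2
    return 1
}

# Example: wait_for_http http://192.168.1.81 30 1
```

The number of attempts gives a rough idea of how long the page was unavailable.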

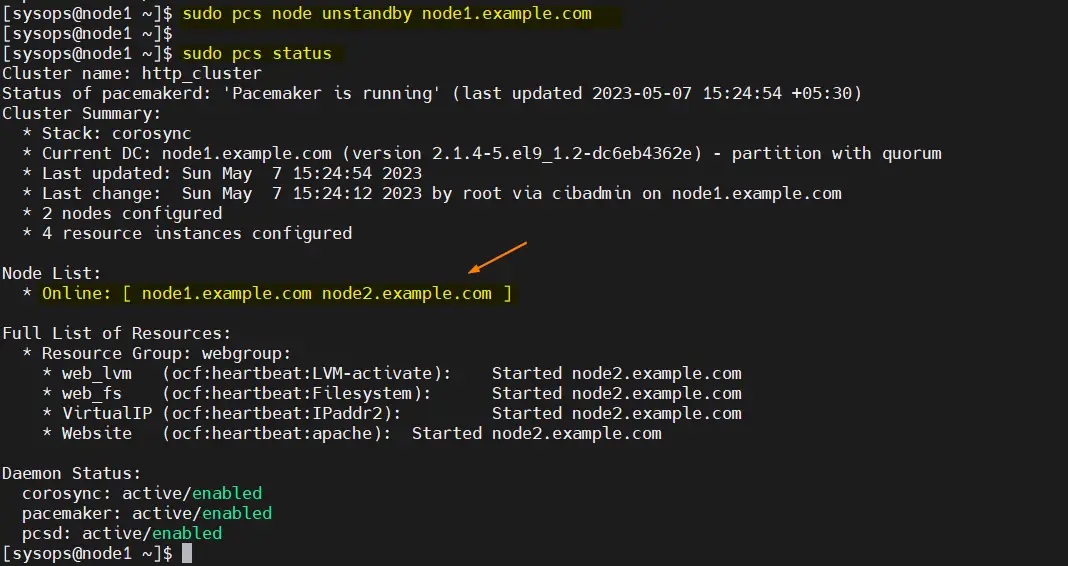

Let's try moving the cluster resources from node1 to node2:

$ sudo pcs node standby node1.example.com

$ sudo pcs status

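Perfect, the output above confirms that the cluster resources have migrated from node1 to node2.

To take the node (node1.example.com) out of standby, run the following command: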

$ sudo pcs node unstandby node1.example.com
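To check from a script which node currently hosts the resources, the status text can be filtered. A minimal sketch, assuming each resource line contains the word Started followed by the node name; the exact layout varies between pcs versions, and the helper name nodes_running_resources is hypothetical:

```shell
# nodes_running_resources
# Read `pcs status` text on stdin and print each node that has at least
# one resource in the Started state, one node per line.
nodes_running_resources() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "Started") print $(i + 1) }' | sort -u
}

# Example: sudo pcs status | nodes_running_resources
```

If the group is healthy, the output should be a single node name, since all resources in a group run on the same node.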
