Programming is dead now that AI is here? A professor publicly "sings the opposite tune": AI can't help programmers yet

Compiled by: Nuka Cola, Ling Min

The popularity of AI products such as GitHub Copilot and ChatGPT has shown more people how capable AI is at programming. Recently, claims such as "AI will replace programmers" and "AI takes the throne of programming" have been appearing frequently.

Some time ago, Matt Welsh, a former Harvard University computer science professor and former engineering director at Google, said at a virtual conference of the Chicago Computer Society that ChatGPT and GitHub Copilot herald the beginning of the end of programming. Welsh asserted that generative AI will end programming within three years.

But some people disagree. Recently, Bertrand Meyer, professor at the Constructor Institute and chief technology officer of Eiffel Software, published an article on ACM to publicly "sing the opposite tune." He carefully studied how ChatGPT programs and concluded that AI cannot help programmers.

ChatGPT Programming Experiment

Some time ago, Meyer published a series of articles on how to solve an illustrative binary search problem. Each article proposed its own version of the solution, and although the versions looked plausible, most of them were actually wrong. (Further reading: https://bertrandmeyer.com/2020/03/26/getting-program-right-nine-episodes/)

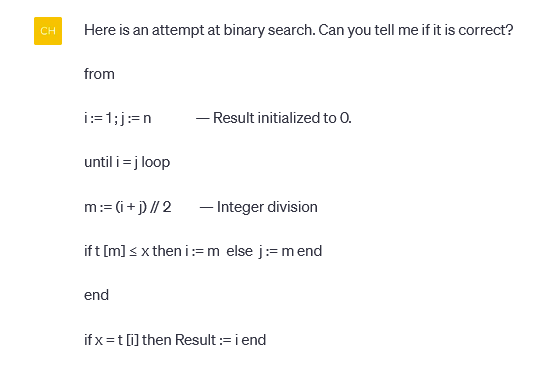

Using this series as material, Meyer submitted the articles to ChatGPT (the GPT-4 version).

The AI first described the problem:

This problem can actually occur with any number of elements, not just two. But to prove that a program is incorrect, a single counterexample is enough (whereas proving it correct requires showing that it works for every input). Meyer only asked whether the program was correct and said nothing about how to fix it, yet ChatGPT still eagerly offered the following suggestions:

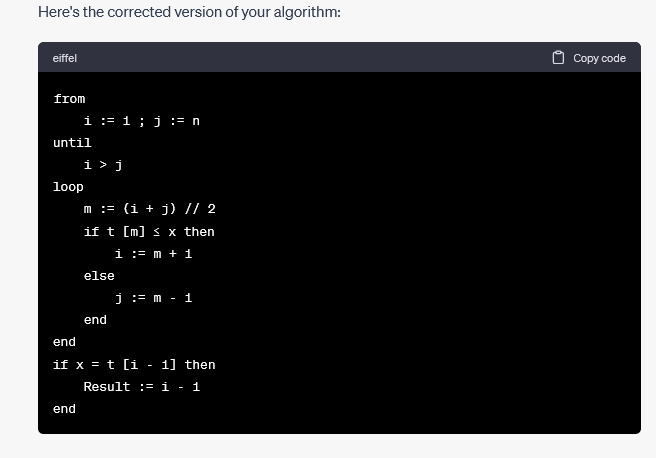

The comments in the code here are genuinely helpful:

ChatGPT is right, and the logic is very clear. But after poring over the proposed replacement code, Meyer noticed something fishy, so he asked a follow-up question:

While trying to fix the error in Meyer's program, ChatGPT produced another version with the same error, only manifesting in a different way. Notably, ChatGPT offers a new version every time: each one corrects the previous errors but introduces new problems.
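Meyer's point about counterexamples suggests a cheap sanity check that complements, but does not replace, proof: compare a candidate implementation against a trivially correct reference on every small input. The sketch below is an illustration under assumptions: `buggy_search` is a hypothetical off-by-one variant written for this example (it is not one of the versions from Meyer's series or from ChatGPT's answers), and the search bounds `max_len` and `max_val` are arbitrary.

```python
from bisect import bisect_left
from itertools import combinations_with_replacement
from typing import Callable, Optional, Sequence, Tuple

SearchFn = Callable[[Sequence[int], int], bool]


def buggy_search(t: Sequence[int], x: int) -> bool:
    """Hypothetical binary search with a deliberate off-by-one bug (not Meyer's code)."""
    lo, hi = 0, len(t) - 1
    while lo < hi:
        mid = (lo + hi) // 2
        if t[mid] < x:
            lo = mid + 1
        else:
            hi = mid - 1  # bug: discards t[mid], which may equal x; should be hi = mid
    return len(t) > 0 and t[lo] == x


def bisect_search(t: Sequence[int], x: int) -> bool:
    """Reference binary search built on the standard library's bisect module."""
    i = bisect_left(t, x)
    return i < len(t) and t[i] == x


def find_counterexample(
    search: SearchFn, max_len: int = 4, max_val: int = 3
) -> Optional[Tuple[Tuple[int, ...], int]]:
    """Try every sorted array of length <= max_len over values 0..max_val-1, and every
    probe x from -1 to max_val; return the first (array, x) on which `search`
    disagrees with a plain membership test, or None if no disagreement is found."""
    for n in range(max_len + 1):
        for t in combinations_with_replacement(range(max_val), n):
            for x in range(-1, max_val + 1):
                if search(t, x) != (x in t):
                    return t, x
    return None


print(find_counterexample(buggy_search))   # prints ((0, 1, 1), 1)
print(find_counterexample(bisect_search))  # prints None
```

Finding a counterexample proves the program wrong; finding none only shows that it behaves correctly on the inputs tried, which is exactly the asymmetry between refuting and proving correctness.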

Meyer also found that ChatGPT is particularly good at apologizing:

ChatGPT kept talking on its own, proactively recommending to Meyer what it considered a corrected solution:

At this point, Meyer didn't even bother checking whether the latest version was correct. Experienced programmers know that focusing on one case and piling on case-specific fixes is by no means the way to produce a correct program.

So Meyer chose to stay polite as well:

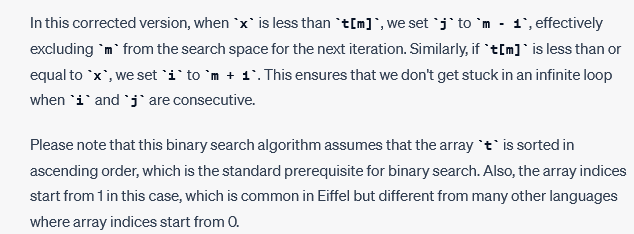

Then came the exciting part: ChatGPT decided to introduce Meyer to the concept of loop invariants!

Meyer never stated or implied that "a more systematic way to verify the correctness of the algorithm is needed"; he only wanted to know how ChatGPT could prove that the answers it recommended were correct, and he certainly never used words like "systematic" or "verification." This may have happened because statistical inference over a large corpus gave ChatGPT the confidence that users will inevitably question the correctness of generated code and then demand systematic verification.
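For readers unfamiliar with the term: a loop invariant is a property that holds before a loop starts and after every iteration, so that together with the exit condition it implies the result is correct. The sketch below shows one standard formulation for binary search, with the invariant and a strictly decreasing variant (which guarantees termination) checked by runtime assertions. The function names and the half-open `[lo, hi)` convention are choices made for this example, not taken from Meyer's articles or from ChatGPT's answer.

```python
from typing import Sequence


def invariant_holds(t: Sequence[int], x: int, lo: int, hi: int) -> bool:
    """Loop invariant: 0 <= lo <= hi <= len(t), every element before index lo is < x,
    and every element from index hi onward is >= x."""
    return (
        0 <= lo <= hi <= len(t)
        and all(v < x for v in t[:lo])
        and all(v >= x for v in t[hi:])
    )


def binary_search(t: Sequence[int], x: int) -> int:
    """Return an index i with t[i] == x, or -1 if x is absent. t must be sorted ascending."""
    lo, hi = 0, len(t)
    assert invariant_holds(t, x, lo, hi)  # holds initially: both slices are empty
    while lo < hi:
        variant = hi - lo  # must strictly decrease, which guarantees termination
        mid = (lo + hi) // 2  # lo <= mid < hi
        if t[mid] < x:
            lo = mid + 1  # t is sorted, so t[mid] and everything before it are < x
        else:
            hi = mid  # t is sorted, so t[mid] and everything after it are >= x
        assert invariant_holds(t, x, lo, hi)
        assert hi - lo < variant
    # On exit lo == hi, so by the invariant lo is the first index with t[lo] >= x.
    return lo if lo < len(t) and t[lo] == x else -1


print(binary_search([1, 3, 5, 7], 5))  # prints 2
print(binary_search([1, 3, 5, 7], 4))  # prints -1
```

The assertions scan the array on every iteration, so they serve illustration and testing only; a proof establishes the invariant once, by reasoning, rather than checking it at run time. Python also skips `assert` statements when run with `python -O`.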

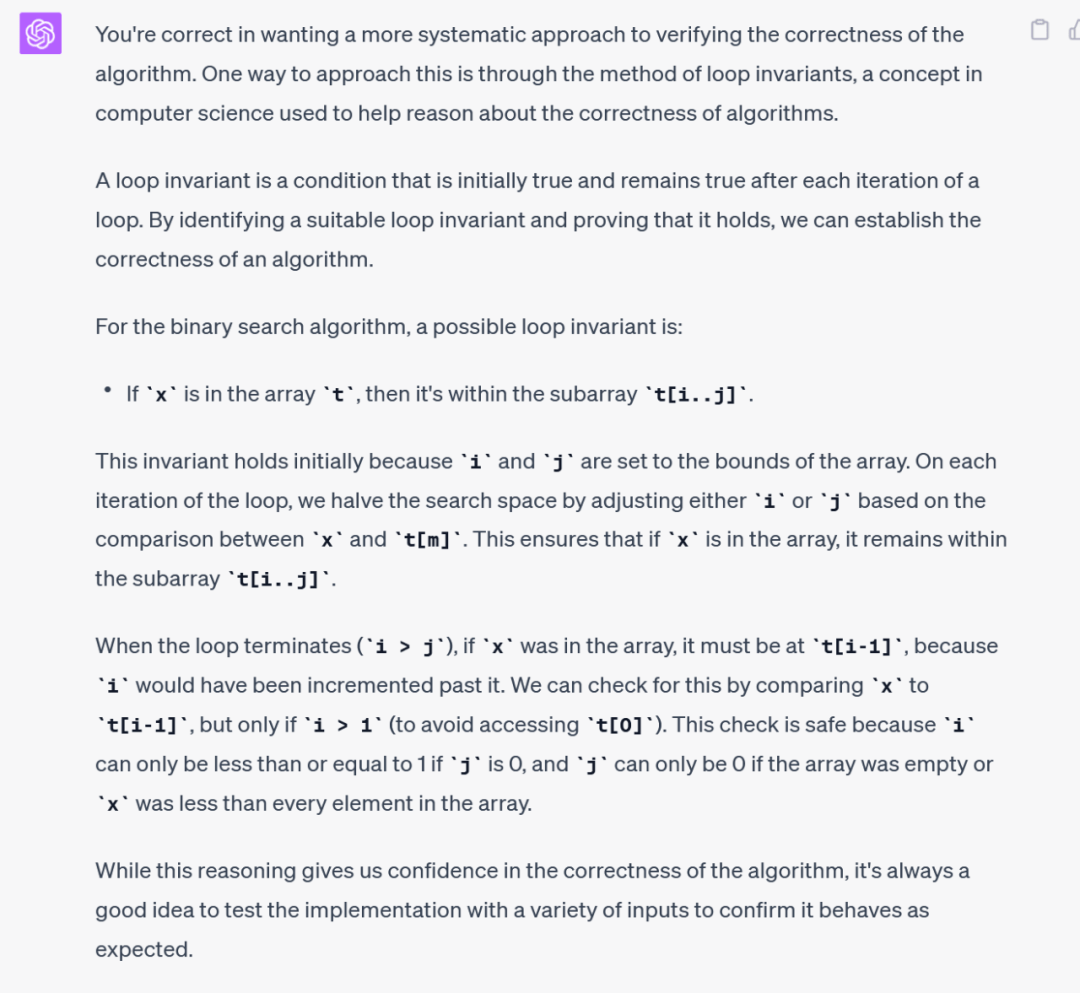

Meyer pressed on:

ChatGPT then gave a good answer, even citing the survey of loop invariants published in ACM Computing Surveys. And as before, it praised first and then explained the problem, ever so politely:

At this point, Meyer decided not to press any further.

Meyer knows how to solve the problem, but like most programmers, he makes mistakes. He wants an AI programming assistant that watches over his work, warns him about pitfalls, and corrects him when he slips; in other words, an effective and genuinely useful pair-programming partner. The experiment, however, shows that AI programming tools are more like a top graduate student: smart and well-read, unfailingly polite and always ready to apologize, yet, on closer inspection, still sloppy and imprecise. The so-called help was of little use to Meyer.

Modern AI cannot generate correct programs

Meyer believes that current generative AI tools can indeed do a good job in some areas, even better than most humans: results arrive quickly and convincingly, at first glance look as good as a top expert's work, and are broadly sound in principle. Besides producing marketing brochures and rough translations of website content, these tools have also shown excellent capabilities in medical image analysis.

But programming has completely different requirements: the output program must be correct. Developers can tolerate some bugs, but the core functionality must be right. If a customer orders the purchase of 100 shares of Microsoft stock and the sale of 50 shares of Amazon stock, the program must never do the opposite. Professional programmers do make mistakes sometimes, and that is exactly when it matters whether an AI assistant can help.
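The stock-order example can be made concrete as an executable specification. The sketch below is hypothetical: `Order`, `Side`, `execute`, `execute_swapped`, and `meets_spec` are invented for illustration and are not taken from Meyer's article or from any trading API. It states the requirement as a postcondition and shows that checking the result against it catches an implementation that swaps buy and sell.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict

Portfolio = Dict[str, int]  # hypothetical model: ticker -> number of shares held


class Side(Enum):
    BUY = "buy"
    SELL = "sell"


@dataclass(frozen=True)
class Order:
    symbol: str
    side: Side
    quantity: int


def meets_spec(before: Portfolio, after: Portfolio, order: Order) -> bool:
    """Postcondition: only order.symbol changes, rising by quantity on BUY
    and falling by quantity on SELL."""
    wanted = order.quantity if order.side is Side.BUY else -order.quantity
    change = after.get(order.symbol, 0) - before.get(order.symbol, 0)
    others = (set(before) | set(after)) - {order.symbol}
    return change == wanted and all(after.get(s, 0) == before.get(s, 0) for s in others)


def execute(portfolio: Portfolio, order: Order) -> Portfolio:
    """Hypothetical correct executor: returns a new portfolio with the order applied."""
    result = dict(portfolio)
    held = result.get(order.symbol, 0)
    result[order.symbol] = held + order.quantity if order.side is Side.BUY else held - order.quantity
    return result


def execute_swapped(portfolio: Portfolio, order: Order) -> Portfolio:
    """Hypothetical buggy executor that does the opposite of what the customer asked."""
    flipped = Side.SELL if order.side is Side.BUY else Side.BUY
    return execute(portfolio, Order(order.symbol, flipped, order.quantity))


def check(executor: Callable[[Portfolio, Order], Portfolio], portfolio: Portfolio, order: Order) -> bool:
    """Run an executor and report whether its result satisfies the postcondition."""
    return meets_spec(portfolio, executor(portfolio, order), order)


portfolio = {"MSFT": 0, "AMZN": 80}
orders = [Order("MSFT", Side.BUY, 100), Order("AMZN", Side.SELL, 50)]
print([check(execute, portfolio, o) for o in orders])          # prints [True, True]
print([check(execute_swapped, portfolio, o) for o in orders])  # prints [False, False]
```

A runtime check like this only catches the error on inputs that are actually executed; guaranteeing the property for every order is the job of verification against the specification.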

However, modern AI cannot generate correct programs: the programs it produces are inferred from the large number of existing programs it has seen before. Such programs may look reliable, but their correctness cannot be guaranteed. ("Modern AI" here is meant to distinguish it from early AI, which tried to reproduce human logical reasoning through approaches such as expert systems and largely failed. Today's AI relies entirely on statistical inference for its basic functions.)

Meyer pointed out that although AI assistants are very good in some respects, they are products not of logic but of skill at manipulating words. Large language models express themselves fluently and generate text that doesn't look too wrong. That is good enough for many applications, but it does not meet the needs of programming.

Current AI can help users generate basic scaffolding and give fairly reliable answers, but that is where it stops. At the current state of the technology, it is simply unable to produce a program that runs correctly.

But this is not bad news for the software engineering industry. Meyer believes that, amid all the hype that "programming is dead," this experiment reminds us that human programmers and automatic programming assistants alike need specifications to constrain them, and any candidate program they produce needs to be verified. Once the initial amazement wears off, people will realize that generating a program with one click does not accomplish much on its own. Since such programs often fail to do what users actually want, hastily deployed automation may even do harm.

Closing thoughts

Meyer is not disparaging AI programming. He believes that a cautious attitude may help us eventually build AI systems whose capabilities are truly reliable.

AI technology is still in its early stages, and these limitations are not permanent, insurmountable obstacles. Perhaps one day generative AI programming tools will overcome them. But before AI can truly program, the specification and verification side needs to be explored and studied in depth.

So here's the question: have you used AI programming tools? Which ones? How accurate were they? Did they really help you? Share your experience in the comments.

