PHP crawler best practices: how to avoid IP bans

As crawler technology has matured, PHP, a simple yet capable language, has become a common choice for writing crawlers. Many developers, however, find that the target site blocks their IP address. A block interrupts the crawler, and the crawling behavior that triggered it may also expose the developer to legal risk. This article introduces several best practices that help PHP crawler developers reduce the risk of an IP ban.

1. Follow the robots.txt specification

robots.txt is a file in the root directory of a website that tells crawlers which paths they may access. If a site provides a robots.txt file, the crawler should read its rules first and crawl only what they permit. PHP crawlers should therefore honor robots.txt rather than blindly fetching every page of the site.
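As a rough illustration of reading the rules before crawling, the sketch below checks a path against robots.txt content that has already been downloaded. The function name isPathAllowed is hypothetical, and the parser is deliberately simplified: it assumes plain prefix matching only (no `*` or `$` wildcards) and lets the longest matching rule win.

```php
<?php
declare(strict_types=1);

/**
 * Simplified robots.txt check (assumption: prefix matching only, no wildcards).
 * Returns true when $path may be fetched by $userAgent.
 */
function isPathAllowed(string $robotsTxt, string $userAgent, string $path): bool
{
    $rules = ['*' => [], 'own' => []]; // rules for the wildcard group and for our agent
    $ownGroupSeen = false;             // true once a group names our agent
    $activeGroups = [];                // groups the current rule lines belong to
    $lastWasAgent = false;

    foreach (preg_split('/\r\n|\r|\n/', $robotsTxt) as $line) {
        $line = trim(preg_replace('/#.*/', '', $line)); // drop comments
        if ($line === '' || strpos($line, ':') === false) {
            continue;
        }
        [$field, $value] = array_map('trim', explode(':', $line, 2));
        $field = strtolower($field);

        if ($field === 'user-agent') {
            if (!$lastWasAgent) {
                $activeGroups = []; // a new group starts here
            }
            if ($value === '*') {
                $activeGroups[] = '*';
            } elseif ($value !== '' && stripos($userAgent, $value) !== false) {
                $activeGroups[] = 'own';
                $ownGroupSeen = true;
            }
            $lastWasAgent = true;
            continue;
        }

        $lastWasAgent = false;
        if (($field === 'allow' || $field === 'disallow') && $value !== '') {
            foreach ($activeGroups as $group) {
                $rules[$group][] = [$field === 'allow', $value];
            }
        }
    }

    // A group that names our agent takes precedence over the wildcard group.
    $applicable = $ownGroupSeen ? $rules['own'] : $rules['*'];

    $allowed = true;   // no matching rule means the path is allowed
    $bestLength = -1;
    foreach ($applicable as [$isAllow, $prefix]) {
        if (strncmp($path, $prefix, strlen($prefix)) !== 0) {
            continue;
        }
        // The longest matching prefix wins; on a tie, Allow wins.
        $length = strlen($prefix);
        if ($length > $bestLength || ($length === $bestLength && $isAllow)) {
            $allowed = $isAllow;
            $bestLength = $length;
        }
    }
    return $allowed;
}
```

A crawler would typically download /robots.txt once per site, cache the text, and call a check like this before requesting each URL. A production crawler would need a more complete parser than this sketch.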

2. Set the crawler request header

A PHP crawler should set its request headers so that its requests resemble ordinary user traffic. Common headers to set include User-Agent and Referer. If the headers are too sparse or implausible, the target site is likely to classify the requests as malicious and ban the crawler's IP.
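One way to set these headers is through PHP's cURL extension. The sketch below assumes the extension is installed. The function names buildRequestHeaders and fetchPage are hypothetical, and the header values are only illustrative.

```php
<?php
declare(strict_types=1);

/**
 * Builds a header list in the format expected by CURLOPT_HTTPHEADER.
 * The Accept and Accept-Language values are illustrative assumptions.
 */
function buildRequestHeaders(string $userAgent, ?string $referer = null): array
{
    $headers = [
        'User-Agent: ' . $userAgent,
        'Accept: text/html,application/xhtml+xml;q=0.9,*/*;q=0.8',
        'Accept-Language: en-US,en;q=0.8',
    ];
    if ($referer !== null) {
        $headers[] = 'Referer: ' . $referer;
    }
    return $headers;
}

/**
 * Fetches a page with the given headers; returns null if the request fails.
 */
function fetchPage(string $url, array $headers): ?string
{
    $ch = curl_init($url);
    curl_setopt_array($ch, [
        CURLOPT_RETURNTRANSFER => true,  // return the body instead of printing it
        CURLOPT_FOLLOWLOCATION => true,  // follow redirects
        CURLOPT_TIMEOUT        => 15,    // seconds
        CURLOPT_HTTPHEADER     => $headers,
    ]);
    $body = curl_exec($ch);
    return $body === false ? null : $body;
}
```

For example, `fetchPage('https://example.com/list', buildRequestHeaders('ExampleCrawler/1.0', 'https://example.com/'))` would send the request with User-Agent and Referer set; the URL and agent string here are placeholders.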

3. Limit access frequency

A PHP crawler should limit how often it sends requests so that it does not place an excessive load on the target site. If the crawler requests pages too frequently, the site may log the requests and block the IP addresses that send too many.
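A simple way to limit access frequency is to enforce a minimum interval between consecutive requests. The sketch below is one possible approach; the function names secondsToWait and throttle are hypothetical, and a suitable interval depends on the target site.

```php
<?php
declare(strict_types=1);

/**
 * Seconds still to wait so that two requests are at least $minInterval apart.
 */
function secondsToWait(float $lastRequestAt, float $now, float $minInterval): float
{
    return max(0.0, $minInterval - ($now - $lastRequestAt));
}

/**
 * Sleeps if the previous request was too recent, then returns the
 * timestamp to record as the time of the request about to be sent.
 */
function throttle(float $lastRequestAt, float $minInterval): float
{
    $wait = secondsToWait($lastRequestAt, microtime(true), $minInterval);
    if ($wait > 0) {
        usleep((int) round($wait * 1000000)); // usleep() takes microseconds
    }
    return microtime(true);
}
```

In a crawl loop, the caller would keep the returned timestamp and pass it back on the next iteration, for instance `$last = throttle($last, 2.0);` before each request to keep requests at least two seconds apart.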

4. Use random IP proxies

A PHP crawler can send its requests through randomly chosen proxy IPs so that the target site sees the proxy addresses rather than the local IP, which protects the local IP from being banned. Many proxy service providers offer such proxies, and developers can choose one according to their needs.

5. Use CAPTCHA recognition

Some websites present a CAPTCHA when accessed and require the visitor to complete it. This is a problem for crawlers, which cannot read the CAPTCHA content on their own. A PHP crawler can use CAPTCHA recognition techniques, such as OCR, to read the code and pass the verification step.

6. Use a proxy pool

A proxy pool makes crawler requests more random and, to a certain extent, more stable. The idea is to collect available proxy IPs from the Internet, store them in a pool, and pick one at random for each request. This reduces the volume of requests the target site sees from any single IP and improves the efficiency and stability of the crawler.
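The store-then-pick-at-random idea can be sketched as a small in-memory class. The class name ProxyPool and its methods are hypothetical, and dropping a proxy after a fixed number of consecutive failures is an added assumption rather than something the description above prescribes.

```php
<?php
declare(strict_types=1);

final class ProxyPool
{
    /** @var array<string, int> proxy address => consecutive failure count */
    private array $failures = [];
    private int $maxFailures;

    /** @param string[] $proxies addresses in "host:port" form */
    public function __construct(array $proxies, int $maxFailures = 3)
    {
        $this->maxFailures = $maxFailures;
        foreach ($proxies as $proxy) {
            $this->failures[$proxy] = 0;
        }
    }

    /** Returns a randomly chosen proxy, or null when the pool is empty. */
    public function pick(): ?string
    {
        return $this->failures === [] ? null : (string) array_rand($this->failures);
    }

    /** Counts a failure and drops the proxy once it fails too often in a row. */
    public function reportFailure(string $proxy): void
    {
        if (!isset($this->failures[$proxy])) {
            return;
        }
        if (++$this->failures[$proxy] >= $this->maxFailures) {
            unset($this->failures[$proxy]);
        }
    }

    /** Resets the failure count after a successful request. */
    public function reportSuccess(string $proxy): void
    {
        if (isset($this->failures[$proxy])) {
            $this->failures[$proxy] = 0;
        }
    }

    /** Number of proxies still in the pool. */
    public function count(): int
    {
        return count($this->failures);
    }
}
```

With cURL, the address returned by `pick()` can be passed to the request through the `CURLOPT_PROXY` option, and the result of the request reported back with `reportSuccess()` or `reportFailure()`.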

In short, by following the robots.txt specification, setting request headers, limiting access frequency, using random IP proxies, using CAPTCHA recognition, and using a proxy pool, developers can effectively reduce the risk of their PHP crawler's IP being banned. Of course, to protect their own rights and interests, developers must comply with the law and refrain from illegal activity when developing PHP crawlers. Crawler development also calls for care: keep up with the anti-crawling mechanisms of the target sites and address problems in a targeted way, so that crawler technology can better serve society.

The above is the detailed content of PHP crawler best practices: how to avoid IP bans. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

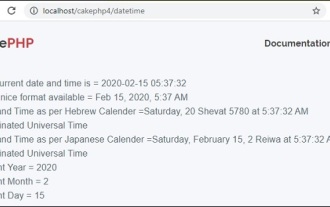

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

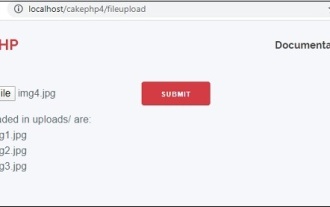

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

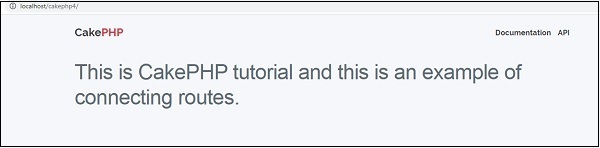

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

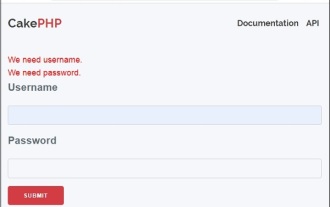

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

Working with database in CakePHP is very easy. We will understand the CRUD (Create, Read, Update, Delete) operations in this chapter.