Web crawler implementation based on PHP: extract key information from web pages

With the rapid growth of the Internet, websites generate a large amount of information every day, including text, pictures, and videos. For anyone who needs to understand and analyze this data comprehensively, collecting it by hand is impractical.

Web crawlers were created to solve this problem. A web crawler is an automated program that fetches pages from the Internet and extracts specific information from them. This article explains how to implement a simple web crawler in PHP.

1. How web crawlers work

A web crawler visits websites and automatically collects data from their pages. To extract anything, the crawler first has to download a page, parse it, and locate the information it needs. Web pages are usually written in a markup language such as HTML or XML, so the crawler parses them according to that language's syntax.

Once a page has been downloaded and parsed, the crawler can extract specific information with XPath expressions, which query the parsed document, or with regular expressions, which match the raw markup. The extracted information can be text or references to other data such as pictures and videos.
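To make the two extraction styles concrete, here is a minimal sketch that reads the page title from a small HTML string, first with a regular expression and then with XPath. The sample markup and the variable names are made up for illustration; regular expressions tend to break on irregular markup, so the XPath route is usually the safer one for anything beyond simple patterns.

```php
<?php
// Hypothetical sample page; a real crawler would download this.
$html = '<html><head><title>Example Domain</title></head>'
      . '<body><h1>Hello</h1><p>First paragraph.</p></body></html>';

// Regular expression: matches the raw text, no parsing step needed.
$titleByRegex = null;
if (preg_match('/<title>(.*?)<\/title>/is', $html, $matches)) {
    $titleByRegex = trim($matches[1]);
}

// XPath: queries the parsed DOM tree.
libxml_use_internal_errors(true);   // collect parse warnings instead of printing them
$dom = new DOMDocument();
$dom->loadHTML($html);
libxml_clear_errors();

$xpath = new DOMXPath($dom);
// string(...) makes evaluate() return the text of the first matching node
$titleByXPath = trim($xpath->evaluate('string(//title)'));

echo $titleByRegex, PHP_EOL;
echo $titleByXPath, PHP_EOL;
```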

2. Implementing a web crawler in PHP

- Download the web page

PHP's file_get_contents function can fetch the raw HTML of a web page, provided that allow_url_fopen is enabled in php.ini, as in the following example:

$html = file_get_contents('http://www.example.com/');

- Parse the web page

Before extracting anything, we use PHP's DOMDocument class to convert the page into a DOM object that the later steps can work with, as in the following example:

$dom = new DOMDocument();
// The @ operator suppresses the warnings libxml raises on malformed HTML
@$dom->loadHTML($html);
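The download and parsing steps above can be written more defensively. The following is a sketch under stated assumptions, not a definitive implementation: the function names fetchHtml and parseHtml, the 10-second timeout, and the User-Agent string are all hypothetical choices. It checks the return value of file_get_contents, which is false on failure, and uses libxml_use_internal_errors instead of the @ operator so that parse warnings are collected rather than silenced wholesale.

```php
<?php
/**
 * Download a page and return its HTML, or null on failure.
 * The timeout and User-Agent values are illustrative assumptions.
 */
function fetchHtml(string $url, int $timeoutSeconds = 10): ?string
{
    $context = stream_context_create([
        'http' => [
            'timeout'    => $timeoutSeconds,
            'user_agent' => 'ExampleCrawler/0.1',   // hypothetical crawler name
        ],
    ]);

    // file_get_contents returns false on failure; @ hides the warning it emits
    $html = @file_get_contents($url, false, $context);

    return $html === false ? null : $html;
}

/**
 * Parse HTML into a DOMDocument, collecting libxml warnings
 * instead of printing them. Returns null for empty input.
 */
function parseHtml(string $html): ?DOMDocument
{
    if (trim($html) === '') {
        return null;
    }

    $previous = libxml_use_internal_errors(true);
    $dom = new DOMDocument();
    $loaded = $dom->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);   // restore the caller's setting

    return $loaded ? $dom : null;
}
```

A caller would pass the result of fetchHtml('http://www.example.com/') to parseHtml only when it is not null, and skip the page otherwise.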

After the conversion, we can use the methods provided by the DOMDocument and DOMElement classes to extract information from the page, as in the following example:

$nodeList = $dom->getElementsByTagName('h1');
foreach ($nodeList as $node) {
    echo $node->nodeValue;
}

This code extracts every h1 heading in the page and prints its content.
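The same approach works for attributes as well as text. As a minimal sketch, the following collects the target and label of every link in a page using getElementsByTagName and getAttribute. The sample markup is hypothetical and stands in for a downloaded page.

```php
<?php
// Hypothetical sample markup standing in for a downloaded page.
$html = '<html><body>'
      . '<a href="/about">About us</a>'
      . '<a href="http://www.example.com/contact">Contact</a>'
      . '<a>No target</a>'
      . '</body></html>';

libxml_use_internal_errors(true);   // collect parse warnings instead of printing them
$dom = new DOMDocument();
$dom->loadHTML($html);
libxml_clear_errors();

$links = [];
foreach ($dom->getElementsByTagName('a') as $anchor) {
    // getAttribute returns an empty string when the attribute is absent
    $href = $anchor->getAttribute('href');
    if ($href === '') {
        continue;
    }
    $links[] = [
        'url'  => $href,
        'text' => trim($anchor->textContent),
    ];
}

print_r($links);
```

Relative URLs such as /about are kept as found; a crawler that follows links would still need to resolve them against the page's address.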

- Extract information using XPath expressions

XPath is a query language for selecting nodes in an XML or HTML document. In PHP, the DOMXPath class evaluates XPath expressions against a DOMDocument, as in the following example:

$xpath = new DOMXPath($dom);
$nodeList = $xpath->query('//h1');
foreach ($nodeList as $node) {
    echo $node->nodeValue;
}

This code is similar to the previous example, but uses an XPath expression to extract the h1 headings.
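XPath becomes more useful once predicates are involved, because it can select elements by attribute and then query inside each match. The following sketch assumes a hypothetical product listing; the class names product and price are invented for the example and would differ on a real site.

```php
<?php
// Hypothetical product listing; the class names are assumptions.
$html = '<html><body>'
      . '<div class="product"><h2>Keyboard</h2><span class="price">49.90</span></div>'
      . '<div class="product"><h2>Mouse</h2><span class="price">19.50</span></div>'
      . '<div class="ad"><h2>Sponsored</h2></div>'
      . '</body></html>';

libxml_use_internal_errors(true);   // collect parse warnings instead of printing them
$dom = new DOMDocument();
$dom->loadHTML($html);
libxml_clear_errors();

$xpath = new DOMXPath($dom);

$products = [];
// Select only the div elements whose class attribute is exactly "product"
foreach ($xpath->query('//div[@class="product"]') as $productNode) {
    // Passing a context node makes the relative expressions search inside it
    $name  = $xpath->evaluate('string(./h2)', $productNode);
    $price = $xpath->evaluate('string(./span[@class="price"])', $productNode);
    $products[] = ['name' => $name, 'price' => (float) $price];
}

print_r($products);
```

Note that @class="product" matches only when the attribute value is exactly that string; elements carrying several classes would need a contains() test instead.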

- Store the data

Finally, we store the extracted data in a database or a file for later use. In this article we use PHP's file_put_contents function to save data to a file, as in the following example:

$file = 'result.txt';
$data = 'Data to be saved';
file_put_contents($file, $data);

This code stores the string 'Data to be saved' into the file 'result.txt'.
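By default file_put_contents overwrites the target file, which is rarely what a crawler wants when it saves results page by page. One possible approach, sketched below, is to append each extracted record as a line of JSON. The function names appendRecord and readRecords and the record fields are hypothetical; a database would be the more usual choice for larger crawls.

```php
<?php
/**
 * Append one extracted record per line as JSON ("JSON Lines").
 * Returns the number of bytes written.
 */
function appendRecord(string $file, array $record): int
{
    $line = json_encode($record, JSON_UNESCAPED_UNICODE | JSON_UNESCAPED_SLASHES);
    if ($line === false) {
        throw new RuntimeException('Record cannot be encoded: ' . json_last_error_msg());
    }

    // FILE_APPEND keeps earlier results; LOCK_EX guards against concurrent writers
    $bytes = file_put_contents($file, $line . PHP_EOL, FILE_APPEND | LOCK_EX);
    if ($bytes === false) {
        throw new RuntimeException("Cannot write to $file");
    }

    return $bytes;
}

/** Read all records back as a list of associative arrays. */
function readRecords(string $file): array
{
    $lines = file($file, FILE_IGNORE_NEW_LINES | FILE_SKIP_EMPTY_LINES);
    if ($lines === false) {
        return [];
    }

    return array_map(fn(string $line) => json_decode($line, true), $lines);
}
```

Each call such as appendRecord('result.txt', ['url' => 'http://www.example.com/', 'title' => 'Example Domain']) adds one line, so the file can be processed later without loading everything into memory at once.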

3. Conclusion

This article introduced the basics of implementing a web crawler in PHP: downloading a page, parsing it, extracting information, and storing the result. Web crawling is a complex topic, and this article covers only the fundamentals; if you are interested, there is much more to study.

The above is the detailed content of Web crawler implementation based on PHP: extract key information from web pages. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1378

1378

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

How do you parse and process HTML/XML in PHP?

Feb 07, 2025 am 11:57 AM

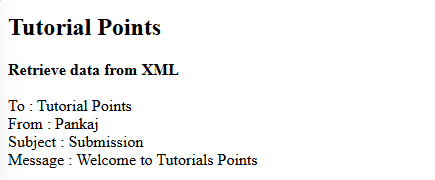

This tutorial demonstrates how to efficiently process XML documents using PHP. XML (eXtensible Markup Language) is a versatile text-based markup language designed for both human readability and machine parsing. It's commonly used for data storage an

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

PHP Program to Count Vowels in a String

Feb 07, 2025 pm 12:12 PM

A string is a sequence of characters, including letters, numbers, and symbols. This tutorial will learn how to calculate the number of vowels in a given string in PHP using different methods. The vowels in English are a, e, i, o, u, and they can be uppercase or lowercase. What is a vowel? Vowels are alphabetic characters that represent a specific pronunciation. There are five vowels in English, including uppercase and lowercase: a, e, i, o, u Example 1 Input: String = "Tutorialspoint" Output: 6 explain The vowels in the string "Tutorialspoint" are u, o, i, a, o, i. There are 6 yuan in total

Explain JSON Web Tokens (JWT) and their use case in PHP APIs.

Apr 05, 2025 am 12:04 AM

Explain JSON Web Tokens (JWT) and their use case in PHP APIs.

Apr 05, 2025 am 12:04 AM

JWT is an open standard based on JSON, used to securely transmit information between parties, mainly for identity authentication and information exchange. 1. JWT consists of three parts: Header, Payload and Signature. 2. The working principle of JWT includes three steps: generating JWT, verifying JWT and parsing Payload. 3. When using JWT for authentication in PHP, JWT can be generated and verified, and user role and permission information can be included in advanced usage. 4. Common errors include signature verification failure, token expiration, and payload oversized. Debugging skills include using debugging tools and logging. 5. Performance optimization and best practices include using appropriate signature algorithms, setting validity periods reasonably,

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

7 PHP Functions I Regret I Didn't Know Before

Nov 13, 2024 am 09:42 AM

If you are an experienced PHP developer, you might have the feeling that you’ve been there and done that already.You have developed a significant number of applications, debugged millions of lines of code, and tweaked a bunch of scripts to achieve op

Explain late static binding in PHP (static::).

Apr 03, 2025 am 12:04 AM

Explain late static binding in PHP (static::).

Apr 03, 2025 am 12:04 AM

Static binding (static::) implements late static binding (LSB) in PHP, allowing calling classes to be referenced in static contexts rather than defining classes. 1) The parsing process is performed at runtime, 2) Look up the call class in the inheritance relationship, 3) It may bring performance overhead.

What are PHP magic methods (__construct, __destruct, __call, __get, __set, etc.) and provide use cases?

Apr 03, 2025 am 12:03 AM

What are PHP magic methods (__construct, __destruct, __call, __get, __set, etc.) and provide use cases?

Apr 03, 2025 am 12:03 AM

What are the magic methods of PHP? PHP's magic methods include: 1.\_\_construct, used to initialize objects; 2.\_\_destruct, used to clean up resources; 3.\_\_call, handle non-existent method calls; 4.\_\_get, implement dynamic attribute access; 5.\_\_set, implement dynamic attribute settings. These methods are automatically called in certain situations, improving code flexibility and efficiency.