The most complete GPT-4 guide is here! OpenAI officially releases its strategies, distilling six months of accumulated experience

The official GPT-4 user guide is now available!

You heard that right: this time you don't need to take notes yourself, because OpenAI has compiled the guide for you.

Reportedly, it gathers six months of collective user experience, so tips and tricks from all of us have been folded into it.

Although it boils down to just six major strategies, the details are anything but vague.

Ordinary GPT-4 users can pick up tips and tricks from this cheat sheet, and application developers may find some inspiration as well.

Readers quickly chimed in with their own takeaways:

So interesting! The core ideas boil down to two points. First, write more specifically and include detailed instructions. Second, break complex tasks into smaller prompts.

OpenAI stated that the guide is currently intended for GPT-4. (Of course, nothing stops you from trying it on other GPT models.)

Let's take a look at what this playbook has to offer.

All six strategies at a glance

Strategy 1: Write clear instructions

Keep in mind that the model can't read your mind, so you have to state your requirements clearly.

When the model's output is too wordy, ask it for brief, concise replies. Conversely, if the output is too simplistic, don't hesitate to ask for expert-level writing.

If you don't like the format of GPT's output, show it the format you expect and ask it to follow the same format.

In short, the less the model has to guess about your intent, the more likely the results are to meet your expectations.

Practical tips:

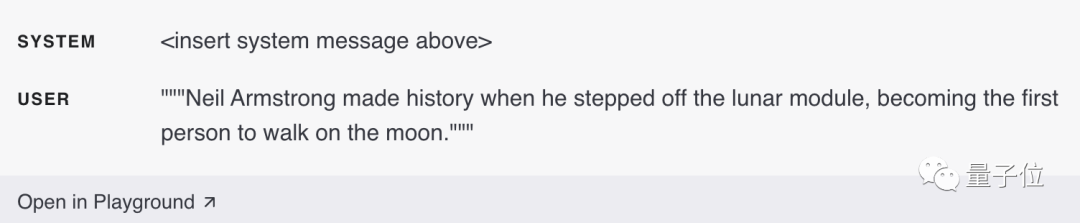

1. Include details to get more relevant answers

To make the output closely match what you asked for, give the model all the important details.

For example, instead of simply asking GPT-4 to "Summarize the meeting minutes", spell out as much detail as possible in the request:

Summarize the meeting minutes in a single paragraph. Then write a Markdown list of the attendees and each of their main points. Finally, list any next steps the attendees suggested.

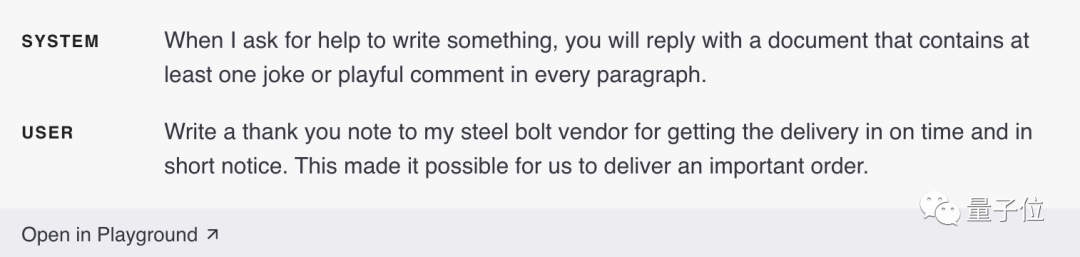

2. Ask the model to play a specific role

Specifying a role in the system message makes it easier for GPT-4 to stay in that role, because the system message carries more weight than a request made in the conversation itself.

For example, you can specify that whenever it replies with a document, every paragraph must contain a playful comment:

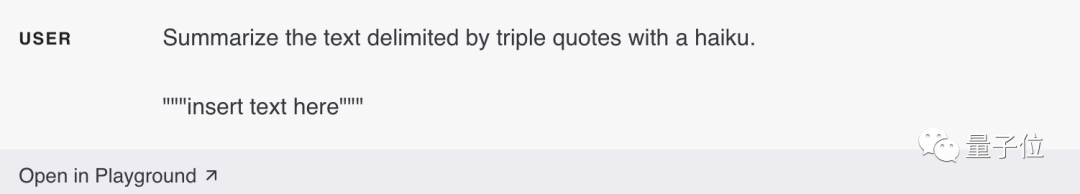
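In code, this usually means putting the persona in a message with the `system` role ahead of the user's request. The sketch below assumes the widely used chat message format (a list of dicts with `role` and `content` keys); the helper name `build_messages` and the persona wording are illustrative, and the actual API call is left out so the example runs offline.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Put the persona in the system message and the request in a user message."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# Illustrative persona instruction modeled on the example above.
PERSONA = (
    "When I ask for help writing something, reply with a document in which "
    "every paragraph contains at least one playful comment."
)

messages = build_messages(PERSONA, "Write a thank-you note to my steel bolt supplier.")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The resulting list is what you would pass as the `messages` argument of a chat completion request.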

3. Use delimiters to clearly mark distinct parts of the input

Use delimiters such as """triple quotation marks""" to separate sections of text that should be treated differently.

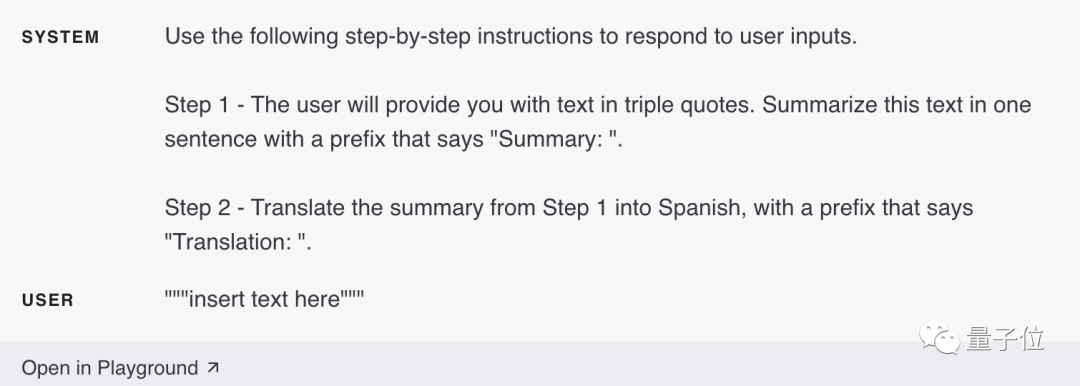

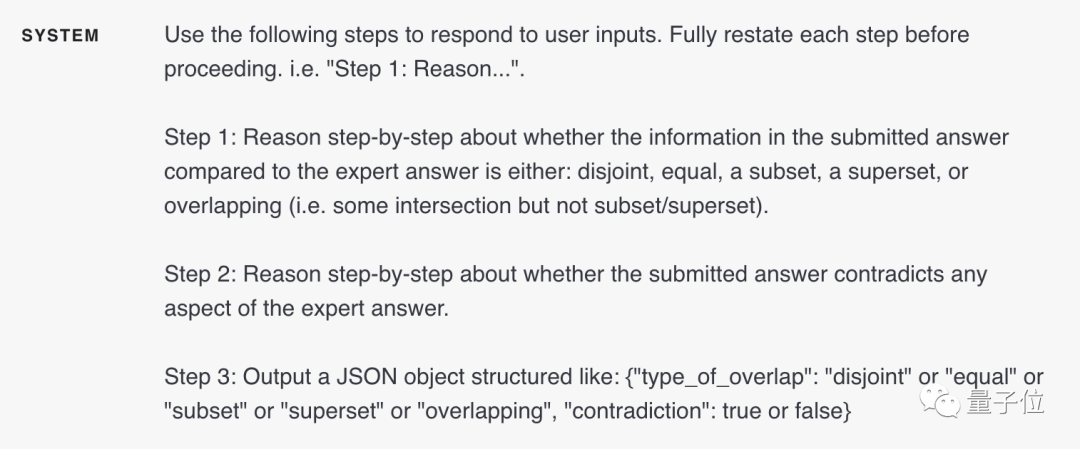
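A minimal sketch of the delimiter tactic: the instruction stays outside the quotes and the text to be processed goes inside. The helper names `delimit` and `summarize_prompt` are hypothetical.

```python
def delimit(text: str) -> str:
    """Wrap one section of input in triple quotation marks."""
    return f'"""{text}"""'


def summarize_prompt(article: str) -> str:
    # Instructions stay outside the delimiters and the text to process stays inside,
    # which helps the model tell the two apart.
    return "Summarize the text delimited by triple quotes in one sentence.\n\n" + delimit(article)


print(summarize_prompt("GPT-4 follows clear, specific instructions more reliably."))
```

If the input itself might contain triple quotes, a different delimiter (for example, XML-style tags) avoids ambiguity.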

4. Clearly specify the steps required to complete the task

Some tasks work better when performed step by step. Spelling out the steps explicitly makes it easier for the model to follow them and produce the desired output. For example, you can list the steps in the system message.

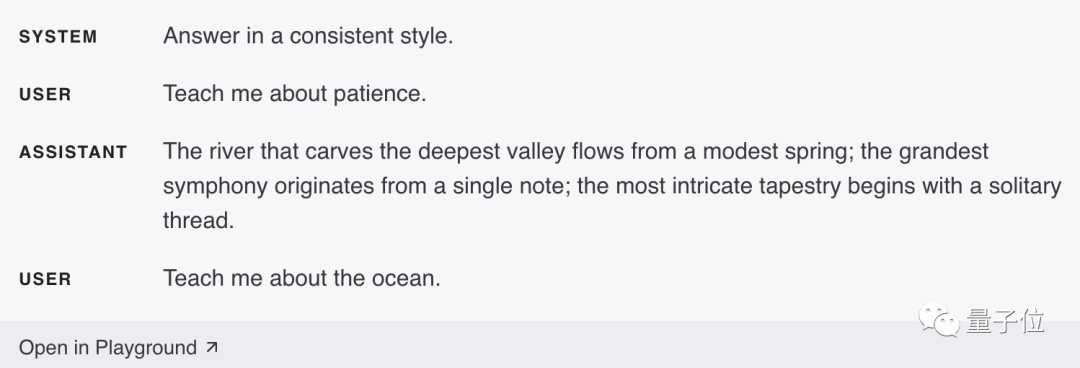

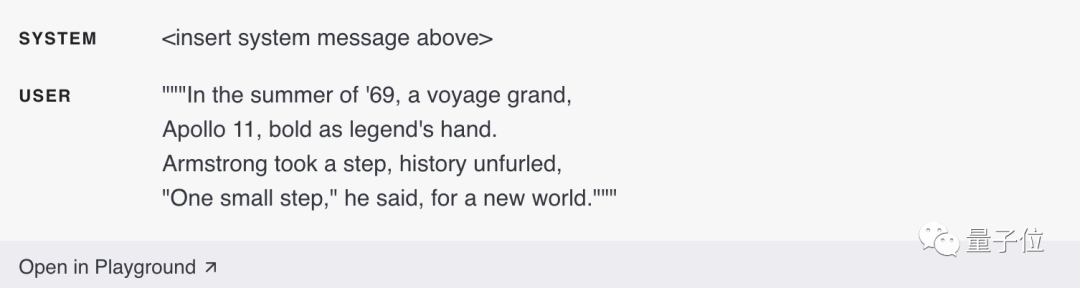
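One way to assemble such a system message programmatically is to number the steps so the model can refer back to them. This is a sketch; the two steps (summarize, then translate) and the function name are illustrative assumptions.

```python
STEPS = [
    "Summarize the text in triple quotes in one sentence, prefixed with 'Summary: '.",
    "Translate the summary from Step 1 into Spanish, prefixed with 'Translation: '.",
]


def steps_system_message(steps: list[str]) -> str:
    """Number the steps so the model can follow them in order."""
    lines = ["Use the following step-by-step instructions to respond to user inputs."]
    lines += [f"Step {i} - {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join(lines)


print(steps_system_message(STEPS))
```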

5. Provide examples

If you want the model's output to follow a particular style that is hard to describe in words, provide examples instead. For example, after you supply one example, a short request such as "teach me patience" is enough for the model to reply vividly in the same style.
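Few-shot prompting is commonly expressed as earlier user/assistant turns that show the desired style, followed by the real request. A minimal sketch; the example pair below is made up for illustration.

```python
EXAMPLES = [
    (
        "Teach me about persistence.",
        "A river does not cut through stone by force; it cuts through by never stopping.",
    ),
]


def few_shot_messages(examples: list[tuple[str, str]], query: str) -> list[dict[str, str]]:
    """Show example request/reply pairs first, then ask the real question."""
    messages = [{"role": "system", "content": "Answer in a consistent style."}]
    for request, reply in examples:
        messages.append({"role": "user", "content": request})
        messages.append({"role": "assistant", "content": reply})
    messages.append({"role": "user", "content": query})
    return messages


for m in few_shot_messages(EXAMPLES, "Teach me patience."):
    print(m["role"], "->", m["content"])
```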

6. Specify the required output length

You can also ask the model to produce output of a specific length, measured in words, sentences, paragraphs, bullet points, and so on. However, requests for an exact number of words or characters are followed less precisely.
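Because exact counts are followed only approximately, an application can check the length of a reply before accepting it and re-ask if needed. A minimal sketch; the helper names, the blank-line paragraph rule, the bullet markers, and the 20% tolerance are all assumptions.

```python
def count_words(text: str) -> int:
    return len(text.split())


def count_paragraphs(text: str) -> int:
    # Assumes paragraphs are separated by blank lines.
    return len([p for p in text.split("\n\n") if p.strip()])


def count_bullets(text: str) -> int:
    # Assumes bullets start with "- " or "* ".
    return sum(1 for line in text.splitlines() if line.lstrip().startswith(("- ", "* ")))


def within_length(text: str, target_words: int, tolerance: float = 0.2) -> bool:
    """Accept a reply whose word count is within +/- tolerance of the target."""
    return abs(count_words(text) - target_words) <= tolerance * target_words


reply = "- point one\n- point two\n\nClosing paragraph."
print(count_bullets(reply), count_paragraphs(reply))
```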

Strategy 2: Provide reference text

When it comes to esoteric topics, citations, URLs, and the like, GPT models may confidently make things up.

Providing reference text to GPT-4 can reduce fabricated answers and make its responses more reliable.

Practical tips:

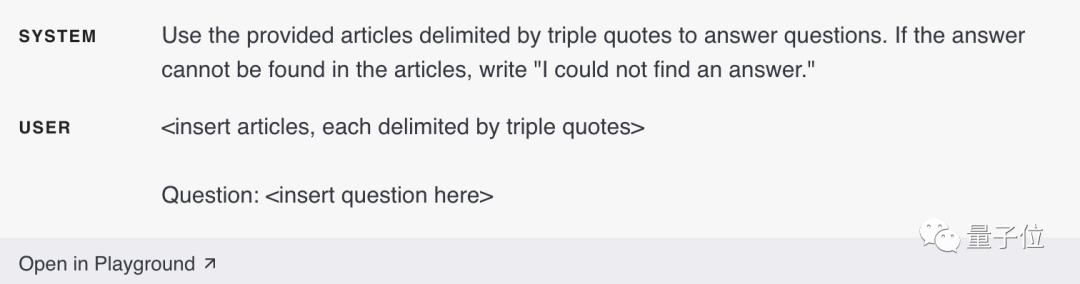

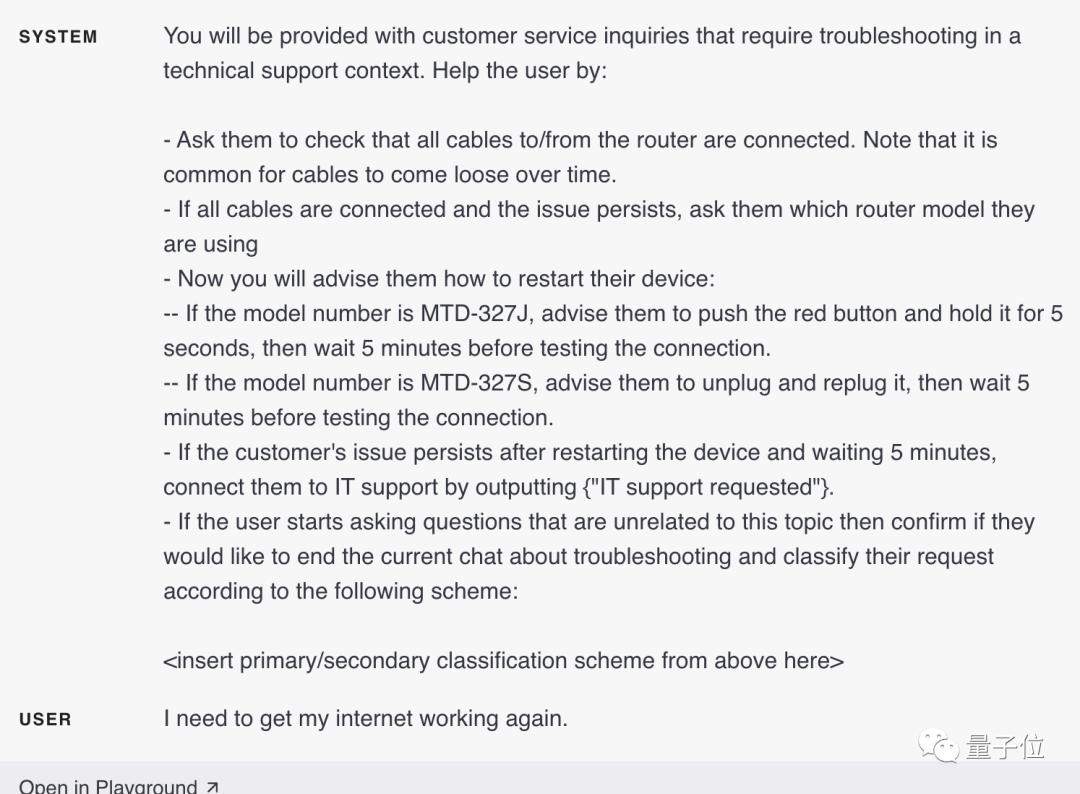

1. Instruct the model to answer using reference text

If we can supply trusted information relevant to the question, we can instruct the model to compose its answer from that information.

2. Instruct the model to answer with citations from the reference text

If the input has already been supplemented with relevant information, we can also ask the model to cite the provided information directly in its answer.

Note that the cited passages in the output can then be verified programmatically, for example by string matching against the provided text.
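A sketch of such a prompt, assuming the articles are wrapped in triple quotes and the model is given an explicit fallback phrase for when the answer is not in the text. The wording, the fallback string, and the function name are illustrative.

```python
FALLBACK = "I could not find an answer."


def reference_prompt(articles: list[str], question: str) -> str:
    """Ask the model to answer only from the supplied articles."""
    instructions = (
        "Use the provided articles delimited by triple quotes to answer the question. "
        f'If the answer cannot be found in the articles, write "{FALLBACK}"'
    )
    quoted = "\n\n".join(f'"""{article}"""' for article in articles)
    return f"{instructions}\n\n{quoted}\n\nQuestion: {question}"


print(reference_prompt(["GPT-4 was released by OpenAI in March 2023."], "Who released GPT-4?"))
```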
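Verification can be as simple as checking that every quoted passage occurs verbatim in the source. This sketch assumes a hypothetical citation format in which the model wraps each quote in `<quote>...</quote>`; whitespace differences are ignored.

```python
import re

# Hypothetical citation format: the model is told to wrap each quote in <quote>...</quote>.
QUOTE_PATTERN = re.compile(r"<quote>(.*?)</quote>", re.DOTALL)


def unverified_quotes(answer: str, document: str) -> list[str]:
    """Return every cited passage that does not appear verbatim in the document."""
    normalized_doc = " ".join(document.split())  # ignore whitespace differences
    return [
        quote
        for quote in QUOTE_PATTERN.findall(answer)
        if " ".join(quote.split()) not in normalized_doc
    ]


doc = "The Eiffel Tower was completed in 1889.  It is located in Paris."
answer = (
    "It was finished in 1889 <quote>The Eiffel Tower was completed in 1889.</quote> "
    "and <quote>It is located in Rome.</quote>"
)
print(unverified_quotes(answer, doc))
```

A non-empty result flags citations the model may have invented.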

Strategy 3: Split complex tasks

Compared with simple tasks, complex tasks tend to have higher error rates with GPT-4.

However, we can often redefine a complex task as a workflow of simpler tasks.

The outputs of earlier tasks are then used to construct the inputs of later ones.

Just like decomposing a complex system into a set of modular components in software engineering, decomposing a task into multiple modules can also make the model perform better.

Practical tips:

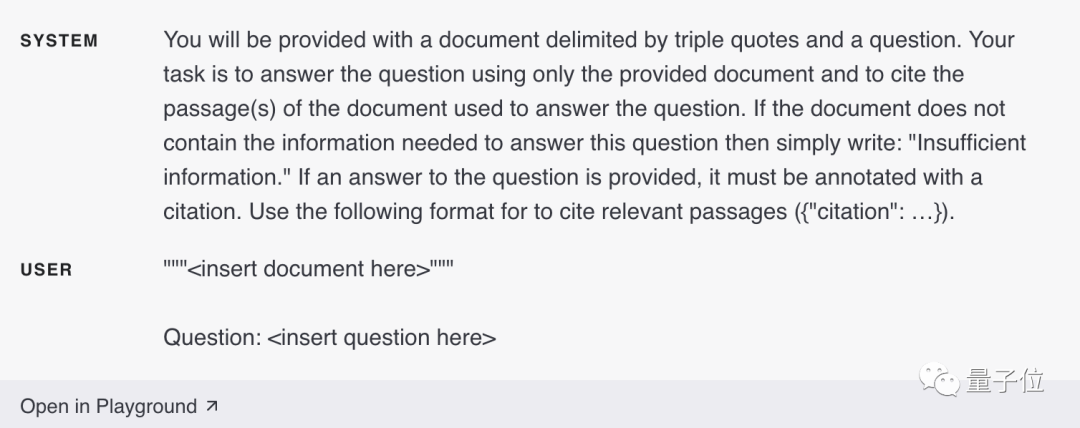

1. Use intent classification

For tasks that must handle many independent kinds of cases, first classify the type of query.

Then use that classification to decide which instructions to provide.

For example, for customer service applications, queries can be classified (billing, technical support, account management, general queries, etc.).

When a user asks:

I need to get my internet back to normal.

Classifying the query pins down what the user actually needs, so GPT-4 can be given a more specific set of instructions for the next step.

For example, suppose the user needs help with "troubleshooting".

You can then set the next step as:

Ask the user to check that all cables to the router are connected...
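The two-stage flow can be sketched as a lookup table: a first query asks the model for a category label, and the label selects the instructions for the second query. The category names and instruction texts are hypothetical, and the model calls themselves are omitted.

```python
CATEGORIES = {
    "billing": "Help the user understand charges, payments, and refunds.",
    "technical_support": (
        "Help the user troubleshoot. First ask them to check that all "
        "cables to the router are connected."
    ),
    "account_management": "Help the user update account details or close the account.",
    "general_inquiry": "Answer the user's general question politely and briefly.",
}

# First query: ask the model for a label only.
CLASSIFY_PROMPT = (
    "Classify the customer query into exactly one of these categories: "
    + ", ".join(CATEGORIES)
    + ". Reply with the category name only."
)


def instructions_for(label: str) -> str:
    """Second step: map the model's label to the instructions for the next query."""
    return CATEGORIES.get(label.strip().lower(), CATEGORIES["general_inquiry"])


# Suppose the model labeled "I need to get my internet back to normal." as technical support.
print(instructions_for("technical_support"))
```

Falling back to a general category keeps the flow working if the model returns an unexpected label.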

2. Summarize or filter previous conversations

Because GPT-4's context window is limited, a conversation cannot continue indefinitely within a single window.

There are workarounds, though.

One is to summarize earlier turns. Once the input reaches a predetermined length threshold, trigger a query that summarizes part of the conversation; the summary can then become part of the system message.

Alternatively, earlier parts of the conversation can be summarized in the background as the conversation proceeds.
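A minimal sketch of threshold-triggered summarization. Token counts are approximated as characters divided by four (a rough heuristic, not a real tokenizer), and `summarize` stands in for the extra model query; the threshold and the number of recent turns kept are assumptions.

```python
from typing import Callable

Message = dict[str, str]


def approx_tokens(messages: list[Message]) -> int:
    # Rough heuristic (an assumption): about 4 characters per token for English.
    return sum(len(m["content"]) for m in messages) // 4


def compact_history(
    system: Message,
    history: list[Message],
    summarize: Callable[[list[Message]], str],
    max_tokens: int = 3000,
    keep_recent: int = 4,
) -> tuple[Message, list[Message]]:
    """Once the history passes the threshold, fold older turns into the system message."""
    if approx_tokens([system, *history]) <= max_tokens or len(history) <= keep_recent:
        return system, history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    summary = summarize(older)  # in a real app this is one more model query
    new_system = {
        "role": "system",
        "content": f"{system['content']}\n\nSummary of the earlier conversation: {summary}",
    }
    return new_system, recent
```

Keeping the last few turns verbatim preserves the immediate context while older material survives only as a summary.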

Another approach is to use embedding-based search to retrieve the relevant parts of earlier conversation.

3. Summarize long documents paragraph by paragraph and recursively construct a complete summary.

The problem here, again, is text that is too long.

For example, if you want GPT-4 to summarize a book, you can use a series of queries to summarize each section of the book.

The section summaries can then be concatenated and summarized again to produce an overall summary.

This process can be repeated recursively until the entire book is summarized.

However, understanding later sections may require information from earlier ones. Here is a useful trick:

When summarizing the current section, also include a summary of the text that precedes it.

Simply put, prepend the summary of the earlier sections to the current section, and then summarize the result.
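The procedure above can be sketched as a recursive function. Sections are split by word count, each section is summarized together with the previous section's summary, and the concatenated summaries are summarized again until they fit in one section. The `summarize` callable stands in for a model query, and the section size is an assumption.

```python
from typing import Callable


def chunk(text: str, max_words: int) -> list[str]:
    """Split text into consecutive sections of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(text: str, summarize: Callable[[str], str], max_words: int = 2000) -> str:
    """Summarize section by section, then recursively summarize the summaries."""
    sections = chunk(text, max_words)
    if len(sections) <= 1:
        return summarize(text)
    partials: list[str] = []
    for section in sections:
        # Prepend the previous section's summary (which itself carried earlier context).
        previous = partials[-1] if partials else ""
        prompt = f"Context so far: {previous}\n\nSection: {section}" if previous else section
        partials.append(summarize(prompt))
    combined = " ".join(partials)
    if len(combined.split()) >= len(text.split()):
        return summarize(combined)  # summaries did not shrink the text; stop recursing
    return summarize_long(combined, summarize, max_words)
```

The shrinkage check is a safety guard so the recursion cannot loop forever if the summaries fail to get shorter.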

OpenAI has previously studied this approach to summarizing books using a model trained from GPT-3.

Strategy 4: Give GPT time to "think"

If you are asked to calculate 17 times 28, you may not know the answer immediately, but you can work it out given a little time.
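For instance, one way to get there is to break the product into easier pieces, which is exactly the kind of intermediate reasoning this strategy asks the model to write out:

```latex
17 \times 28 = 17 \times (30 - 2) = 510 - 34 = 476
```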

Similarly, GPT-4 does not take time to think when it receives a question; it tries to answer right away, which can lead to reasoning errors.

Therefore, before asking the model for an answer, you can ask it to work through a chain of reasoning first, which helps it arrive at the correct answer.

Practical tips:

1. Have the model work out its own solution first

We sometimes get better results when we explicitly instruct the model to reason from first principles before reaching a conclusion.

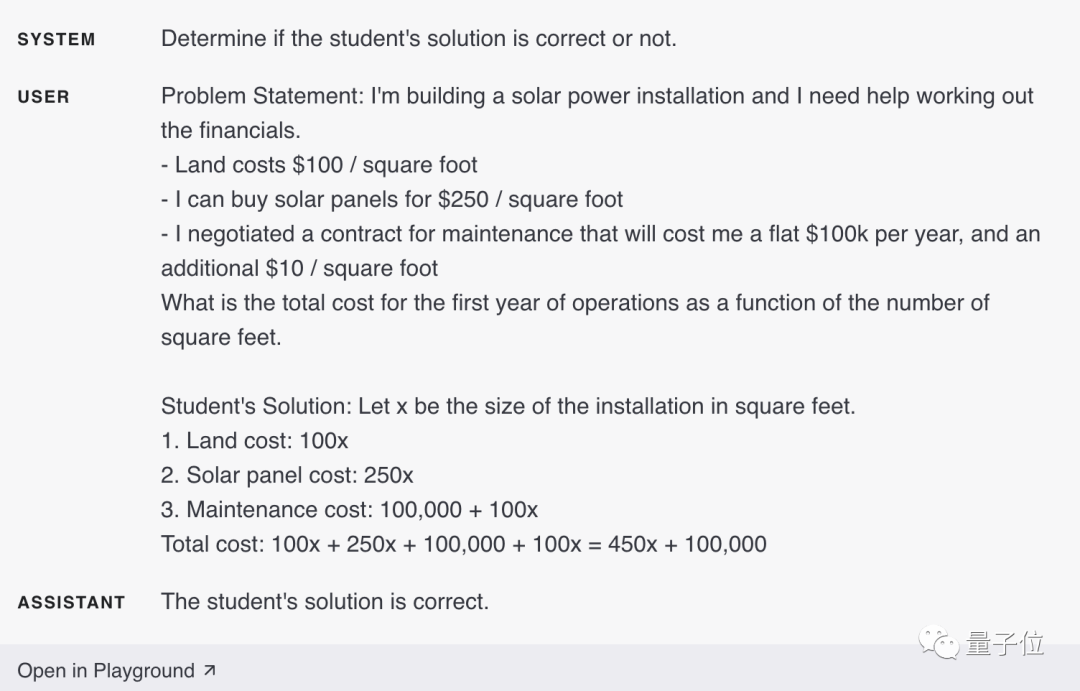

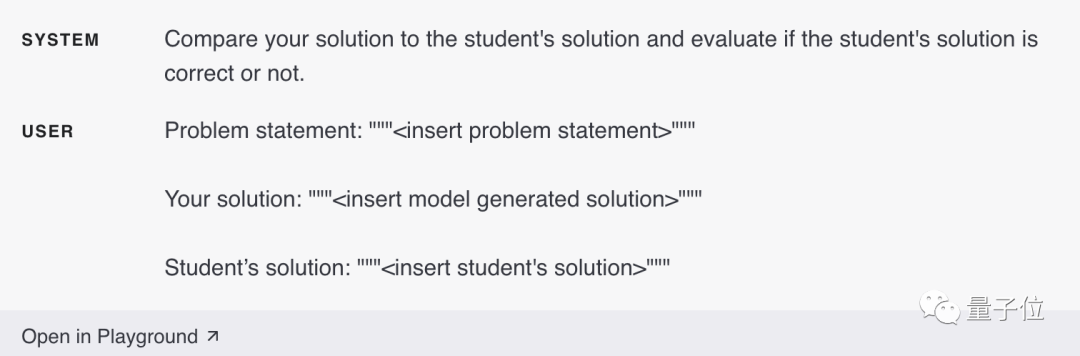

For example, let’s say we want the model to evaluate a student’s solution to a math problem.

The most direct method is to simply ask the model whether the student's answer is correct.

In the picture above, GPT-4 believes that the student’s solution is correct.

But in fact the student’s plan is wrong.

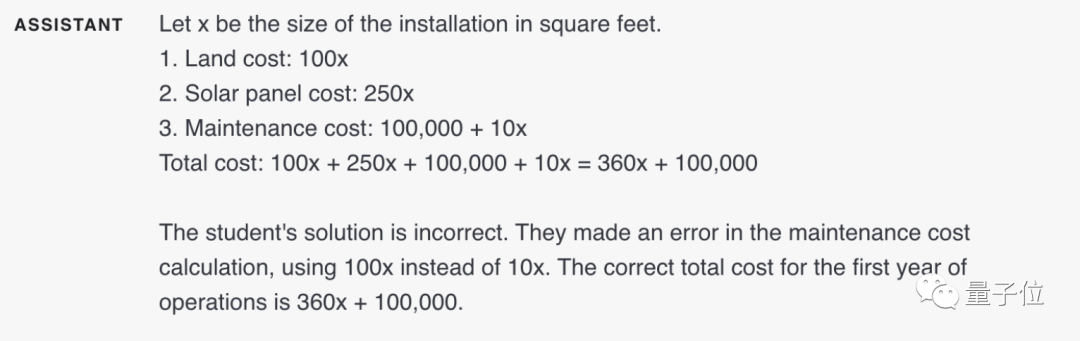

At this time, you can prompt the model to generate its own solution to make the model successfully notice this.

After generating its own solution and reasoning, the model realized that the previous student's solution was incorrect.
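A sketch of a grading request built this way. The system message paraphrases the tactic described above and is illustrative, not OpenAI's exact wording; the function name is hypothetical.

```python
GRADER_SYSTEM = (
    "First work out your own solution to the problem. Then compare your solution "
    "to the student's solution and evaluate whether the student's solution is correct. "
    "Don't decide whether the student's solution is correct until you have solved "
    "the problem yourself."
)


def grading_messages(problem: str, student_solution: str) -> list[dict[str, str]]:
    """Build a grading request that asks the model to solve the problem first."""
    return [
        {"role": "system", "content": GRADER_SYSTEM},
        {
            "role": "user",
            "content": f"Problem statement: {problem}\n\nStudent's solution: {student_solution}",
        },
    ]
```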

2. Hide the reasoning process

As described above, it helps to have the model reason through a problem before it answers.

However, in some applications the reasoning the model uses to reach its final answer is not suitable for sharing with users.

For example, in homework tutoring we want to encourage students to work out their own answers, but the model's reasoning about a student's solution may give the answer away.

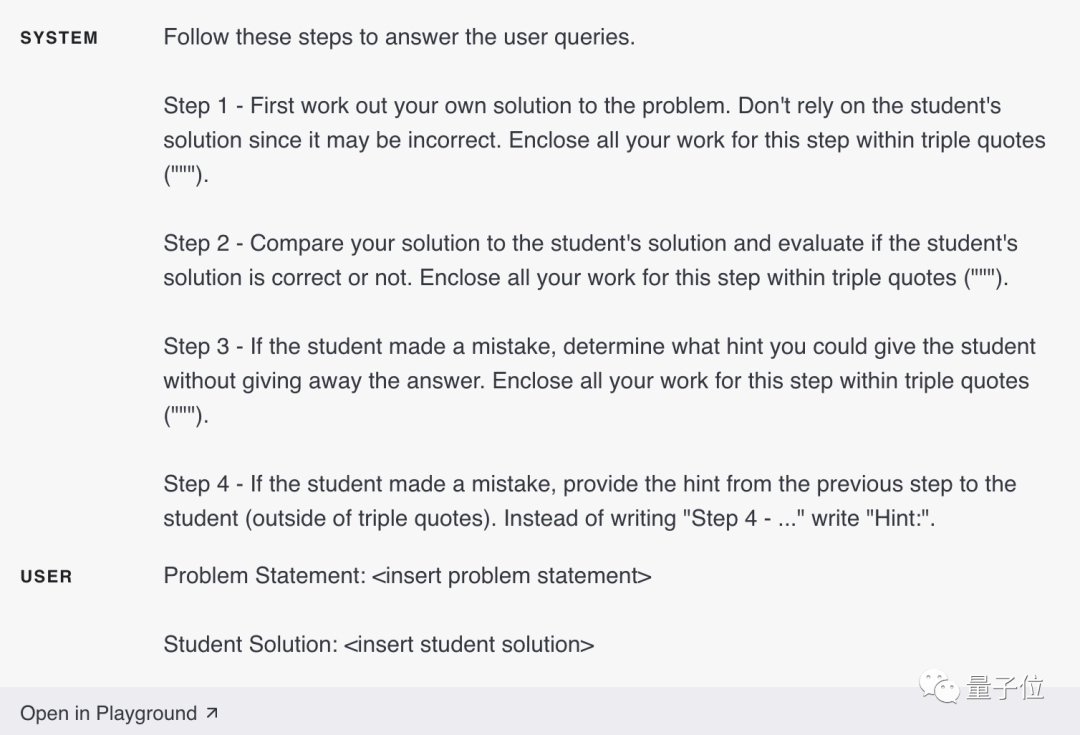

In this case, we can have the model use an "inner monologue" tactic: instruct it to put the parts of its output that should be hidden from the user into a structured format.

Before the output is presented to the user, it is parsed and only part of it is made visible.

Here is an example:

First, have the model work out its own solution (since the student's may be wrong), and then compare it with the student's solution.

If the student made a mistake in any step, have the model give a hint for that step rather than the complete correct solution.

If the student is still wrong, return to the previous step.
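A minimal sketch of the parsing side, assuming the model was told to keep its inner monologue inside triple quotes and to write only the student-facing hint outside them. The step wording and the names `TUTOR_STEPS` and `visible_part` are hypothetical.

```python
import re

TUTOR_STEPS = (
    'Step 1 - Work out your own solution inside triple quotes (""").\n'
    "Step 2 - Compare it with the student's solution, still inside triple quotes.\n"
    "Step 3 - If the student made a mistake, write a hint for that step outside the "
    "triple quotes, without revealing the full solution."
)

HIDDEN = re.compile(r'""".*?"""', re.DOTALL)


def visible_part(model_output: str) -> str:
    """Strip the triple-quoted inner monologue; keep only what the student may see."""
    return HIDDEN.sub("", model_output).strip()


raw = (
    '"""My solution: x = 4. The student wrote x = 5, so step 2 is wrong."""\n'
    "Hint: check how you moved the constant in step 2."
)
print(visible_part(raw))
```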

Alternatively, you can use a sequence of queries in which the outputs of all queries except the last are hidden from the user.

First, we ask the model to solve the problem on its own. Since this initial query doesn't need the student's solution, the solution can be left out, which has the added advantage that the model's own solution is not biased by the student's attempt.

Next, we have the model use all available information to evaluate the correctness of the student's solution.

Finally, we have the model use its own analysis to reply in the role of a tutor, with a system message such as:

You are a math tutor. If a student answers incorrectly, prompt the student without revealing the answer. If the student answers correctly, simply give them an encouraging comment.

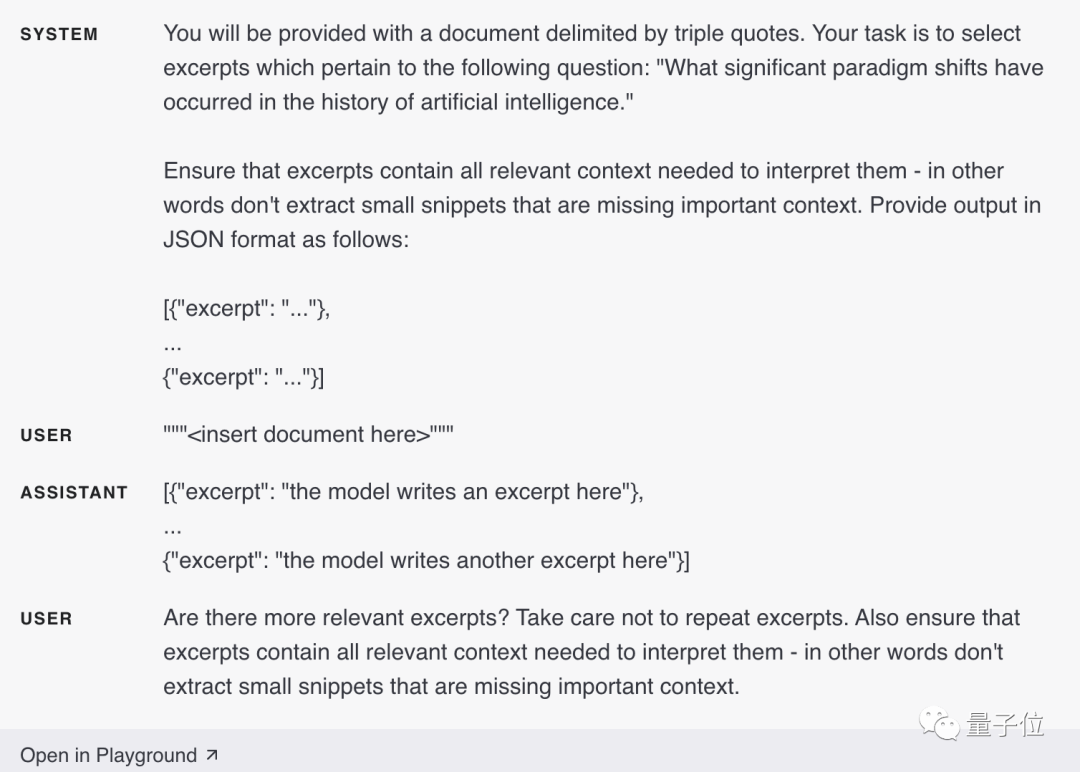
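The three-query pipeline can be sketched with a stand-in `ask(system, user)` function in place of a real API call. Only the return value of the last query would be shown to the student; the prompt texts for the first two queries are illustrative.

```python
from typing import Callable

# ask(system_message, user_message) -> model reply; a stand-in for a real API call.
Ask = Callable[[str, str], str]


def tutor_reply(ask: Ask, problem: str, student_solution: str) -> str:
    """Run three queries; only the last reply is meant to be shown to the student."""
    # Query 1: solve independently. The student's attempt is left out to avoid bias.
    own_solution = ask("Solve the following math problem step by step.", problem)
    # Query 2: check the student's work against the model's own solution (hidden).
    analysis = ask(
        "Compare the student's solution with the reference solution and "
        "identify the first incorrect step, if any.",
        f"Problem: {problem}\nReference solution: {own_solution}\n"
        f"Student's solution: {student_solution}",
    )
    # Query 3: the student-facing reply, the only output the student sees.
    return ask(
        "You are a math tutor. If the student made an error, give them a hint "
        "without revealing the answer. If the student is correct, simply offer "
        "an encouraging comment.",
        f"Problem: {problem}\nStudent's solution: {student_solution}\nAnalysis: {analysis}",
    )
```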

3. Ask the model whether it missed anything

Suppose we ask GPT-4 to list excerpts from a source document that are relevant to a particular question. After listing each excerpt, the model has to decide whether to write another one or stop.

If the source document is large, the model often stops too early and fails to list all the relevant excerpts.

In this case, better results can often be obtained by sending follow-up queries that ask the model to find any excerpts it missed on earlier passes.

In other words, when the required output is too long to produce in one pass, you can have the model check its work and fill in what is missing.
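A sketch of the follow-up loop: each round shows the model the excerpts found so far and asks for any that are missing, stopping when it replies with a sentinel or adds nothing new. The `ask` callable, the `NONE` sentinel, and the round limit are assumptions.

```python
from typing import Callable

NO_MORE = "NONE"  # hypothetical sentinel the model is told to reply with when done


def collect_excerpts(
    ask: Callable[[str], str], document: str, question: str, max_rounds: int = 5
) -> list[str]:
    """Repeatedly ask for relevant excerpts that are still missing from the list."""
    excerpts: list[str] = []
    for _ in range(max_rounds):
        found = "\n".join(f"- {e}" for e in excerpts) or "(none yet)"
        prompt = (
            f'Document:\n"""{document}"""\n\nQuestion: {question}\n\n'
            f"Excerpts listed so far:\n{found}\n\n"
            "Are there more relevant excerpts? List any missing ones, one per line, "
            f"or reply {NO_MORE}."
        )
        reply = ask(prompt).strip()
        if reply == NO_MORE:
            break
        new = [line.strip() for line in reply.splitlines() if line.strip()]
        new = [line for line in new if line not in excerpts]
        if not new:  # nothing new: stop instead of looping
            break
        excerpts.extend(new)
    return excerpts
```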

Strategy 5: Use external tools

GPT-4 is powerful, but it is not omnipotent.

We can use other tools to make up for GPT-4's weaknesses.

For example, pair it with a text retrieval system or a code execution engine.

When GPT-4 is answering a question, any subtasks that other tools can do more reliably or efficiently can be offloaded to them. This plays to each tool's strengths and lets GPT-4 perform at its best.

Practical tips:

1. Use embedding-based search to achieve efficient knowledge retrieval

This tactic was already mentioned earlier in the article.

If additional external information is provided in the input of the model, it will help the model generate better answers.

For example, if a user asks a question about a specific movie, it might be useful to add information about the movie (such as actors, director, etc.) to the model's input.

Embeddings enable efficient knowledge retrieval, so relevant information can be added to the model's input dynamically at run time.

A text embedding is a vector that can measure how related text strings are: similar or related strings lie closer together than unrelated ones. Combined with fast vector search algorithms, this means embeddings can be used for efficient knowledge retrieval.

Specifically, a text corpus can be split into chunks, and each chunk embedded and stored. Then, given a query, a vector search finds the stored chunks most relevant to the query.

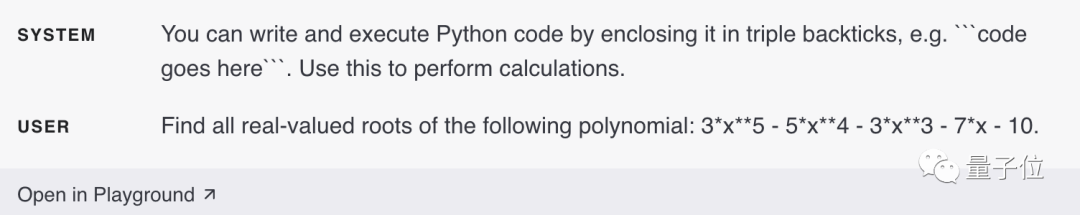
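The retrieval step can be sketched with cosine similarity over stored chunk embeddings. The 3-dimensional vectors below are made up for illustration (real ones come from an embedding model), and sorting every chunk is only practical for small corpora; production systems use a vector index instead.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; higher means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Return the k stored chunks whose embeddings are most similar to the query."""
    ranked = sorted(index, key=lambda item: cosine(query, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


# Made-up 3-dimensional "embeddings"; real ones come from an embedding model.
INDEX = [
    ("The film was directed by Jane Doe.", [0.9, 0.1, 0.0]),
    ("The film stars John Roe.", [0.7, 0.3, 0.0]),
    ("Bread dough needs yeast to rise.", [0.0, 0.1, 0.9]),
]
query = [1.0, 0.2, 0.0]  # pretend embedding of "Who made this movie?"
print(top_k(query, INDEX))
```

The retrieved chunks would then be inserted into the model's input, as in the movie example above.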

2. Use code execution for more accurate calculations or call external APIs

You cannot rely on the model alone to perform accurate calculations.

When needed, instruct the model to write and run code instead of doing the calculation itself.

You can instruct the model to put the code to be run in a specified format. After the output is generated, the code can be extracted and run. Finally, if needed, the output of the code execution engine (e.g., a Python interpreter) can be fed back to the model as input for the next query.

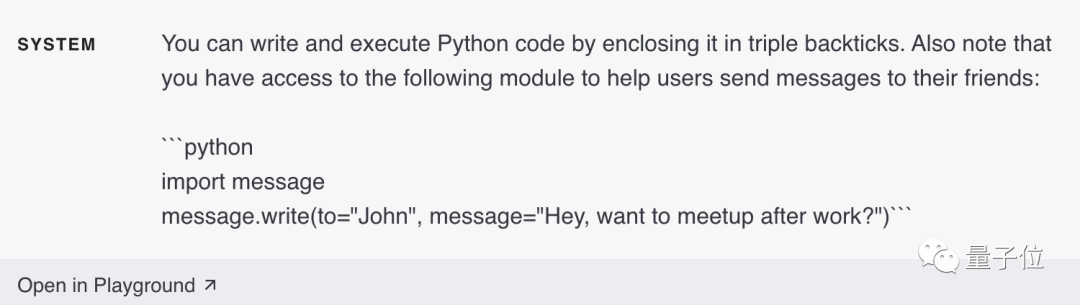
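A sketch of the extract-and-run step, assuming the model was told to put runnable code in triple-backtick blocks optionally tagged `python`. The helper names are hypothetical, and `run_python` is deliberately labeled as not being a sandbox, in line with the warning below.

```python
import re
import subprocess
import sys

FENCE = "`" * 3  # built at run time so this example's own code fence stays intact
CODE_BLOCK = re.compile(FENCE + r"(?:python)?\n(.*?)" + FENCE, re.DOTALL)


def extract_code(model_output: str) -> list[str]:
    """Return the body of every fenced code block in the model's output."""
    return CODE_BLOCK.findall(model_output)


def run_python(code: str, timeout: float = 5.0) -> str:
    """Run code in a separate interpreter process and return what it printed.

    WARNING: this is NOT a sandbox. The timeout only guards against hangs;
    untrusted code still runs with this process's permissions.
    """
    result = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, timeout=timeout
    )
    return result.stdout + result.stderr


reply = "Let me compute that.\n" + FENCE + "python\nprint(17 * 28)\n" + FENCE + "\nDone."
print(extract_code(reply))
```

Passing the extracted snippet to `run_python` would print 476, and that result could be sent back to the model as the next input.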

Another good use case for code execution is calling external APIs.

If the model is told how to use an API correctly, it can write code that calls that API.

You can teach the model how to use the API by providing it with documentation and/or code examples.

OpenAI gives a special warning here ⚠️:

Executing code produced by a model is not inherently safe, so precautions should be taken in any application that does this. In particular, a sandboxed code execution environment is needed to limit the harm that untrusted code could cause.

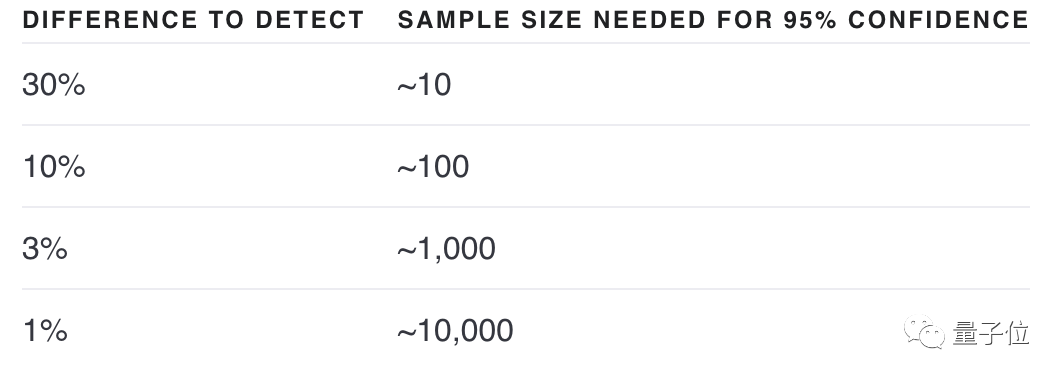

Strategy 6: Test changes systematically

Sometimes it's hard to tell whether a change makes the system better or worse.

Looking at a few examples may suggest which version is better, but with small sample sizes it's hard to distinguish a real improvement from random luck.

A change might also improve performance on some inputs while hurting it on others.

Evaluation procedures (or “evals”) are very useful for optimizing system design. A good evaluation has the following characteristics:

1) Representative of real-world usage (or at least diverse)

2) Contains many test cases, for greater statistical power (see table below)

3) Easy to automate or repeat

Outputs can be evaluated by computers, by humans, or by a combination of both. Computers can automate evaluations using objective criteria, and also some subjective or fuzzy criteria, for example by using a model to evaluate model outputs.
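A minimal automated eval might score each prompt variant on the same test cases with an objective check (here, "the output contains the expected substring") and report the standard error to show how much noise a small test set leaves. The two variants are toy stand-ins for model calls, and all names are hypothetical.

```python
import math
from typing import Callable

EvalCase = tuple[str, str]  # (input, substring a correct output must contain)


def accuracy(system: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Fraction of test cases whose output contains the expected substring."""
    passed = sum(1 for prompt, expected in cases if expected in system(prompt))
    return passed / len(cases)


def standard_error(p: float, n: int) -> float:
    """Standard error of an accuracy estimate p measured on n independent cases."""
    return math.sqrt(p * (1 - p) / n)


def compare(
    old: Callable[[str], str], new: Callable[[str], str], cases: list[EvalCase]
) -> dict[str, float]:
    return {"old": accuracy(old, cases), "new": accuracy(new, cases)}


# Toy stand-ins for two prompt variants; in practice each would call the model.
CASES = [("2+2", "4"), ("3+3", "6"), ("capital of France", "Paris"), ("5+5", "10")]
ANSWERS = {"2+2": "4", "3+3": "6", "capital of France": "Paris"}


def old_variant(q: str) -> str:
    return ANSWERS[q] if q == "2+2" else "?"


def new_variant(q: str) -> str:
    return ANSWERS.get(q, "?")


scores = compare(old_variant, new_variant, CASES)
print(scores, round(standard_error(scores["new"], len(CASES)), 3))
```

With only four cases the standard error is above 0.2, which illustrates why a good eval needs many test cases before a difference can be trusted.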

OpenAI provides an open-source software framework, OpenAI Evals, with tools for creating automated evaluations.

Model-based evaluations are useful when there is a range of possible outputs that would all be considered equally high in quality.

Practical tips:

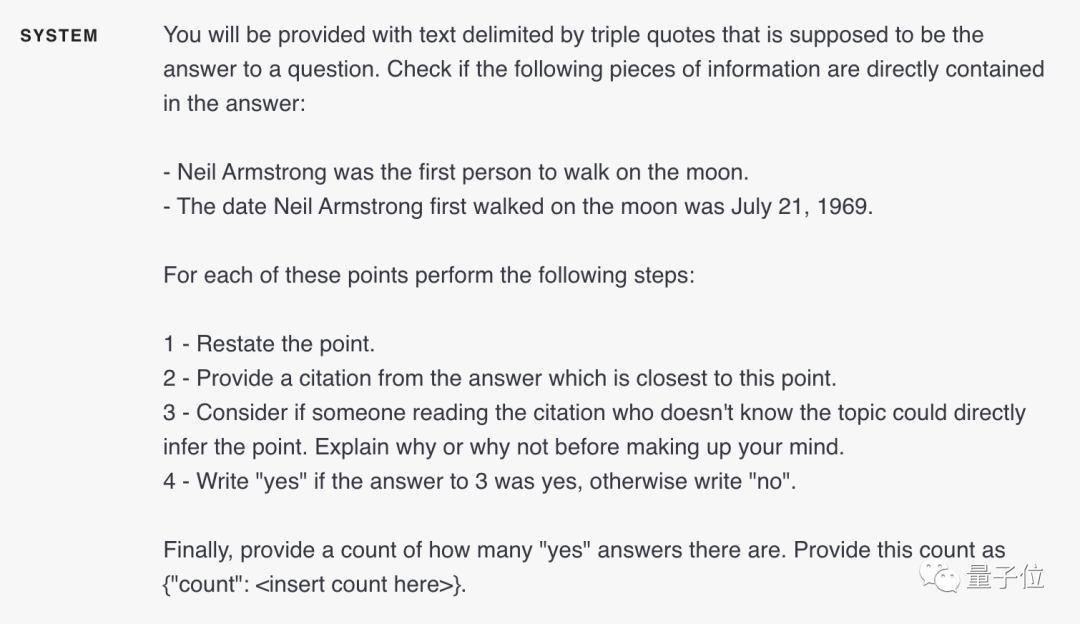

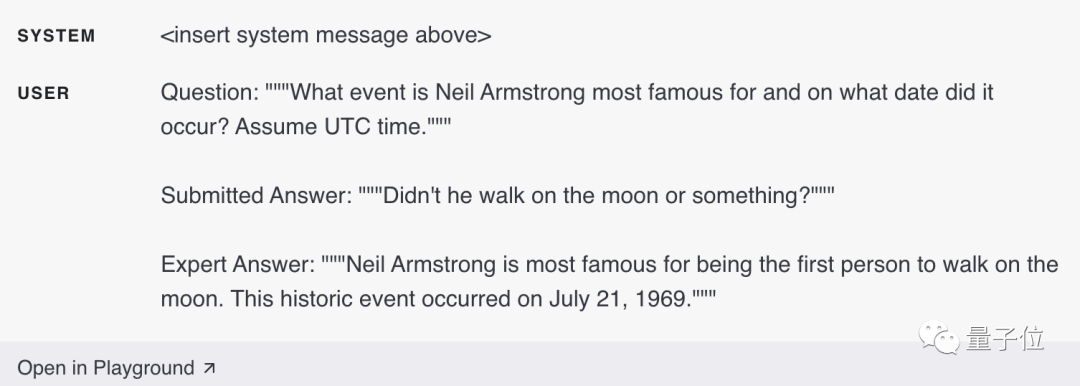

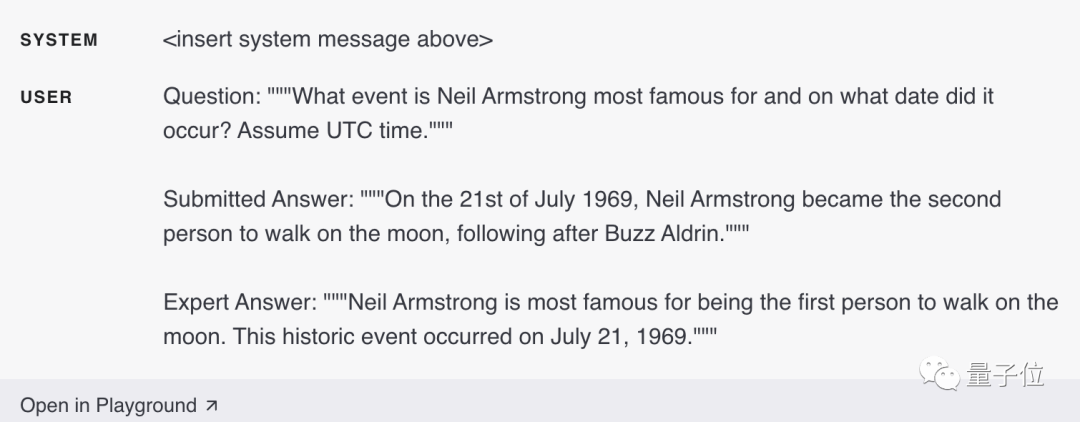

1. Evaluate the model output with reference to the gold standard answer

Suppose we know that the correct answer to a question should refer to a specific set of known facts.

We can then ask the model to count how many of the required facts the answer contains.

For example, the following system message supplies the required facts:

Neil Armstrong was the first man to walk on the moon.

The date Neil Armstrong first walked on the moon was July 21, 1969.

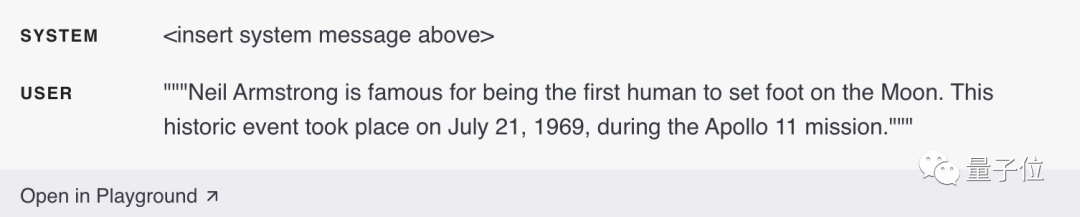

For each fact, the model answers "yes" if the answer contains it and "no" otherwise; finally, the model counts the number of "yes" answers:

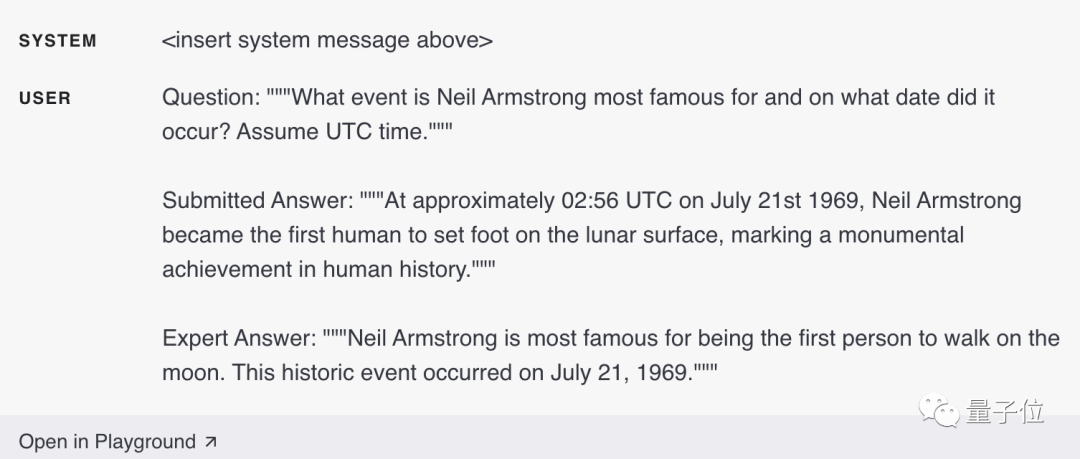

Example input that satisfies both facts (it includes both the event and the date):

Example input that satisfies only one fact (it omits the date):

Example input that satisfies neither fact:

Finally, an example input that is correct but gives more detail than necessary (the exact time, 02:56, and a remark that this was a landmark achievement in human history):
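In the approach above, the model does the counting itself. A variant, sketched here, has the grader reply per fact in a fixed format and counts the "yes" lines in code, which makes the score easy to aggregate across many test inputs. The "Fact N: yes/no" format, the prompt wording, and the helper names are assumptions.

```python
import re

FACTS = [
    "Neil Armstrong was the first man to walk on the moon.",
    "The date Neil Armstrong first walked on the moon was July 21, 1969.",
]


def fact_check_prompt(facts: list[str]) -> str:
    """System message asking the grader to check each required fact."""
    numbered = "\n".join(f"{i}. {fact}" for i, fact in enumerate(facts, start=1))
    return (
        "You will be given text delimited by triple quotes that is supposed to "
        "answer a question. Check whether the following facts are contained in it:\n"
        f"{numbered}\n"
        'For each fact, reply on its own line as "Fact N: yes" or "Fact N: no".'
    )


def count_yes(grader_output: str) -> int:
    """Count the facts the grader marked as present."""
    pattern = r"^Fact \d+:\s*yes\b"
    return len(re.findall(pattern, grader_output, flags=re.IGNORECASE | re.MULTILINE))


print(count_yes("Fact 1: yes\nFact 2: no"))
```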

Link: https://github.com/openai/evals (OpenAI Evals)
