Nature issues AIGC ban! Submissions that use AI for visual content will not be accepted

Nature, one of the most authoritative scientific journals, has recently made its position clear:

It prohibits images and videos created with generative artificial intelligence (AIGC)!

This means that, apart from articles specifically about AI, any work Nature accepts must contain no visual content generated or enhanced by AIGC.

Behind this "no" stand a few weighty words:

Integrity, consent, privacy, and intellectual property protection

Some netizens commented:

"This is really a new step in our ongoing re-examination of photography's 'truth claim'.

Digital photography and Photoshop both changed our relationship with media, and AIGC may do so again."

The popularity of ChatGPT has pushed AIGC into the mainstream, and every industry is racing to explore its potential. Yet controversy persists over whether AIGC should be used to produce visual content in science, art, publishing, and other fields.

These concerns are closely tied to the surge in infringement caused by unregulated use of AIGC over the past six months.

As early as January this year, three artists filed a class action lawsuit in the U.S. District Court for the Northern District of California against three companies offering commercial AIGC applications, including Stability AI, accusing Stable Diffusion of copyright infringement. Similar cases are common.

Last month, Douyin, currently one of the most popular short-video social platforms, released 11 platform rules and industry initiatives:

Creators, livestreamers, users, merchants, advertisers, and other platform participants must comply with these 11 rules when using generative AI technology on Douyin.

They include prominently labeling AIGC-generated content and prohibiting the use of AIGC to create and publish infringing content, as well as content that contradicts scientific common sense, commits fraud, or spreads rumors.

Nature, with its 153-year history, has a simple answer for AIGC: no.

Why is Nature banning AIGC?

As generative AI tools such as ChatGPT and Midjourney spread widely and their capabilities grew rapidly, Nature could no longer sit still.

The issue was the subject of months of intense discussion and consultation.

The final result:

Apart from articles specifically about AI, Nature will not publish, at least for the foreseeable future, any content in which photographs, videos, or illustrations have been created wholly or partly using AIGC.

Nature's refusal to allow AIGC in visual content boils down to one thing:

Integrity.

Whether for science or for art, the publishing process rests on a shared commitment to integrity.

That commitment includes transparency.

Researchers, editors, and publishers share the responsibility of knowing the sources of data and images so that they can be verified as accurate and authentic. Existing AIGC tools cannot support this.

They provide no access to their sources, so such verification is impossible.

Another major problem is attribution.

When existing work is used or cited, it must be attributed accurately; this is a core principle of scientific and artistic publishing and cannot be ignored.

Content generated by AIGC tools cannot meet this standard, because its provenance and ownership cannot be clearly established.

Consent and permission must also be considered.

Where intellectual property is involved, consent and permission must be obtained, and here again AIGC falls short.

AIGC systems are trained on images whose sources are not identified, and copyrighted works are often used for training without permission. Privacy can also be violated, for example when someone's photos or videos are used without their consent.

Beyond privacy concerns, such "deepfake" content can also easily accelerate the spread of misinformation.

One More Thing

Although Nature does not accept visual content generated by AIGC, it does allow text prepared with AIGC assistance, provided certain conditions are met:

The use of large language model (LLM) tools must be documented in the methods or acknowledgments section of the paper, and we expect authors to provide the sources of all data, including data generated with AI assistance. Furthermore, no LLM tool will be accepted as an author of a paper.

Nature says the policy is meant to protect the fruits of creative work. The world is on the brink of an AI revolution that holds great promise but is also rapidly upending long-established traditions in science, the arts, publishing, and beyond. Careless use of AI could unravel systems built over centuries to protect scientific integrity and to shield content creators from exploitation.

What do you think?

References:

[1] https://www.nature.com/articles/d41586-023-01546-4

[2] https://arstechnica.com/information-technology/2023/06/nature-bans-ai-generated-art-from-its-153-year-old-science-journal/

The above is the detailed content of Nature issues AIGC ban! Submissions that use AI for visual content will not be accepted. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

Unifying characters and changing scenes, PixVerse, a video generation artifact, has been played out by netizens, and its super consistency has become a 'killer move'

Apr 01, 2024 pm 02:11 PM

Unifying characters and changing scenes, PixVerse, a video generation artifact, has been played out by netizens, and its super consistency has become a 'killer move'

Apr 01, 2024 pm 02:11 PM

Another double click is the debut of a new feature. Have you ever wanted to change the background of a character in a picture, but the AI always produces the effect of "the object is neither the person nor the object". Even in mature generation tools such as Midjourney and DALL・E, some prompt skills are required to maintain character consistency, otherwise the characters will change around and you will not achieve the results you want. However, this time it’s your chance. The new "Character-Video" function of the AIGC tool PixVerse can help you achieve all this. Not only that, it can generate dynamic videos to make your characters more vivid. Enter a picture and you will be able to get the corresponding dynamic video results. On the basis of maintaining the consistency of the characters, the rich background elements and character dynamics allow the generated results to be

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

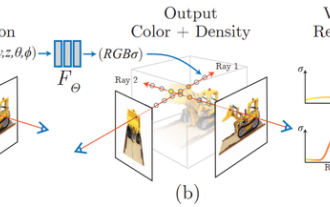

What is NeRF? Is NeRF-based 3D reconstruction voxel-based?

Oct 16, 2023 am 11:33 AM

What is NeRF? Is NeRF-based 3D reconstruction voxel-based?

Oct 16, 2023 am 11:33 AM

1 Introduction Neural Radiation Fields (NeRF) are a fairly new paradigm in the field of deep learning and computer vision. This technology was introduced in the ECCV2020 paper "NeRF: Representing Scenes as Neural Radiation Fields for View Synthesis" (which won the Best Paper Award) and has since become extremely popular, with nearly 800 citations to date [1 ]. The approach marks a sea change in the traditional way machine learning processes 3D data. Neural radiation field scene representation and differentiable rendering process: composite images by sampling 5D coordinates (position and viewing direction) along camera rays; feed these positions into an MLP to produce color and volumetric densities; and composite these values using volumetric rendering techniques image; the rendering function is differentiable, so it can be passed

Xiaomi Photo Album AIGC editing function officially launched: supports intelligent image expansion and magic elimination Pro

Mar 14, 2024 pm 10:22 PM

Xiaomi Photo Album AIGC editing function officially launched: supports intelligent image expansion and magic elimination Pro

Mar 14, 2024 pm 10:22 PM

According to news on March 14, Xiaomi officially announced today that the AIGC editing function of Xiaomi Photo Album is officially launched on Xiaomi 14 Ultra mobile phones, and will be fully launched on Xiaomi 14, Xiaomi 14 Pro and Redmi K70 series mobile phones within this month. The AI large model brings two new functions to Xiaomi Photo Album: Intelligent Image Expansion and Magic Elimination Pro. AI smart image expansion supports the expansion and automatic composition of poorly composed pictures. The operation method is: open the photo album to edit - enter cropping and rotation - click smart image expansion. Magic Elimination Pro can seamlessly eliminate passers-by in tourist photos. The method of use is: open the photo album to edit - enter Magic Elimination - click Pro in the upper right corner. At present, Xiaomi 14Ultra machine has launched intelligent image expansion and magic elimination Pro functions.

ChatGPT nemesis, introduces five free and easy-to-use AIGC detection tools

May 22, 2023 pm 02:38 PM

ChatGPT nemesis, introduces five free and easy-to-use AIGC detection tools

May 22, 2023 pm 02:38 PM

Introduction After the launch of ChatGPT, it was like Pandora's box was opened. We are now observing a technological shift in many ways of working. People are using ChatGPT to create websites, apps, and even write novels. With the hype and introduction of AI-generating tools, we have also seen an increase in bad actors. If you follow the latest news, you must have heard that ChatGPT has passed the Wharton MBA exam. To date, ChatGPT has passed exams covering fields ranging from medicine to law degrees. Beyond exams, students are using it to submit assignments, writers are submitting generative content, and researchers can produce high-quality papers by simply typing in prompts. To combat the abuse of generative content

AIGC innovates customer service, and Weiyin builds a '1+5' generative AI intelligent product matrix

Sep 15, 2023 am 11:57 AM

AIGC innovates customer service, and Weiyin builds a '1+5' generative AI intelligent product matrix

Sep 15, 2023 am 11:57 AM

Artificial intelligence technology, which consists of natural language processing, speech recognition, speech synthesis, machine learning and other technologies, has been widely recognized in various industries. Being at the forefront of AI applications, starting from the end of 2022, Weiyin has continued to witness the surprises brought by AIGC technology, and is also fortunate to participate in this technology wave that covers the world. After training, testing, tuning and application, Weiyin combined its rich customer service industry experience with powerful large model capabilities to develop a generative AI customer service robot suitable for both the agent side and the business side. At the same time, Weiyin also connected the underlying capabilities with Weiyin Vision series of intelligent products, ultimately forming a "1+5" Weiyin generative AI intelligent product matrix. Among them, "1" is the large model service platform for Weiyin's independent training.

The marketing effect has been greatly improved, this is how AIGC video creation should be used

Jun 25, 2024 am 12:01 AM

The marketing effect has been greatly improved, this is how AIGC video creation should be used

Jun 25, 2024 am 12:01 AM

After more than a year of development, AIGC has gradually moved from text dialogue and picture generation to video generation. Looking back four months ago, the birth of Sora caused a reshuffle in the video generation track and vigorously promoted the scope and depth of AIGC's application in the field of video creation. In an era when everyone is talking about large models, on the one hand we are surprised by the visual shock brought by video generation, on the other hand we are faced with the difficulty of implementation. It is true that large models are still in a running-in period from technology research and development to application practice, and they still need to be tuned based on actual business scenarios, but the distance between ideal and reality is gradually being narrowed. Marketing, as an important implementation scenario for artificial intelligence technology, has become a direction that many companies and practitioners want to make breakthroughs. Once you master the appropriate methods, the creative process of marketing videos will be

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

A purely visual annotation solution mainly uses vision plus some data from GPS, IMU and wheel speed sensors for dynamic annotation. Of course, for mass production scenarios, it doesn’t have to be pure vision. Some mass-produced vehicles will have sensors like solid-state radar (AT128). If we create a data closed loop from the perspective of mass production and use all these sensors, we can effectively solve the problem of labeling dynamic objects. But there is no solid-state radar in our plan. Therefore, we will introduce this most common mass production labeling solution. The core of a purely visual annotation solution lies in high-precision pose reconstruction. We use the pose reconstruction scheme of Structure from Motion (SFM) to ensure reconstruction accuracy. But pass