Technology peripherals

Technology peripherals

AI

AI

Prompt unlocks speech language model generation capabilities, and SpeechGen implements speech translation and patching multiple tasks.

Prompt unlocks speech language model generation capabilities, and SpeechGen implements speech translation and patching multiple tasks.

Prompt unlocks speech language model generation capabilities, and SpeechGen implements speech translation and patching multiple tasks.

- Paper link: https://arxiv.org/pdf/2306.02207.pdf

- Demo page: https://ga642381.github.io/SpeechPrompt/speechgen.html

- Code: https://github.com /ga642381/SpeechGen

Large language models (LLMs) are used in The aspect of artificial intelligence generated content (AIGC) has attracted considerable attention, especially with the emergence of ChatGPT.

However, how to process continuous speech with large language models remains an unsolved challenge, which hinders the application of large language models in speech generation. Because speech signals contain rich information, such as speaker and emotion, beyond pure text data, speech-based language models (speech LM) continue to emerge.

Although speech language models are still in their early stages compared to text-based language models, they have great potential because speech data contains richer information than text. It's full of expectations.

Researchers are actively exploring the potential of the prompt paradigm to unleash the power of pre-trained language models. This prompt guides the pre-trained language model to perform specific downstream tasks by fine-tuning a small number of parameters. This technique is popular in the NLP field because of its efficiency and effectiveness. In the field of speech processing, SpeechPrompt has demonstrated significant improvements in parameter efficiency and achieved competitive performance in various speech classification tasks.

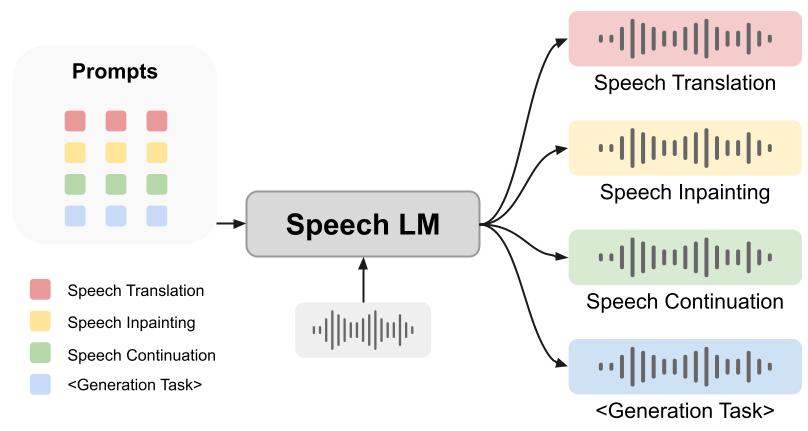

However, whether hints can help speech language models complete the generation task is still an open question. In this paper, we propose an innovative unified framework: SpeechGen, aiming to unleash the potential of speech language models for generation tasks. As shown in the figure below, a piece of speech and a specific prompt (prompt) are fed to speech LM as input, and speech LM can perform specific tasks. For example, if the red prompt is used as input, speech LM can perform the task of speech translation.

The framework we propose has the following advantages:

1. Textless: We The framework and the speech language model it relies on are independent of text data and have immeasurable value. After all, the process of getting paired text with speech is time-consuming and cumbersome, and in some languages the right text isn't even possible. The text-free feature allows our powerful speech generation capabilities to cover various language needs, benefiting all mankind.

2. Versatility: The framework we developed is extremely versatile and can be applied to a variety of speech generation tasks. The experiments in the paper use speech translation, speech restoration, and speech continuity as examples.

3. Easy to follow: The framework we propose provides a general solution for various speech generation tasks, making it easy to design downstream models and loss functions.

4. Transferability: Our framework is not only easily adaptable to more advanced speech language models in the future, but also contains huge potential to further improve efficiency and effectiveness. What is particularly exciting is that with the advent of advanced speech language models, our framework will usher in even more powerful developments.

5. Affordability: Our framework is carefully designed to only require training a small number of parameters instead of an entire huge language model. This greatly reduces the computational burden and allows the training process to be performed on a GTX 2080 GPU. University laboratories can also afford such computational overhead.

SpeechGen Introduction

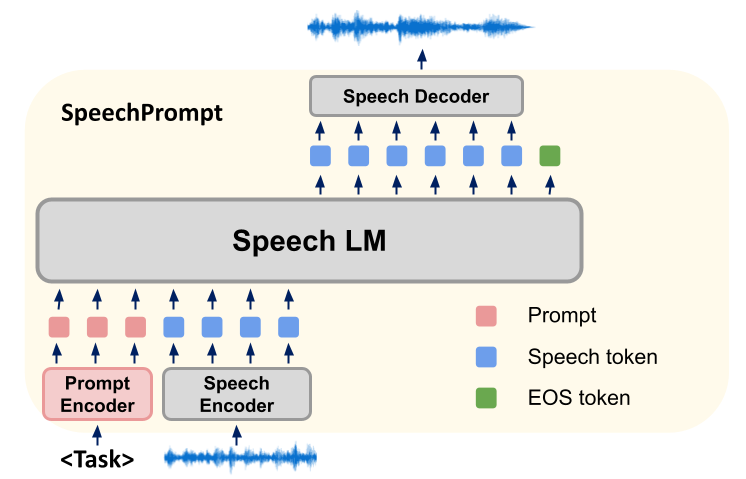

Our research method is to build a new framework SpeechGen, which mainly uses speech language models (Spoken Language Models, SLMs) to conduct various Fine-tuning for downstream speech generation tasks. During training, the parameters of SLMs are kept constant and our method focuses on learning task-specific prompt vectors. SLMs efficiently generate the output required for a specific speech generation task by simultaneously conditioning cue vectors and input units. These discrete unit outputs are then input into a unit-based speech synthesizer, which generates corresponding waveforms.

Our SpeechGen framework consists of three elements: speech encoder, SLM and speech decoder (Speech Decoder).

First, the speech encoder takes a waveform as input and converts it into a sequence of units derived from a limited vocabulary. To shorten the sequence length, repeated consecutive units are removed to produce a compressed sequence of units. The SLM then acts as a language model for the sequence of units, optimizing the likelihood by predicting the previous unit and subsequent units of the sequence of units. We make prompt adjustments to the SLM to guide it to generate appropriate units for the task. Finally, the tokens generated by the SLM are processed by a speech decoder, converting them back into waveforms. In our cue tuning strategy, cue vectors are inserted at the beginning of the input sequence, which guides the direction of SLMs during generation. The exact number of hints inserted depends on the architecture of the SLMs. In a sequence-to-sequence model, cues are added to both the encoder input and the decoder input, but in an encoder-only or decoder-only architecture, only a hint is added in front of the input sequence.

In sequence-to-sequence SLMs (such as mBART), we adopt self-supervised learning models (such as HuBERT) to process the input and target speech. Doing so generates discrete units for the input and corresponding discrete units for the target. We add hint vectors in front of both encoder and decoder inputs to construct the input sequence. In addition, we further enhance the guidance ability of cues by replacing key-value pairs in the attention mechanism.

In model training, we use cross-entropy loss as the objective function for all generation tasks, and calculate the loss by comparing the model's prediction results with the target discrete unit labels. In this process, the cue vector is the only parameter in the model that needs to be trained, while the parameters of SLMs remain unchanged during the training process, which ensures the consistency of model behavior. By inserting cue vectors, we guide SLMs to extract task-specific information from the input and increase the likelihood of producing output consistent with a specific speech generation task. This approach allows us to fine-tune and adjust the behavior of SLMs without modifying their underlying parameters.

In general, our research method is based on a new framework SpeechGen, which guides the generation process of the model by training prompt vectors, and enables it to effectively generate specific speech Generate the output of the task.

Experiment

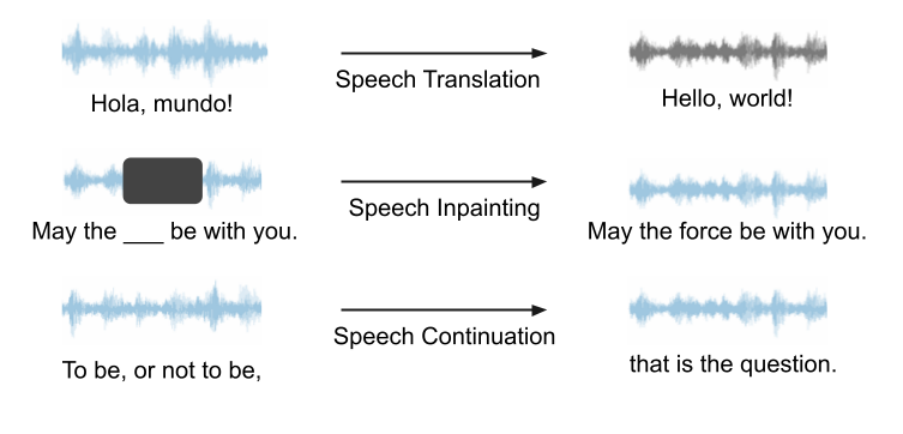

Our framework can be used for any speech LM and various generation tasks, and has great potential. In our experiments, since VALL-E and AudioLM are not open source, we choose to use Unit mBART as the speech LM for case study. We use speech translation, speech inpainting, and speech continuation as examples to demonstrate the capabilities of our framework. A schematic diagram of these three tasks is shown below. All tasks are voice input, voice output, no text help required.

Voice Translation

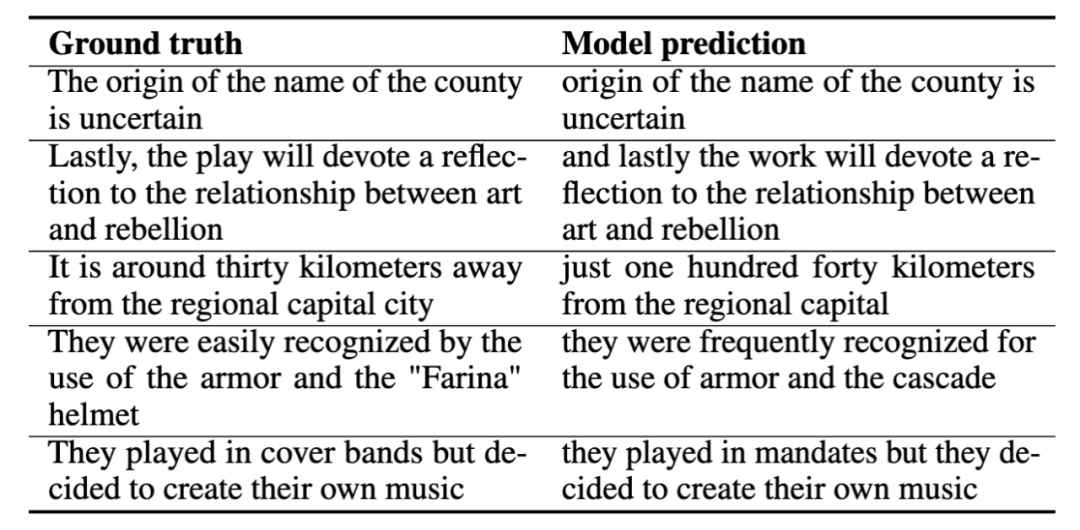

We are training When doing speech translation, the Spanish-to-English task is used. We input Spanish speech into the model and hope that the model will produce English speech without text help in the whole process. Below are several examples of speech translation, where we show the correct answer (ground truth) and the model prediction (model prediction). These demonstration examples show that the model's predictions capture the core meaning of the correct answer.

Speech inpainting

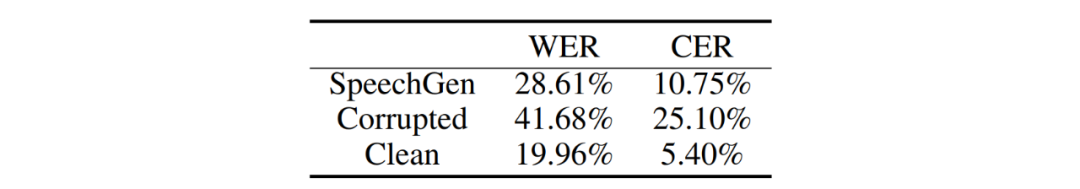

In our experiments with speech inpainting, we specifically Audio clips longer than 2.5 seconds are selected as the target speech for subsequent processing, and a speech clip with a duration ranging from 0.8 to 1.2 seconds is selected through a random selection process. We then mask the selected segments to simulate missing or damaged parts in a speech inpainting task. We used word error rate (WER) and character error rate (CER) as metrics to assess the degree of repair of damaged segments.

Comparative analysis of the output generated by SpeechGen and damaged speech, our model can significantly reconstruct spoken vocabulary, reducing WER from 41.68% to 28.61% and CER from 25.10% reduced to 10.75%, as shown in the table below. This means that our proposed method can significantly improve the ability of speech reconstruction, ultimately promoting the accuracy and understandability of speech output.

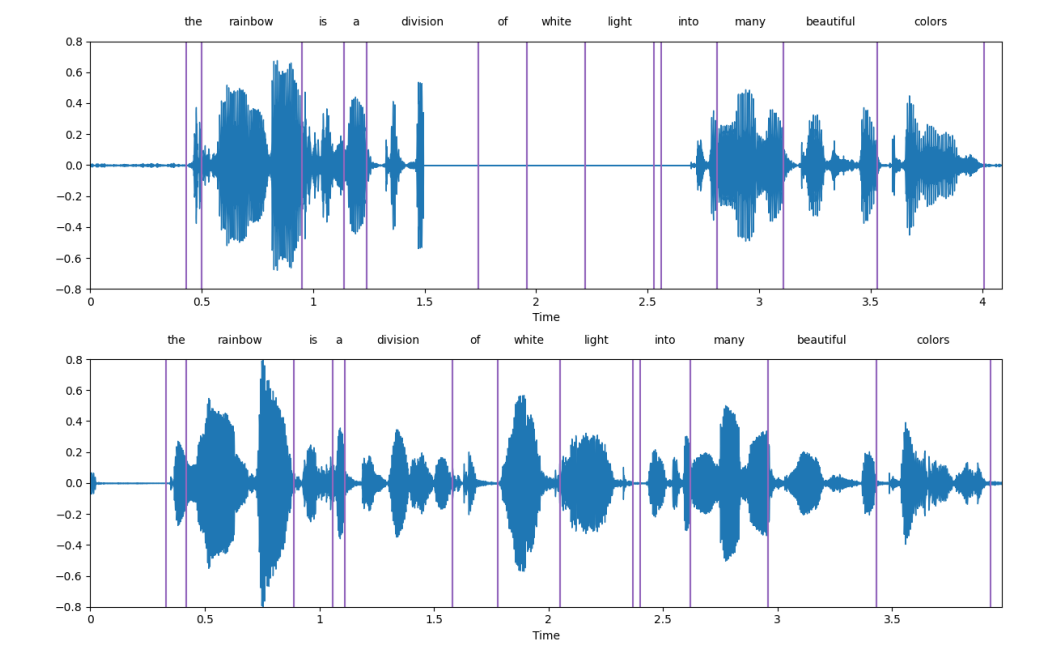

The following picture is a display example. The upper sub-picture is the damaged speech, and the lower sub-picture is The speech generated by SpeechGen can be seen that SpeechGen repairs the damaged speech very well.

Voice Continuous

We will pass LJSpeech demonstrates practical applications for speech continuous tasks. During the training prompt (prompt), our strategy is to let the model only see the seed segment of the fragment. This seed segment occupies a proportion of the total length of the speech. We call this the condition ratio (condition ratio, r), and let The model continues to generate subsequent speech.

The following are some examples. The black text represents the seed segment, and the red text is the sentence generated by SpeechGen (the text here is first obtained through speech recognition. During training And during the inference process, the model completely performs speech-to-speech tasks and does not receive any text information at all). Different condition ratios enable SpeechGen to generate sentences of varying lengths to achieve coherence and complete a complete sentence. From a quality perspective, the generated sentences are basically syntactically consistent with the seed fragments and are semantically related. Although, the generated speech still cannot perfectly convey a complete meaning. We anticipate that this issue will be addressed in more powerful speech models in the future.

Shortcomings and Future Directions

Speech language models and speech generation are in a booming stage, and our framework provides a way to cleverly leverage powerful language models Possibility of speech generation. However, this framework still has some room for improvement, and there are many issues worthy of further study.

1. Compared with text-based language models, speech language models are still in the initial stage of development. Although the cueing framework we proposed can inspire the speech language model to do speech generation tasks, it cannot achieve excellent performance. However, with the continuous advancement of speech language models, such as the big turn from GSLM to Unit mBART, the performance of prompts has improved significantly. In particular, tasks that were previously challenging for GSLM now show better performance under Unit mBART. We expect that more advanced speech language models will emerge in the future.

2. Beyond content information: Current speech language models cannot fully capture speaker and emotional information, which brings challenges to the current speech prompt framework in effectively processing this information. challenge. To overcome this limitation, we introduce plug-and-play modules that specifically inject speaker and emotion information into the framework. Going forward, we anticipate that future speech language models will integrate and exploit information beyond these to improve performance and better handle speaker- and emotion-related aspects of speech generation tasks.

3. Possibility of prompt generation: For prompt generation, we have flexible options and can integrate various types of instructions, including text and image instructions. Imagine that we could train a neural network to take images or text as input, instead of using trained embeddings as hints like in this article. This trained network will become the hint generator, adding variety to the framework. This approach will make prompt generation more interesting and colorful.

Conclusion

In this paper we explored the use of hints to unlock the performance of speech language models across a variety of generative tasks. We propose a unified framework called SpeechGen that has only ~10M trainable parameters. Our proposed framework has several major properties, including text-freeness, versatility, efficiency, transferability, and affordability. To demonstrate the capabilities of the SpeechGen framework, we use Unit mBART as a case study and conduct experiments on three different speech generation tasks: speech translation, speech repair, and speech continuation.

When this paper was submitted to arXiv, Google proposed a more advanced speech language model-SPECTRON, which showed us that the speech language model can model speakers and Possibility of information such as emotions. This is undoubtedly exciting news. As advanced speech language models continue to be proposed, our unified framework has great potential.

The above is the detailed content of Prompt unlocks speech language model generation capabilities, and SpeechGen implements speech translation and patching multiple tasks.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

How to evaluate the cost-effectiveness of commercial support for Java frameworks

Jun 05, 2024 pm 05:25 PM

How to evaluate the cost-effectiveness of commercial support for Java frameworks

Jun 05, 2024 pm 05:25 PM

Evaluating the cost/performance of commercial support for a Java framework involves the following steps: Determine the required level of assurance and service level agreement (SLA) guarantees. The experience and expertise of the research support team. Consider additional services such as upgrades, troubleshooting, and performance optimization. Weigh business support costs against risk mitigation and increased efficiency.

How does the learning curve of PHP frameworks compare to other language frameworks?

Jun 06, 2024 pm 12:41 PM

How does the learning curve of PHP frameworks compare to other language frameworks?

Jun 06, 2024 pm 12:41 PM

The learning curve of a PHP framework depends on language proficiency, framework complexity, documentation quality, and community support. The learning curve of PHP frameworks is higher when compared to Python frameworks and lower when compared to Ruby frameworks. Compared to Java frameworks, PHP frameworks have a moderate learning curve but a shorter time to get started.

How do the lightweight options of PHP frameworks affect application performance?

Jun 06, 2024 am 10:53 AM

How do the lightweight options of PHP frameworks affect application performance?

Jun 06, 2024 am 10:53 AM

The lightweight PHP framework improves application performance through small size and low resource consumption. Its features include: small size, fast startup, low memory usage, improved response speed and throughput, and reduced resource consumption. Practical case: SlimFramework creates REST API, only 500KB, high responsiveness and high throughput

Performance comparison of Java frameworks

Jun 04, 2024 pm 03:56 PM

Performance comparison of Java frameworks

Jun 04, 2024 pm 03:56 PM

According to benchmarks, for small, high-performance applications, Quarkus (fast startup, low memory) or Micronaut (TechEmpower excellent) are ideal choices. SpringBoot is suitable for large, full-stack applications, but has slightly slower startup times and memory usage.

Golang framework documentation best practices

Jun 04, 2024 pm 05:00 PM

Golang framework documentation best practices

Jun 04, 2024 pm 05:00 PM

Writing clear and comprehensive documentation is crucial for the Golang framework. Best practices include following an established documentation style, such as Google's Go Coding Style Guide. Use a clear organizational structure, including headings, subheadings, and lists, and provide navigation. Provides comprehensive and accurate information, including getting started guides, API references, and concepts. Use code examples to illustrate concepts and usage. Keep documentation updated, track changes and document new features. Provide support and community resources such as GitHub issues and forums. Create practical examples, such as API documentation.

How to choose the best golang framework for different application scenarios

Jun 05, 2024 pm 04:05 PM

How to choose the best golang framework for different application scenarios

Jun 05, 2024 pm 04:05 PM

Choose the best Go framework based on application scenarios: consider application type, language features, performance requirements, and ecosystem. Common Go frameworks: Gin (Web application), Echo (Web service), Fiber (high throughput), gorm (ORM), fasthttp (speed). Practical case: building REST API (Fiber) and interacting with the database (gorm). Choose a framework: choose fasthttp for key performance, Gin/Echo for flexible web applications, and gorm for database interaction.

Detailed practical explanation of golang framework development: Questions and Answers

Jun 06, 2024 am 10:57 AM

Detailed practical explanation of golang framework development: Questions and Answers

Jun 06, 2024 am 10:57 AM

In Go framework development, common challenges and their solutions are: Error handling: Use the errors package for management, and use middleware to centrally handle errors. Authentication and authorization: Integrate third-party libraries and create custom middleware to check credentials. Concurrency processing: Use goroutines, mutexes, and channels to control resource access. Unit testing: Use gotest packages, mocks, and stubs for isolation, and code coverage tools to ensure sufficiency. Deployment and monitoring: Use Docker containers to package deployments, set up data backups, and track performance and errors with logging and monitoring tools.

What are the common misunderstandings in the learning process of Golang framework?

Jun 05, 2024 pm 09:59 PM

What are the common misunderstandings in the learning process of Golang framework?

Jun 05, 2024 pm 09:59 PM

There are five misunderstandings in Go framework learning: over-reliance on the framework and limited flexibility. If you don’t follow the framework conventions, the code will be difficult to maintain. Using outdated libraries can cause security and compatibility issues. Excessive use of packages obfuscates code structure. Ignoring error handling leads to unexpected behavior and crashes.