Big ChatGPT update! OpenAI gives programmers a gift package: a killer new API capability, lower prices, new models, and four times the context

ChatGPT evolved again overnight, and OpenAI launched a large number of updates in one go!

The core of the update is the API's new function calling capability. Much like plugins in the web version of ChatGPT, the API can now use external tools.

This puts the capability in developers' hands: things the ChatGPT API could not do on its own can now be handled by all kinds of third-party services.

Some consider it a killer feature, the most important update since the ChatGPT API was released.

Beyond that, every item in this ChatGPT API update is significant. Capabilities go up while prices come down:

- New model versions: gpt-4-0613 and gpt-3.5-turbo-0613

- gpt-3.5-turbo context length quadrupled, from 4k to 16k tokens

- gpt-3.5-turbo input token price reduced by 25%

- Price of the state-of-the-art embeddings model reduced by 75%

- GPT-4 API access will be opened up at scale until the waitlist is cleared

When the news reached China, some netizens saw it as a major challenge for domestic large models.

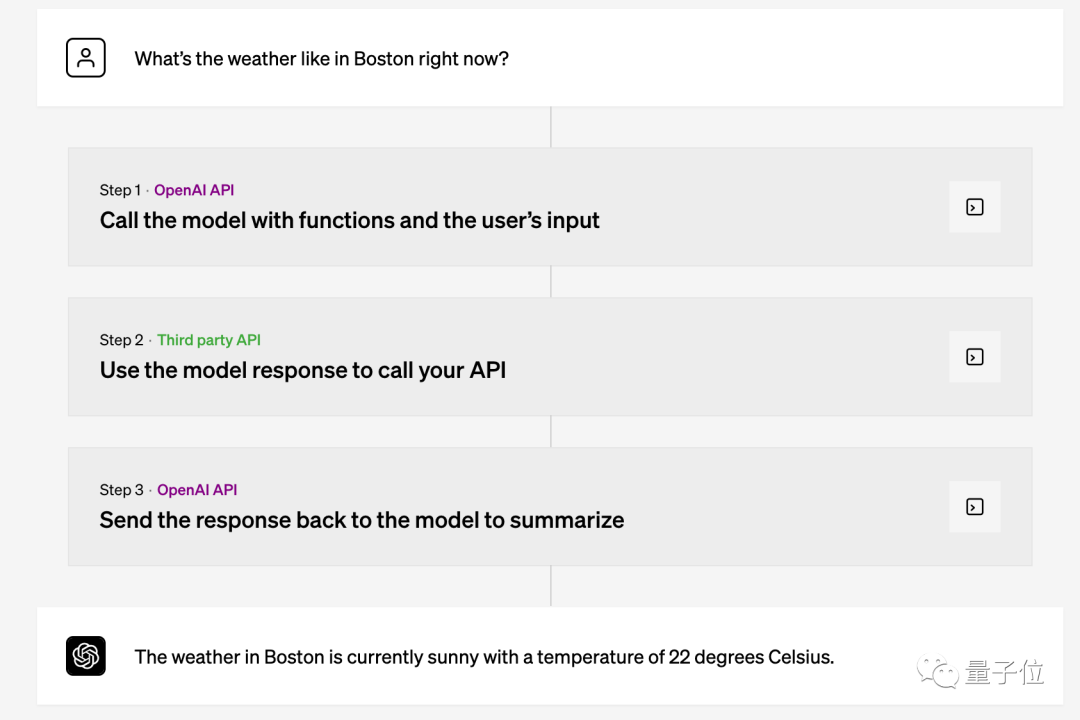

The API decides when to use the tool

According to OpenAI, function calling is supported by the new versions of both GPT-4 and GPT-3.5.

Developers only need to describe their functions to the model. The model itself decides, based on the prompt, when to call which function, the same mechanism ChatGPT uses to call plugins. Strictly speaking, the model does not execute anything: it returns the name of the function to call along with its arguments as JSON, and the developer's own code makes the actual call.
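To make the mechanism concrete, here is a minimal Python sketch, assuming the request format from OpenAI's June 2023 announcement: a `functions` list of JSON Schema descriptions plus `function_call: "auto"` (later API versions replaced these with `tools` and `tool_choice`). The `get_current_weather` function and its stubbed data are hypothetical, and the sample response is hand-written rather than real model output. No network call is made; the sketch only builds a request body and dispatches a returned function call.

```python
import json
from typing import Optional

# Hypothetical local function the model may ask us to call.
def get_current_weather(location: str, unit: str = "celsius") -> dict:
    # Stubbed data; a real app would query a weather service here.
    return {"location": location, "temperature": 22, "unit": unit}

# Function descriptions sent to the model; "parameters" is a JSON Schema object.
FUNCTIONS = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name, e.g. Boston"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    }
]

# Maps function names the model may return to local Python callables.
REGISTRY = {"get_current_weather": get_current_weather}


def build_request(user_prompt: str) -> dict:
    """Body for POST /v1/chat/completions in the June 2023 format."""
    return {
        "model": "gpt-3.5-turbo-0613",
        "messages": [{"role": "user", "content": user_prompt}],
        "functions": FUNCTIONS,
        "function_call": "auto",  # the model decides whether to call a function
    }


def dispatch(message: dict) -> Optional[dict]:
    """Run the function the assistant message asks for, or return None."""
    call = message.get("function_call")
    if call is None:
        return None  # the model answered in plain text instead
    args = json.loads(call["arguments"])  # arguments arrive as a JSON *string*
    return REGISTRY[call["name"]](**args)


# Hand-written assistant message in the shape the API returns (not real output).
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": '{"location": "Boston", "unit": "celsius"}',
    },
}
print(dispatch(sample_message))  # {'location': 'Boston', 'temperature': 22, 'unit': 'celsius'}
```

In a full round trip, the function's result would then be sent back to the model as a message with role `"function"`, so the model can phrase the final answer for the user.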

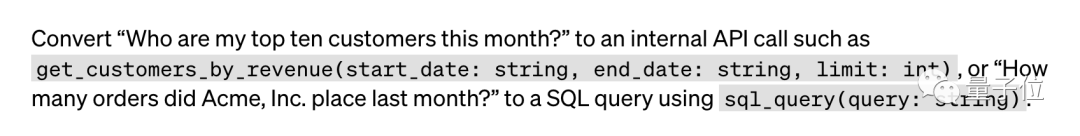

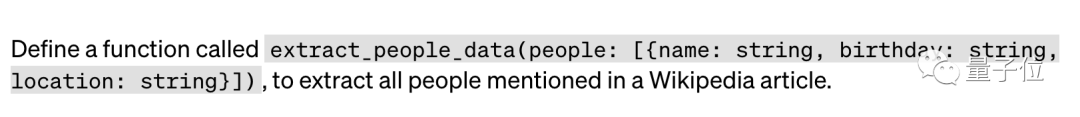

OpenAI gives three example use cases:

First, chatbots that call external APIs to take actions or answer questions, such as "Send someone an email" or "What's the weather like today?"

Second, converting natural language into API calls or database queries: a question like "How many orders were there last month?" is automatically turned into a SQL query.

Third, extracting structured data from text. For example, you only need to define the fields you want, such as "name", "birthday", and "location", and supply the text of a Wikipedia article, and all the people mentioned in it can be extracted automatically.
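The second use case can be sketched end to end with Python's built-in `sqlite3`. The `ask_database` function, the toy `orders` table, and its dates are hypothetical; the `arguments` string is hand-written to mimic what the model might return for "How many orders were there last month?" asked in June 2023, not actual model output. Because the SQL comes from a model, the sketch refuses anything that is not a `SELECT`, which is only a naive safeguard.

```python
import json
import sqlite3

# Description of a hypothetical ask_database function, sent to the model in "functions".
ASK_DATABASE = {
    "name": "ask_database",
    "description": "Run one SQLite SELECT query against the orders table and return the rows.",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "A single SQLite SELECT statement"}
        },
        "required": ["query"],
    },
}


def make_demo_db() -> sqlite3.Connection:
    # Toy in-memory data standing in for a real orders database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT)")
    conn.executemany(
        "INSERT INTO orders (created_at) VALUES (?)",
        [("2023-05-03",), ("2023-05-17",), ("2023-05-30",), ("2023-06-02",)],
    )
    return conn


def ask_database(conn: sqlite3.Connection, query: str) -> list:
    # Naive guard: only run read-only SELECT statements produced by the model.
    if not query.lstrip().upper().startswith("SELECT"):
        raise ValueError("only SELECT queries are allowed")
    return conn.execute(query).fetchall()


# Hand-written arguments mimicking a model reply (not real output).
arguments = json.dumps({
    "query": "SELECT COUNT(*) FROM orders "
             "WHERE created_at BETWEEN '2023-05-01' AND '2023-05-31'"
})

conn = make_demo_db()
print(ask_database(conn, **json.loads(arguments)))  # [(3,)]
```

Structured extraction, the third use case, follows the same pattern with a different schema, for instance an array of objects with `name`, `birthday`, and `location` properties. In the June 2023 API, setting `function_call` to `{"name": "<function name>"}` forces the model to call that particular function instead of choosing on its own.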

The new feature has been cheered by many netizens, especially developers, who say it will greatly improve their productivity.

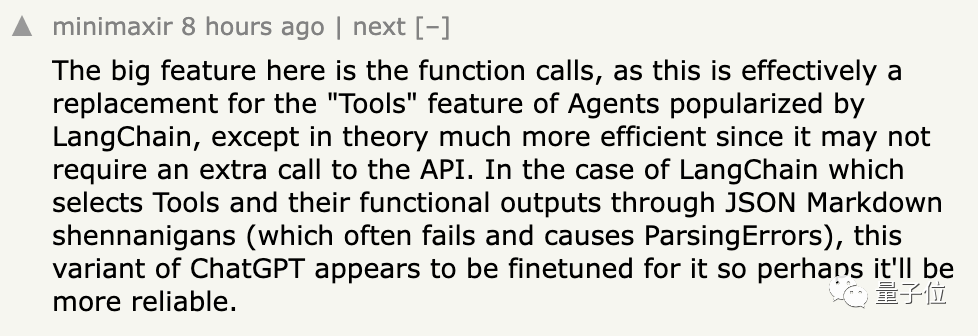

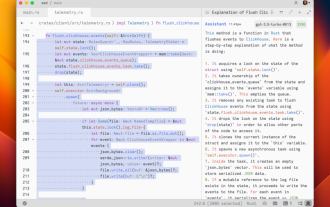

In the past, if you wanted GPT to call functions, you needed to use LangChain's tools.

……

In theory LangChain may run more efficiently, but it is less reliable than the new GPT models, which have been specially tuned for this task.

New versions, lower prices

The new model versions are now being rolled out.

The new gpt-4-0613 and gpt-3.5-turbo-0613, as well as gpt-4-32k-0613 with its extended context length, all support function calling.

gpt-3.5-turbo-16k does not support function calling, but it offers four times the context length, which means a single request can handle roughly 20 pages of text.

The old models are also being phased out.

Applications using the initial versions of gpt-3.5-turbo and gpt-4 will be automatically upgraded to the new versions on June 27.

Developers who need more time to transition can explicitly specify the old versions to keep using them, but all requests to the old versions will stop working after September 13.
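The difference between a model alias and a pinned snapshot can be sketched as a small lookup. This is an illustrative Python model of the timeline above, not OpenAI code: `gpt-3.5-turbo-0301`, `gpt-4-0314`, and `gpt-4-32k-0314` are the older snapshots developers could pin, while the 2023 dates are taken from this article and may since have been revised, so check OpenAI's deprecation page before relying on them.

```python
from datetime import date

AUTO_UPGRADE = date(2023, 6, 27)  # aliases start pointing at the 0613 snapshots
OLD_SHUTOFF = date(2023, 9, 13)   # pinned old snapshots stop working after this day

# Alias -> (snapshot served before the upgrade, snapshot served from the upgrade on).
ALIASES = {
    "gpt-3.5-turbo": ("gpt-3.5-turbo-0301", "gpt-3.5-turbo-0613"),
    "gpt-4": ("gpt-4-0314", "gpt-4-0613"),
    "gpt-4-32k": ("gpt-4-32k-0314", "gpt-4-32k-0613"),
}
OLD_SNAPSHOTS = {old for old, _ in ALIASES.values()}


def resolve_model(requested: str, on: date) -> str:
    """Return the snapshot that a request for `requested` would hit on date `on`."""
    if requested in ALIASES:
        old, new = ALIASES[requested]
        return new if on >= AUTO_UPGRADE else old
    if requested in OLD_SNAPSHOTS and on > OLD_SHUTOFF:
        raise ValueError(f"{requested} is no longer served after {OLD_SHUTOFF}")
    return requested  # any other pinned snapshot is used as-is


print(resolve_model("gpt-3.5-turbo", date(2023, 6, 20)))  # gpt-3.5-turbo-0301
print(resolve_model("gpt-3.5-turbo", date(2023, 7, 1)))   # gpt-3.5-turbo-0613
print(resolve_model("gpt-4-0314", date(2023, 8, 1)))      # gpt-4-0314 (pinned)
```

Pinning a dated snapshot trades automatic improvements for predictable behavior, which is why the old names keep working only for a limited transition window.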

With the timeline covered, let's look at pricing.

After the upgrade, OpenAI did not raise prices; it actually cut them.

First up is the most widely used model, gpt-3.5-turbo (the 4k-token version).

The price of input tokens has been cut by 25% to US$0.0015 per thousand tokens, or about 667,000 tokens per US$1.

Output tokens cost US$0.002 per thousand, or 500,000 tokens per US$1.

In terms of English text, that is roughly 700 pages per US dollar.

The price of the embeddings model has plummeted, cut by a full 75%.

It now costs just US$0.0001 per thousand tokens, or 10 million tokens per US$1.

In addition, the newly launched 16k-token version of gpt-3.5-turbo offers four times the context length of the 4k version at only twice the price.

Its input and output tokens cost US$0.003 and US$0.004 per thousand tokens, respectively.
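The arithmetic behind these figures is easy to check. The Python sketch below hard-codes the per-1,000-token prices quoted in this article (June 2023; current prices may differ) and uses `Decimal` to avoid floating-point rounding. The `request_cost` and `tokens_per_dollar` helpers are illustrative, not part of any OpenAI SDK, and the embeddings model is treated as input-only.

```python
from decimal import Decimal

# US$ per 1,000 tokens, as quoted in the article (June 2023).
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"input": Decimal("0.0015"), "output": Decimal("0.002")},
    "gpt-3.5-turbo-16k": {"input": Decimal("0.003"), "output": Decimal("0.004")},
    "embeddings": {"input": Decimal("0.0001"), "output": Decimal("0")},
}


def request_cost(model: str, input_tokens: int, output_tokens: int = 0) -> Decimal:
    """Cost in US$ of one request with the given token counts."""
    price = PRICES_PER_1K[model]
    return (price["input"] * input_tokens + price["output"] * output_tokens) / 1000


def tokens_per_dollar(price_per_1k: Decimal) -> int:
    """Whole number of tokens that US$1 buys at a given per-1k price."""
    return int(Decimal(1000) / price_per_1k)


for model, price in PRICES_PER_1K.items():
    print(model, "input tokens per US$1:", tokens_per_dollar(price["input"]))

# A 12k-token prompt with a 2k-token reply only fits the 16k model.
print(request_cost("gpt-3.5-turbo-16k", 12_000, 2_000))
```

The exact figure for 4k input tokens is 666,666 per dollar, which is where the rounded "about 667,000" comes from; the 16k prices are exactly double the 4k ones for both input and output.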

A few netizens also reported that their monthly bill dropped from 100 to just a few cents, though the exact circumstances remain unclear.

Finally, if you need it, don't forget to join the waitlist for GPT-4 API access.

OpenAI borrows from LangChain, with Microsoft following behind

Many netizens pointed out that OpenAI's new function calling is essentially a replica of LangChain's "Tools".

Perhaps OpenAI will copy more of LangChain's features in the future, such as Chains and Indexes.

LangChain is the most popular open-source development framework for large models; it ties together the capabilities of various large models so that applications can be built quickly.

The team also recently raised US$10 million in seed funding.

This OpenAI update will not directly "kill" LangChain as a startup, but some things developers previously needed LangChain for no longer require it.

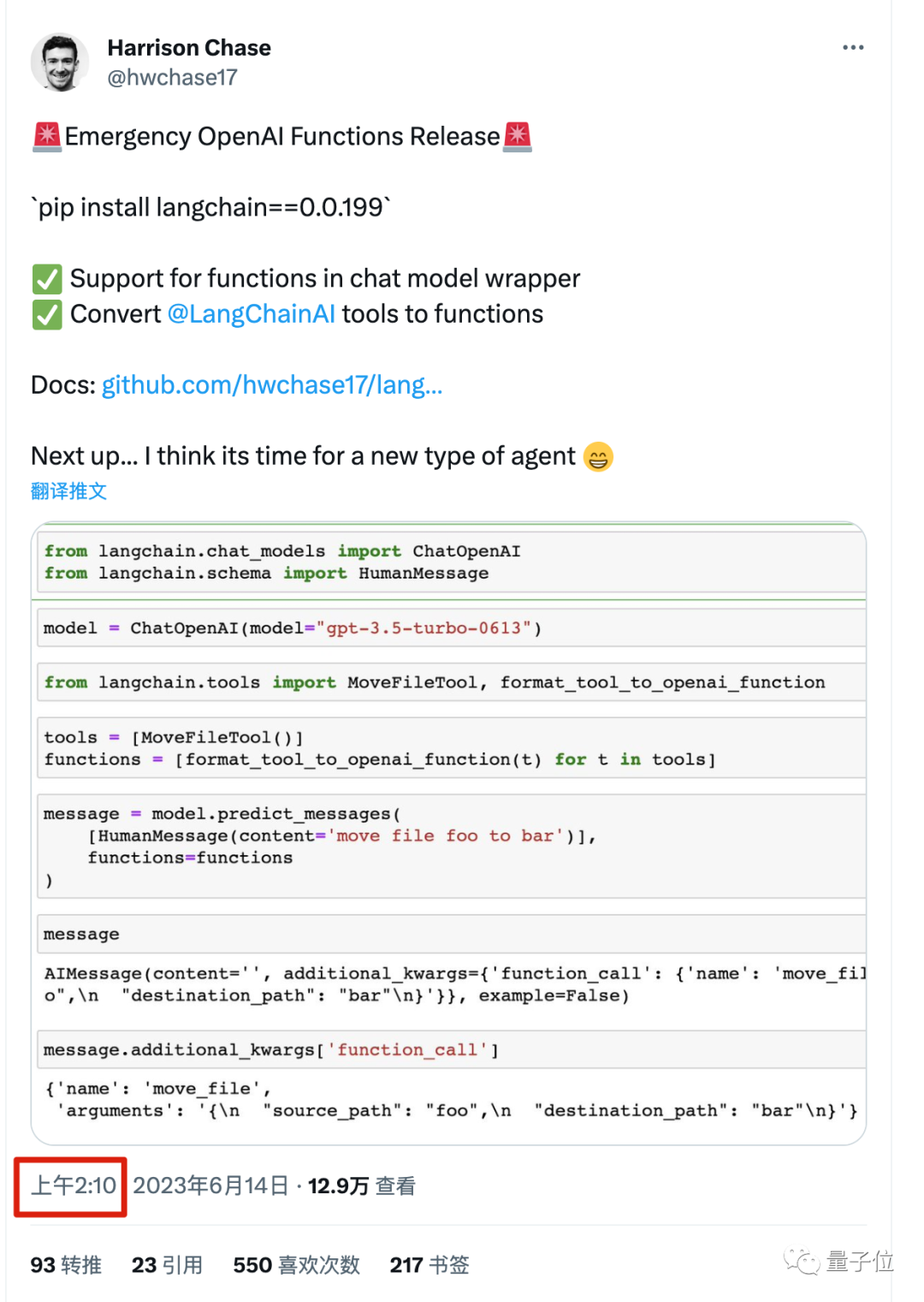

Judging by LangChain's reaction, its will to survive is strong indeed.

Within 10 minutes of OpenAI officially releasing the update, LangChain announced that it was "already working on compatibility."

A new version followed in less than an hour. Besides supporting the new official feature, it can also convert tools that developers have already written into OpenAI functions.
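Converting an existing tool into an OpenAI function essentially means turning the tool's name, description, and argument types into the JSON Schema description the API expects. The Python sketch below does this for a plain function using only the standard library's `inspect` and `typing` modules. It is a simplified illustration, not LangChain's implementation; the type mapping (unannotated or unsupported types fall back to `"string"`) and the `search_wikipedia` tool are assumptions made for the example.

```python
import inspect
import json
from typing import get_type_hints

# Simplified mapping from Python annotations to JSON Schema types (an assumption).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def to_openai_function(fn) -> dict:
    """Build a function description from a Python function's signature and docstring."""
    hints = get_type_hints(fn)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are required
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


# A hypothetical existing "tool" written as a plain Python function.
def search_wikipedia(query: str, max_results: int = 3) -> list:
    """Search Wikipedia and return matching article titles."""
    return []  # stub: a real tool would call a search API here


print(json.dumps(to_openai_function(search_wikipedia), indent=2))
```

The resulting dictionary can be placed directly in the `functions` list of a chat request, which is why existing tool code carries over with little effort.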

Besides marveling at the absurdly fast pace of development, netizens also raised an unavoidable question:

What do you do if OpenAI wipes out your startup?

OpenAI CEO Sam Altman addressed this just recently.

At a meetup hosted by Humanloop at the end of May, Altman said:

Apart from consumer-facing applications such as ChatGPT, OpenAI will try to avoid competing with its customers.

It now appears that developer tools are not covered by that promise.

In addition to startups that compete with OpenAI, there is another entity that cannot be ignored:

Microsoft, OpenAI's largest backer, also offers OpenAI API services through its Azure cloud.

Recently, some developers reported significantly better performance after switching from the official OpenAI API to the Microsoft Azure version.

Specifically:

- median latency reduced from 15 seconds to 3 seconds

- 95th percentile latency reduced from 60 seconds to 15 seconds

- average throughput tripled, from 8 to 24 tokens per second
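To run a similar comparison on your own traffic, the three metrics can be computed from per-request measurements. The Python sketch below uses the nearest-rank definition of the 95th percentile (one of several common definitions) and treats average throughput as the mean of per-request tokens per second. The sample numbers are made up for illustration and do not reproduce the developers' measurements.

```python
import math
import statistics


def percentile(samples: list, pct: float) -> float:
    """Nearest-rank percentile: the smallest value covering pct% of the samples."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]


def summarize(latencies_s: list, tokens: list) -> dict:
    """Median and p95 latency plus mean per-request throughput."""
    rates = [t / s for t, s in zip(tokens, latencies_s)]
    return {
        "median_s": statistics.median(latencies_s),
        "p95_s": percentile(latencies_s, 95),
        "mean_tokens_per_s": statistics.mean(rates),
    }


# Made-up measurements for five requests (seconds, tokens generated).
latencies = [2.0, 3.0, 3.0, 4.0, 10.0]
tokens = [40, 60, 66, 80, 150]
print(summarize(latencies, tokens))
```

The p95 figure is dominated by the slowest requests, which is why it can shrink by a different factor than the median when switching providers.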

With some Azure discounts factored in, it is even cheaper than before.

However, Microsoft Azure generally rolls out updates several weeks later than OpenAI.

So: use OpenAI for fast iteration during development, then move to Microsoft Azure for large-scale deployment. Got it?
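In code, that split can be a configuration switch between two endpoints. The Python sketch below only builds the URL and headers, without sending anything. It assumes the public OpenAI endpoint `https://api.openai.com/v1/chat/completions` with a Bearer token, and Azure OpenAI's deployment-based URL with an `api-key` header and `api-version` query parameter. The resource name, deployment name, API version, and keys are placeholders, so check the Azure OpenAI REST reference for the values that apply to you.

```python
from dataclasses import dataclass


@dataclass
class ChatEndpoint:
    url: str
    headers: dict


def openai_endpoint(api_key: str) -> ChatEndpoint:
    # Public OpenAI API: the model is chosen by the "model" field in the request body.
    return ChatEndpoint(
        url="https://api.openai.com/v1/chat/completions",
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )


def azure_endpoint(resource: str, deployment: str, api_version: str, api_key: str) -> ChatEndpoint:
    # Azure OpenAI: the model is selected by the deployment name in the URL path.
    return ChatEndpoint(
        url=(
            f"https://{resource}.openai.azure.com/openai/deployments/"
            f"{deployment}/chat/completions?api-version={api_version}"
        ),
        headers={"api-key": api_key, "Content-Type": "application/json"},
    )


def pick_endpoint(stage: str, cfg: dict) -> ChatEndpoint:
    """Develop against OpenAI; send production traffic to Azure."""
    if stage == "production":
        return azure_endpoint(cfg["resource"], cfg["deployment"], cfg["api_version"], cfg["azure_key"])
    return openai_endpoint(cfg["openai_key"])


# Placeholder configuration values (not real credentials or resources).
CONFIG = {
    "openai_key": "sk-placeholder",
    "resource": "my-resource",
    "deployment": "my-gpt35-deployment",
    "api_version": "2023-05-15",
    "azure_key": "azure-placeholder",
}
print(pick_endpoint("development", CONFIG).url)
print(pick_endpoint("production", CONFIG).url)
```

Keeping the request body identical and switching only the endpoint makes the move from development to deployment a configuration change rather than a code change.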

Update announcement: https://openai.com/blog/function-calling-and-other-api-updates

GPT-4 API waitlist: https://openai.com/waitlist/gpt-4-api

