OpenAI launches major update to GPT chatbot API for developers and lowers price

In news from June 14, OpenAI announced a major update to its large language model API (covering GPT-4 and gpt-3.5-turbo) that adds a new function calling capability, lowers usage costs, and provides a 16,000-token context window version of the gpt-3.5-turbo model.

A large language model (LLM) is an AI technology that processes natural language. Its "context window" works like short-term memory: it holds the input content or the ongoing chatbot conversation. Enlarging the context window has become a technical race among language models, and Anthropic recently announced that its Claude model offers a 100,000-token context window option (roughly 75,000 words). OpenAI has also developed a 32,000-token version of GPT-4, but it has not yet been made publicly available.

OpenAI has just launched a version of gpt-3.5-turbo with a 16,000-token context window, named "gpt-3.5-turbo-16k". It accepts inputs up to 16,000 tokens long, enough to process roughly 20 pages of text at a time, which is a big improvement for developers who need the model to process and generate larger blocks of text.
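
To make the 16,000-token figure concrete, here is a minimal Python sketch that checks whether a prompt is likely to fit in gpt-3.5-turbo-16k's context window. It assumes the common rule of thumb of roughly 4 characters per English token, and it reserves part of the window for the model's reply because the prompt and the reply share the same window. The function names and the 1,000-token reserve are hypothetical; a real application would count tokens with an actual tokenizer rather than this estimate.

```python
import math

# Context window size as reported in the article (tokens).
GPT35_TURBO_16K_WINDOW = 16_000

# Assumption: rough rule of thumb of ~4 characters per English token.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of `text` (heuristic, not a tokenizer)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_window(text: str, reserve_for_output: int = 1_000,
                   window: int = GPT35_TURBO_16K_WINDOW) -> bool:
    """Return True if the estimated prompt size plus the tokens reserved
    for the model's reply stays within the context window."""
    return estimate_tokens(text) + reserve_for_output <= window


if __name__ == "__main__":
    short_doc = "word " * 2_000    # 10,000 characters, about 2,500 tokens
    long_doc = "word " * 20_000    # 100,000 characters, about 25,000 tokens
    print(fits_in_window(short_doc))
    print(fits_in_window(long_doc))
```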

In addition to this change, OpenAI also lists at least four other major new features:

- Introducing function calling capabilities in the Chat Completions API

- Improved and "more steerable" versions of GPT-4 and gpt-3.5-turbo

- A 75% price cut for the "ada" embedding model

- A 25% price cut on input tokens for the gpt-3.5-turbo model

Function calling makes it easier for developers to build chatbots that can call external tools, convert natural language into external API calls, or run database queries. For example, it can turn an input such as "Send an email to Anya to see if she wants coffee next Friday" into a function call like "send_email(to: string, body: string)". The feature also makes it easier for API users to get output in JSON format, something that was previously difficult to achieve.
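
The Python sketch below illustrates the function-calling flow described above without making any network calls. It assumes the request shape OpenAI documented for this release: a "functions" list whose entries carry a name, a description, and JSON Schema "parameters", and an assistant reply whose "function_call" holds the function name plus its arguments as a JSON-encoded string. The send_email function, the DISPATCH table, and the sample reply are hypothetical stand-ins; in a real app the reply would come from the API.

```python
import json
from typing import Optional


# Hypothetical local function the model may ask us to call.
def send_email(to: str, body: str) -> str:
    # A real implementation would hand the message to a mail service.
    return f"queued email to {to}: {body}"


# Function description sent alongside the chat messages; "parameters"
# is a JSON Schema object describing the arguments.
FUNCTIONS = [
    {
        "name": "send_email",
        "description": "Send an email to a contact",
        "parameters": {
            "type": "object",
            "properties": {
                "to": {"type": "string", "description": "Recipient name or address"},
                "body": {"type": "string", "description": "Email text"},
            },
            "required": ["to", "body"],
        },
    }
]

request_body = {
    "model": "gpt-3.5-turbo-0613",
    "messages": [
        {"role": "user",
         "content": "Send an email to Anya to see if she wants coffee next Friday"},
    ],
    "functions": FUNCTIONS,
}

# Simulated assistant message (hypothetical; a real one comes from the API).
# "arguments" is a JSON-encoded string, not a dict.
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "send_email",
        "arguments": json.dumps({"to": "Anya",
                                 "body": "Do you want to get coffee next Friday?"}),
    },
}

# Map function names the model may return to local callables.
DISPATCH = {"send_email": send_email}


def run_function_call(message: dict) -> Optional[str]:
    """Execute the local function the model requested, if any."""
    call = message.get("function_call")
    if call is None:
        return None  # ordinary text reply, nothing to execute
    func = DISPATCH[call["name"]]
    kwargs = json.loads(call["arguments"])  # parse the JSON argument string
    return func(**kwargs)


if __name__ == "__main__":
    print(run_function_call(sample_message))
```

The model only proposes the call; the developer's own code decides whether to run it, which is why the arguments are parsed and dispatched locally. A production app would also want to validate the parsed arguments before acting on them.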

As for being "more steerable", a technical term for getting an LLM to behave the way you want, OpenAI said its new "gpt-3.5-turbo-0613" model includes "more reliable steerability via the system message". System messages are a special instruction input in the API that tells the model how to behave, such as "You are Grimes and you only talk about milkshakes."
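
In the Chat Completions API, the system message is simply an entry in the messages list with the role "system", placed before the user's message. The Python sketch below only builds the JSON request body and sends nothing; the helper name and the user question are hypothetical, while the model name and the system prompt come from the article.

```python
import json


def build_chat_request(system_prompt: str, user_message: str,
                       model: str = "gpt-3.5-turbo-0613") -> dict:
    """Build a Chat Completions request body whose first message is a
    system message that sets the model's behavior."""
    return {
        "model": model,
        "messages": [
            # The system message steers how the model should behave.
            {"role": "system", "content": system_prompt},
            # The user's actual question follows.
            {"role": "user", "content": user_message},
        ],
    }


body = build_chat_request(
    "You are Grimes and you only talk about milkshakes.",
    "What should I have for dinner?",
)

if __name__ == "__main__":
    print(json.dumps(body, indent=2))
```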

Beyond the new features, OpenAI is also cutting costs considerably. Notably, the input token price of the popular gpt-3.5-turbo model drops by 25%. Developers can now use this model for about $0.0015 per 1,000 input tokens and $0.002 per 1,000 output tokens, which works out to roughly 700 pages of text per dollar. The gpt-3.5-turbo-16k model is priced at $0.003 per 1,000 input tokens and $0.004 per 1,000 output tokens.
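
Because input and output tokens are billed at separate rates, estimating the cost of a request is a small piece of arithmetic. The Python sketch below uses the prices quoted above; the function name and the token counts in the example are hypothetical, and it uses Decimal to avoid floating-point rounding in dollar amounts.

```python
from decimal import Decimal

# Prices in US dollars per 1,000 tokens, as listed in the article.
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"input": Decimal("0.0015"), "output": Decimal("0.002")},
    "gpt-3.5-turbo-16k": {"input": Decimal("0.003"), "output": Decimal("0.004")},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Return the dollar cost of one request: input and output tokens
    are billed separately at their own per-1K-token rates."""
    price = PRICES_PER_1K[model]
    return (price["input"] * input_tokens + price["output"] * output_tokens) / 1000


if __name__ == "__main__":
    # 10,000 prompt tokens and 1,000 reply tokens on the 16k model:
    # 10 x $0.003 + 1 x $0.004 = $0.034
    print(request_cost("gpt-3.5-turbo-16k", 10_000, 1_000))
```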

IT House also noted that OpenAI cut the price of its "text-embedding-ada-002" embedding model by 75%. Embedding models let computers work with words and concepts by converting natural language into numerical vectors that machines can process, which is important for tasks such as text search and recommending related content.
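
To illustrate how embeddings support search and recommendation, the Python sketch below ranks documents by cosine similarity to a query vector. The three-dimensional vectors are made-up toy values standing in for real embeddings (text-embedding-ada-002 returns 1,536-dimensional vectors through the API), and the document names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: list[float], docs: dict[str, list[float]]) -> list[str]:
    """Return document names ordered from most to least similar to the query."""
    return sorted(docs, key=lambda name: cosine_similarity(query, docs[name]),
                  reverse=True)


# Toy embeddings (hypothetical values, not real model output).
docs = {
    "coffee shop guide": [0.9, 0.1, 0.0],
    "espresso recipes": [0.8, 0.2, 0.1],
    "tax filing tips": [0.0, 0.1, 0.9],
}
query = [0.85, 0.15, 0.05]  # stands in for an embedding of "where to get coffee"

if __name__ == "__main__":
    print(rank_by_similarity(query, docs))
```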

Because OpenAI keeps updating its models, older versions are not kept around indefinitely. The company also announced that it is beginning to retire some earlier model versions, including gpt-3.5-turbo-0301 and gpt-4-0314. Developers can continue to use these models until September 13, after which they will no longer be available, the company said. Notably, access to OpenAI's GPT-4 API still goes through a waitlist and is not yet fully open.
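
Applications that pin a dated model version can guard against this retirement schedule with a simple check. The Python sketch below is hypothetical: the helper names are illustrative, and the year 2023 is inferred from the June 2023 announcement rather than stated in the article.

```python
from datetime import date

# Model versions the article says are being retired, mapped to the last day
# they remain usable (September 13; the year 2023 is an assumption based on
# the June 2023 announcement).
RETIREMENT_DATES = {
    "gpt-3.5-turbo-0301": date(2023, 9, 13),
    "gpt-4-0314": date(2023, 9, 13),
}


def is_retired(model: str, today: date) -> bool:
    """Return True if `model` is past its announced retirement date."""
    cutoff = RETIREMENT_DATES.get(model)
    return cutoff is not None and today > cutoff


def check_models(models: list[str], today: date) -> list[str]:
    """Return the pinned models that are no longer available on `today`."""
    return [m for m in models if is_retired(m, today)]


if __name__ == "__main__":
    pinned = ["gpt-3.5-turbo-0301", "gpt-3.5-turbo-0613"]
    print(check_models(pinned, date(2023, 10, 1)))
```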

The above is the detailed content of OpenAI launches major update to GPT chatbot API for developers and lowers price. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

Step-by-step guide to using Groq Llama 3 70B locally

Jun 10, 2024 am 09:16 AM

Step-by-step guide to using Groq Llama 3 70B locally

Jun 10, 2024 am 09:16 AM

Translator | Bugatti Review | Chonglou This article describes how to use the GroqLPU inference engine to generate ultra-fast responses in JanAI and VSCode. Everyone is working on building better large language models (LLMs), such as Groq focusing on the infrastructure side of AI. Rapid response from these large models is key to ensuring that these large models respond more quickly. This tutorial will introduce the GroqLPU parsing engine and how to access it locally on your laptop using the API and JanAI. This article will also integrate it into VSCode to help us generate code, refactor code, enter documentation and generate test units. This article will create our own artificial intelligence programming assistant for free. Introduction to GroqLPU inference engine Groq

Caltech Chinese use AI to subvert mathematical proofs! Speed up 5 times shocked Tao Zhexuan, 80% of mathematical steps are fully automated

Apr 23, 2024 pm 03:01 PM

Caltech Chinese use AI to subvert mathematical proofs! Speed up 5 times shocked Tao Zhexuan, 80% of mathematical steps are fully automated

Apr 23, 2024 pm 03:01 PM

LeanCopilot, this formal mathematics tool that has been praised by many mathematicians such as Terence Tao, has evolved again? Just now, Caltech professor Anima Anandkumar announced that the team released an expanded version of the LeanCopilot paper and updated the code base. Image paper address: https://arxiv.org/pdf/2404.12534.pdf The latest experiments show that this Copilot tool can automate more than 80% of the mathematical proof steps! This record is 2.3 times better than the previous baseline aesop. And, as before, it's open source under the MIT license. In the picture, he is Song Peiyang, a Chinese boy. He is

From 'human + RPA' to 'human + generative AI + RPA', how does LLM affect RPA human-computer interaction?

Jun 05, 2023 pm 12:30 PM

From 'human + RPA' to 'human + generative AI + RPA', how does LLM affect RPA human-computer interaction?

Jun 05, 2023 pm 12:30 PM

Image source@visualchinesewen|Wang Jiwei From "human + RPA" to "human + generative AI + RPA", how does LLM affect RPA human-computer interaction? From another perspective, how does LLM affect RPA from the perspective of human-computer interaction? RPA, which affects human-computer interaction in program development and process automation, will now also be changed by LLM? How does LLM affect human-computer interaction? How does generative AI change RPA human-computer interaction? Learn more about it in one article: The era of large models is coming, and generative AI based on LLM is rapidly transforming RPA human-computer interaction; generative AI redefines human-computer interaction, and LLM is affecting the changes in RPA software architecture. If you ask what contribution RPA has to program development and automation, one of the answers is that it has changed human-computer interaction (HCI, h

Plaud launches NotePin AI wearable recorder for $169

Aug 29, 2024 pm 02:37 PM

Plaud launches NotePin AI wearable recorder for $169

Aug 29, 2024 pm 02:37 PM

Plaud, the company behind the Plaud Note AI Voice Recorder (available on Amazon for $159), has announced a new product. Dubbed the NotePin, the device is described as an AI memory capsule, and like the Humane AI Pin, this is wearable. The NotePin is

Seven Cool GenAI & LLM Technical Interview Questions

Jun 07, 2024 am 10:06 AM

Seven Cool GenAI & LLM Technical Interview Questions

Jun 07, 2024 am 10:06 AM

To learn more about AIGC, please visit: 51CTOAI.x Community https://www.51cto.com/aigc/Translator|Jingyan Reviewer|Chonglou is different from the traditional question bank that can be seen everywhere on the Internet. These questions It requires thinking outside the box. Large Language Models (LLMs) are increasingly important in the fields of data science, generative artificial intelligence (GenAI), and artificial intelligence. These complex algorithms enhance human skills and drive efficiency and innovation in many industries, becoming the key for companies to remain competitive. LLM has a wide range of applications. It can be used in fields such as natural language processing, text generation, speech recognition and recommendation systems. By learning from large amounts of data, LLM is able to generate text

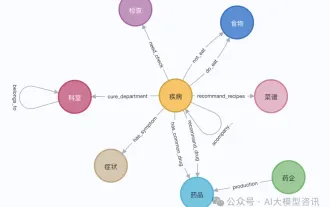

GraphRAG enhanced for knowledge graph retrieval (implemented based on Neo4j code)

Jun 12, 2024 am 10:32 AM

GraphRAG enhanced for knowledge graph retrieval (implemented based on Neo4j code)

Jun 12, 2024 am 10:32 AM

Graph Retrieval Enhanced Generation (GraphRAG) is gradually becoming popular and has become a powerful complement to traditional vector search methods. This method takes advantage of the structural characteristics of graph databases to organize data in the form of nodes and relationships, thereby enhancing the depth and contextual relevance of retrieved information. Graphs have natural advantages in representing and storing diverse and interrelated information, and can easily capture complex relationships and properties between different data types. Vector databases are unable to handle this type of structured information, and they focus more on processing unstructured data represented by high-dimensional vectors. In RAG applications, combining structured graph data and unstructured text vector search allows us to enjoy the advantages of both at the same time, which is what this article will discuss. structure

Visualize FAISS vector space and adjust RAG parameters to improve result accuracy

Mar 01, 2024 pm 09:16 PM

Visualize FAISS vector space and adjust RAG parameters to improve result accuracy

Mar 01, 2024 pm 09:16 PM

As the performance of open source large-scale language models continues to improve, performance in writing and analyzing code, recommendations, text summarization, and question-answering (QA) pairs has all improved. But when it comes to QA, LLM often falls short on issues related to untrained data, and many internal documents are kept within the company to ensure compliance, trade secrets, or privacy. When these documents are queried, LLM can hallucinate and produce irrelevant, fabricated, or inconsistent content. One possible technique to handle this challenge is Retrieval Augmented Generation (RAG). It involves the process of enhancing responses by referencing authoritative knowledge bases beyond the training data source to improve the quality and accuracy of the generation. The RAG system includes a retrieval system for retrieving relevant document fragments from the corpus

Google AI announces Gemini 1.5 Pro and Gemma 2 for developers

Jul 01, 2024 am 07:22 AM

Google AI announces Gemini 1.5 Pro and Gemma 2 for developers

Jul 01, 2024 am 07:22 AM

Google AI has started to provide developers with access to extended context windows and cost-saving features, starting with the Gemini 1.5 Pro large language model (LLM). Previously available through a waitlist, the full 2 million token context windo