Challenge NVIDIA! AMD launches AI chip that can run larger models and write poetry

AMD, the number-two player in the AI computing market, has launched its MI300 series of AI GPUs to compete with Nvidia for that market.

In the early morning of June 14th, Beijing time, AMD held its "AMD Data Center and Artificial Intelligence Technology Premiere" as scheduled and launched the MI300 series of AI processors at the event. The MI300X, which is specifically optimized for large language models, will ship to a select group of customers later this year.

AMD CEO Lisa Su first introduced the MI300A, billed as the world's first APU (accelerated processing unit) accelerator for AI and high-performance computing (HPC). It packs 146 billion transistors across 13 chiplets. Compared with the previous-generation MI250, the MI300 delivers eight times the performance and five times the efficiency.

Subsequently, Su Zifeng announced the most watched product of this conference-MI300X, which is a version optimized for large language models.

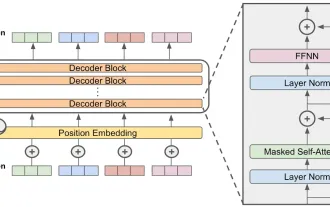

"I like this chip," Su Zifeng said. The MI300X chip and its CDNA architecture are designed for large language models and other cutting-edge artificial intelligence models.

Large language models for generative AI applications use large amounts of memory as they run more and more calculations. AMD demonstrated the MI300X running a 40-billion-parameter Falcon model. For comparison, OpenAI's GPT-3 model has 175 billion parameters.
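As a rough illustration of why parameter count translates into memory demand, the sketch below estimates the memory needed just to hold a model's weights. It assumes 2 bytes per parameter (16-bit precision), which the article does not state, and it ignores activations and other runtime buffers. The function name is hypothetical.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    bytes_per_param=2 is an assumption (16-bit weights); real deployments
    may use other precisions and need extra memory at run time.
    """
    return num_params * bytes_per_param / 1e9


# Parameter counts are the ones quoted in the article.
for name, params in [("Falcon (40B)", 40e9), ("GPT-3 (175B)", 175e9)]:
    print(f"{name}: about {weight_memory_gb(params):.0f} GB of weights")
```

Under this assumption, the 40-billion-parameter model needs about 80 GB for its weights and a 175-billion-parameter model about 350 GB, which is why memory capacity per chip matters so much for these workloads.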

Su also used a large model from Hugging Face, running on the MI300X, to write a poem about San Francisco, where the event was held.

Su said that the MI300X offers 2.4 times the HBM (high-bandwidth memory) density of Nvidia's H100 and 1.6 times the HBM bandwidth of the competing product. This means a single AMD chip can hold larger models than an Nvidia H100.

"Model sizes are getting larger and larger, and you actually need multiple GPUs to run the latest large language models," Su pointed out. With more memory on AMD's chips, developers will not need as many GPUs, which can save costs for users.

AMD also said that it will launch an Infinity Architecture platform that integrates eight MI300X accelerators in one system. Nvidia and Google are developing similar systems, which combine eight or more GPUs in a single box for AI applications.
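To put the memory argument in concrete terms, the sketch below estimates how many accelerators are needed just to hold a model's weights. The per-chip capacities (80 GB for the H100 variant assumed here, 192 GB for the MI300X) are vendor-published figures that the article does not state, although their ratio matches the 2.4 times quoted above. The 350 GB workload assumes 175 billion parameters at 2 bytes each, and the function name is hypothetical.

```python
import math

# Assumed per-accelerator HBM capacities in GB (not stated in the article).
H100_GB = 80
MI300X_GB = 192


def accelerators_needed(model_gb: float, memory_per_accelerator_gb: float) -> int:
    """Minimum number of accelerators whose combined memory holds the weights."""
    return math.ceil(model_gb / memory_per_accelerator_gb)


# Assumed workload: 175 billion parameters at 2 bytes each.
model_gb = 175e9 * 2 / 1e9

print("capacity ratio:", MI300X_GB / H100_GB)
print("H100s needed:", accelerators_needed(model_gb, H100_GB))
print("MI300Xs needed:", accelerators_needed(model_gb, MI300X_GB))
print("memory in an eight-MI300X system (GB):", 8 * MI300X_GB)
```

Under these assumptions the weights fit on two MI300X chips but need five H100s. This counts weights only, so real deployments would need additional headroom.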

A good chip needs more than strong hardware; it also needs a good ecosystem. One reason AI developers have historically favored Nvidia chips is that Nvidia has a well-developed software package called CUDA that gives them access to the chip's core hardware functionality.

To compete with Nvidia's CUDA, AMD offers its own chip software, "ROCm", as the foundation of its own software ecosystem.

Su also told investors and analysts that artificial intelligence is the company's "largest and most strategic long-term growth opportunity." "We believe that the data center AI accelerator (market) will grow at a compound annual growth rate of more than 50%, from about US$30 billion this year to more than US$150 billion in 2027."
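The two figures in that forecast can be checked against each other with a compound-growth calculation. The sketch below assumes that "this year" is 2023, so that growth compounds over four years to 2027; the article does not state the year, and the function names are hypothetical.

```python
def project_market(start_billion: float, annual_growth: float, years: int) -> float:
    """Market size after compounding annual_growth for the given number of years."""
    return start_billion * (1 + annual_growth) ** years


def implied_cagr(start_billion: float, end_billion: float, years: int) -> float:
    """Compound annual growth rate that takes start_billion to end_billion."""
    return (end_billion / start_billion) ** (1 / years) - 1


YEARS = 4  # assumption: "this year" is 2023, forecast horizon is 2027

print(f"US$30B at 50% per year for {YEARS} years: "
      f"US${project_market(30, 0.50, YEARS):.1f}B")
print(f"CAGR implied by US$30B -> US$150B: {implied_cagr(30, 150, YEARS):.1%}")
```

Under that four-year assumption, 50% annual growth takes US$30 billion to roughly US$152 billion, so the quoted growth rate and the 2027 figure are consistent with each other.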

Large language models such as ChatGPT require the highest-performance GPUs for computing. Nvidia currently dominates this market with an 80% share, while AMD is regarded as a strong challenger.

Although AMD did not disclose pricing, the launch may put price pressure on Nvidia GPUs such as the H100, which can cost more than US$30,000. Falling GPU prices may help reduce the high cost of generative AI applications.

Although many of the products AMD showed at this event were billed as "leading" in performance, the capital market did not push up AMD's stock price. Instead, it closed down 3.61%, while its peer Nvidia closed up 3.90%, with its market value closing above the US$1 trillion mark for the first time.

[Source: The Paper]
