For large AI model developers who can't get NVIDIA GPUs, AMD's chips are here!

AMD’s “ultimate weapon” is here!

On June 13, Eastern Time, AMD announced at its "AMD Data Center and Artificial Intelligence Technology Premiere" event that it will launch the MI300X, its most advanced AI GPU to date. The chip is designed to speed up the generative AI processing behind ChatGPT and other chatbots, and it supports up to 192GB of memory.

By comparison, NVIDIA's H100 chip supports only 120GB of memory, which is widely taken to mean that NVIDIA's dominance in this emerging market may be challenged. The MI300X will begin shipping to select customers later this year.

Although investors expect AMD to compete with NVIDIA in AI chips, analysts have not joined the chorus of praise for AMD's powerful new weapon. Instead, they point out soberly that AMD still has a long way to go before it can challenge NVIDIA's industry leadership in AI chips, and that this chip alone cannot do it.

Citi chip analyst Chris Danely said in his latest report that AMD's MI300 chip appears to have scored a major design win, but given the performance limitations and history of failure, he is skeptical about the sustainability of graphics/CPU ICs. "While we expect AMD to continue to gain market share from Intel, its new Genoa products appear to be growing slower than expected," he wrote.

On the same day, Karl Freund, founder and chief analyst of Cambrian-AI Research LLC, wrote in Forbes that although AMD's newly launched chip has aroused huge interest across the market, the MI300X faces some challenges compared with Nvidia's H100, mainly in the following four respects:

First, NVIDIA is already shipping the H100 in full volume, and the company has by far the largest ecosystem of software and researchers in the AI industry.

Second, although the MI300X offers 192GB of memory, Nvidia will soon catch up on this point and may even overtake it in the same time frame, so this is not a big advantage. The MI300X will also be very expensive and won't have a significant cost advantage over Nvidia's H100.

Third, and this is the real key: unlike the H100, the MI300 does not have a Transformer Engine (a library for accelerating Transformer models on NVIDIA GPUs), which can double the performance of large language models (LLMs). If it takes a year to train a new model with thousands of NVIDIA GPUs, then training on AMD hardware may take another 2-3 years, or require 3 times as many GPUs to solve the problem.

Finally, AMD has not yet disclosed any benchmarks. Performance when training and running LLMs depends on the system design as well as the GPU, so Freund said he looks forward to seeing comparisons with industry competitors later this year.

However, Freund also added that the MI300X may become an alternative to Nvidia's GH200 Grace Hopper superchip. Companies like OpenAI and Microsoft need such alternatives, and although he suspects AMD will give these companies an offer they can't refuse, he does not expect AMD to take much market share away from Nvidia.

AMD's share price has risen by 94% since the beginning of this year. Yesterday, its US-listed shares closed down 3.61%, while peer Nvidia closed up 3.90%, with its market value closing above the US$1 trillion mark for the first time.

In fact, Nvidia is ahead not only because of its chips, but also because of the software tools it has provided to artificial intelligence researchers for more than a decade. Moor Insights & Strategy analyst Anshel Sag said: "Even if AMD is competitive in terms of hardware performance, people are still not convinced that its software solutions can compete with Nvidia."

Expected to drive healthy competition among technology companies

American technology commentator Billy Duberstein pointed out on the 12th that with the popularity of ChatGPT, AI seems to have opened up a new field, and leading companies in many industries have begun racing to capture market share. Whether Nvidia or AMD wins this race, every technology company will benefit.

Duberstein said potential customers are very interested in the MI300. According to a June 8 article in Digital Times that he quoted, data center customers are desperately looking for alternatives to Nvidia products. Nvidia currently occupies a dominant position in the high-profit and high-growth market of artificial intelligence GPUs, accounting for 60% to 70% of the market share in the field of AI servers.

Duberstein went on to point out that, given the current high price of Nvidia H100 servers, data center operators would like to see Nvidia face a competitor, which would help bring down the price of AI chips. This is therefore a huge advantage for AMD and a challenge for Nvidia, and it can lead to good profitability for every market participant.

Last month, Morgan Stanley analyst Joseph Moore adjusted his forecast for AMD's artificial intelligence revenue, saying it could be "several times higher" than initially expected.

Chinese companies scramble for GPUs; the AI industry chain is expected to continue to benefit

Major technology companies around the world are currently scrambling for a ticket into AI. Compared with overseas giants, large Chinese technology companies are under even more urgency to purchase GPUs.

According to "LatePost", since the Spring Festival this year, major Chinese Internet companies with cloud computing businesses have placed large orders with NVIDIA. ByteDance has ordered more than $1 billion in GPUs from Nvidia this year, and another large company's order has exceeded 1 billion yuan. ByteDance's orders this year alone may be close to the total number of commercial GPUs Nvidia sold in China last year.

Guojin Securities remains optimistic about AMD's new moves and about the AI industry chain. The firm believes that the continued popularity of generative AI has driven strong demand for AI chips. NVIDIA's second-quarter data center business guidance exceeded expectations, and capacity utilization at TSMC's 4nm, 5nm, and 7nm nodes has risen significantly, which is the best evidence of this.

Guojin Securities pointed out that, according to its industry chain research, a leading optical module DSP chip manufacturer said AI-related business is expected to grow rapidly; a leading CPU/GPU heat sink company said its AI GPU business is seeing significant quarter-on-quarter growth in the second and third quarters; and a leading maker of server PCIe Retimer chips said demand will gradually accelerate in the next quarter. Between 2024 and 2025, new AI products are expected to drive the development of the AI industry chain and bring sustained economic benefits.

The above is the detailed content of For big AI models who can't get NVIDIA GPUs, AMD chips are here!. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1369

1369

52

52

AMD Radeon RX 7800M in OneXGPU 2 outperforms Nvidia RTX 4070 Laptop GPU

Sep 09, 2024 am 06:35 AM

AMD Radeon RX 7800M in OneXGPU 2 outperforms Nvidia RTX 4070 Laptop GPU

Sep 09, 2024 am 06:35 AM

OneXGPU 2 is the first eGPUto feature the Radeon RX 7800M, a GPU that even AMD hasn't announced yet. As revealed by One-Netbook, the manufacturer of the external graphics card solution, the new AMD GPU is based on RDNA 3 architecture and has the Navi

AMD Z2 Extreme chip for handheld consoles tipped for an early 2025 launch

Sep 07, 2024 am 06:38 AM

AMD Z2 Extreme chip for handheld consoles tipped for an early 2025 launch

Sep 07, 2024 am 06:38 AM

Even though AMD tailor-made the Ryzen Z1 Extreme (and its non-Extreme variant) for handheld consoles, the chip only ever found itself in two mainstream handhelds, the Asus ROG Ally (curr. $569 on Amazon) and Lenovo Legion Go (three if you count the R

Deal | Lenovo ThinkPad P14s Gen 5 with 120Hz OLED, 64GB RAM and AMD Ryzen 7 Pro is 60% off right now

Sep 07, 2024 am 06:31 AM

Deal | Lenovo ThinkPad P14s Gen 5 with 120Hz OLED, 64GB RAM and AMD Ryzen 7 Pro is 60% off right now

Sep 07, 2024 am 06:31 AM

Many students are going back to school these days, and some may notice that their old laptop isn't up to the task anymore. Some college students might even be in the market for a high-end business notebook with a gorgeous OLED screen, in which case t

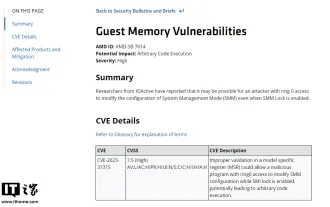

AMD announces 'Sinkclose' high-severity vulnerability, affecting millions of Ryzen and EPYC processors

Aug 10, 2024 pm 10:31 PM

AMD announces 'Sinkclose' high-severity vulnerability, affecting millions of Ryzen and EPYC processors

Aug 10, 2024 pm 10:31 PM

According to news from this site on August 10, AMD officially confirmed that some EPYC and Ryzen processors have a new vulnerability called "Sinkclose" with the code "CVE-2023-31315", which may involve millions of AMD users around the world. So, what is Sinkclose? According to a report by WIRED, the vulnerability allows intruders to run malicious code in "System Management Mode (SMM)." Allegedly, intruders can use a type of malware called a bootkit to take control of the other party's system, and this malware cannot be detected by anti-virus software. Note from this site: System Management Mode (SMM) is a special CPU working mode designed to achieve advanced power management and operating system independent functions.

Beelink SER9: Compact AMD Zen 5 mini-PC announced with Radeon 890M iGPU but limited eGPU options

Sep 12, 2024 pm 12:16 PM

Beelink SER9: Compact AMD Zen 5 mini-PC announced with Radeon 890M iGPU but limited eGPU options

Sep 12, 2024 pm 12:16 PM

Beelink continues to introduce new mini-PCs and accompanying accessories at a rate of knots. To recap, little over a month has passed since it released the EQi12, EQR6 and the EX eGPU dock. Now, the company has turned its attention to AMD's new Strix

First Minisforum mini PC with Ryzen AI 9 HX 370 rumored to launch with high price tag

Sep 29, 2024 am 06:05 AM

First Minisforum mini PC with Ryzen AI 9 HX 370 rumored to launch with high price tag

Sep 29, 2024 am 06:05 AM

Aoostar was among the first to announce a Strix Point mini PC, and later, Beelink launched the SER9with a soaring starting price tag of $999. Minisforum joined the party by teasingthe EliteMini AI370, and as the name suggests, it will be the company'

IFA 2024 | New Lenovo Yoga Pro 7 debuts with AMD Strix Point processor

Sep 06, 2024 am 06:42 AM

IFA 2024 | New Lenovo Yoga Pro 7 debuts with AMD Strix Point processor

Sep 06, 2024 am 06:42 AM

Asus had the first round of AMD Strix Point laptop launches, and now Lenovo has joined the party. Among the newly launched laptops is the Yoga Pro 7, which packs the Ryzen AI 9 365. Compared to the Hawk Point option launched earlier this year, the ne

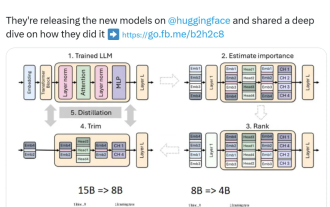

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

Nvidia plays with pruning and distillation: halving the parameters of Llama 3.1 8B to achieve better performance with the same size

Aug 16, 2024 pm 04:42 PM

The rise of small models. Last month, Meta released the Llama3.1 series of models, which includes Meta’s largest model to date, the 405B model, and two smaller models with 70 billion and 8 billion parameters respectively. Llama3.1 is considered to usher in a new era of open source. However, although the new generation models are powerful in performance, they still require a large amount of computing resources when deployed. Therefore, another trend has emerged in the industry, which is to develop small language models (SLM) that perform well enough in many language tasks and are also very cheap to deploy. Recently, NVIDIA research has shown that structured weight pruning combined with knowledge distillation can gradually obtain smaller language models from an initially larger model. Turing Award Winner, Meta Chief A