Efficiently crawl web page data: combined use of PHP and Selenium

Web applications play a growing role in daily work and life, and crawling web page data is a common task when developing them. Many scraping tools only download raw HTML, which makes them a poor fit for pages that build their content in the browser with JavaScript. For such pages, combining PHP with Selenium lets a crawler work with the page as a real browser renders it.

First of all, we need to understand what PHP and Selenium are. PHP is an open source scripting language commonly used for web development. Its syntax resembles C, and it is easy to learn and use. Selenium is an open source tool for testing web applications. It can simulate user operations in the browser and read data from the rendered page. Selenium supports various browsers, including Chrome, Firefox and Safari.

Secondly, we need to set up Selenium WebDriver. WebDriver is the component of Selenium that drives a browser through its automation interface, which makes both automated testing and data crawling possible. Each browser needs its own driver program. For example, to use Chrome you need to download the ChromeDriver release that matches your installed Chrome version. The code in this article also assumes that a Selenium server is running and listening on localhost:4444.

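Next, we can use PHP to write the crawler program. First, we load Composer's autoloader and import the WebDriver classes. The namespaces below belong to the php-webdriver package (php-webdriver/webdriver, formerly facebook/webdriver):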

<?php
// Load the classes installed by Composer.
require_once 'vendor/autoload.php';

use Facebook\WebDriver\Remote\DesiredCapabilities;
use Facebook\WebDriver\Remote\RemoteWebDriver;
use Facebook\WebDriver\WebDriverBy;

Then, we can use RemoteWebDriver to open the browser and access the target website:

// Address of the running Selenium server.
$host = 'http://localhost:4444/wd/hub';
// Start a Chrome session through the server.
$driver = RemoteWebDriver::create($host, DesiredCapabilities::chrome());
// Load the target page.
$driver->get('http://www.example.com');

After accessing the website, we can use WebDriverBy to locate page elements and read their data. For example, if you want to get all the links on the page, you can use the following code:

// Find every <a> element on the page.
$linkElements = $driver->findElements(WebDriverBy::tagName('a'));
$links = array();
foreach ($linkElements as $linkElement) {
    $links[] = array(
        'text' => $linkElement->getText(),
        'href' => $linkElement->getAttribute('href')
    );
}

This code gets all the links on the page and saves their text and URL into an array.
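The collected array often contains anchors without a target and repeated URLs. The following is a minimal sketch of one way to tidy it, not part of the original article: cleanLinks() is a hypothetical helper (it does not come from php-webdriver), and the sample data stands in for values read from the browser. It assumes that a missing href may arrive as null.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper (not part of php-webdriver): tidy an array of
 * ['text' => ..., 'href' => ...] entries like the one built above.
 */
function cleanLinks(array $links): array
{
    $seen = [];
    $result = [];
    foreach ($links as $link) {
        // Assumption: a missing href attribute may arrive as null.
        $href = trim((string) ($link['href'] ?? ''));
        if ($href === '' || isset($seen[$href])) {
            continue; // skip anchors without a target and repeated URLs
        }
        $seen[$href] = true;
        $result[] = [
            'text' => trim((string) ($link['text'] ?? '')),
            'href' => $href,
        ];
    }
    return $result;
}

// Sample data standing in for values read from the browser.
$sample = [
    ['text' => ' Home ', 'href' => 'http://www.example.com/'],
    ['text' => 'Top',    'href' => null],
    ['text' => 'Home',   'href' => 'http://www.example.com/'],
];
print_r(cleanLinks($sample));
```

With a real session, the $links array from the loop above could be passed to cleanLinks() in place of $sample. The first occurrence of each URL is kept, so the link text of later duplicates is discarded.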

You can also simulate user operations in the browser: WebDriverBy locates the element, and methods on the element such as sendKeys() and click() perform the action. For example, if you want to enter a keyword in the search box and click the search button, you can use the following code:

// Type the keyword into the search box.
$searchBox = $driver->findElement(WebDriverBy::id('search-box'));
$searchBox->sendKeys('keyword');
// Submit the search by clicking the button.
$searchButton = $driver->findElement(WebDriverBy::id('search-button'));
$searchButton->click();

This code enters the keyword in the search box and clicks the search button.
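After the click, the results may take a moment to appear, so reading them immediately can fail. The following is a minimal sketch of the polling idea, not part of the original article: waitFor() is a hypothetical helper, and the stand-in probe imitates results that only show up on the third check so the block runs without a browser.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical polling helper: call $probe until it returns a value
 * other than null or false, or until the timeout expires.
 */
function waitFor(callable $probe, float $timeoutSeconds = 10.0, int $intervalMs = 250)
{
    $deadline = microtime(true) + $timeoutSeconds;
    do {
        $value = $probe();
        if ($value !== null && $value !== false) {
            return $value;
        }
        usleep($intervalMs * 1000); // pause before the next attempt
    } while (microtime(true) < $deadline);

    throw new RuntimeException("Condition not met within {$timeoutSeconds} seconds");
}

// Stand-in probe: succeeds on the third call, like results that load late.
$calls = 0;
$result = waitFor(function () use (&$calls) {
    $calls++;
    return $calls >= 3 ? 'results ready' : null;
}, 2.0, 10);

echo $result, PHP_EOL;
```

With a real session, the probe could call $driver->findElements() with a selector for the results and return the list only when it is not empty. The selector is an assumption that depends on the target page. php-webdriver also offers its own wait helper through $driver->wait(), which is likely the better fit in production code.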

Finally, we need to close the browser and exit the program:

// End the session and close the browser window.
$driver->quit();
?>
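If a step throws an exception before quit() is reached, the browser session stays open on the Selenium server. The following is a minimal sketch of one way to guard against that, not part of the original article: withDriver() and FakeDriver are hypothetical names, and the fake class stands in for a real driver so the block runs without a server. It assumes PHP 7.4 or later.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical wrapper: create a driver, run a task, always quit.
 * $factory returns any object with a quit() method, for example the
 * result of RemoteWebDriver::create(...).
 */
function withDriver(callable $factory, callable $task)
{
    $driver = $factory();
    try {
        return $task($driver);
    } finally {
        // Runs on normal return and when $task throws.
        $driver->quit();
    }
}

// Stand-in driver so the sketch runs without a Selenium server.
final class FakeDriver
{
    public bool $closed = false;

    public function quit(): void
    {
        $this->closed = true;
    }
}

$fake = new FakeDriver();
try {
    withDriver(fn () => $fake, function ($driver) {
        throw new RuntimeException('element not found');
    });
} catch (RuntimeException $e) {
    // The error still propagates, but quit() has already been called.
}
var_dump($fake->closed);
```

In a real crawler, the factory would return the RemoteWebDriver created earlier and the task would contain the scraping steps.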

Generally speaking, the combination of PHP and Selenium makes it practical to crawl pages that simpler tools cannot handle. Whether the goal is to read page data or to simulate user operations in the browser, both can be done through Selenium WebDriver. It requires some additional installation and configuration, but in return it offers the flexibility of working with a real browser.

The above is the detailed content of Efficiently crawl web page data: combined use of PHP and Selenium. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1376

1376

52

52

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

CakePHP Working with Database

Sep 10, 2024 pm 05:25 PM

Working with database in CakePHP is very easy. We will understand the CRUD (Create, Read, Update, Delete) operations in this chapter.

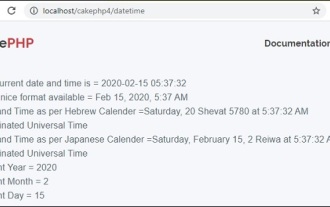

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

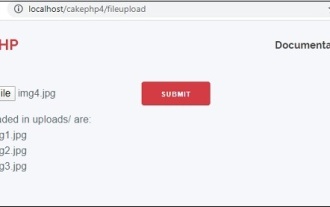

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

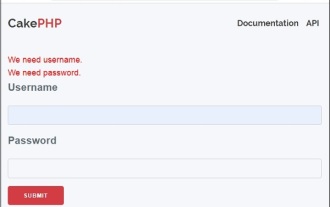

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.

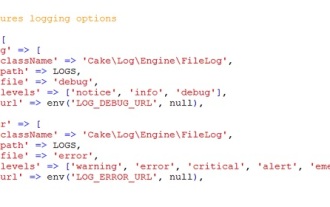

CakePHP Logging

Sep 10, 2024 pm 05:26 PM

CakePHP Logging

Sep 10, 2024 pm 05:26 PM

Logging in CakePHP is a very easy task. You just have to use one function. You can log errors, exceptions, user activities, action taken by users, for any background process like cronjob. Logging data in CakePHP is easy. The log() function is provide

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c