Using PHP and Selenium to implement crawler data collection

As Internet technology has developed, data has become an extremely valuable resource. More and more companies now use data mining and analysis to improve their competitiveness, and data collection is the first step in that process.

Web crawling is one of the most common ways to collect data. A crawler can gather many kinds of data from websites, such as product information, forum posts, and news articles. In this article, we introduce how to use PHP and Selenium to implement crawler data collection.

1. What is Selenium?

Selenium is a tool for testing web applications. It supports multiple browsers, including Chrome, Firefox, IE, etc. Selenium can automate browser operations on the Web, such as clicking links, entering data into text boxes, submitting forms, and more.

For data collection, Selenium can drive a real browser through a web page the way a user would, and the data is then read from the rendered page. Generally speaking, the steps for collecting data are as follows:

- Use Selenium to open the webpage to be collected

- Perform operations on the webpage, such as entering data into a text box, clicking buttons, etc.

- Get the required data

2. Use PHP to call Selenium

Selenium provides official client bindings for several languages, Java among them. In this article we write the Selenium script in Java, compile it, and then call it from PHP.

- Install Java and Selenium

First, we need to install Java and Selenium. Taking Ubuntu as an example, run the following commands:

    sudo apt-get install default-jre
    sudo apt-get install default-jdk

Then download Selenium's Java library and place it in your project directory.

- Write Selenium script

In the project directory, create a file named SeleniumDemo.java (the file name must match the public class name) and write the following Java program in it:

    import org.openqa.selenium.WebDriver;
    import org.openqa.selenium.chrome.ChromeDriver;

    public class SeleniumDemo {
        public static void main(String[] args) {
            System.setProperty("webdriver.chrome.driver", "/path/to/chromedriver"); // path to chromedriver
            WebDriver driver = new ChromeDriver();
            driver.get("http://www.baidu.com"); // site to visit
            String title = driver.getTitle(); // get the page title
            System.out.println(title);
            driver.quit(); // quit the browser
        }
    }

This program opens a Chrome browser, visits the Baidu homepage, then gets the title of the page and prints it. You need to replace "/path/to/chromedriver" with the actual path on your machine. Compile the program before running it, for example with javac -cp .:/path/to/selenium-java.jar SeleniumDemo.java.
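If the driver path is wrong, the program fails when it tries to start the browser. As a minimal sketch, the path can be checked before that point. The class name DriverPath, the helper resolve, and the CHROMEDRIVER_PATH environment variable are assumptions made for this illustration; only the webdriver.chrome.driver property comes from the program above.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class DriverPath {
    // Hypothetical helper: prefer the configured value, otherwise use the fallback,
    // and return an absolute, normalized path.
    static Path resolve(String configured, String fallback) {
        String chosen = (configured == null || configured.isEmpty()) ? fallback : configured;
        return Paths.get(chosen).toAbsolutePath().normalize();
    }

    public static void main(String[] args) {
        // CHROMEDRIVER_PATH is an assumed variable name, not something Selenium defines.
        Path driver = resolve(System.getenv("CHROMEDRIVER_PATH"), "/path/to/chromedriver");
        if (Files.isExecutable(driver)) {
            // Same system property the Selenium program sets before creating ChromeDriver.
            System.setProperty("webdriver.chrome.driver", driver.toString());
            System.out.println("Using driver at " + driver);
        } else {
            System.err.println("chromedriver not found or not executable: " + driver);
        }
    }
}
```

A check like this would run before new ChromeDriver() is called, so a bad path produces a short, explicit message rather than a stack trace.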

- Calling Selenium

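Next, create a file named selenium.php in the same directory and use the exec() function to run the compiled Java program. The code is as follows:

    <?php
    $output = array();
    // Run the compiled Java class; 2>&1 merges the error stream into the captured output.
    exec("java -cp .:/path/to/selenium-java.jar SeleniumDemo 2>&1", $output);
    // The first captured line is expected to be the page title.
    $title = $output[0];
    echo $title;
    ?>

Here, PHP's exec() function runs the Java program and collects each line it prints into $output. "/path/to/selenium-java.jar" needs to be replaced with the actual path on your machine. Note that because 2>&1 merges the error stream into the captured output, any warnings or log lines printed before the title will also appear in $output.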

After executing the above code, you should see the title of Baidu's homepage printed on the screen.

3. Use Selenium to implement data collection

With the basics of Selenium in place, we can start collecting data. Taking product data from JD.com (Jingdong Mall) as an example, here is a demonstration of how to implement it with Selenium.

- Open the webpage

First, we need to open the JD.com homepage and search for the products to be collected. Pay attention to the page's loading time during this process: in the Java program, Thread.sleep() pauses execution for a fixed period so that the page has time to finish loading.
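A fixed sleep wastes time when the page loads quickly and is too short when it loads slowly. Selenium's own answer is an explicit wait (the WebDriverWait class), which re-checks a condition until it holds or a timeout expires. The sketch below illustrates only that polling idea in plain Java, without Selenium on the classpath. The class name PollingWait, the helper waitUntil, and the 300 ms stand-in condition are all hypothetical and not part of the article's program.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

public class PollingWait {
    // Hypothetical helper: re-check `condition` every `interval` until it holds
    // (returns true) or `timeout` has elapsed (returns false).
    static boolean waitUntil(BooleanSupplier condition, Duration timeout, Duration interval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() >= deadline) {
                return false;
            }
            try {
                Thread.sleep(interval.toMillis());
            } catch (InterruptedException e) {
                // Restore the interrupt flag and give up waiting.
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }

    public static void main(String[] args) {
        long start = System.currentTimeMillis();
        // Stand-in for "the results list is present": becomes true after about 300 ms.
        boolean ready = waitUntil(
                () -> System.currentTimeMillis() - start >= 300,
                Duration.ofSeconds(5),
                Duration.ofMillis(50));
        System.out.println("ready=" + ready);
    }
}
```

In the JD.com program, the condition would be something like "at least one search result element is present", checked in place of a fixed five-second sleep.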

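    <?php
    $output = array();
    // Run the compiled JingDongDemo class and capture every line it prints.
    exec("java -cp .:/path/to/selenium-java.jar JingDongDemo 2>&1", $output);
    // Print all collected product lines, not only the first one.
    echo implode(PHP_EOL, $output);
    ?>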

    // JingDongDemo.java
    import org.openqa.selenium.By;
    import org.openqa.selenium.WebDriver;
    import org.openqa.selenium.WebElement;
    import org.openqa.selenium.firefox.FirefoxDriver;
    import java.util.List;
    import java.util.concurrent.TimeUnit;

    public class JingDongDemo {
        public static void main(String[] args) {
            System.setProperty("webdriver.gecko.driver", "/path/to/geckodriver"); // path to geckodriver
            WebDriver driver = new FirefoxDriver();
            driver.manage().timeouts().implicitlyWait(10, TimeUnit.SECONDS); // wait up to 10 s when locating elements
            driver.get("http://www.jd.com"); // open the site
            driver.findElement(By.id("key")).sendKeys("Iphone 7"); // type the product to search for
            driver.findElement(By.className("button")).click(); // click the search button
            try {
                Thread.sleep(5000); // give the results page time to load
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            // The product-extraction code from the next step goes here.
            driver.quit(); // close the browser when finished
        }
    }

- Get product data

Next, we need to get the product data from the search results. On JD.com's results page, each product sits in an element with the class "gl-item". We can use findElements() to obtain all matching elements and parse their contents one by one. This fragment belongs inside main(), after the wait:

    List<WebElement> productList = driver.findElements(By.className("gl-item")); // get all product list items
    for (WebElement product : productList) { // parse each product in turn
        String name = product.findElement(By.className("p-name")).getText();
        String price = product.findElement(By.className("p-price")).getText();
        String commentCount = product.findElement(By.className("p-commit")).getText();
        String shopName = product.findElement(By.className("p-shop")).getText();
        String output = name + " " + price + " " + commentCount + " " + shopName + "\n";
        System.out.println(output);
    }

At this point, we have implemented crawler data collection using PHP and Selenium. Of course, there are many things to watch out for in real data collection, such as the website's anti-crawler measures and compatibility between the browser, the driver, and Selenium versions. I hope this article provides a useful reference for readers who need to collect data.
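One practical detail about the extraction loop: it joins fields with spaces, and the text returned by getText() can itself contain spaces and line breaks, so the PHP side cannot reliably tell where one field ends and the next begins. A possible approach, sketched here under stated assumptions, is to collapse whitespace inside each field and join the fields with tabs, one product per line. The class name RecordLine, the helpers clean and toLine, and the sample values are hypothetical.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class RecordLine {
    // Collapse every run of whitespace (including tabs and newlines) into one space.
    static String clean(String field) {
        return field.replaceAll("\\s+", " ").trim();
    }

    // Join the cleaned fields with tabs so each product occupies exactly one line.
    static String toLine(List<String> fields) {
        return fields.stream().map(RecordLine::clean).collect(Collectors.joining("\t"));
    }

    public static void main(String[] args) {
        // Made-up sample values standing in for name, price, comment count, and shop name.
        List<String> fields = Arrays.asList(
                "Sample Phone\n128GB", "4599.00", "1000+ comments", "Sample Shop");
        System.out.println(toLine(fields));
    }
}
```

On the PHP side, each element of $output could then be split into its four fields with explode("\t", $line).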


Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

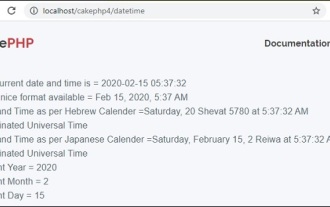

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

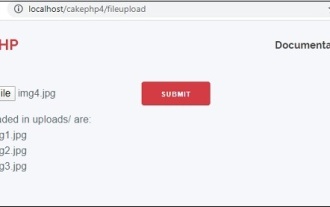

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

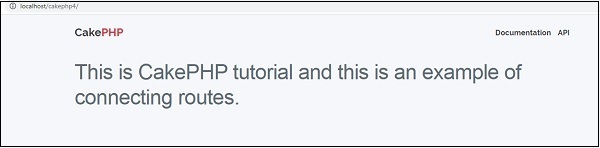

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

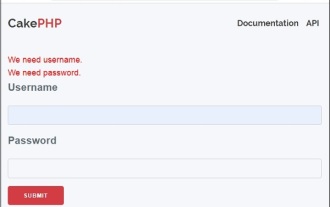

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.