Python Server Programming: Deep Learning with PyTorch

With the rapid development of artificial intelligence, deep learning has become an essential tool in many application fields. PyTorch is one of the most popular deep learning frameworks and a common choice among researchers and engineers. This article introduces how to use PyTorch for deep learning in Python server programming.

- Introduction to PyTorch

PyTorch is an open source deep learning framework for Python that helps researchers and engineers quickly build and train a variety of deep neural networks. Its core idea is eager ("define-by-run") execution: operations run as soon as they are called, so users can inspect and modify a model while it runs, which simplifies debugging and experimentation.
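To make the idea of eager execution concrete, here is a minimal sketch. The tensor values and the branching condition are arbitrary choices for illustration, not something taken from a particular application.

```python
import torch

# Eager execution: each line runs immediately, so intermediate
# tensors can be printed or inspected like ordinary Python values.
x = torch.tensor([[1.0, 2.0], [3.0, 4.0]], requires_grad=True)
y = x * 2            # computed right away, no separate graph-building step
print(y.shape)       # torch.Size([2, 2])

# Ordinary Python control flow can depend on tensor values.
if y.sum() > 10:
    loss = (y ** 2).mean()
else:
    loss = y.mean()

loss.backward()      # gradients are available once backward() returns
print(x.grad)
```

Because every statement executes immediately, a debugger or a plain `print` call can be placed between any two lines of a model.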

The main advantages of using PyTorch include:

- Easy to use: PyTorch provides an intuitive API and thorough documentation, making it easy for newcomers to get started.

- Flexibility: Networks are written as ordinary Python code, so users can freely design and experiment with different network structures.

- Easy to customize: Users can write custom network layers and training loops in plain Python to implement more advanced deep learning functionality.
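As an illustration of the customization point, a custom layer is just a subclass of `nn.Module`. The sketch below uses a hypothetical layer name, `ScaledLinear`, invented for this example; it is not part of PyTorch.

```python
import torch
import torch.nn as nn


class ScaledLinear(nn.Module):
    """Hypothetical custom layer: a linear layer followed by a learnable scalar gain."""

    def __init__(self, in_features, out_features):
        super().__init__()
        self.linear = nn.Linear(in_features, out_features)
        # nn.Parameter registers the tensor so optimizers can update it.
        self.gain = nn.Parameter(torch.ones(1))

    def forward(self, x):
        return self.gain * self.linear(x)


layer = ScaledLinear(4, 3)
out = layer(torch.randn(2, 4))
print(out.shape)  # torch.Size([2, 3])
```

The layer can then be used inside a larger model like any built-in module.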

- Server Programming Basics

Using PyTorch for deep learning on a server requires basic server programming knowledge. These basics are not covered in detail here, but the following aspects deserve attention:

- Data storage: Servers usually read and write large amounts of data, so they need efficient storage, such as databases or file systems.

- Network communication: Servers usually need to handle various network requests, such as HTTP requests, WebSocket requests, etc.

- Multi-threading and multi-processing: To improve server throughput and stability, requests are usually handled by multiple threads or processes.

- Security: The server needs to protect its data and systems through measures such as firewalls, encryption, authentication, and authorization.
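The network communication and multi-threading points can be sketched with the Python standard library alone. The handler name `EchoHandler` and the helper `post_json` are hypothetical names invented for this example, and the server only echoes its input; a real service would add the storage and security measures listed above.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: echoes the JSON body of a POST request back to the client."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps({"received": payload}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging in this sketch


def post_json(host, port, obj):
    """Send obj as a JSON POST request and return the decoded JSON reply."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("POST", "/", body=json.dumps(obj),
                     headers={"Content-Type": "application/json"})
        return json.loads(conn.getresponse().read())
    finally:
        conn.close()


# Port 0 asks the OS for any free port; ThreadingHTTPServer
# handles each incoming request in its own thread.
server = ThreadingHTTPServer(("127.0.0.1", 0), EchoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
host, port = server.server_address[:2]

reply = post_json(host, port, {"pixels": [0, 1, 2]})
print(reply)  # {'received': {'pixels': [0, 1, 2]}}

server.shutdown()
server.server_close()
```

In a deployment, the body of `do_POST` is where a request would be decoded into a tensor and passed to a model.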

- Application of PyTorch in server programming

PyTorch is typically used on a server in the following ways:

- Model training: The server can use PyTorch to train models across multiple GPUs, which shortens training time.

- Model inference: The server can use PyTorch for model inference, providing real-time responses to client requests.

- Model management: The server can keep multiple PyTorch models available, allowing users to quickly switch between and deploy different models.

- Multi-language support: PyTorch models can be used from other programming languages, such as C++ and Java, so they can be integrated into different application scenarios.
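The inference and model management points can be combined in a small sketch. `ModelRegistry` is a hypothetical helper written for this example, not a PyTorch API, and the two registered models are untrained placeholders.

```python
import torch
import torch.nn as nn


class ModelRegistry:
    """Hypothetical helper: keeps several models in memory and serves one by name."""

    def __init__(self, device="cpu"):
        self.device = torch.device(device)
        self._models = {}

    def register(self, name, model):
        # eval() switches layers such as dropout and batch norm to inference behavior.
        self._models[name] = model.to(self.device).eval()

    def predict(self, name, batch):
        model = self._models[name]      # raises KeyError if the name is unknown
        with torch.no_grad():           # no gradient bookkeeping during inference
            return model(batch.to(self.device)).argmax(dim=1)


registry = ModelRegistry()
registry.register("small", nn.Linear(8, 3))
registry.register("large", nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 3)))

batch = torch.randn(4, 8)
small_pred = registry.predict("small", batch)
large_pred = registry.predict("large", batch)
print(small_pred, large_pred)  # four class indices (0, 1 or 2) from each model
```

Switching models for a request then amounts to passing a different name to `predict`.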

- Example: Train and deploy a model using PyTorch

The following is a simple example that shows how to train and deploy a model using PyTorch.

First, we need to download and prepare the training data set. Here we use the MNIST handwritten digit recognition data set. Then, we need to define a convolutional neural network for training and inference.
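For the data preparation step, a training loader could be built roughly as follows. To stay runnable without a download, this sketch uses random tensors shaped like MNIST images; that substitution, the batch size, and the sample count are all assumptions of the example.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in data with MNIST's shape: 1x28x28 grayscale images, labels 0-9.
# With torchvision installed, torchvision.datasets.MNIST(root, train=True,
# download=True, transform=torchvision.transforms.ToTensor()) would replace
# the TensorDataset below.
images = torch.rand(256, 1, 28, 28)
labels = torch.randint(0, 10, (256,))

train_loader = DataLoader(TensorDataset(images, labels), batch_size=64, shuffle=True)

data, target = next(iter(train_loader))
print(data.shape, target.shape)  # torch.Size([64, 1, 28, 28]) torch.Size([64])
```

With a loader like this in place, the network itself is defined as follows.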

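    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class Net(nn.Module):
        def __init__(self):
            super().__init__()
            # Two 5x5 convolutions (stride 1) followed by two fully connected layers.
            self.conv1 = nn.Conv2d(1, 20, 5, 1)
            self.conv2 = nn.Conv2d(20, 50, 5, 1)
            self.fc1 = nn.Linear(4*4*50, 500)
            self.fc2 = nn.Linear(500, 10)

        def forward(self, x):
            x = F.relu(self.conv1(x))       # 1x28x28 -> 20x24x24
            x = F.max_pool2d(x, 2, 2)       # -> 20x12x12
            x = F.relu(self.conv2(x))       # -> 50x8x8
            x = F.max_pool2d(x, 2, 2)       # -> 50x4x4
            x = x.view(-1, 4*4*50)          # flatten to (batch, 800)
            x = F.relu(self.fc1(x))
            x = self.fc2(x)
            return F.log_softmax(x, dim=1)  # log-probabilities over the 10 digits

Next, we define a training function for the convolutional neural network above. It uses the negative log-likelihood loss, which combined with the network's log_softmax output is equivalent to the cross-entropy loss, and it takes the optimizer (for example, stochastic gradient descent) as an argument.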

    def train(model, device, train_loader, optimizer, epoch):
        model.train()  # enable training-mode behavior such as dropout
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device), target.to(device)
            optimizer.zero_grad()              # clear gradients from the previous step
            output = model(data)
            loss = F.nll_loss(output, target)  # expects log-probabilities
            loss.backward()
            optimizer.step()

Finally, we define an inference function for model inference at deployment time.

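    def infer(model, device, data):
        model.eval()  # switch to inference-mode behavior
        with torch.no_grad():  # gradients are not needed for prediction
            output = model(data.to(device))
            pred = output.argmax(dim=1, keepdim=True)
        return pred.item()  # valid only when data holds a single image

Because it returns pred.item(), infer expects a batch containing exactly one image. Through the above steps, we can train and deploy a simple convolutional neural network model.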
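To show how these pieces might fit together on a server, here is a self-contained sketch of one training pass, saving the weights, and reloading them for inference. It makes several assumptions: `make_model` is a hypothetical stand-in for the Net class so the example stays short, the data is random rather than MNIST, and the learning rate, momentum, and file name are arbitrary.

```python
import os
import tempfile

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset


def make_model():
    # Hypothetical stand-in for Net: flattens a 1x28x28 image
    # and outputs 10 log-probabilities.
    return nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10), nn.LogSoftmax(dim=1))


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = make_model().to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# Random MNIST-shaped data, used only so the sketch runs without a download.
loader = DataLoader(
    TensorDataset(torch.rand(128, 1, 28, 28), torch.randint(0, 10, (128,))),
    batch_size=32,
)

# One epoch with the same steps as train(): forward, NLL loss, backward, update.
model.train()
for data, target in loader:
    data, target = data.to(device), target.to(device)
    optimizer.zero_grad()
    loss = F.nll_loss(model(data), target)
    loss.backward()
    optimizer.step()

# Save only the weights, then restore them into a fresh model,
# as a server process would do at startup.
path = os.path.join(tempfile.mkdtemp(), "model.pt")
torch.save(model.state_dict(), path)

served = make_model()
served.load_state_dict(torch.load(path, map_location="cpu"))
served.eval()

# Single-image request: the batch dimension is 1, so .item() on the argmax is valid.
with torch.no_grad():
    pred = served(torch.rand(1, 1, 28, 28)).argmax(dim=1).item()
print(pred)  # an integer class index between 0 and 9
```

Saving the `state_dict` rather than the whole model object keeps the checkpoint independent of how the training script was organized; the serving code only needs the same model definition.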

- Summary

This article has introduced how to use PyTorch for deep learning in Python server programming. As a flexible deep learning framework, PyTorch makes it possible to quickly build and train a variety of deep neural networks while remaining easy to use and customize. On a server, PyTorch can be used for model training, model inference, and model management, extending what server applications can do.

The above is the detailed content of Python Server Programming: Deep Learning with PyTorch. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Methods and steps for using BERT for sentiment analysis in Python

Jan 22, 2024 pm 04:24 PM

Methods and steps for using BERT for sentiment analysis in Python

Jan 22, 2024 pm 04:24 PM

BERT is a pre-trained deep learning language model proposed by Google in 2018. The full name is BidirectionalEncoderRepresentationsfromTransformers, which is based on the Transformer architecture and has the characteristics of bidirectional encoding. Compared with traditional one-way coding models, BERT can consider contextual information at the same time when processing text, so it performs well in natural language processing tasks. Its bidirectionality enables BERT to better understand the semantic relationships in sentences, thereby improving the expressive ability of the model. Through pre-training and fine-tuning methods, BERT can be used for various natural language processing tasks, such as sentiment analysis, naming

The perfect combination of PyCharm and PyTorch: detailed installation and configuration steps

Feb 21, 2024 pm 12:00 PM

The perfect combination of PyCharm and PyTorch: detailed installation and configuration steps

Feb 21, 2024 pm 12:00 PM

PyCharm is a powerful integrated development environment (IDE), and PyTorch is a popular open source framework in the field of deep learning. In the field of machine learning and deep learning, using PyCharm and PyTorch for development can greatly improve development efficiency and code quality. This article will introduce in detail how to install and configure PyTorch in PyCharm, and attach specific code examples to help readers better utilize the powerful functions of these two. Step 1: Install PyCharm and Python

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

Latent space embedding: explanation and demonstration

Jan 22, 2024 pm 05:30 PM

Latent space embedding: explanation and demonstration

Jan 22, 2024 pm 05:30 PM

Latent Space Embedding (LatentSpaceEmbedding) is the process of mapping high-dimensional data to low-dimensional space. In the field of machine learning and deep learning, latent space embedding is usually a neural network model that maps high-dimensional input data into a set of low-dimensional vector representations. This set of vectors is often called "latent vectors" or "latent encodings". The purpose of latent space embedding is to capture important features in the data and represent them into a more concise and understandable form. Through latent space embedding, we can perform operations such as visualizing, classifying, and clustering data in low-dimensional space to better understand and utilize the data. Latent space embedding has wide applications in many fields, such as image generation, feature extraction, dimensionality reduction, etc. Latent space embedding is the main

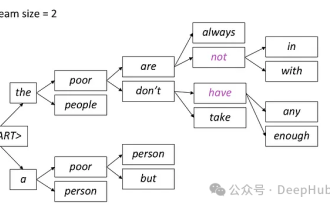

Introduction to five sampling methods in natural language generation tasks and Pytorch code implementation

Feb 20, 2024 am 08:50 AM

Introduction to five sampling methods in natural language generation tasks and Pytorch code implementation

Feb 20, 2024 am 08:50 AM

In natural language generation tasks, sampling method is a technique to obtain text output from a generative model. This article will discuss 5 common methods and implement them using PyTorch. 1. GreedyDecoding In greedy decoding, the generative model predicts the words of the output sequence based on the input sequence time step by time. At each time step, the model calculates the conditional probability distribution of each word, and then selects the word with the highest conditional probability as the output of the current time step. This word becomes the input to the next time step, and the generation process continues until some termination condition is met, such as a sequence of a specified length or a special end marker. The characteristic of GreedyDecoding is that each time the current conditional probability is the best

Implementing noise removal diffusion model using PyTorch

Jan 14, 2024 pm 10:33 PM

Implementing noise removal diffusion model using PyTorch

Jan 14, 2024 pm 10:33 PM

Before we understand the working principle of the Denoising Diffusion Probabilistic Model (DDPM) in detail, let us first understand some of the development of generative artificial intelligence, which is also one of the basic research of DDPM. VAEVAE uses an encoder, a probabilistic latent space, and a decoder. During training, the encoder predicts the mean and variance of each image and samples these values from a Gaussian distribution. The result of the sampling is passed to the decoder, which converts the input image into a form similar to the output image. KL divergence is used to calculate the loss. A significant advantage of VAE is its ability to generate diverse images. In the sampling stage, one can directly sample from the Gaussian distribution and generate new images through the decoder. GAN has made great progress in variational autoencoders (VAEs) in just one year.

Tutorial on installing PyCharm with PyTorch

Feb 24, 2024 am 10:09 AM

Tutorial on installing PyCharm with PyTorch

Feb 24, 2024 am 10:09 AM

As a powerful deep learning framework, PyTorch is widely used in various machine learning projects. As a powerful Python integrated development environment, PyCharm can also provide good support when implementing deep learning tasks. This article will introduce in detail how to install PyTorch in PyCharm and provide specific code examples to help readers quickly get started using PyTorch for deep learning tasks. Step 1: Install PyCharm First, we need to make sure we have

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

In today's wave of rapid technological changes, Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) are like bright stars, leading the new wave of information technology. These three words frequently appear in various cutting-edge discussions and practical applications, but for many explorers who are new to this field, their specific meanings and their internal connections may still be shrouded in mystery. So let's take a look at this picture first. It can be seen that there is a close correlation and progressive relationship between deep learning, machine learning and artificial intelligence. Deep learning is a specific field of machine learning, and machine learning