Create a simple web crawler using PHP

As the Internet has grown, information has become easier to reach, but its sheer volume makes it hard to find what we actually need. Retrieving the right information efficiently has become an important task, and web crawlers are widely used to automate it.

A web crawler is a program that automatically retrieves information from the Internet. It is commonly used for search engines, data mining, and product price tracking. A crawler visits a specified website or page, then parses the HTML or XML it receives to extract the required information.

This article shows how to create a simple web crawler in PHP. To follow along, you need basic knowledge of the PHP language and of web development concepts.

1. Get the HTML page

The first step of a web crawler is to fetch the HTML page, which can be done with PHP's built-in functions. For example, define a $url variable that holds the target URL, call the file_get_contents function on it, and store the returned HTML in an $html variable.
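A minimal sketch of this fetching step follows. The helper name fetchHtml, the timeout, and the user-agent string are assumptions made for illustration and do not come from the original article. Fetching a remote URL with file_get_contents also requires the allow_url_fopen setting to be enabled.

```php
<?php
// Hypothetical helper: returns the page body, or null when the request fails.
function fetchHtml(string $url, int $timeoutSeconds = 10): ?string
{
    // Options for the http/https stream wrappers (illustrative values).
    $context = stream_context_create([
        'http' => [
            'timeout'    => $timeoutSeconds,
            'user_agent' => 'SimpleCrawler/0.1',
        ],
    ]);

    // file_get_contents returns false on failure; @ silences the warning
    // so the caller can check for null instead.
    $html = @file_get_contents($url, false, $context);

    return $html === false ? null : $html;
}
```

With $url set to the target address, calling `$html = fetchHtml($url);` leaves the page source in $html, or null if the page could not be fetched.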

2. Parse the HTML page

After obtaining the HTML page, we need to extract the required information from it. An HTML page is made up of tags and their attributes, so PHP's built-in DOM classes can be used to parse it.

Before using the DOM functions, the HTML page must be loaded into a DOMDocument object: create an empty DOMDocument, then call its loadHTML method to load the fetched HTML into it.
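The loading step can be sketched as below. The helper name loadDocument and the sample markup are assumptions for illustration. The calls to libxml_use_internal_errors are an addition not mentioned in the article: real pages are often malformed, and without them loadHTML prints a warning for each problem it finds.

```php
<?php
// Hypothetical helper: parse an HTML string into a DOMDocument.
function loadDocument(string $html): DOMDocument
{
    $dom = new DOMDocument();

    // Collect libxml parse warnings internally instead of printing them,
    // then restore the previous setting.
    $previous = libxml_use_internal_errors(true);
    $dom->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    return $dom;
}

$dom = loadDocument('<html><body><p>Hello</p></body></html>');
echo $dom->getElementsByTagName('p')->item(0)->textContent, PHP_EOL; // prints "Hello"
```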

Next, we can retrieve tags from the HTML page through the DOMDocument object. The getElementsByTagName method returns every element with the given tag name: pass "a" to get all hyperlink tags, "img" to get all image tags, or "p" to get all paragraph tags.
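As an illustration of the tag lookups described above, the sketch below runs getElementsByTagName against a small inline page. The sample markup is an assumption; in a real crawler the string would be the fetched page.

```php
<?php
$html = '<html><body>
  <p>First paragraph with <a href="/about">a link</a>.</p>
  <p>Second paragraph.</p>
  <img src="/logo.png" alt="Logo">
</body></html>';

$dom = new DOMDocument();
$dom->loadHTML($html);

// Each call returns a DOMNodeList of the matching elements.
$links      = $dom->getElementsByTagName('a');   // all hyperlink tags
$images     = $dom->getElementsByTagName('img'); // all image tags
$paragraphs = $dom->getElementsByTagName('p');   // all paragraph tags

printf(
    "%d link(s), %d image(s), %d paragraph(s)\n",
    $links->length,
    $images->length,
    $paragraphs->length
); // prints "1 link(s), 1 image(s), 2 paragraph(s)"
```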

3. Parse tag attributes

Besides the tags themselves, we also need to read their attributes. For example, to get the href attribute of every hyperlink, loop over the hyperlink tags and call the getAttribute method on each one, storing the returned attribute value in an $href variable.
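A short sketch of reading the href attribute, assuming a made-up three-link page. Note that getAttribute returns an empty string when the attribute is missing, so the last anchor is reported separately.

```php
<?php
$dom = new DOMDocument();
$dom->loadHTML(
    '<html><body>'
    . '<a href="/a">A</a>'
    . '<a href="https://example.com/b">B</a>'
    . '<a name="top">no href</a>'
    . '</body></html>'
);

foreach ($dom->getElementsByTagName('a') as $link) {
    // Empty string means the tag has no href attribute.
    $href = $link->getAttribute('href');
    echo $href === '' ? '(no href)' : $href, PHP_EOL;
}
```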

4. Filter useless information

When parsing HTML pages, we often encounter content we do not need, such as advertisements and navigation bars. Filtering it out keeps it from interfering with the data we want.

Commonly used filtering methods include:

- Filtering based on tag name

For example, we can retrieve only the tags that carry text, such as paragraph tags, and ignore everything else.

- Filtering based on CSS selectors

CSS selectors make it easy to locate the required tags, for example all tags with the class name "list". Note that DOMDocument itself has no CSS selector method; the DOMXPath class can express the same selection as an XPath query.

- Filtering based on keywords

Keyword filtering removes unwanted content, for example every tag whose text contains the keyword "advertising". Use the strpos function to check whether the tag's text content contains the keyword, and if it does, call the removeChild method on its parent node to delete the tag.
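The three filtering methods can be sketched together as follows. The sample markup is an assumption, and the class filter uses a DOMXPath query as a stand-in for the CSS selector ".list"; this is one possible approach, not necessarily the one the original listing used.

```php
<?php
$html = '<html><body>
  <div class="nav">Home | About</div>
  <ul class="list"><li>Item one</li><li>Item two</li></ul>
  <p>Useful paragraph.</p>
  <p>Sponsored advertising block.</p>
</body></html>';

$dom = new DOMDocument();
$dom->loadHTML($html);

// 1. Filter by tag name: keep only the paragraph tags.
$paragraphs = $dom->getElementsByTagName('p');

// 2. Filter by class: XPath equivalent of the CSS selector ".list".
$xpath = new DOMXPath($dom);
$listNodes = $xpath->query(
    '//*[contains(concat(" ", normalize-space(@class), " "), " list ")]'
);

// 3. Filter by keyword: remove paragraphs that mention "advertising".
// Copy the node list to an array first, because removing nodes while
// iterating a live DOMNodeList can skip elements.
foreach (iterator_to_array($paragraphs) as $p) {
    if (strpos($p->textContent, 'advertising') !== false) {
        $p->parentNode->removeChild($p);
    }
}

echo $listNodes->length, PHP_EOL;                      // prints 1
echo $dom->getElementsByTagName('p')->length, PHP_EOL; // prints 1
```

The comparison `!== false` matters here: strpos returns 0 when the keyword is at the very start of the text, which a loose check would treat as "not found".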

5. Store data

Finally, we need to store the extracted data for later processing. In PHP, arrays or strings are commonly used for this.

For example, we can save all hyperlinks into an array by using the array_push function to append the href attribute of each hyperlink to a $links_arr array.
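A minimal sketch of collecting links into $links_arr, assuming a made-up page with one repeated link. The deduplication step at the end is an optional addition that the article does not mention.

```php
<?php
$dom = new DOMDocument();
$dom->loadHTML(
    '<html><body>'
    . '<a href="/a">A</a>'
    . '<a href="/b">B</a>'
    . '<a href="/a">A again</a>'
    . '</body></html>'
);

$links_arr = [];
foreach ($dom->getElementsByTagName('a') as $link) {
    // Append the href of each hyperlink to the array.
    array_push($links_arr, $link->getAttribute('href'));
}

// Optional: drop duplicates and re-index so each URL is kept once.
$links_arr = array_values(array_unique($links_arr));

print_r($links_arr);
```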

6. Summary

This article has shown how to create a simple web crawler in PHP. In practice, a crawler needs to be tuned to its use case, for example by adding a retry mechanism or routing requests through proxy IPs. I hope it helps readers understand how web crawlers work and implement their own.

The above is the detailed content of Create a simple web crawler using PHP. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

CakePHP Project Configuration

Sep 10, 2024 pm 05:25 PM

In this chapter, we will understand the Environment Variables, General Configuration, Database Configuration and Email Configuration in CakePHP.

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 Installation and Upgrade guide for Ubuntu and Debian

Dec 24, 2024 pm 04:42 PM

PHP 8.4 brings several new features, security improvements, and performance improvements with healthy amounts of feature deprecations and removals. This guide explains how to install PHP 8.4 or upgrade to PHP 8.4 on Ubuntu, Debian, or their derivati

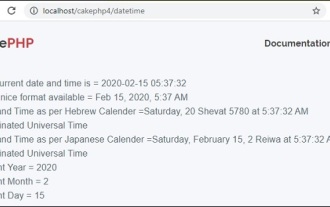

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

CakePHP Date and Time

Sep 10, 2024 pm 05:27 PM

To work with date and time in cakephp4, we are going to make use of the available FrozenTime class.

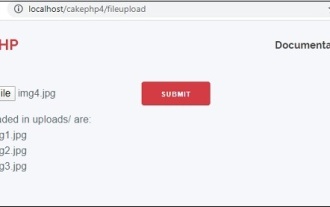

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

CakePHP File upload

Sep 10, 2024 pm 05:27 PM

To work on file upload we are going to use the form helper. Here, is an example for file upload.

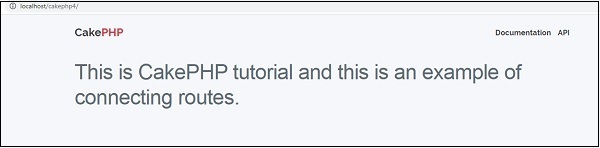

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

CakePHP Routing

Sep 10, 2024 pm 05:25 PM

In this chapter, we are going to learn the following topics related to routing ?

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

Discuss CakePHP

Sep 10, 2024 pm 05:28 PM

CakePHP is an open-source framework for PHP. It is intended to make developing, deploying and maintaining applications much easier. CakePHP is based on a MVC-like architecture that is both powerful and easy to grasp. Models, Views, and Controllers gu

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

How To Set Up Visual Studio Code (VS Code) for PHP Development

Dec 20, 2024 am 11:31 AM

Visual Studio Code, also known as VS Code, is a free source code editor — or integrated development environment (IDE) — available for all major operating systems. With a large collection of extensions for many programming languages, VS Code can be c

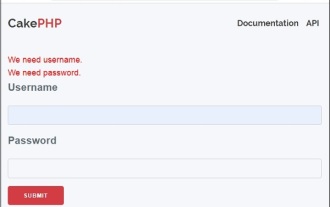

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

CakePHP Creating Validators

Sep 10, 2024 pm 05:26 PM

Validator can be created by adding the following two lines in the controller.